Introduction

Have you ever seen a dataset that consists almost entirely of null values? If so, you are not alone. Sparse datasets are one of the most frequent issues in machine learning. They can arise from several factors, such as incomplete surveys, sensor data with missing readings, or text with missing words.

Machine learning models trained on sparse datasets can produce results with relatively low accuracy. This is because many machine learning algorithms assume that all data is available. When values are missing, the algorithm may be unable to determine the correlations between the features correctly. A model generally becomes more accurate when it is trained on a large dataset without missing values. Therefore, we must handle sparse datasets with extra care and fill them with approximately correct values rather than random ones.

In this guide, I will cover the definition of sparse datasets, the reasons they are challenging, and techniques for dealing with them.

Learning Objectives

- Gain a comprehensive understanding of sparse datasets and their implications in data analysis.

- Explore various techniques for handling missing values in sparse datasets, including imputation and advanced approaches.

- Discover the significance of exploratory data analysis (EDA) in uncovering hidden insights within sparse datasets.

- Implement practical solutions for dealing with sparse datasets using Python, incorporating real-world datasets and code examples.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Introduction

- What are Sparse Datasets?

- Why Sparse Datasets are Challenging?

- Considerations with Sparse Datasets

- Preprocessing Techniques for Sparse Datasets

- Handling Imbalanced Classes in Sparse Datasets

- Choosing the Right Machine Learning Algorithms for Sparse Datasets

- Evaluating Model Performance on Sparse Datasets

- Conclusion

- Frequently Asked Questions (FAQs)

What are Sparse Datasets?

A dataset with many missing values is said to be a sparse dataset. No fixed threshold or percentage of missing values defines a dataset as sparse. However, a dataset in which a high percentage of values is missing (commonly more than 50%) can be considered relatively sparse. Such a significant proportion of missing values can pose challenges in data analysis and machine learning.

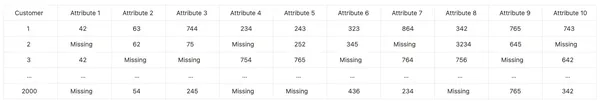

Example

Imagine that we have a dataset with data on consumer purchases from an online retailer. Let’s assume the dataset has 2000 rows (representing consumers) and ten columns (representing various attributes like the product category, purchase amount, and client demographics).

For the sake of this example, let’s say that 40% of the dataset entries are missing, suggesting that for each client, around 4 out of 10 attributes would have missing values. Customers might not have entered these values, or there might have been technical difficulties with data gathering.

Although there are no set criteria, the significant share of missing values (40%) allows us to treat this dataset as sparse. Such a large volume of missing data may impact the reliability and accuracy of analysis and modeling tasks.
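To make the example concrete, here is a minimal sketch that builds a synthetic table of the same shape and measures how much of it is missing. The data, the column names (`attr_0` to `attr_9`), and the random seed are illustrative assumptions, not a real retail dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical data: 2000 customers x 10 attributes, as in the example above.
rng = np.random.default_rng(seed=0)
values = rng.normal(size=(2000, 10))

# Blank out roughly 40% of the entries at random to mimic missing data.
mask = rng.random(values.shape) < 0.40
values[mask] = np.nan
purchases = pd.DataFrame(values, columns=[f"attr_{i}" for i in range(10)])

# Share of missing cells overall, and the percentage missing per column.
overall_missing = purchases.isnull().to_numpy().mean()
missing_per_column = purchases.isnull().mean() * 100

print(f"Overall missing: {overall_missing:.1%}")
print(missing_per_column.round(1))
```

Checking the per-column percentages first is usually worthwhile, because a dataset that is 40% missing overall may still have some columns that are nearly complete and others that are nearly empty.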

Why Sparse Datasets are Challenging?

Because they contain many missing values, sparse datasets pose several difficulties for data analysis and modeling. The following are some factors that make working with sparse datasets challenging:

- Lack of Insights: Because a lot of data is missing, a sparse dataset carries less information, so meaningful insights that might be helpful for modeling are lost.

- Biased Results: Missing data can bias a model’s results, because the model ends up relying on the specific feature categories that happen to be well populated. Biased outcomes are a risk for any decision based on the model.

- Massive Impact on the Model’s Accuracy: Sparse datasets can reduce the accuracy of a machine learning model. Many algorithms cannot be trained at all until the missing values are handled, and those that can may learn wrong patterns from incomplete data, which leads to poor results.

Considerations with Sparse Datasets

When working with sparse datasets, there are several considerations to remember. These factors can help guide your approach to handling missing values and improving the accuracy of your models. Let’s explore some key considerations:

- Data loss, such as that caused by a hard disk failure or a corrupted file, can result in sparse datasets. The resulting missing or erroneous data can make machine learning models harder to train.

- Data inconsistency, such as when different data sources use different formats or definitions for the same data, can also result in sparse datasets. This makes merging data from multiple sources difficult and can lead to incorrect or incomplete results.

- Overfitting arises when a machine learning model learns the training data too well and is unable to generalize to new data. Sparse datasets can make overfitting harder to prevent.

- Computational cost: sparse datasets can be more computationally expensive to process than dense datasets, which makes training machine learning models on large sparse datasets challenging.

- Interpretability: it may be harder to understand how a machine learning model works when it is trained on a sparse dataset than on a dense one.

Preprocessing Techniques for Sparse Datasets

Preprocessing is essential for adequately managing sparse datasets. You may boost the performance of machine learning models, enhance the data quality, and handle missing values by using the appropriate preprocessing approaches. Let’s examine some essential methods for preparing sparse datasets:

Data Cleaning and Handling Missing Values

Cleaning the data and handling missing values is the first stage in preprocessing a sparse dataset. Missing values can happen for several reasons, such as incorrect data entry or missing records. Before beginning any other preprocessing procedures, locating and dealing with missing values is crucial.

There are various methods for dealing with missing values. Simply deleting rows or columns that contain missing values is a typical strategy. However, this can result in data loss and lessen the model’s accuracy. Replacing missing values with estimated values is known as imputation. Replacing each missing value with the column’s mean, median, or mode is among the simplest imputation techniques.
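As a minimal sketch of mean, median, and mode imputation, the snippet below fills a small hand-made table with pandas. The column names and values are invented for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical customer table with one missing value per column.
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 35.0],
    "income": [3000.0, 4000.0, np.nan, 100000.0],
    "city": ["Pune", np.nan, "Pune", "Delhi"],
})

imputed = df.copy()
# Mean imputation: reasonable for roughly symmetric numeric columns.
imputed["age"] = imputed["age"].fillna(imputed["age"].mean())
# Median imputation: less sensitive to outliers such as the 100000 income.
imputed["income"] = imputed["income"].fillna(imputed["income"].median())
# Mode imputation: the usual simple choice for categorical columns.
imputed["city"] = imputed["city"].fillna(imputed["city"].mode()[0])

print(imputed)
```

The median is used for `income` because a single extreme value would pull the mean far away from what a typical customer earns.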

Scaling and Normalization of Features

After the data has been cleaned and missing values have been handled, the features should be scaled. Scaling brings all features onto a comparable range, which helps many machine learning algorithms perform better. A common choice is standardization, which transforms each feature to have a mean of 0 and a standard deviation of 1; min-max normalization instead rescales each feature to a fixed range such as 0 to 1.
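A minimal sketch of two common scaling operations, using scikit-learn’s `StandardScaler` (zero mean, unit standard deviation) and `MinMaxScaler` (rescaling to the range 0 to 1). The small array is an assumption chosen so that the two columns start on very different scales.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Two hypothetical features on very different scales.
X = np.array([[1.0, 100.0],
              [2.0, 200.0],
              [3.0, 300.0],
              [4.0, 400.0]])

# Standardization: subtract the column mean, divide by the column standard deviation.
standardized = StandardScaler().fit_transform(X)

# Min-max scaling: map each column's minimum to 0 and its maximum to 1.
min_maxed = MinMaxScaler().fit_transform(X)

print("Column means after standardization:", standardized.mean(axis=0))
print("Column std devs after standardization:", standardized.std(axis=0))
print("Column ranges after min-max scaling:", min_maxed.min(axis=0), min_maxed.max(axis=0))
```

In practice the scaler should be fitted on the training data only and then applied to the test data, so that information from the test set does not leak into training.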

Feature Engineering and Dimensionality Reduction

Feature engineering is the process of building new features from existing ones in order to improve the performance of machine learning algorithms. Dimensionality reduction is the process of lowering the number of features in a dataset, which can both improve model performance and make the data easier to visualize.

Numerous dimensionality reduction and feature engineering methods are available. Typical strategies include:

- Feature selection: choosing a subset of the features that are most relevant to the current task.

- Feature extraction: constructing new features out of existing ones.

- Dimensionality reduction: reducing the number of features in a dataset.
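The strategies above can be sketched with scikit-learn. This example assumes synthetic data with one uninformative constant column; `VarianceThreshold` stands in for feature selection and `PCA` for feature extraction and dimensionality reduction. Other techniques would serve equally well.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.feature_selection import VarianceThreshold

# Hypothetical data: 100 samples, 5 features, the last of which is constant.
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(100, 5))
X[:, 4] = 1.0  # a constant column carries no information

# Feature selection: drop features whose variance is zero.
selected = VarianceThreshold(threshold=0.0).fit_transform(X)

# Feature extraction / dimensionality reduction: project onto 2 principal components.
pca = PCA(n_components=2)
reduced = pca.fit_transform(selected)

print("Original shape:", X.shape)
print("After feature selection:", selected.shape)
print("After PCA:", reduced.shape)
print("Variance explained by each component:", pca.explained_variance_ratio_)
```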

The following function combines the cleaning, imputation, and scaling steps described above:

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer
from sklearn.preprocessing import StandardScaler


def preprocess_sparse_dataset(data):
    # Drop columns in which more than 70% of the values are missing
    missing_percentage = (data.isnull().sum() / len(data)) * 100
    threshold = 70
    columns_to_drop = missing_percentage[missing_percentage > threshold].index
    data = data.drop(columns_to_drop, axis=1)

    # KNN imputation and scaling only apply to numerical columns
    numerical_columns = data.select_dtypes(include=np.number).columns.tolist()
    missing_columns = [col for col in numerical_columns if data[col].isnull().any()]

    # Imputing missing values using KNN imputation
    if missing_columns:
        imputer = KNNImputer(n_neighbors=5)  # Set the number of neighbors
        data[missing_columns] = imputer.fit_transform(data[missing_columns])

    # Standardizing numerical features (mean 0, standard deviation 1)
    scaler = StandardScaler()
    data[numerical_columns] = scaler.fit_transform(data[numerical_columns])

    return data
```

Handling Imbalanced Classes in Sparse Datasets

Sparse datasets frequently suffer from imbalanced class distributions, in which one or more classes are disproportionately overrepresented. Because of the resulting bias toward the majority class, machine learning algorithms may struggle to predict the minority class. We can use several methods to address this problem. Let’s investigate the following:

Understanding Class Imbalance

Before delving into management strategies, it is essential to understand the effects of imbalanced classes. In unbalanced datasets, the model’s performance may exhibit a high bias in favor of the majority class, leading to subpar prediction accuracy for the minority class. This is especially problematic when the minority class is important or represents a meaningful outcome.

Techniques for Addressing Class Imbalance

- Data Resampling: To establish a balanced training set, data resampling entails either oversampling the minority class, undersampling the majority class, or combining both. Techniques for oversampling include random oversampling, synthetic minority over-sampling (SMOTE), and adaptive synthetic sampling (ADASYN). Tomek Links, NearMiss, and Random Undersampling are examples of undersampling methods. Techniques for resampling are designed to increase minority-class representation or lessen majority-class dominance.

- Class Weighting: Many machine learning algorithms can assign different weights to classes to counter class imbalance. During model training, this gives the minority class more weight and the majority class less. It enables the model to prioritize the minority class and adjust the decision boundary accordingly.

- Cost-Sensitive Learning: Cost-sensitive learning assigns different misclassification costs to the classes during model training. Making errors on the minority class more costly pushes the model to predict that class more accurately. This strategy requires a good understanding of the relevant cost matrix.

- Ensemble Methods: Ensemble methods combine multiple classifiers to increase prediction accuracy. Using strategies like bagging, boosting, and stacking, it is possible to build an ensemble of models, each trained on a distinct subset of the data. Ensembles can improve the model’s capacity to identify patterns in both the majority and minority classes.
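Of the techniques above, class weighting is the easiest to try because it needs no resampling. The sketch below assumes a synthetic 90/10 binary dataset and uses scikit-learn’s `class_weight="balanced"` option, which weights each class by `n_samples / (n_classes * class_count)`.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.utils.class_weight import compute_class_weight

# Hypothetical imbalanced data: 90 majority samples (class 0), 10 minority (class 1).
rng = np.random.default_rng(seed=0)
X = np.vstack([
    rng.normal(loc=0.0, scale=1.0, size=(90, 2)),
    rng.normal(loc=2.0, scale=1.0, size=(10, 2)),
])
y = np.array([0] * 90 + [1] * 10)

# "balanced" weights: 100 / (2 * 90) for class 0 and 100 / (2 * 10) for class 1.
weights = compute_class_weight(class_weight="balanced", classes=np.array([0, 1]), y=y)
print("Class weights:", dict(zip([0, 1], weights)))

# The same weighting can be applied directly when training a classifier.
model = LogisticRegression(class_weight="balanced").fit(X, y)
print("Predicted minority samples:", int(model.predict(X).sum()))
```

With these weights, each minority sample counts nine times as much as a majority sample in the loss, which is the cost-sensitive idea in its simplest form.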

```python
from imblearn.over_sampling import SMOTE
from imblearn.under_sampling import RandomUnderSampler


def handle_imbalanced_classes(data, target='MonthlyIncome'):
    # `target` must be a categorical class label; SMOTE also requires
    # numerical features without missing values
    X = data.drop(target, axis=1)
    y = data[target]

    # Performing over-sampling using SMOTE
    oversampler = SMOTE(random_state=42)
    X_resampled, y_resampled = oversampler.fit_resample(X, y)

    # Performing under-sampling using RandomUnderSampler
    # (with default settings the classes are already balanced after SMOTE,
    # so this step only has an effect if sampling_strategy is customized)
    undersampler = RandomUnderSampler(random_state=42)
    X_resampled, y_resampled = undersampler.fit_resample(X_resampled, y_resampled)

    return X_resampled, y_resampled
```

Choosing the Right Machine Learning Algorithms for Sparse Datasets

Choosing suitable machine learning algorithms is essential for producing accurate and trustworthy results when working with sparse datasets. Due to their unique properties, some algorithms are better suited to handle sparse data. In this section, we’ll look at algorithms that work well with sparse datasets and discuss factors to consider when choosing an approach.

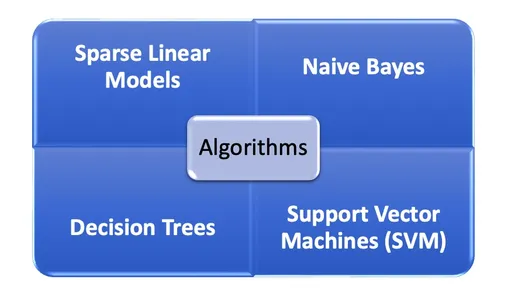

Algorithms Suitable for Sparse Datasets

- Naive Bayes: Naive Bayes classifiers are widely known to perform well with sparse data. They model sparse features efficiently by assuming that features are independent.

- Decision Trees: Algorithms based on decision trees, such as Random Forests and Gradient Boosting, can effectively handle sparse data. Decision trees can capture non-linear relationships in the data, and some implementations handle missing values natively.

- Support Vector Machines (SVM): SVMs can effectively handle sparse data, especially when paired with a suitable kernel function. They can handle high-dimensional feature spaces and are good at capturing complex relationships.

- Sparse Linear Models: Algorithms like Lasso Regression and Elastic Net Regression are well suited to sparse data. By penalizing the coefficients, they perform feature selection and yield a sparse model.
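To illustrate how a sparse linear model selects features, the sketch below fits scikit-learn’s `Lasso` to synthetic data in which only the first three of twenty features influence the target. The data, the coefficients, and the choice of `alpha=0.1` are assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Hypothetical data: 20 features, but only the first 3 affect the target.
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(200, 20))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 2] + rng.normal(scale=0.1, size=200)

# The L1 penalty (strength alpha) shrinks uninformative coefficients to exactly zero.
lasso = Lasso(alpha=0.1).fit(X, y)
n_nonzero = int(np.sum(lasso.coef_ != 0))

print("Non-zero coefficients:", n_nonzero, "out of", X.shape[1])
print("First five coefficients:", lasso.coef_[:5].round(2))
```

A larger `alpha` removes more features at the cost of shrinking the remaining coefficients further, so it is normally tuned with cross-validation.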

Considerations for Algorithm Selection

- Algorithm Efficiency: Sparse datasets may contain numerous features and missing values. Selecting algorithms that can handle high-dimensional data efficiently is crucial.

- Model Interpretability: Some algorithms, such as decision trees and linear models, produce results that are easy to interpret, which helps determine how features in sparse datasets affect the predictions.

- Algorithm Robustness: Noise and outliers may be present in sparse datasets. It’s crucial to pick algorithms that are robust to noise and outliers.

- Scalability: Consider the algorithm’s ability to handle big datasets with many features. With high-dimensional data, some algorithms might not scale effectively.

- Domain Knowledge: Using domain knowledge can help choose algorithms compatible with the problem’s particulars and the data.

```python
from sklearn.linear_model import LogisticRegression


def train_model(X, y):
    # Training a sparse linear model (e.g., Logistic Regression) on the resampled data
    model = LogisticRegression(solver='saga', penalty='elasticnet', l1_ratio=0.8, max_iter=1000)
    model.fit(X, y)
    return model
```

Evaluating Model Performance on Sparse Datasets

Evaluating the performance of machine learning models is crucial for determining their efficacy and making sound decisions. Because of the unique characteristics of sparse data, assessing model performance on such datasets requires particular care. This section looks at cross-validation, performance metrics, and handling class imbalance in performance evaluation.

Cross-Validation and Performance Metrics

Cross-validation is a popular method for assessing model performance, particularly on sparse datasets. It helps detect overfitting and estimates how the model will perform on unseen data. Considerations for cross-validation on sparse datasets are listed below:

- Stratified Sampling: Make sure each fold keeps the same class distribution as the original dataset during cross-validation. This is crucial to avoid skewed evaluation results when dealing with imbalanced classes.

- K-Fold Cross-Validation: Partition the dataset into K subsets, or folds. Train the model on K-1 folds and use the remaining fold for evaluation. Repeat this process K times so that each fold serves as the validation set exactly once, then average the performance metrics over the K iterations.

- Repeated Cross-Validation: Repeat the cross-validation procedure several times using different randomly generated partitions of the data. This produces more reliable and stable performance estimates.
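The three ideas above can be combined in one step. The sketch below assumes a synthetic imbalanced dataset from `make_classification` and uses scikit-learn’s `RepeatedStratifiedKFold`, which runs stratified K-fold several times with different random partitions. The balanced-accuracy metric is one reasonable choice here, not the only one.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Hypothetical imbalanced dataset: roughly 90% class 0 and 10% class 1.
X, y = make_classification(
    n_samples=500, n_features=10, weights=[0.9, 0.1], random_state=0
)

# 5 stratified folds, repeated 3 times with different shuffles: 15 scores in total.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)
scores = cross_val_score(
    LogisticRegression(max_iter=1000), X, y, cv=cv, scoring="balanced_accuracy"
)

print("Number of scores:", len(scores))
print(f"Balanced accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Reporting the standard deviation alongside the mean shows how much the estimate depends on the particular split, which matters most when the minority class is small.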

Handling Class Imbalance in Performance Evaluation

Class imbalance can severely distort performance evaluation, particularly when traditional metrics like accuracy are used. Consider the following strategies to lessen its effects:

- Confusion Matrix: By evaluating the true positives, true negatives, false positives, and false negatives in the confusion matrix, one can gain a deeper understanding of the model’s performance. It aids in comprehending how well the model may predict each class.

- Precision-Recall Curve: Plotting the precision-recall curve shows how precision and recall trade off across different classification thresholds. This curve is especially useful for imbalanced datasets.

- Class-Specific Evaluation: Pay attention to the performance indicators for the minority class rather than assessing the model’s performance across all categories.
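A small worked example shows why accuracy alone can mislead on imbalanced data. The labels and predictions below are made up by hand: eight majority samples (class 0) and two minority samples (class 1).

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    precision_score,
    recall_score,
)

# Hypothetical ground truth and predictions.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]

# For binary labels, ravel() yields the counts in the order tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = accuracy_score(y_true, y_pred)              # (tp + tn) / total = 8 / 10
minority_precision = precision_score(y_true, y_pred)   # tp / (tp + fp) = 1 / 2
minority_recall = recall_score(y_true, y_pred)         # tp / (tp + fn) = 1 / 2

print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
print(f"Accuracy: {accuracy:.2f}")
print(f"Minority precision: {minority_precision:.2f}, recall: {minority_recall:.2f}")
```

Accuracy is 0.80, yet the model finds only one of the two minority samples and is right only half of the time when it predicts the minority class.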

```python
import matplotlib.pyplot as plt
from sklearn.metrics import confusion_matrix, classification_report, precision_recall_curve
from sklearn.model_selection import cross_val_score, StratifiedKFold


def evaluate_model(model, X, y):
    # Performing cross-validation using stratified K-fold
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
    scores = cross_val_score(model, X, y, cv=cv, scoring='accuracy')
    print("Average Cross-Validation Accuracy:", scores.mean())

    # The steps below assume `model` has already been fitted and that
    # X, y are ideally held-out data rather than the training set
    # Generating confusion matrix
    y_pred = model.predict(X)
    cm = confusion_matrix(y, y_pred)
    print("Confusion Matrix:")
    print(cm)

    # Generating classification report
    report = classification_report(y, y_pred)
    print("Classification Report:")
    print(report)

    # Generating precision-recall curve (binary classification only;
    # column 1 holds the predicted probability of the positive class)
    precision, recall, _ = precision_recall_curve(y, model.predict_proba(X)[:, 1])
    plt.figure()
    plt.plot(recall, precision)
    plt.xlabel('Recall')
    plt.ylabel('Precision')
    plt.title('Precision-Recall Curve')
    plt.show()
```

Conclusion

Due to missing values and their effect on model performance, dealing with sparse datasets in data analysis and machine learning can be difficult. However, sparse datasets can be handled successfully with the appropriate methods and approaches. We can overcome the difficulties presented by sparse datasets and use their potential for valuable insights and precise forecasts by continuously experimenting with and modifying methodologies.

Key Takeaways

- Sparse datasets contain a high percentage of missing values, which affects the accuracy and reliability of machine learning models.

- Preprocessing methods such as data cleansing, addressing missing values, and feature engineering are essential for managing sparse datasets.

- Modeling sparse datasets well requires selecting appropriate algorithms, such as Naive Bayes, decision trees, support vector machines, and sparse linear models.

- Future directions include creating specialized algorithms, researching deep learning techniques, incorporating domain expertise, and using ensemble methods for better performance on sparse datasets.

Frequently Asked Questions (FAQs)

Q: How can missing values in sparse datasets be handled?

A: There are several ways to handle missing values in sparse datasets, including mean imputation, median imputation, forward or backward filling, or more sophisticated imputation algorithms like k-nearest neighbors (KNN) imputation or matrix factorization.

Q: Which machine learning algorithms work well with sparse datasets?

A: Naive Bayes, decision trees, support vector machines (SVM), sparse linear models (like Lasso Regression), and neural networks are some techniques that operate well with sparse datasets.

Q: How should model performance be evaluated on sparse datasets with imbalanced classes?

A: Evaluating model performance on sparse datasets with imbalanced classes calls for techniques like stratified sampling in cross-validation, appropriate performance metrics like precision, recall, and F1 score, and an examination of the confusion matrix. Additionally, class-specific evaluation can show how well the model serves underrepresented classes.

Q: What are some future directions for working with sparse datasets?

A: Creating specialized algorithms for sparse datasets, research into deep learning approaches, incorporating domain expertise into modeling, and using ensemble methods to boost performance are some future directions.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.