Introduction

Generative AI has evolved dramatically, encompassing many techniques to create novel and diverse data. While models like GANs and VAEs have taken center stage, a less-explored but intriguing avenue is Neural Differential Equations (NDEs). In this article, we explore NDEs in Generative AI, survey their main applications, and walk through a basic Python implementation.

This article was published as a part of the Data Science Blogathon.

The Power of Neural Differential Equations

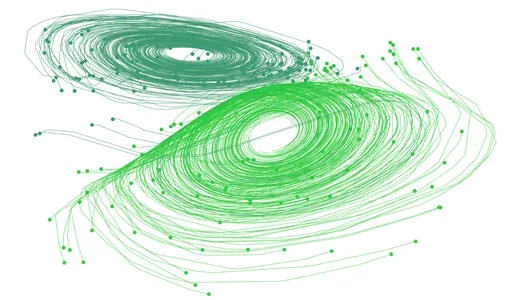

Neural Differential Equations (NDEs) combine differential equations and neural networks. A neural network defines the derivative of a hidden state, and a numerical ODE solver integrates that derivative over time, so the model's output is a continuous, smooth trajectory rather than a single snapshot. Many generative models produce a fixed-size sample or a sequence at discrete, evenly spaced steps. That is a poor fit for continuous data such as time series, fluid dynamics, and realistic motion. NDEs address this with a continuous generative process: the generated data evolve smoothly over time and can be evaluated at any time point.
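
As a minimal sketch of the core idea, the snippet below treats a small neural network f_θ as the right-hand side of dz/dt = f_θ(z, t). It integrates that equation with a hand-written fourth-order Runge-Kutta (RK4) solver, so the state can be read off at any requested time. The network weights are random and untrained. The names `f_theta`, `rk4_step`, and `integrate_ode` are illustrative and do not come from any library. In practice, the weights would be trained by backpropagating through the solver or with the adjoint method.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical two-layer MLP f_theta: R^2 -> R^2 with random, untrained weights
W1, b1 = rng.normal(scale=0.5, size=(2, 16)), np.zeros(16)
W2, b2 = rng.normal(scale=0.5, size=(16, 2)), np.zeros(2)


def f_theta(z, t):
    """Neural vector field: returns dz/dt at state z (t is unused, so the dynamics are autonomous)."""
    return np.tanh(z @ W1 + b1) @ W2 + b2


def rk4_step(f, z, t, h):
    """Advance z by one classical fourth-order Runge-Kutta step of size h."""
    k1 = f(z, t)
    k2 = f(z + 0.5 * h * k1, t + 0.5 * h)
    k3 = f(z + 0.5 * h * k2, t + 0.5 * h)
    k4 = f(z + h * k3, t + h)
    return z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def integrate_ode(f, z0, ts, substeps=10):
    """Solve dz/dt = f(z, t) from ts[0] and return the state at every time in ts."""
    zs = [np.asarray(z0, dtype=float)]
    for t0, t1 in zip(ts[:-1], ts[1:]):
        h = (t1 - t0) / substeps
        z = zs[-1]
        for k in range(substeps):
            z = rk4_step(f, z, t0 + k * h, h)
        zs.append(z)
    return np.stack(zs)


# Query the continuous trajectory at arbitrary times; here, 5 evenly spaced points in [0, 2]
ts = np.linspace(0.0, 2.0, 5)
trajectory = integrate_ode(f_theta, np.array([1.0, 0.0]), ts)
print(trajectory.shape)  # (5, 2)
```

Because the solver only needs the list of query times, the same trained f_θ can be sampled as densely or as sparsely as an application requires.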

Applications of Neural Differential Equations

Time-series Data

Time-series data is sequential by nature and pervasive across domains, from financial markets to physiological signals. Neural Differential Equations (NDEs) offer a distinctive approach to time-series generation and to modeling temporal dependencies. By combining differential equations with the flexibility of neural networks, NDEs let AI systems synthesize data that evolves continuously in time.

In time-series generation, NDEs model the transitions between observations as continuous dynamics. They can capture hidden dynamics, adapt to changing patterns, and extrapolate into the future. Because the model is defined in continuous time, NDE-based models can handle irregularly spaced observations and noisy inputs, and they can be queried for a prediction at any future time. Long-horizon accuracy still depends on how well the learned dynamics match the real system. These properties make NDEs useful for projecting trends, anticipating anomalies, and supporting decisions across domains.
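
To illustrate why irregular sampling is natural for continuous-time models, the sketch below advances a state between unevenly spaced timestamps by integrating the dynamics across each gap, however long it is. To keep the example checkable, it makes two assumptions. First, the dynamics are a known damped oscillator standing in for a trained neural network. Second, the observation times are made up. The solver is a plain RK4 loop rather than any particular library's.

```python
import numpy as np


def vector_field(z):
    """Assumed stand-in for a trained network: damped oscillator dx/dt = v, dv/dt = -x - 0.1 v."""
    x, v = z
    return np.array([v, -x - 0.1 * v])


def advance(z, t0, t1, max_step=0.01):
    """Integrate from t0 to t1 with RK4, splitting the gap into steps no larger than max_step."""
    n = max(1, int(np.ceil((t1 - t0) / max_step)))
    h = (t1 - t0) / n
    z = np.asarray(z, dtype=float)
    for _ in range(n):
        k1 = vector_field(z)
        k2 = vector_field(z + 0.5 * h * k1)
        k3 = vector_field(z + 0.5 * h * k2)
        k4 = vector_field(z + h * k3)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return z


# Hypothetical, irregularly spaced observation times (e.g. sporadic sensor readings)
obs_times = np.array([0.0, 0.37, 0.4, 1.9, 5.25, 5.3])

# Step the state from one timestamp to the next, whatever the gap between them
states = [np.array([1.0, 0.0])]  # initial position 1, velocity 0
for t0, t1 in zip(obs_times[:-1], obs_times[1:]):
    states.append(advance(states[-1], t0, t1))
states = np.stack(states)

print(states[:, 0])  # position at each observation time
```

A standard recurrent network that assumes evenly spaced steps would typically need these gaps binned or imputed. Here, a gap of 0.03 and a gap of 3.35 go through the same call and differ only in the number of internal solver steps.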

NDE-powered time-series generation has uses across many fields. Financial analysts can use it to forecast market trends, medical practitioners to monitor patients, and climate scientists to model environmental changes. The continuous and adaptive nature of NDEs makes them a natural fit for data that evolve over time.

Physical Simulation

In physical simulation, Neural Differential Equations (NDEs) can learn to reproduce complex natural phenomena. Such simulations underpin scientific discovery, engineering innovation, and creative expression across disciplines. By combining differential equations with neural networks, NDEs can emulate complex physical processes in virtual worlds both accurately and efficiently.

NDE-driven physical simulations learn the dynamics of systems governed by physical laws, such as fluid flow or mechanical motion. Traditional numerical methods often demand extensive computational resources and manual parameter tuning. NDEs take a different approach: they learn the dynamics directly from data, so the governing equations need not be formulated explicitly. A trained NDE can then serve as a faster surrogate model, accelerating simulation workflows, speeding up experimentation, and widening the range of scenarios that can practically be simulated.
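
The idea of learning dynamics without writing down the equation can be illustrated in miniature. The sketch below generates synthetic measurements from a harmonic oscillator (x'' = -4x) and estimates derivatives by central differences. It then fits a vector field dz/dt ≈ A z by least squares. This is a deliberate simplification: a linear model stands in for the neural network, and derivative matching replaces training through an ODE solver. A real NDE would use an MLP for the vector field and fit it to trajectories by gradient descent.

```python
import numpy as np

# Synthetic "measurements" from a system the fit never sees explicitly: x'' = -4x (omega = 2)
dt = 0.001
t = np.arange(0.0, 5.0, dt)
x = np.cos(2.0 * t)
v = -2.0 * np.sin(2.0 * t)
Z = np.stack([x, v], axis=1)  # observed states, shape (N, 2)

# Estimate dz/dt with central differences (drops the first and last sample)
dZ = (Z[2:] - Z[:-2]) / (2.0 * dt)
Z_mid = Z[1:-1]

# Fit a linear vector field: each row satisfies dz/dt = z @ M, so A = M.T in dz/dt = A z
M, *_ = np.linalg.lstsq(Z_mid, dZ, rcond=None)
A = M.T
print(np.round(A, 3))
```

The recovered matrix is close to [[0, 1], [-4, 0]], the oscillator's true dynamics, even though the fitting code never uses that equation. Once fitted, the learned field can be handed to any ODE solver to simulate new initial conditions.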

Industries such as aerospace, automotive, and entertainment can use NDE-based simulations to optimize designs, test hypotheses, and create realistic virtual environments. These simulations also let engineers and researchers explore scenarios that were previously too expensive to compute. In essence, NDEs bridge the virtual and the physical, capturing the behavior of real physical systems within the digital realm.

Motion Synthesis

Motion synthesis, a critical component of animation, robotics, and gaming, is another area where Neural Differential Equations (NDEs) show both artistic and practical promise. Generating natural, fluid motion sequences has traditionally been challenging because of the complexity of the underlying dynamics. NDEs offer a way to give AI-driven characters and agents lifelike motion that matches human intuition.

NDEs bring continuity to motion synthesis. They link poses and trajectories smoothly, avoiding the jarring transitions common in discrete approaches. By modeling the underlying mechanics of motion, they can give characters a sense of grace, weight, and responsiveness. From simulating the flutter of a butterfly’s wings to choreographing the dance of a humanoid robot, NDE-driven motion synthesis blends creativity and physics.

The potential applications of NDE-driven motion synthesis are broad. In film and gaming, characters can move more authentically, eliciting emotional engagement. In robotics, machines can navigate environments with greater precision. Rehabilitation devices can adapt to users’ movements to support recovery. With NDEs, motion synthesis goes beyond mere animation and models movement as a continuous process.

Implementing a Neural Differential Equation Model

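To illustrate the concept of NDEs, let’s implement a basic continuous-time VAE in Python with TensorFlow. The encoder maps each input to a Gaussian distribution over an initial latent state. A sample from that distribution is then integrated through time by an ODE solver (explicit Euler), so the model outputs a whole latent trajectory. For simplicity, the ODE here is a fixed harmonic oscillator; in a full neural ODE, the function on the right-hand side would itself be a trainable neural network.

(Note: Install TensorFlow and NumPy before running the code below.)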

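```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, Model


def ode_solver(z0, t, func):
    """
    Solves the ODE dz/dt = func(z, t) with the explicit Euler method.

    z0: initial states, shape (batch, latent_dim); t: 1-D NumPy array of time points.
    Returns the trajectory with shape (batch, len(t), latent_dim).
    """
    z = [z0]
    for i in range(1, len(t)):
        h = float(t[i] - t[i - 1])  # step size (also works for non-uniform grids)
        z.append(z[-1] + h * func(z[-1], t[i - 1]))
    return tf.stack(z, axis=1)


class Sampling(layers.Layer):
    """Reparameterization trick; also adds the VAE's KL-divergence term to the model loss."""

    def call(self, inputs):
        z_mean, z_log_var = inputs
        kl = -0.5 * tf.reduce_mean(
            tf.reduce_sum(1.0 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var), axis=-1)
        )
        self.add_loss(kl)
        epsilon = tf.random.normal(tf.shape(z_mean))
        return z_mean + tf.exp(0.5 * z_log_var) * epsilon


def continuous_vae(input_dim, latent_dim, t, ode_func):
    input_layer = layers.Input(shape=(input_dim,))
    encoded = layers.Dense(128, activation="relu")(input_layer)
    z_mean = layers.Dense(latent_dim)(encoded)
    z_log_var = layers.Dense(latent_dim)(encoded)
    z0 = Sampling()([z_mean, z_log_var])  # sampled initial latent state

    # Evolve z0 over the fixed time grid t (a NumPy array captured here, not a model input)
    ode_output = layers.Lambda(lambda z: ode_solver(z, t, ode_func))(z0)
    return Model(inputs=input_layer, outputs=ode_output)


# Define ODE function (example: simple harmonic oscillator, dx/dt = v, dv/dt = -x)
def harmonic_oscillator(z, t):
    return tf.stack([z[:, 1], -z[:, 0]], axis=-1)


# Define time points (explicit Euler slowly inflates the oscillator's amplitude;
# use more points or a higher-order solver when accuracy matters)
t = np.linspace(0, 10, num=100)

# Instantiate and compile the Continuous-Time VAE model
latent_dim = 2  # the harmonic oscillator expects a 2-D state (position, velocity)
ct_vae_model = continuous_vae(
    input_dim=latent_dim, latent_dim=latent_dim, t=t, ode_func=harmonic_oscillator
)
ct_vae_model.compile(optimizer="adam", loss="mse")  # MSE on trajectories + KL from Sampling

# Train the model with your data:
# inputs of shape (n, input_dim), target trajectories of shape (n, len(t), latent_dim)
# ...
```

Conclusion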

In the ever-evolving landscape of Generative AI, NDEs offer a compelling path to continuous, evolving data generation. By integrating the principles of differential equations and neural networks, NDEs open doors to applications spanning time-series prediction, physical simulation, and beyond. This relatively unexplored territory invites researchers and practitioners to explore the synergy between mathematics and deep learning, with the potential to change how we approach data synthesis and to add a new dimension of creativity to artificial intelligence. Neural Differential Equations let us harness continuous dynamics and build AI systems that model data as it flows through time and space.

The key takeaway points from this article are:

- NDEs blend differential equations and neural networks to create continuous data generation models.

- NDEs excel in tasks requiring smooth and evolving data, such as time-series predictions, physical simulations, and motion synthesis.

- Continuous-time VAEs, such as latent ODE models, use an NDE to evolve the latent state. This brings differential equations into the generative process and enables the creation of data that evolves over time.

- Implementing NDEs involves combining differential equation solvers with neural network architectures, showcasing a powerful synergy between mathematics and deep learning.

- Exploring NDEs unlocks novel possibilities for Generative AI. They let us generate data that flows smoothly and continuously, which benefits fields that demand dynamic, evolving data synthesis.

Frequently Asked Questions

A. NDEs fuse principles of differential equations and neural networks. This results in a dynamic framework that can generate continuous and smooth data.

A. Yes, they are. A differential equation can give the exact location of a robotic arm in space at any given time step. However, if the location has to be calculated at every step, the amount of computation can increase manyfold.

A. There are two common approaches. The first approximates the solution of an ordinary or partial differential equation (ODE or PDE) directly with a neural network, training the network so that its output satisfies the equation and its initial or boundary conditions. NDEs take the second route: a neural network defines the right-hand side of the differential equation, and a numerical solver integrates it to produce the solution. Both approaches combine the principles of differential equations with the flexibility and scalability of neural networks.
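
Schematically, the two roles a neural network can play are shown below. In the first line, the network f_θ defines the dynamics of a state z and a solver produces the trajectory (the NDE view). In the second line, the network u_θ is itself the candidate solution of a given equation du/dt = F(u, t) with initial condition u(t_0) = u_0, and it is trained by penalizing the equation's residual at sample times t_i. These are generic formulations for illustration, not a specific library's method.

```latex
\begin{aligned}
\text{NDE:}\quad & \frac{dz}{dt} = f_\theta\big(z(t), t\big), \qquad
  z(t_1) = z(t_0) + \int_{t_0}^{t_1} f_\theta\big(z(t), t\big)\,dt \\
\text{Solution approximation:}\quad & \min_\theta \; \sum_{i} \Big\| \frac{du_\theta}{dt}(t_i) - F\big(u_\theta(t_i), t_i\big) \Big\|^2
  + \big\| u_\theta(t_0) - u_0 \big\|^2
\end{aligned}
```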

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.