Introduction

As AI adoption grows, large language models (LLMs) are in huge demand across the technology industry. LLMs generate human-like text, and they can be used to build natural language processing (NLP) applications ranging from chatbots and text summarizers to translation apps and virtual assistants.

Google released its next-generation model, Palm 2. The model excels at advanced reasoning tasks, including science and mathematics, as well as language translation. It was trained on text in over 100 natural languages and more than 20 programming languages.

Because it was trained on many programming languages, Palm 2 can also translate code from one programming language to another, for example from Python to R or from JavaScript to TypeScript. It can also generate idioms and phrases and break a complex task down into simpler subtasks, which makes it a notable improvement over previous large language models.
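As a minimal sketch of the code-translation use case, the snippet below wraps a code snippet in a translation instruction and sends it to the text-bison-001 model using the generate_text() call covered later in this article. The helper names build_translation_prompt and translate_code, and the prompt wording, are our own assumptions rather than part of the Palm API; translate_code needs the google-generativeai library and a valid API key.

```python
from typing import Optional


def build_translation_prompt(code: str, source_lang: str, target_lang: str) -> str:
    """Wrap a code snippet in an instruction asking the model to translate it.

    Hypothetical helper: the wording of the instruction is our own choice.
    """
    return (
        f"Translate the following {source_lang} code to {target_lang}. "
        "Return only the translated code.\n\n"
        f"{code}"
    )


def translate_code(code: str, source_lang: str, target_lang: str, api_key: str) -> Optional[str]:
    """Send the translation prompt to the text model and return its output (or None)."""
    # Imported lazily so the prompt helper above works without the client library installed.
    import google.generativeai as palm

    palm.configure(api_key=api_key)
    completion = palm.generate_text(
        model="models/text-bison-001",
        prompt=build_translation_prompt(code, source_lang, target_lang),
        temperature=0.0,  # low temperature: we want a faithful translation, not creativity
        max_output_tokens=800,
    )
    return completion.result
```

Only build_translation_prompt runs offline; translate_code makes a network call, and its output will vary from run to run.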

Learning Objectives

- Get an introduction to Google’s Palm API

- Learn how to access the Palm API by generating an API key

- Learn how to generate simple text with the text model using Python

- Learn how to create a simple chatbot using Python

- Finally, learn how to use LangChain with the Palm API

This article was published as a part of the Data Science Blogathon.


What is Palm API?

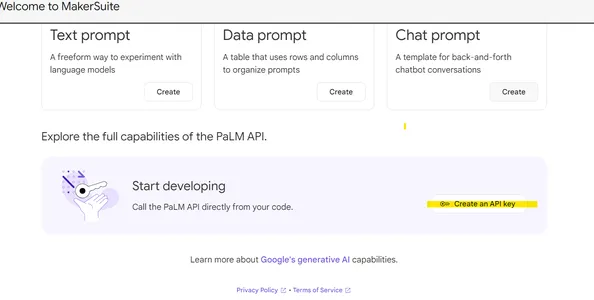

The Palm API gives you programmatic access to Google’s generative AI models so you can build AI-powered applications. If you only want to experiment with the Palm 2 model interactively in the browser, you can use MakerSuite, a browser-based IDE. To integrate the model into your own applications, for example to build AI-driven applications on your company’s data, use the Palm API.

There are three prompt interfaces, and you can get started with any of them:

- Text Prompts: Use the model “text-bison-001” to generate simple text. With text prompts you can generate text, generate code, edit text, retrieve information, extract data, and more.

- Data Prompts: These let you construct prompts in a tabular format.

- Chat Prompts: Use these to build conversations. The chat service uses the model “chat-bison-001”.
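Data prompts describe a task as a table of example inputs and outputs. As a rough sketch of that idea, a small two-column table can be flattened into a few-shot text prompt that a text model completes. The helper build_data_prompt and its “Column: value” layout are our own assumptions, not the format MakerSuite uses internally.

```python
from typing import List, Tuple


def build_data_prompt(columns: Tuple[str, str], rows: List[Tuple[str, str]], query: str) -> str:
    """Flatten a two-column table of examples into a few-shot text prompt.

    The final line leaves the output column empty so the model fills it in.
    """
    input_col, output_col = columns
    lines = []
    for value_in, value_out in rows:
        lines.append(f"{input_col}: {value_in}")
        lines.append(f"{output_col}: {value_out}")
        lines.append("")  # blank line between examples
    # The row we want the model to complete
    lines.append(f"{input_col}: {query}")
    lines.append(f"{output_col}:")
    return "\n".join(lines)
```

The resulting string can be passed as the prompt argument to palm.generate_text() with the text-bison-001 model.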

How to Access Palm API?

Navigate to https://developers.generativeai.google/ and join the MakerSuite waitlist. You should be given access within about 24 hours.

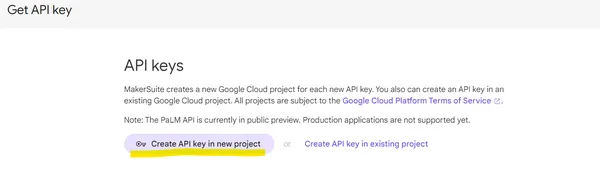

Generate an API key:

- You need your own API key to use the API.

- The API key lets your application connect to the Palm API and access its services.

- Once your account is registered, you will be able to generate a key.

- Next, generate your API key.

Save the API key, as we will use it in the following steps.

Setting Up the Environment

To use the API with Python, install the client library with:

```bash
pip install google-generativeai
```

Next, we configure it using the API key that we generated earlier.

```python
import google.generativeai as palm

# Replace with the API key you generated earlier
API_KEY = "YOUR_API_KEY"

palm.configure(api_key=API_KEY)
```
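Rather than pasting the key into your source code, you can read it from an environment variable so it stays out of version control. A minimal sketch, assuming a hypothetical variable named PALM_API_KEY (the name is our choice, not something the library requires):

```python
import os


def load_api_key(env_var: str = "PALM_API_KEY") -> str:
    """Read the API key from an environment variable instead of hardcoding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an empty key to the API
        raise RuntimeError(f"Set the {env_var} environment variable to your Palm API key.")
    return key
```

With the variable set, you would pass the result to palm.configure(api_key=load_api_key()).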

To list the available models, run the following code:

```python
# Print the name of every model available to your API key
models = list(palm.list_models())

for model in models:
    print(model.name)
```

Output:

```
models/chat-bison-001
models/text-bison-001
models/embedding-gecko-001
```

How to Generate Text?

We use the model “text-bison-001” to generate text. The generate_text() function sends a GenerateTextRequest to the model; its two main parameters are model and prompt. We pass “models/text-bison-001” as the model, and the prompt contains the input string.

Explanation:

- In the example below, we set the model_id variable to the model name and the prompt variable to the input text.

- We then pass model_id as the model argument and prompt as the prompt argument to the generate_text() method.

- The temperature parameter controls how random the response is. Values closer to 1 make the output more varied and creative, while lower values such as 0.2 make it more focused and predictable. The example below uses 0.99 to get a more creative cover letter.

- Finally, the max_output_tokens parameter sets the maximum number of tokens the model’s output can contain. A token is roughly four characters. If you don’t specify it, a default value of 64 is used.
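The max_output_tokens setting is easier to choose with the rule of thumb that a token is about four characters. A minimal sketch of that heuristic; estimate_tokens is a hypothetical helper and only approximates the model’s real tokenizer:

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count using the ~4 characters per token rule of thumb.

    This is only a heuristic; the model's actual tokenizer may count differently.
    """
    if not text:
        return 0
    # Round up so a partial token still counts as one
    return math.ceil(len(text) / chars_per_token)
```

Under this heuristic, max_output_tokens=800 leaves room for roughly 3,200 characters of output.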

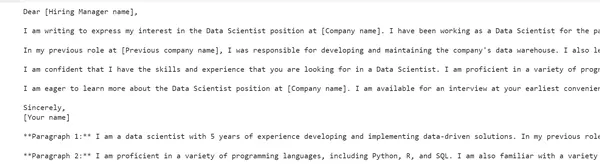

Example 1

```python
model_id = "models/text-bison-001"

prompt = '''Write a cover letter for a data science job application.
Summarize it to two paragraphs of 50 words each.'''

completion = palm.generate_text(
    model=model_id,
    prompt=prompt,
    temperature=0.99,       # high temperature for a more creative letter
    max_output_tokens=800,  # upper bound on the length of the reply
)

print(completion.result)
```

Output:

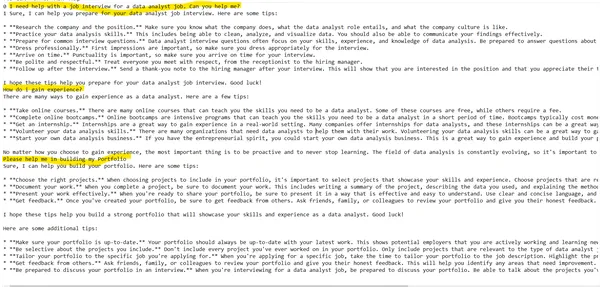
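completion.result holds the text of the first candidate, but it can be None when no candidate comes back, for example when the safety filters block the prompt or the output; completion.filters then describes what was filtered. A minimal defensive sketch; the helper name completion_text and the fallback message are hypothetical:

```python
from typing import Any


def completion_text(completion: Any, fallback: str = "[no text returned]") -> str:
    """Return the generated text, or a fallback message if the result is empty.

    completion is expected to expose .result and, optionally, .filters,
    like the object returned by palm.generate_text().
    """
    if completion.result is None:
        filters = getattr(completion, "filters", None)
        # Include the filter details when available to explain the empty result
        return f"{fallback} filters={filters}" if filters else fallback
    return completion.result
```

In Example 1 you would then call print(completion_text(completion)) instead of printing completion.result directly.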

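Next, we build a simple chatbot with the chat model “chat-bison-001”. The chat() function takes the opening message, a temperature, a context that sets the bot’s persona, and a list of example (input, output) pairs that show the model how to respond. We then define a while loop that reads your input and sends it with response.reply(); response.last holds the model’s latest reply, which we print.

```python
model_id = "models/chat-bison-001"

prompt = 'I need help with a job interview for a data analyst job. Can you help me?'

# Example (input, output) pairs that show the model the expected style of reply
examples = [
    ('Hello', 'Hi there. How can I be of assistance?'),
    ('I want to get a high-paying job', 'I can work harder'),
]

response = palm.chat(
    model=model_id,
    messages=prompt,
    temperature=0.2,
    context="Speak like a CEO",
    examples=examples,
)

# Print the conversation so far: the opening message and the model's first reply
for message in response.messages:
    print(message['author'], message['content'])

# Keep chatting until the user types "quit"
while True:
    s = input()
    if s.strip().lower() == "quit":
        break
    response = response.reply(s)
    print(response.last)
```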
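response.messages holds the whole conversation, one dict per turn with the 'author' and 'content' keys that the loop above prints. If you want to log or save a session, a small helper can join those turns into a single string; format_transcript is a hypothetical name, not part of the library:

```python
from typing import Dict, List


def format_transcript(messages: List[Dict[str, str]]) -> str:
    """Turn chat messages (dicts with 'author' and 'content') into readable text.

    Each turn becomes one "author: content" line.
    """
    return "\n".join(f"{m['author']}: {m['content']}" for m in messages)
```

For example, print(format_transcript(response.messages)) after the loop ends prints the full session.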

Using Palm API with LangChain

LangChain is an open-source framework for connecting large language models to your applications. To use the Palm API with LangChain, we import the GooglePalm LLM wrapper from langchain.llms, along with GooglePalmEmbeddings from langchain.embeddings. LangChain’s embedding classes provide a standard interface for various text embedding models, such as those from OpenAI and Hugging Face.

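We pass the prompts as a list, as shown in the example below, and call llm.generate() with that list. It returns an LLMResult whose generations attribute holds one list of candidates per prompt.

```python
from langchain.embeddings import GooglePalmEmbeddings  # embedding wrapper (not used in this example)
from langchain.llms import GooglePalm

# Low temperature for focused, factual answers
llm = GooglePalm(google_api_key=API_KEY, temperature=0.2)

prompts = ["How to Calculate the area of a triangle?", "How many sides are there for a polygon?"]

# generate() is the public method; it returns an LLMResult
llm_result = llm.generate(prompts)

res = llm_result.generations
print(res[0][0].text)  # first candidate for prompt 1
print(res[1][0].text)  # first candidate for prompt 2
```

Output: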

Prompt 1

1. **Find the base and height of the triangle.** The base is the length of the side of the triangle that is parallel to the ground, and the height is the length of the line segment that is perpendicular to the base and intersects the opposite vertex.

2. **Multiply the base and height and divide by 2.** The formula for the area of a triangle is A = 1/2 * b * h.

For example, if a triangle has a base of 5 cm and a height of 4 cm, its area would be 1/2 * 5 * 4 = 10 cm².

Prompt 2

3

Conclusion

In this article, we introduced Google’s Palm 2 model and how it improves on previous models. We then learned how to use the Palm API with Python, developed simple applications that generate text and hold chat conversations, and finally covered how to use the Palm API through the LangChain framework.

Key Takeaways

- The Palm API allows users to develop applications using large language models.

- The Palm API provides multiple text-generation services, such as a text service to generate text and a chat service to generate chat conversations.

- google-generativeai is the Python client library for the Palm API and can be easily installed using pip.

Frequently Asked Questions

A. To quickly get started with the Palm API in Python, install the client library with pip: pip install google-generativeai.

A. Yes, you can access Google’s large language models and develop applications using the Palm API.

A. Yes, Google’s Palm API and MakerSuite are available for public preview.

A. Google’s Palm 2 model was trained on over 20 programming languages and can generate code in various programming languages.

A. The Palm API offers both text and chat services, providing multiple text generation capabilities.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.