Introduction

Welcome to the world of Transformers, the deep learning architecture that has reshaped Natural Language Processing (NLP) since its debut in 2017. Built around self-attention mechanisms, Transformers changed how machines process language, in tasks ranging from translating text to analyzing sentiment. In this article, we’ll uncover the core concepts behind Transformers: attention mechanisms, the encoder-decoder architecture, multi-head attention, and more. Python code snippets accompany each concept, so you can gain a hands-on understanding of how Transformers are implemented.

Learning Objectives

- Understand transformers and their significance in natural language processing.

- Learn the attention mechanism, its variants, and how it enables transformers to capture contextual information effectively.

- Learn the fundamental components of the transformer model, including the encoder-decoder architecture, positional encoding, multi-head attention, and feed-forward networks.

- Implement transformer components using Python code snippets, allowing for practical experimentation and understanding.

- Explore and understand advanced concepts such as BERT and GPT with their applications in various NLP tasks.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Understanding Attention Mechanism

- How Self-attention Works?

- Basics of Transformer Model

- Detailed Explanation of Transformer Components

- Transformer Model Architecture

- Training and Evaluation of Model

- Implementation of Training and Evaluation

- Advanced Topics and Applications

- Additional Resources

- Frequently Asked Questions

Understanding Attention Mechanism

The attention mechanism is a fascinating concept in neural networks, especially for tasks like NLP. It’s like giving the model a spotlight, allowing it to focus on certain parts of the input sequence while ignoring others, much like how we humans pay attention to specific words or phrases when understanding a sentence.

Now, let’s dive deeper into a particular type of attention mechanism called self-attention, also known as intra-attention. Imagine you’re reading a sentence, and your brain automatically highlights the important words or phrases to comprehend the meaning. That’s essentially what self-attention does in neural networks. It enables each word in the sequence to “pay attention” to other words, including itself, to understand the context better.

How Self-attention Works?

Here’s how self-attention works with a simple example:

Consider the sentence: “The cat sat on the mat.”

Embedding

First, the model embeds each word in the input sequence into a high-dimensional vector representation. This embedding process allows the model to capture semantic similarities between words.

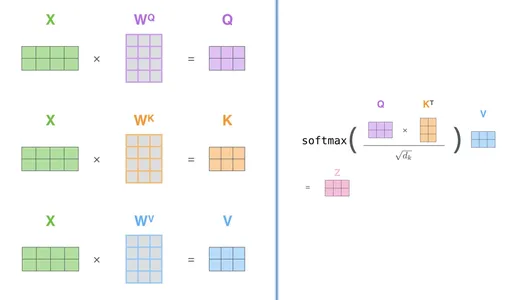

Query, Key, and Value Vectors

Next, the model computes three vectors for each word in the sequence: the Query vector, the Key vector, and the Value vector. Each is obtained by multiplying the word’s embedding by a weight matrix, and the model learns these weight matrices during training. Each vector serves a distinct purpose. The Query vector represents what the word is looking for in the rest of the sequence. The Key vector represents what the word offers to other words, i.e., what their queries are matched against. The Value vector represents the information that the word contributes to the output.

Attention Scores

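Once the model computes the Query, Key, and Value vectors for each word, it calculates attention scores for every pair of words in the sequence. This is typically achieved by taking the dot product of one word’s Query vector with another word’s Key vector, which measures how relevant the second word is to the first. The scores are usually divided by the square root of the Key dimension to keep them in a numerically stable range.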

SoftMax Normalization

The attention scores are then normalized using the softmax function to obtain attention weights. These weights represent how much attention each word should pay to other words in the sequence. Words with higher attention weights are deemed more crucial for the task being performed.

Weighted Sum

Finally, the weighted sum of the Value vectors is computed using the attention weights. This produces the output of the self-attention mechanism for each word in the sequence, capturing the contextual information from other words.

Here’s a simple illustration of how attention scores are calculated:

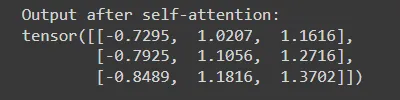

Now, let’s see how this works in code:

# Install PyTorch
!pip install torch==2.2.1+cu121

# Import libraries
import torch
import torch.nn.functional as F

# Example input sequence: 3 tokens, each a 3-dimensional embedding
input_sequence = torch.tensor([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]])

# Generate random weights for Key, Query, and Value matrices
random_weights_key = torch.randn(input_sequence.size(-1), input_sequence.size(-1))
random_weights_query = torch.randn(input_sequence.size(-1), input_sequence.size(-1))
random_weights_value = torch.randn(input_sequence.size(-1), input_sequence.size(-1))

# Compute Key, Query, and Value matrices
key = torch.matmul(input_sequence, random_weights_key)
query = torch.matmul(input_sequence, random_weights_query)
value = torch.matmul(input_sequence, random_weights_value)

# Compute attention scores, scaled by the square root of the vector dimension
attention_scores = torch.matmul(query, key.T) / torch.sqrt(torch.tensor(query.size(-1), dtype=torch.float32))

# Apply softmax to obtain attention weights (each row sums to 1)
attention_weights = F.softmax(attention_scores, dim=-1)

# Compute weighted sum of Value vectors
output = torch.matmul(attention_weights, value)
print("Output after self-attention:")
print(output)

Basics of Transformer Model

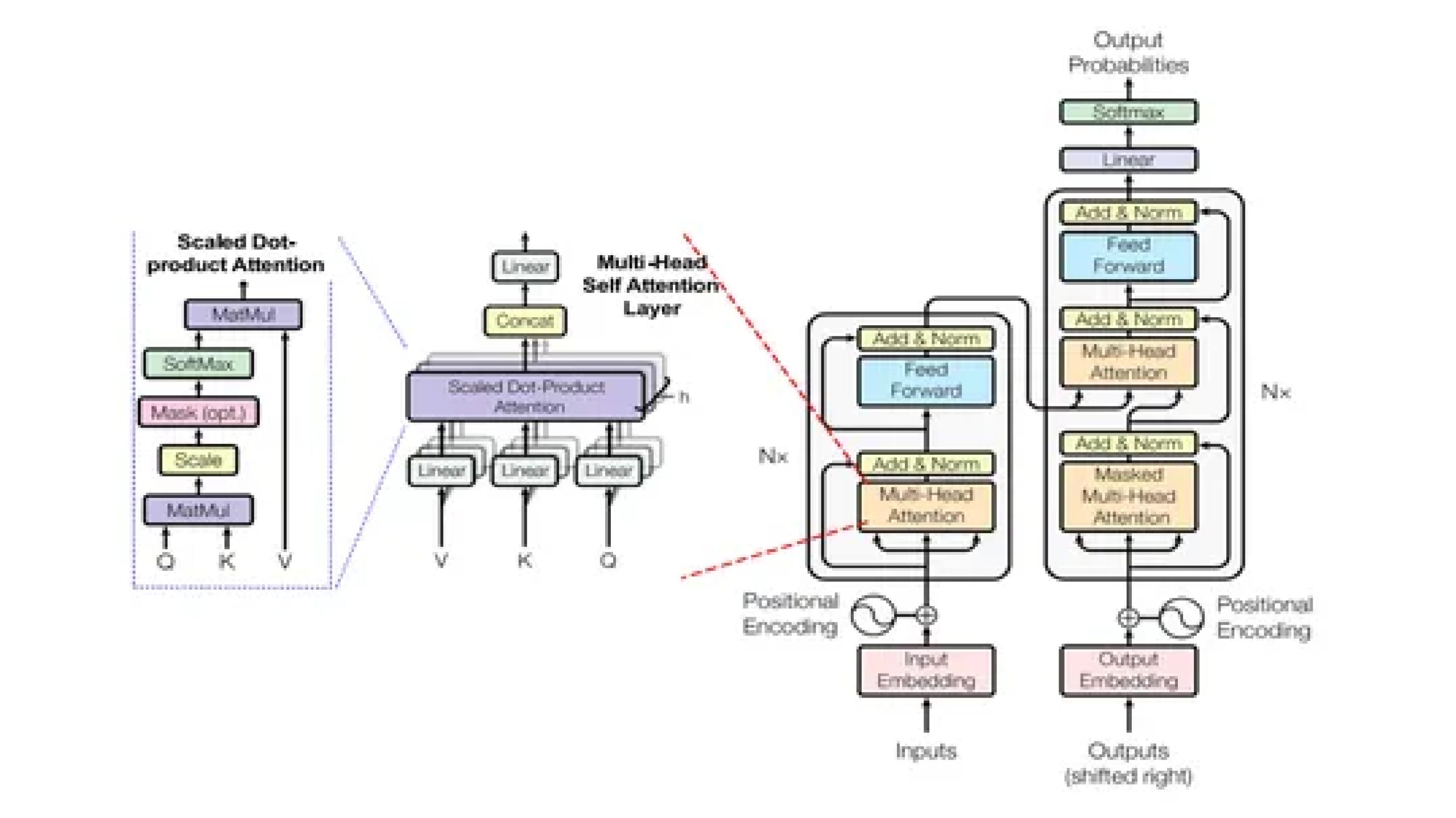

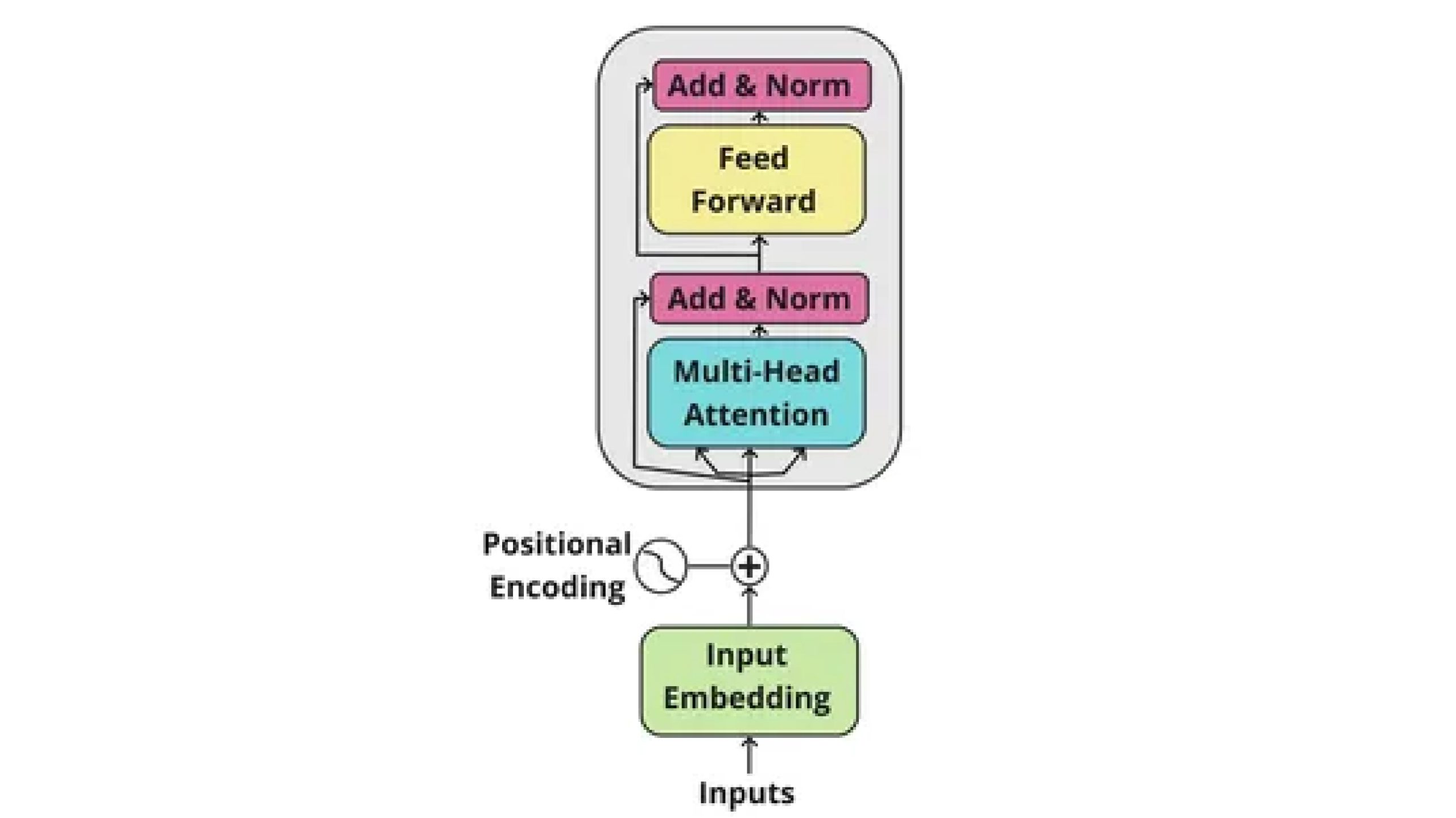

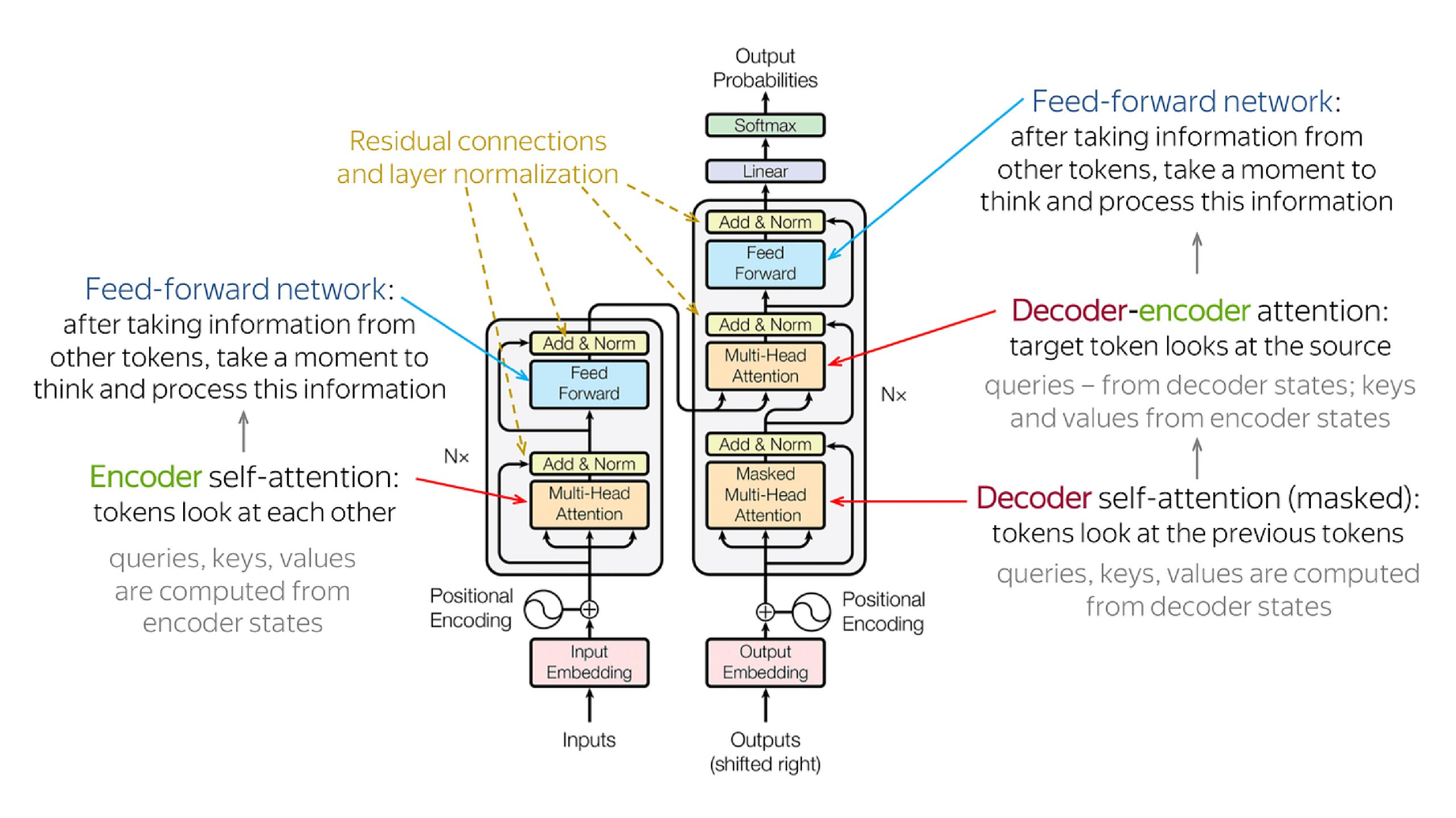

Before we dive into the intricate workings of the Transformer model, let’s take a moment to appreciate its groundbreaking architecture. As we’ve discussed earlier, the Transformer model has reshaped the landscape of natural language processing (NLP) by introducing a novel approach that revolves around self-attention mechanisms. In the following sections, we’ll unravel the core components of the Transformer model, shedding light on its encoder-decoder architecture, positional encoding, multi-head attention, and feed-forward networks.

Encoder-Decoder Architecture

At the heart of the Transformer lies its encoder-decoder architecture: two components tasked with processing input sequences and generating output sequences, respectively. Both are built from stacks of identical layers. Each encoder layer contains a self-attention mechanism and a feed-forward network, while each decoder layer contains those two sub-layers plus an additional attention sub-layer over the encoder’s output. This architecture not only facilitates comprehensive understanding of input sequences but also enables the generation of contextually rich output sequences.

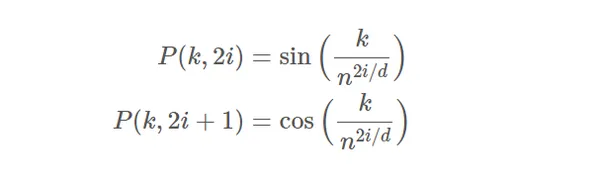

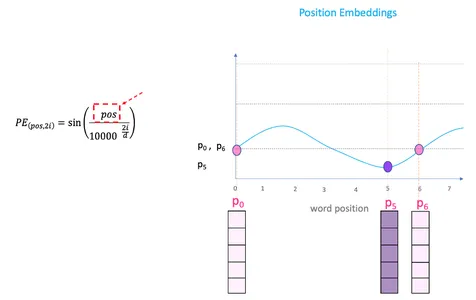

Positional Encoding

Despite its prowess, the Transformer model lacks an inherent understanding of the sequential order of elements—a shortcoming addressed by positional encoding. By imbuing input embeddings with positional information, positional encoding enables the model to discern the relative positions of elements within a sequence. This nuanced understanding is vital for capturing the temporal dynamics of language and facilitating accurate comprehension.

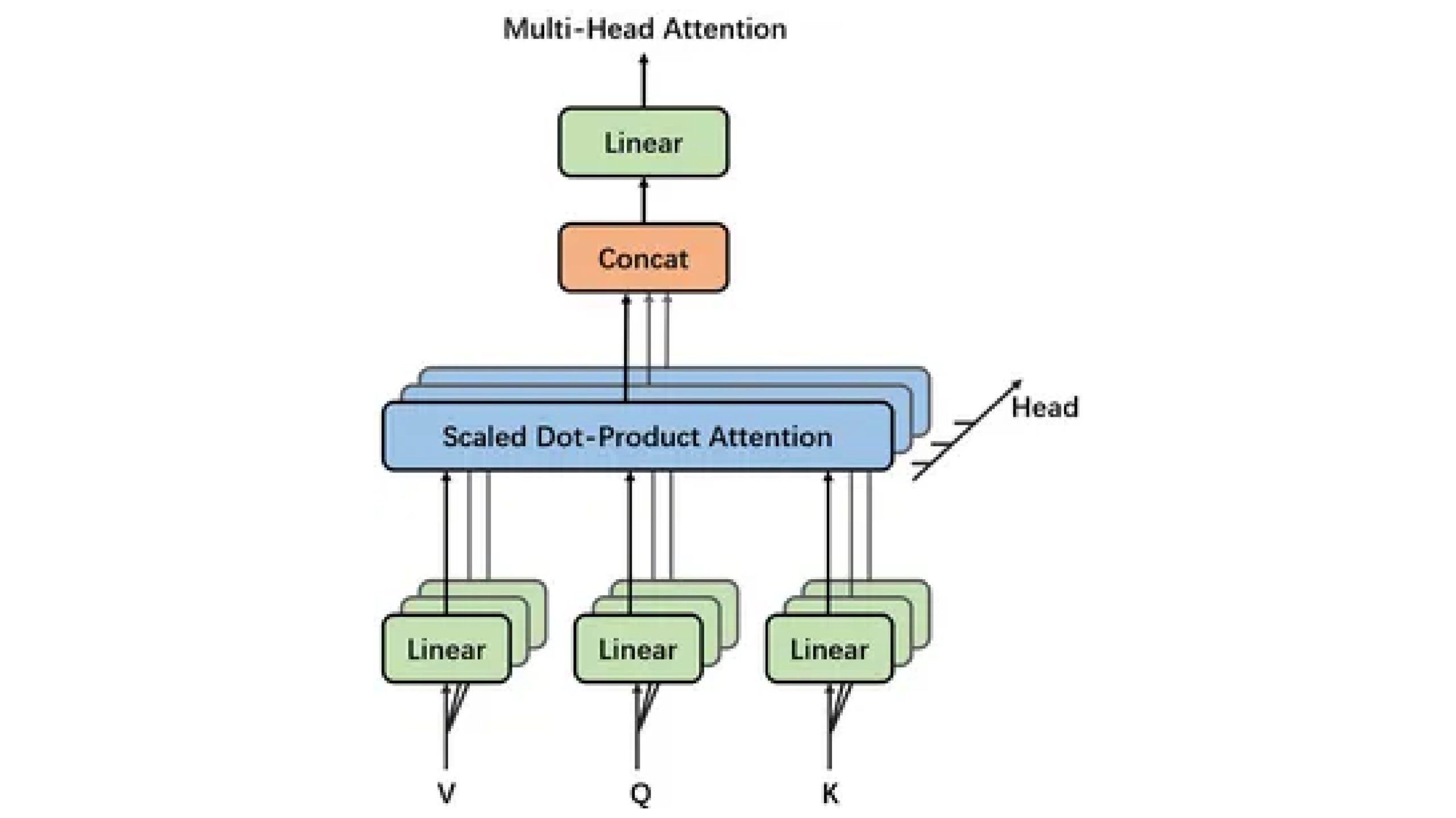

Multi-Head Attention

One of the defining features of the Transformer model is its ability to jointly attend to different parts of an input sequence—a feat made possible by multi-head attention. By splitting Query, Key, and Value vectors into multiple heads and performing independent self-attention computations, the model gains a nuanced perspective of the input sequence, enriching its representation with diverse contextual information.

Feed-Forward Networks

Each layer within the Transformer model also houses a feed-forward network, which is applied to every position in the sequence separately and identically. By combining linear transformations with a non-linear activation function, the feed-forward network transforms the context-aware representations produced by attention into richer features for the next layer.

Detailed Explanation of Transformer Components

To run the implementation, first execute the code for Positional Encoding, the Multi-Head Attention Mechanism, and Feed-Forward Networks, then the code for the Encoder, Decoder, and Transformer architecture, since the later classes depend on the earlier ones.

# Import libraries
import math
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F

Positional Encoding

In the Transformer model, positional encoding is a crucial component that injects information about the position of tokens into the input embeddings. Unlike recurrent neural networks (RNNs) or convolutional neural networks (CNNs), Transformers lack inherent knowledge of token positions because self-attention is order-agnostic: reordering the input tokens simply reorders the outputs in the same way. Positional encoding addresses this limitation by providing the model with positional information, enabling it to process sequences in their correct order.
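The order-blindness described above can be checked directly. The following is a minimal sketch, not part of the model built later in this article: the `self_attention` helper, the random weights, and the chosen permutation are all illustrative assumptions. It applies single-head self-attention without any positional information and confirms that shuffling the input tokens only shuffles the output rows.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with no positional information."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / (q.size(-1) ** 0.5)
    return F.softmax(scores, dim=-1) @ v

seq_len, d = 5, 8
x = torch.randn(seq_len, d)
w_q, w_k, w_v = (torch.randn(d, d) for _ in range(3))

perm = torch.tensor([4, 2, 0, 3, 1])  # an arbitrary reordering of the tokens
out = self_attention(x, w_q, w_k, w_v)
out_perm = self_attention(x[perm], w_q, w_k, w_v)

# Shuffling the input rows shuffles the output rows in exactly the same way
print(torch.allclose(out[perm], out_perm, atol=1e-4))
```

Because the outputs carry no trace of the original order, the model needs positions to be supplied explicitly, which is what positional encoding does.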

Concept of Positional Encoding:

Positional encoding is typically added to the input embeddings before they are fed into the Transformer model. It consists of a set of sinusoidal functions with different frequencies and phases, allowing the model to differentiate between tokens based on their positions in the sequence.

The formula for positional encoding is as follows:

Suppose you have an input sequence of length L and require the position of the k-th object within this sequence. The positional encoding is given by sine and cosine functions of varying frequencies:

$$P(k, 2i) = \sin\left(\frac{k}{n^{2i/d}}\right), \qquad P(k, 2i+1) = \cos\left(\frac{k}{n^{2i/d}}\right)$$

Where:

- k: Position of an object in the input sequence, 0≤k<L

- d: Dimension of the output embedding space

- P(k,j): Position function for mapping a position k in the input sequence to index (k,j) of the positional matrix

- n: User-defined scalar, set to 10,000 by the authors of Attention Is All You Need.

- i: Used for mapping to column indices, 0≤i<d/2; a single value of i maps to both a sine and a cosine function
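To make the symbols concrete, here is a small sketch that fills the positional matrix entry by entry from the sine and cosine definitions. The function name `positional_encoding` and the toy sizes (4 positions, d = 4, n = 100) are assumptions chosen so the numbers are easy to read; it assumes d is even.

```python
import math
import torch

def positional_encoding(seq_len, d, n=10000.0):
    """Build the (seq_len, d) matrix P directly from the sine/cosine definitions."""
    P = torch.zeros(seq_len, d)
    for k in range(seq_len):        # k: position in the sequence
        for i in range(d // 2):     # one value of i covers a sine/cosine column pair
            angle = k / (n ** (2 * i / d))
            P[k, 2 * i] = math.sin(angle)
            P[k, 2 * i + 1] = math.cos(angle)
    return P

P = positional_encoding(seq_len=4, d=4, n=100.0)
print(P)
```

Position 0 always maps to alternating 0 and 1 (sin 0 and cos 0), and columns with a larger i change more slowly from one position to the next, which is what gives each position a distinct pattern.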

Different Positional Encoding Schemes

There are various positional encoding schemes used in Transformers, each with its advantages and disadvantages:

- Fixed Positional Encoding: In this scheme, the positional encodings are pre-defined and fixed for all sequences. While simple and efficient, fixed positional encodings may not capture complex patterns in sequences.

- Learned Positional Encoding: Alternatively, positional encodings can be learned during training, allowing the model to adaptively capture positional information from the data. Learned positional encodings offer greater flexibility but require more parameters and computational resources.
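For comparison with the fixed scheme, a learned positional encoding can be sketched with an embedding table that holds one trainable vector per position. The class name `LearnedPositionalEncoding` and the sizes below are hypothetical and are not used elsewhere in this article.

```python
import torch
import torch.nn as nn

class LearnedPositionalEncoding(nn.Module):
    """Hypothetical learned alternative: one trainable vector per position."""

    def __init__(self, d_model, max_len=5000):
        super().__init__()
        self.position_embedding = nn.Embedding(max_len, d_model)

    def forward(self, x):
        # x: (batch_size, seq_length, d_model)
        positions = torch.arange(x.size(1), device=x.device)
        # The (seq_length, d_model) position vectors broadcast over the batch
        return x + self.position_embedding(positions)

learned_pos_encoder = LearnedPositionalEncoding(d_model=16, max_len=50)
x = torch.randn(2, 10, 16)
encoded = learned_pos_encoder(x)
num_params = sum(p.numel() for p in learned_pos_encoder.parameters())

print(encoded.shape)
print(num_params)  # max_len * d_model extra trainable parameters
```

The parameter count illustrates the trade-off mentioned above: the table adds max_len × d_model weights, and it cannot represent positions beyond max_len.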

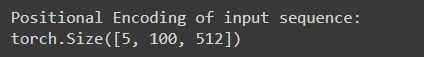

Implementation of Positional Encoding

Let’s implement positional encoding in Python:

# Implementation of PositionalEncoding
class PositionalEncoding(nn.Module):
    def __init__(self, d_model, max_len=5000):
        super(PositionalEncoding, self).__init__()
        # Precompute the positional encodings for positions 0..max_len-1
        pe = torch.zeros(max_len, d_model)
        position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)
        div_term = torch.exp(torch.arange(0, d_model, 2).float() * (-torch.log(torch.tensor(10000.0)) / d_model))
        pe[:, 0::2] = torch.sin(position * div_term)  # even columns
        pe[:, 1::2] = torch.cos(position * div_term)  # odd columns
        pe = pe.unsqueeze(0)  # (1, max_len, d_model), broadcasts over the batch
        # A buffer is stored with the module but is not a trainable parameter
        self.register_buffer('pe', pe)

    def forward(self, x):
        # x: (batch_size, seq_length, d_model); add the encoding of each position once
        x = x + self.pe[:, :x.size(1)]
        return x

# Example usage
d_model = 512
max_len = 100
num_heads = 8

# Positional encoding
pos_encoder = PositionalEncoding(d_model, max_len)

# Example input sequence
input_sequence = torch.randn(5, max_len, d_model)

# Apply positional encoding
input_sequence = pos_encoder(input_sequence)
print("Positional Encoding of input sequence:")
print(input_sequence.shape)

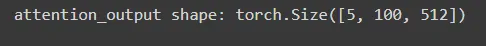

Multi-Head Attention Mechanism

In the Transformer architecture, the multi-head attention mechanism is a key component that enables the model to attend to different parts of the input sequence simultaneously. It allows the model to capture complex dependencies and relationships within the sequence, leading to improved performance in tasks such as language translation, text generation, and sentiment analysis.

Significance of Multi-Head Attention

The multi-head attention mechanism offers several advantages:

- Parallelization: The attention heads are independent of one another, so they can be computed in parallel as a single batched matrix operation. Because each head works in a lower-dimensional subspace, the total computational cost is comparable to that of single-head attention over the full dimensionality.

- Enhanced Representations: Each attention head focuses on different aspects of the input sequence, allowing the model to capture diverse patterns and relationships. This leads to richer and more robust representations of the input, enhancing the model’s ability to understand and generate text.

- Improved Generalization: Multi-head attention enables the model to attend to both local and global dependencies within the sequence, leading to improved generalization across various tasks and domains.

Computation of Multi-Head Attention:

Let’s break down the steps involved in computing multi-head attention:

- Linear Transformation: The input sequence undergoes learnable linear transformations to project it into multiple lower-dimensional representations, known as “heads.” Each head focuses on different aspects of the input, allowing the model to capture diverse patterns.

- Scaled Dot-Product Attention: Each head independently computes attention scores as the dot product between its query and key representations, scaled by the square root of the per-head dimension (the depth of each head, not the full model dimension). A softmax turns the scores into attention weights, which highlight the importance of each token relative to others and are used to take a weighted sum of the value representations.

- Concatenation and Linear Projection: The attention outputs from all heads are concatenated and linearly projected back to the original dimensionality. This process combines the insights from multiple heads, enhancing the model’s ability to understand complex relationships within the sequence.
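The three steps above mostly come down to reshaping tensors, so it can help to trace the shapes on a toy example. This is a simplified sketch under stated assumptions: the learned linear projections are left out (the same tensor stands in for query, key, and value), and the sizes are arbitrary.

```python
import torch

batch_size, seq_length, d_model, num_heads = 2, 5, 16, 4
depth = d_model // num_heads  # dimension of each head

x = torch.randn(batch_size, seq_length, d_model)

# Split: (batch, seq, d_model) -> (batch, heads, seq, depth)
heads = x.view(batch_size, seq_length, num_heads, depth).transpose(1, 2)

# Each head attends independently; scores are scaled by sqrt(depth)
scores = heads @ heads.transpose(-2, -1) / depth ** 0.5
weights = torch.softmax(scores, dim=-1)
per_head_output = weights @ heads

# Merge: (batch, heads, seq, depth) -> (batch, seq, d_model)
merged = per_head_output.transpose(1, 2).contiguous().view(batch_size, seq_length, d_model)

# Splitting and merging are exact inverses of each other
round_trip = heads.transpose(1, 2).contiguous().view(batch_size, seq_length, d_model)

print(heads.shape, weights.shape, merged.shape)
print(torch.equal(round_trip, x))
```

Each head gets its own seq_length × seq_length weight matrix, which is why different heads can focus on different relationships in the same sequence.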

Implementation with Code

Let’s translate the theory into code:

# Code implementation of Multi-Head Attention
class MultiHeadAttention(nn.Module):
    def __init__(self, d_model, num_heads):
        super(MultiHeadAttention, self).__init__()
        self.num_heads = num_heads
        self.d_model = d_model
        assert d_model % num_heads == 0
        self.depth = d_model // num_heads  # dimension of each head

        # Linear projections for query, key, and value
        self.query_linear = nn.Linear(d_model, d_model)
        self.key_linear = nn.Linear(d_model, d_model)
        self.value_linear = nn.Linear(d_model, d_model)

        # Output linear projection
        self.output_linear = nn.Linear(d_model, d_model)

    def split_heads(self, x):
        # (batch_size, seq_length, d_model) -> (batch_size, num_heads, seq_length, depth)
        batch_size, seq_length, d_model = x.size()
        return x.view(batch_size, seq_length, self.num_heads, self.depth).transpose(1, 2)

    def forward(self, query, key, value, mask=None):
        # Linear projections
        query = self.query_linear(query)
        key = self.key_linear(key)
        value = self.value_linear(value)

        # Split heads
        query = self.split_heads(query)
        key = self.split_heads(key)
        value = self.split_heads(value)

        # Scaled dot-product attention
        scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(self.depth)

        # Apply mask if provided: positions where mask == 0 are blocked
        if mask is not None:
            scores = scores.masked_fill(mask == 0, -1e9)

        # Apply softmax to obtain attention weights
        attention_weights = torch.softmax(scores, dim=-1)

        # Apply attention to values
        attention_output = torch.matmul(attention_weights, value)

        # Merge heads
        batch_size, _, seq_length, d_k = attention_output.size()
        attention_output = attention_output.transpose(1, 2).contiguous().view(batch_size, seq_length, self.d_model)

        # Linear projection
        attention_output = self.output_linear(attention_output)
        return attention_output

# Example usage
d_model = 512
max_len = 100
num_heads = 8
d_ff = 2048

# Multi-head attention
multihead_attn = MultiHeadAttention(d_model, num_heads)

# Example input sequence
input_sequence = torch.randn(5, max_len, d_model)

# Self-attention: the same sequence is used as query, key, and value
attention_output = multihead_attn(input_sequence, input_sequence, input_sequence)
print("attention_output shape:", attention_output.shape)

Feed-Forward Networks

In the context of Transformers, feed-forward networks play a crucial role in processing information and extracting features from the input sequence. They serve as the backbone of the model, facilitating the transformation of representations between different layers.

Role of Feed-Forward Networks

The feed-forward network within each Transformer layer is responsible for applying non-linear transformations to the input representations. It enables the model to capture complex patterns and relationships within the data, facilitating the learning of higher-level features.

Structure and Functioning of the Feed-Forward Layer

The feed-forward layer consists of two linear transformations separated by a non-linear activation function, typically ReLU (Rectified Linear Unit). Let’s break down the structure and functioning:

- Linear Transformation 1: The input representations are projected into a higher-dimensional space (of size d_ff in the code below) using a learnable weight matrix.

- Non-Linear Activation: The output of the first linear transformation is passed through a non-linear activation function, such as ReLU. This introduces non-linearity into the model, enabling it to capture complex patterns and relationships within the data.

- Linear Transformation 2: The output of the activation function is then projected back into the original dimensional space (of size d_model) using another learnable weight matrix.
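One property worth seeing in practice is that this feed-forward block acts on each position of the sequence on its own. The sketch below is an illustration under assumed toy sizes, built from `nn.Sequential` rather than the class defined later in this article; it checks that running the whole sequence through the block gives the same result for a position as running that position alone.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, d_ff = 8, 32

ffn = nn.Sequential(
    nn.Linear(d_model, d_ff),  # Linear Transformation 1: expand to d_ff
    nn.ReLU(),                 # Non-linear activation
    nn.Linear(d_ff, d_model),  # Linear Transformation 2: project back to d_model
)

x = torch.randn(1, 4, d_model)   # (batch, seq, d_model)
whole_sequence = ffn(x)
one_position = ffn(x[:, 2, :])   # position 2 processed on its own

print(whole_sequence.shape)
print(torch.allclose(whole_sequence[:, 2, :], one_position, atol=1e-6))
```

Mixing information between positions is therefore left entirely to the attention sub-layer; the feed-forward block only refines each token’s representation.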

Implementation with Code

Let’s implement the feed-forward network in Python:

# code implementation of Feed Forward

class FeedForward(nn.Module):

def __init__(self, d_model, d_ff):

super(FeedForward, self).__init__()

self.linear1 = nn.Linear(d_model, d_ff)

self.linear2 = nn.Linear(d_ff, d_model)

self.relu = nn.ReLU()

def forward(self, x):

x = self.relu(self.linear1(x))

x = self.linear2(x)

return x

# Example usage

d_model = 512

max_len = 100

num_heads = 8

d_ff = 2048

# Multi-head attention

multihead_attn = MultiHeadAttention(d_model, num_heads)

# Feed-forward network

ff_network = FeedForward(d_model, d_ff)

# Example input sequence

input_sequence = torch.randn(5, max_len, d_model)

# Multi-head attention

attention_output= multihead_attn(input_sequence, input_sequence, input_sequence)

# Feed-forward network

output_ff = ff_network(attention_output)

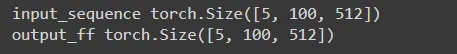

print('input_sequence',input_sequence.shape)

print("output_ff", output_ff.shape)

Encoder

The encoder plays a crucial role in processing input sequences in the Transformer model. Its primary task is to convert input sequences into meaningful representations that capture essential information about the input.

Structure and Functioning of Each Encoder Layer

The encoder first converts the input tokens into embeddings and adds positional encoding. The result then passes through a stack of identical layers, each containing a multi-head self-attention mechanism followed by a position-wise feed-forward network. The components are, in order:

- Input Embeddings: We first convert the input sequence into dense vector representations known as input embeddings. We map each word in the input sequence to a high-dimensional vector space using pre-trained word embeddings or learned embeddings during training.

- Positional Encoding: We add positional encoding to the input embeddings to incorporate the sequential order information of the input sequence. This allows the model to distinguish between the positions of words in the sequence, compensating for the fact that self-attention on its own ignores word order.

- Multi-Head Self-Attention Mechanism: After positional encoding, the input embeddings pass through a multi-head self-attention mechanism. This mechanism enables the encoder to weigh the importance of different words in the input sequence based on their relationships with other words. By attending to relevant parts of the input sequence, the encoder can capture long-range dependencies and semantic relationships.

- Position-Wise Feed-Forward Network: Following the self-attention mechanism, the encoder applies a position-wise feed-forward network to each position independently. This network consists of two linear transformations separated by a non-linear activation function, typically a ReLU. It helps capture complex patterns and relationships within the input sequence.

Implementation with Code

Let’s dive into the Python code for implementing a single encoder layer. The input embeddings and positional encoding are applied once, before the first layer, in the complete Transformer model shown later:

# Code implementation of ENCODER
class EncoderLayer(nn.Module):
    def __init__(self, d_model, num_heads, d_ff, dropout):
        super(EncoderLayer, self).__init__()
        self.self_attention = MultiHeadAttention(d_model, num_heads)
        self.feed_forward = FeedForward(d_model, d_ff)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, mask):
        # Self-attention sub-layer, followed by residual connection and layer norm
        attention_output = self.self_attention(x, x, x, mask)
        attention_output = self.dropout(attention_output)
        x = x + attention_output
        x = self.norm1(x)

        # Feed-forward sub-layer, followed by residual connection and layer norm
        feed_forward_output = self.feed_forward(x)
        feed_forward_output = self.dropout(feed_forward_output)
        x = x + feed_forward_output
        x = self.norm2(x)
        return x

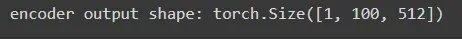

# Example usage
d_model = 512
max_len = 100
num_heads = 8
d_ff = 2048

# Encoder layer with dropout probability 0.1
encoder_layer = EncoderLayer(d_model, num_heads, d_ff, 0.1)

# Example input sequence
input_sequence = torch.randn(1, max_len, d_model)

# Pass the sequence through the encoder layer (no mask)
encoder_output = encoder_layer(input_sequence, None)
print("encoder output shape:", encoder_output.shape)

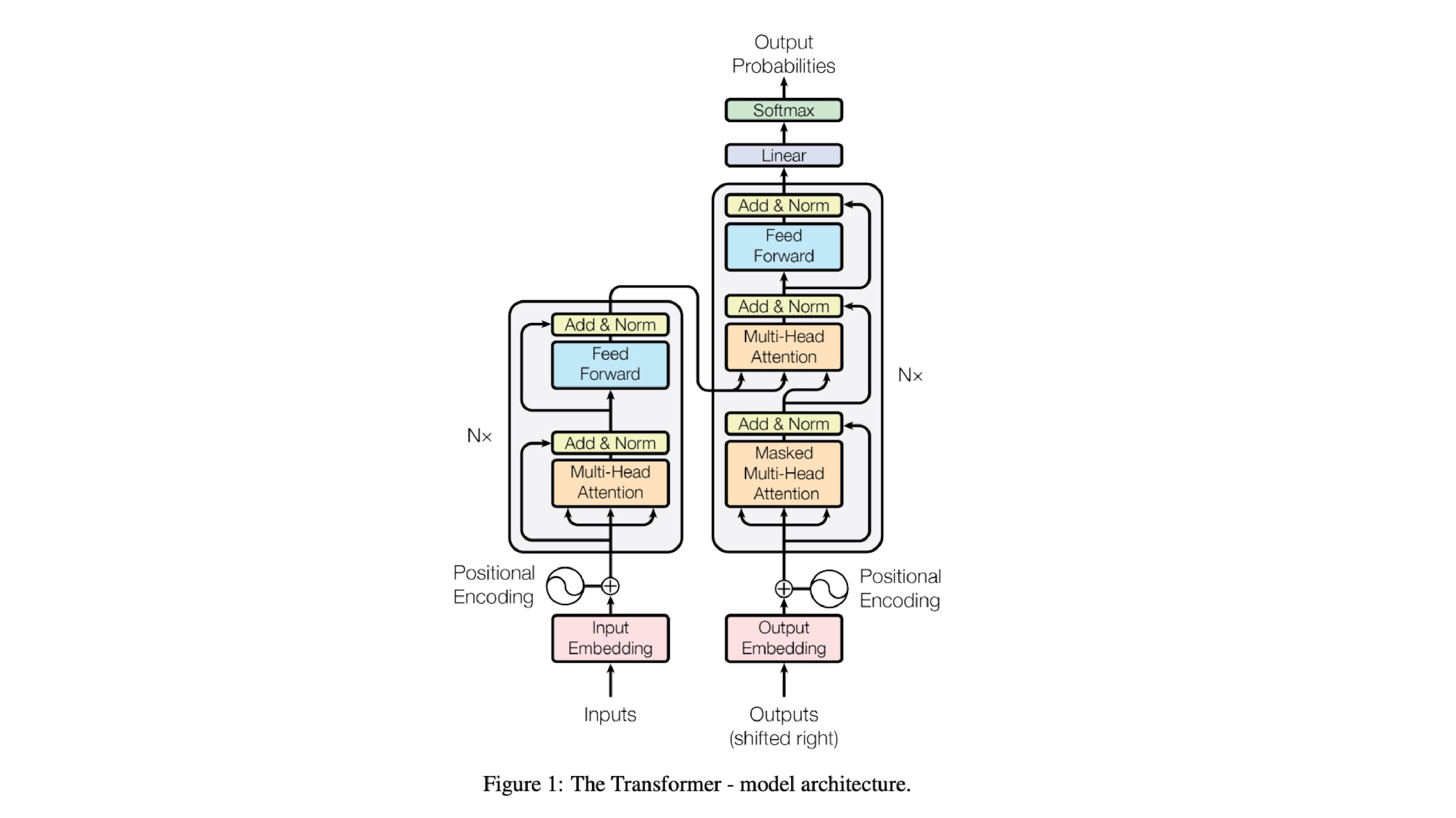

Decoder

In the Transformer model, the decoder plays a crucial role in generating output sequences based on the encoded representations of input sequences. It receives the encoded input sequence from the encoder and uses it to produce the final output sequence.

Function of the Decoder

The decoder’s primary function is to generate output sequences while attending to relevant parts of the input sequence and previously generated tokens. It utilizes the encoded representations of the input sequence to understand the context and make informed decisions about the next token to generate.

Decoder Layer and Its Components

The decoder layer consists of the following components:

- Output Embedding Shifted Right: During training, the target sequence is shifted right by one position before it is fed to the decoder. This ensures that the prediction for each position can depend only on the tokens that come before it.

- Positional Encoding: Similar to the encoder, the model adds positional encoding to the output embeddings to incorporate the sequential order of tokens. This encoding helps the decoder differentiate between tokens based on their position in the sequence.

- Masked Multi-Head Self-Attention Mechanism: The decoder employs a masked multi-head self-attention mechanism to attend to the previously generated tokens of the output sequence. The model applies a mask to prevent attending to future tokens, ensuring that each token can only attend to itself and preceding tokens.

- Encoder-Decoder Attention Mechanism: In addition to the masked self-attention mechanism, the decoder also incorporates an encoder-decoder attention mechanism. This mechanism enables the decoder to attend to relevant parts of the input sequence, aiding in the generation of output tokens informed by the input context.

- Position-Wise Feed-Forward Network: Following the attention mechanisms, the decoder applies a position-wise feed-forward network to each token independently. This network captures complex patterns and relationships within the input and previously generated tokens, contributing to the generation of accurate output sequences.
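The masking used by the decoder’s self-attention can be illustrated on its own. In this minimal sketch, all attention scores are set to zero as a placeholder (an assumption made only so the effect of the mask is easy to read), and the mask follows the same convention as the `masked_fill(mask == 0, -1e9)` call in the multi-head attention code: 1 means “may attend”, 0 means “blocked”.

```python
import torch

seq_length = 4
scores = torch.zeros(seq_length, seq_length)  # placeholder scores, all equal

# Lower-triangular mask: row i may attend to columns 0..i only
causal_mask = torch.tril(torch.ones(seq_length, seq_length))

masked_scores = scores.masked_fill(causal_mask == 0, -1e9)
weights = torch.softmax(masked_scores, dim=-1)
print(weights)
```

Every entry above the diagonal becomes 0 after the softmax, so the first token attends only to itself, the second splits its attention over the first two tokens, and so on.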

Implementation with Code

# Code implementation of DECODER
class DecoderLayer(nn.Module):
    def __init__(self, d_model, num_heads, d_ff, dropout):
        super(DecoderLayer, self).__init__()
        self.masked_self_attention = MultiHeadAttention(d_model, num_heads)
        self.enc_dec_attention = MultiHeadAttention(d_model, num_heads)
        self.feed_forward = FeedForward(d_model, d_ff)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, encoder_output, src_mask, tgt_mask):
        # Masked self-attention layer
        self_attention_output = self.masked_self_attention(x, x, x, tgt_mask)
        self_attention_output = self.dropout(self_attention_output)
        x = x + self_attention_output
        x = self.norm1(x)

        # Encoder-decoder attention layer: queries from the decoder, keys and values from the encoder
        enc_dec_attention_output = self.enc_dec_attention(x, encoder_output, encoder_output, src_mask)
        enc_dec_attention_output = self.dropout(enc_dec_attention_output)
        x = x + enc_dec_attention_output
        x = self.norm2(x)

        # Feed-forward layer
        feed_forward_output = self.feed_forward(x)
        feed_forward_output = self.dropout(feed_forward_output)
        x = x + feed_forward_output
        x = self.norm3(x)
        return x

# Define the DecoderLayer parameters
d_model = 512  # Dimensionality of the model
num_heads = 8  # Number of attention heads
d_ff = 2048  # Dimensionality of the feed-forward network
dropout = 0.1  # Dropout probability
batch_size = 1  # Batch size
max_len = 100  # Max length of sequence

# Define the DecoderLayer instance
decoder_layer = DecoderLayer(d_model, num_heads, d_ff, dropout)

# Masks use True/1 for "may attend" and False/0 for "blocked"
# Random source mask, for illustration only
src_mask = torch.rand(batch_size, max_len, max_len) > 0.5
# Causal target mask: each position may attend to itself and earlier positions
tgt_mask = torch.tril(torch.ones(max_len, max_len)).unsqueeze(0).bool()

# Pass the input tensors through the DecoderLayer
# (input_sequence and encoder_output come from the encoder example above)
output = decoder_layer(input_sequence, encoder_output, src_mask, tgt_mask)

# Output shape
print("Output shape:", output.shape)

Transformer Model Architecture

The Transformer model architecture is the culmination of various components discussed in previous sections. Let’s bring together the knowledge of encoders, decoders, attention mechanisms, positional encoding, and feed-forward networks to understand how the complete Transformer model is structured and functions.

Overview of Transformer Model

At its core, the Transformer model consists of encoder and decoder modules stacked together to process input sequences and generate output sequences. Here’s a high-level overview of the architecture:

Encoder

- The encoder module processes the input sequence, extracting features and creating a rich representation of the input.

- It comprises multiple encoder layers, each containing self-attention mechanisms and feed-forward networks.

- The self-attention mechanism allows the model to attend to different parts of the input sequence simultaneously, capturing dependencies and relationships.

- We add positional encoding to the input embeddings to provide information about the position of tokens in the sequence.

Decoder

- The decoder module takes the output of the encoder as input and generates the output sequence.

- Like the encoder, it consists of multiple decoder layers, each containing self-attention, encoder-decoder attention, and feed-forward networks.

- In addition to self-attention, the decoder incorporates encoder-decoder attention to attend to the input sequence while generating the output.

- Similar to the encoder, we add positional encoding to the decoder’s (target) embeddings to provide positional information.

Connection and Normalization

- Around each sub-layer (attention or feed-forward) in both the encoder and decoder modules, a residual connection is followed by layer normalization.

- These mechanisms facilitate the flow of gradients through the network and help stabilize training.
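The residual-then-normalize pattern can be captured in a few lines. The `ResidualNorm` wrapper below is a hypothetical helper written for illustration (the encoder and decoder classes in this article inline the same steps instead), and a plain linear layer stands in for the attention or feed-forward sub-layer.

```python
import torch
import torch.nn as nn

class ResidualNorm(nn.Module):
    """Hypothetical helper: dropout on the sub-layer output, add the input, then LayerNorm."""

    def __init__(self, d_model, dropout=0.1):
        super().__init__()
        self.norm = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, sublayer):
        # The "+ x" term gives gradients a direct path around the sub-layer
        return self.norm(x + self.dropout(sublayer(x)))

d_model = 16
block = ResidualNorm(d_model)
block.eval()  # disable dropout so the check below is deterministic

x = torch.randn(2, 5, d_model)
sublayer = nn.Linear(d_model, d_model)  # stand-in for attention or feed-forward
out = block(x, sublayer)

print(out.shape)
print(out.mean(dim=-1).abs().max())  # close to 0: LayerNorm re-centers each token
```

The shape is unchanged by the wrapper, which is what allows layers to be stacked freely.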

The complete Transformer model is constructed by stacking multiple encoder and decoder layers on top of each other. Each layer takes the output of the layer below it as input, allowing the model to learn hierarchical representations and capture intricate patterns in the data. The final encoder layer passes its output to every decoder layer, and the decoder generates the output sequence based on it.

Implementation of Transformer Model

Let’s implement the complete Transformer model in Python:

# Implementation of TRANSFORMER
class Transformer(nn.Module):
    def __init__(self, src_vocab_size, tgt_vocab_size, d_model, num_heads, num_layers, d_ff, max_len, dropout):
        super(Transformer, self).__init__()
        self.encoder_embedding = nn.Embedding(src_vocab_size, d_model)
        self.decoder_embedding = nn.Embedding(tgt_vocab_size, d_model)
        self.positional_encoding = PositionalEncoding(d_model, max_len)
        self.encoder_layers = nn.ModuleList([EncoderLayer(d_model, num_heads, d_ff, dropout) for _ in range(num_layers)])
        self.decoder_layers = nn.ModuleList([DecoderLayer(d_model, num_heads, d_ff, dropout) for _ in range(num_layers)])
        self.linear = nn.Linear(d_model, tgt_vocab_size)
        self.dropout = nn.Dropout(dropout)

    def generate_mask(self, src, tgt):
        # Padding masks: token id 0 is treated as padding
        src_mask = (src != 0).unsqueeze(1).unsqueeze(2)  # (batch, 1, 1, src_len)
        tgt_mask = (tgt != 0).unsqueeze(1).unsqueeze(3)  # (batch, 1, tgt_len, 1)
        seq_length = tgt.size(1)
        # Causal mask: True on and below the diagonal, so future tokens are blocked
        nopeak_mask = (1 - torch.triu(torch.ones(1, seq_length, seq_length, device=tgt.device), diagonal=1)).bool()
        tgt_mask = tgt_mask & nopeak_mask  # (batch, 1, tgt_len, tgt_len)
        return src_mask, tgt_mask

    def forward(self, src, tgt):
        src_mask, tgt_mask = self.generate_mask(src, tgt)

        # Embed the source tokens and add positional encoding
        encoder_embedding = self.encoder_embedding(src)
        en_positional_encoding = self.positional_encoding(encoder_embedding)
        src_embedded = self.dropout(en_positional_encoding)

        # Embed the target tokens and add positional encoding
        decoder_embedding = self.decoder_embedding(tgt)
        de_positional_encoding = self.positional_encoding(decoder_embedding)
        tgt_embedded = self.dropout(de_positional_encoding)

        # Encoder stack
        enc_output = src_embedded
        for enc_layer in self.encoder_layers:
            enc_output = enc_layer(enc_output, src_mask)

        # Decoder stack: every layer attends to the final encoder output
        dec_output = tgt_embedded
        for dec_layer in self.decoder_layers:
            dec_output = dec_layer(dec_output, enc_output, src_mask, tgt_mask)

        # Project to vocabulary logits
        output = self.linear(dec_output)
        return output

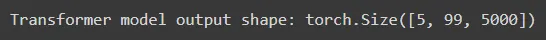

# Example use case
src_vocab_size = 5000
tgt_vocab_size = 5000
d_model = 512
num_heads = 8
num_layers = 6
d_ff = 2048
max_len = 100
dropout = 0.1

transformer = Transformer(src_vocab_size, tgt_vocab_size, d_model, num_heads, num_layers, d_ff, max_len, dropout)

# Generate random sample data
src_data = torch.randint(1, src_vocab_size, (5, max_len))  # (batch_size, seq_length)
tgt_data = torch.randint(1, tgt_vocab_size, (5, max_len))  # (batch_size, seq_length)

# The decoder input drops the last target token, so the output has seq_length - 1 positions
print(transformer(src_data, tgt_data[:, :-1]).shape)

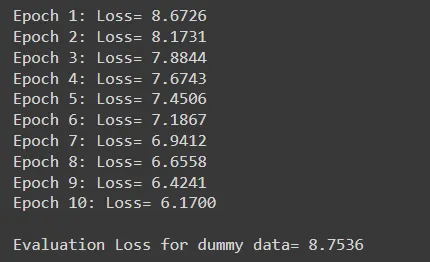

Training and Evaluation of Model

Training a Transformer model involves optimizing its parameters to minimize a loss function, typically using gradient descent and backpropagation. Once trained, the model’s performance is evaluated using various metrics to assess its effectiveness in solving the target task.

Training Process

- Gradient Descent and Backpropagation:

- During training, input sequences are fed into the model, and output sequences are generated.

- The model’s predictions are compared with the ground truth using a loss function, such as cross-entropy loss, which measures the disparity between predicted and actual values.

- Backpropagation computes the gradient of the loss with respect to every model parameter.

- The optimizer then adjusts the parameters based on these gradients, moving them in the direction that reduces the loss and repeating the process iteratively to improve model performance.

- Learning Rate Scheduling:

- Learning rate scheduling techniques may be applied to adjust the learning rate during training dynamically.

- Common strategies include warmup schedules, where the learning rate starts low and gradually increases, and decay schedules, where the learning rate decreases over time.
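As one concrete example of combining warmup and decay, the schedule described in Attention Is All You Need increases the learning rate linearly for a number of warmup steps and then decays it in proportion to the inverse square root of the step number. The sketch below is an illustration rather than part of this article’s training code: the function name `warmup_lr` is an assumption, and a small linear layer stands in for the Transformer.

```python
import torch
import torch.nn as nn
import torch.optim as optim

d_model, warmup_steps = 512, 4000

def warmup_lr(step):
    """Linear warmup for warmup_steps, then decay proportional to 1/sqrt(step)."""
    step = max(step, 1)  # avoid 0 ** -0.5 on the scheduler's initial call
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

model = nn.Linear(d_model, d_model)  # stand-in for the Transformer
# With a base lr of 1.0, LambdaLR makes the effective lr equal to warmup_lr(step)
optimizer = optim.Adam(model.parameters(), lr=1.0, betas=(0.9, 0.98), eps=1e-9)
scheduler = optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=warmup_lr)

for _ in range(3):
    optimizer.step()   # in real training, loss.backward() comes first
    scheduler.step()

print("lr after 3 steps:", scheduler.get_last_lr()[0])
for step in (1, 1000, 4000, 16000):
    print(step, f"{warmup_lr(step):.6f}")
```

The learning rate peaks at the end of warmup (step 4000 here) and falls off afterwards; in a real training loop, `scheduler.step()` would be called once per optimizer update.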

Evaluation Metrics

- Perplexity:

- Perplexity is a common metric used to evaluate the performance of language models, including Transformers.

- It measures how well the model predicts a given sequence of tokens.

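- Lower perplexity values indicate better performance. The ideal value is 1, which means the model assigns all probability to the correct token at every step, while a perplexity equal to the vocabulary size corresponds to guessing uniformly at random.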

- BLEU Score:

- The BLEU (Bilingual Evaluation Understudy) score is often used to evaluate the quality of machine-translated text.

- It compares the generated translation to one or more reference translations provided by human translators.

- BLEU scores range from 0 to 1 (often reported on a 0 to 100 scale), with higher scores indicating better translation quality.
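To make the two metrics above concrete, here is a small pure-Python sketch. `perplexity` exponentiates the average negative log-probability the model assigned to the correct tokens, and `clipped_ngram_precision` computes the clipped n-gram precision that BLEU is built on. Both function names are illustrative assumptions; a full BLEU score additionally combines the precisions for several n-gram orders and applies a brevity penalty, which this sketch leaves out.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """exp of the mean negative log-probability of the correct tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def clipped_ngram_precision(candidate, reference, n):
    """Fraction of candidate n-grams found in the reference, with counts clipped."""
    cand = Counter(tuple(candidate[i:i + n]) for i in range(len(candidate) - n + 1))
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


# A model that guesses uniformly over 5000 tokens has perplexity ~5000.
print(perplexity([1 / 5000] * 10))

# Clipping stops a candidate from scoring well by repeating a common word.
candidate = "the the the the".split()
reference = "the cat is on the mat".split()
print(clipped_ngram_precision(candidate, reference, n=1))  # 0.5
```

In the training code, the same perplexity can be obtained as the exponential of the mean cross-entropy loss, since that loss is already an average negative log-probability.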

Implementation of Training and Evaluation

Let’s do a basic code implementation for training and evaluating a Transformer model using PyTorch:

# training and evaluation of transformer model

criterion = nn.CrossEntropyLoss(ignore_index=0)

optimizer = optim.Adam(transformer.parameters(), lr=0.0001, betas=(0.9, 0.98), eps=1e-9)

# Training loop

transformer.train()

for epoch in range(10):

    optimizer.zero_grad()

    # Teacher forcing: feed the target without its last token,

    # and compare the predictions against the target shifted by one position

    output = transformer(src_data, tgt_data[:, :-1])

    loss = criterion(output.contiguous().view(-1, tgt_vocab_size),

                     tgt_data[:, 1:].contiguous().view(-1))

    loss.backward()

    optimizer.step()

    print(f"Epoch {epoch+1}: Loss= {loss.item():.4f}")

# Fresh dummy data for evaluation

src_data = torch.randint(1, src_vocab_size, (5, max_len)) # (batch_size, seq_length)

tgt_data = torch.randint(1, tgt_vocab_size, (5, max_len)) # (batch_size, seq_length)

# Evaluation loop

transformer.eval()

# Disable gradient tracking, since no parameters are updated during evaluation

with torch.no_grad():

    output = transformer(src_data, tgt_data[:, :-1])

    loss = criterion(output.contiguous().view(-1, tgt_vocab_size),

                     tgt_data[:, 1:].contiguous().view(-1))

    print(f"\nEvaluation Loss for dummy data= {loss.item():.4f}")

Advanced Topics and Applications

Transformers have sparked a plethora of advanced concepts and applications in natural language processing (NLP). Let’s delve into some of these topics, including different attention variants, BERT (Bidirectional Encoder Representations from Transformers), GPT (Generative Pre-trained Transformer), and their practical applications.

Different Attention Variants

Attention mechanisms are at the heart of transformer models, allowing them to focus on relevant parts of the input sequence. Several attention variants have been proposed to enhance the capabilities of transformers.

- Scaled Dot-Product Attention: The standard attention mechanism used in the original Transformer model. It computes attention scores as the dot product of query and key vectors, divided by the square root of the key dimensionality, and applies a softmax to turn the scores into attention weights.

- Multi-Head Attention: A powerful extension of attention that employs multiple attention heads to capture different aspects of the input sequence simultaneously. Each head learns different attention patterns, enabling the model to attend to various parts of the input in parallel.

- Relative Positional Encoding: Encodes the distance between pairs of tokens rather than only their absolute positions in the sequence. This variant enhances the model’s ability to understand the sequential relationships between tokens.
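As a rough illustration of the relative-position idea, the sketch below builds the matrix of clipped offsets between query position `i` and key position `j`, in the style of Shaw et al. (2018). Each entry would index a learned embedding that is added inside the attention computation. The function name `relative_position_index` and the parameter `max_distance` are assumptions for illustration only.

```python
def relative_position_index(seq_len, max_distance):
    """Matrix whose [i][j] entry is the offset j - i, clipped to
    [-max_distance, max_distance] and shifted to be a non-negative index."""
    return [
        [max(-max_distance, min(max_distance, j - i)) + max_distance
         for j in range(seq_len)]
        for i in range(seq_len)
    ]


# With max_distance=2 there are 2 * 2 + 1 = 5 distinct relative positions.
for row in relative_position_index(seq_len=4, max_distance=2):
    print(row)
```

Because every pair of tokens at the same offset shares an index, the model learns one embedding per relative distance instead of one per absolute position.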

BERT (Bidirectional Encoder Representations from Transformers)

BERT, a landmark transformer-based model, has had a profound impact on NLP. It undergoes pre-training on large corpora of text data using masked language modeling and next sentence prediction objectives. BERT learns deep contextualized representations of words, capturing bidirectional context and enabling it to perform well on a wide range of downstream NLP tasks.

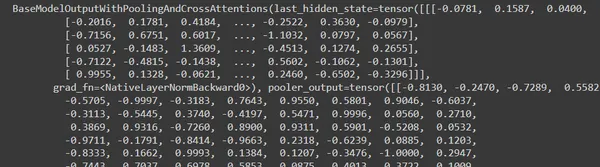

Code Snippet – BERT Model:

from transformers import BertModel, BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretrained('bert-base-uncased')

inputs = tokenizer("Hello, world!", return_tensors="pt")

outputs = model(**inputs)

print(outputs)

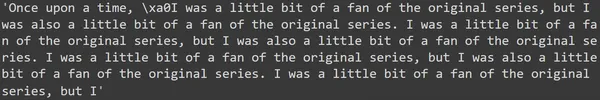

GPT (Generative Pre-trained Transformer)

GPT, a transformer-based model, is renowned for its generative capabilities. Unlike BERT, which is bidirectional, GPT utilizes a decoder-only architecture and autoregressive training to generate coherent and contextually relevant text. Researchers and developers have successfully applied GPT in various tasks such as text completion, summarization, dialogue generation, and more.

Code Snippet – GPT Model:

from transformers import GPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

model = GPT2LMHeadModel.from_pretrained('gpt2')

input_text = "Once upon a time, "

inputs = tokenizer(input_text, return_tensors='pt')

# Generate up to 100 new tokens, then decode the first (and only) sequence back to text

generated_ids = model.generate(**inputs, max_new_tokens=100)

output = tokenizer.decode(generated_ids[0], skip_special_tokens=True)

print(output)
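To show what autoregressive generation means in isolation, here is a toy sketch of greedy decoding: at each step the most probable next token is appended and the extended sequence is fed back in. The bigram table `BIGRAMS` and the helpers `toy_model` and `greedy_generate` are hypothetical stand-ins for a real language model, not part of the `transformers` library.

```python
def greedy_generate(next_token_probs, prompt, max_new_tokens, eos_token=None):
    """Repeatedly append the most probable next token to the sequence."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)         # dict: token -> probability
        next_token = max(probs, key=probs.get)   # greedy choice
        tokens.append(next_token)
        if next_token == eos_token:
            break
    return tokens


# Hypothetical toy "model": the next token depends only on the last one.
BIGRAMS = {
    "once": {"upon": 0.9, "more": 0.1},
    "upon": {"a": 1.0},
    "a": {"time": 0.8, "hill": 0.2},
    "time": {"<eos>": 1.0},
}


def toy_model(tokens):
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})


generated = greedy_generate(toy_model, ["once"], max_new_tokens=10, eos_token="<eos>")
print(generated)  # ['once', 'upon', 'a', 'time', '<eos>']
```

A real model conditions on the entire sequence generated so far rather than just the last token, and sampling strategies can replace the greedy choice to produce more varied text.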

Conclusion

Transformers have revolutionized Natural Language Processing (NLP) with their ability to capture context and understand language intricacies. Through attention mechanisms, encoder-decoder architecture, and multi-head attention, they’ve enabled tasks like machine translation and sentiment analysis on a scale never seen before. As we continue to explore models like BERT and GPT, it’s clear that Transformers are at the forefront of language understanding and generation. Their impact on NLP is profound, and the journey of discovery with Transformers promises to unveil even more remarkable advancements in the field.

Key Takeaways

- Central to Transformers, self-attention allows models to focus on important parts of the input sequence, improving understanding.

- Transformers utilize an encoder-decoder architecture to process input and generate output, with each layer containing self-attention and feed-forward networks.

- Through Python code snippets, we gained a hands-on understanding of implementing transformer components.

- Transformers excel in machine translation, text summarization, sentiment analysis, and more, handling large-scale datasets efficiently.

Additional Resources

For those interested in further reading and learning, here are some valuable resources:

- Research Papers:

- “Attention is All You Need” by Vaswani et al. (2017)

- “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” by Devlin et al. (2018)

- “Language Models are Unsupervised Multitask Learners” by Radford et al. (2019)

- Tutorials:

- “Attention in transformers, visually explained” – Tutorial series on YouTube by 3Blue1Brown

- “Transformer Neural Networks, ChatGPT’s foundation” – Tutorial series on YouTube by StatQuest with Josh Starmer

- GitHub Repository:

Frequently Asked Questions

A. Transformers are a deep learning model in Natural Language Processing (NLP) that efficiently capture long-range dependencies in sequential data by processing input sequences in parallel, unlike traditional recurrent models, which process tokens one at a time.

A. Transformers use an attention mechanism to focus on relevant input sequence parts for accurate predictions. It computes attention scores between tokens, calculating weighted sums through multiple layers, effectively capturing contextual information.

A. Transformer-based models such as BERT, GPT, and T5 find widespread use in Natural Language Processing (NLP) tasks such as sentiment analysis, machine translation, text summarization, and question answering.

A. A transformer model consists of encoder-decoder architecture, positional encoding, multi-head attention mechanism, and feed-forward networks. It processes input sequences, understands token order, and enhances representational capacity and performance with nonlinear transformations.

A. Implement transformers using deep learning libraries like PyTorch and TensorFlow, which offer pre-trained models and APIs for custom models. Learn transformer fundamentals through tutorials, documentation resources, and online courses, gaining hands-on experience in NLP tasks.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.