Introduction

The rapid expansion of generative AI model capabilities enables us to build many businesses around GenAI. Today’s models do not only generate text: powerful multimodal models such as GPT-4 and Gemini can also use image data to generate information. This capability has huge potential in the business world. For example, you can send any image to an AI model and get information about it directly, with little overhead. In this article, we will go through the process of using the Gemini Pro Vision multimodal model to get product information from an image, and then creating a FastAPI-based REST API to consume the extracted information. So, let’s start learning by building a product discovery API.

Learning Objectives

- What is REST architecture?

- Using REST APIs to access web data

- How to use FastAPI and Pydantic to develop a REST API

- What steps to take to build APIs using Google Gemini Pro Vision

- How to use the LlamaIndex library to access Google Gemini models

This article was published as a part of the Data Science Blogathon.


What is a REST API?

A REST API, or RESTful API, is an application programming interface (API) that follows the design principles of the Representational State Transfer (REST) architectural style. It helps developers integrate application components, for example in a microservices architecture.

An API is a way to enable an application or service to access resources within another service or application.

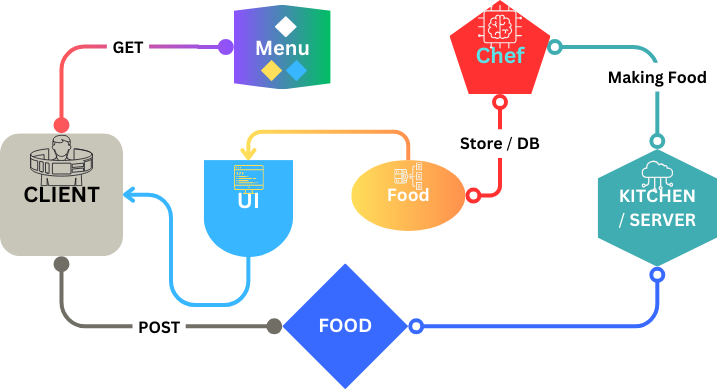

Let’s use a restaurant analogy to understand the concepts.

Imagine you own a restaurant. While it is open, two services are running:

- The kitchen, where the delicious food is made.

- The dining area, where people sit and eat.

Here, the kitchen is the SERVER, where the food (data) is produced for the people (clients). The clients check the menu (API) and place orders (requests) with the kitchen (server) using specific codes (HTTP methods) such as “GET”, “POST”, “PUT”, or “DELETE”.

Understanding HTTP methods through the restaurant analogy

- GET: The client browses the menu before ordering food, which means reading data from the server without changing it.

- POST: The client places an order, which means the kitchen starts making the food, or creating data on the server for the client.

- PUT: Imagine that after placing your order, you realize you forgot to add ice cream, so you update the existing order. This means updating existing data.

- DELETE: If you want to cancel the order, you delete the data using the “DELETE” method.

These are the most commonly used methods for building REST APIs.
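To make the analogy concrete, here is a minimal sketch of an in-memory order store in which each HTTP method maps to one CRUD operation (GET → read, POST → create, PUT → update, DELETE → delete). The `orders` dictionary and the `handle` function are hypothetical illustrations, not part of this article's project. In FastAPI, the same mapping is expressed with the `@app.get`, `@app.post`, `@app.put`, and `@app.delete` decorators.

```python
# Hypothetical in-memory "restaurant order" service: each HTTP method
# maps to one CRUD operation on the orders dictionary.
from typing import Dict, List, Optional

orders: Dict[int, List[str]] = {}


def handle(method: str, order_id: Optional[int] = None,
           items: Optional[List[str]] = None):
    """Dispatch an HTTP-style method to the matching operation on `orders`."""
    if method == "GET":
        # Read: return one order, or every order when no id is given
        return orders if order_id is None else orders.get(order_id)
    if method == "POST":
        # Create: the kitchen starts a new order and assigns it an id
        new_id = max(orders, default=0) + 1
        orders[new_id] = list(items or [])
        return new_id
    if method == "PUT":
        # Update: replace the existing order, e.g. to add the forgotten ice cream
        if order_id not in orders:
            raise KeyError(order_id)
        orders[order_id] = list(items or [])
        return orders[order_id]
    if method == "DELETE":
        # Delete: cancel the order and return what was removed
        return orders.pop(order_id)
    raise ValueError(f"Unsupported method: {method}")
```

For example, `handle("POST", items=["pizza"])` creates an order and returns its id, and `handle("PUT", order_id, ["pizza", "ice cream"])` replaces that order. PUT replaces the whole resource here, which matches its usual REST meaning.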

What is the FastAPI framework?

FastAPI is a high-performance modern web framework for API development. It is built on top of Starlette for web parts and Pydantic for data validation and serialization. The most noticeable features are below:

- High Performance: It is based on ASGI (Asynchronous Server Gateway Interface), so FastAPI can use asynchronous programming to handle high-concurrency scenarios without much overhead.

- Data Validation: FastAPI uses Pydantic, the most widely used Python data validation library. We will learn more about it later in the article.

- Automatic API Documentation: FastAPI generates an OpenAPI-standard schema and serves interactive documentation with Swagger UI.

- Easy Extensibility: FastAPI integrates easily with other Python libraries and frameworks.

What is LlamaIndex?

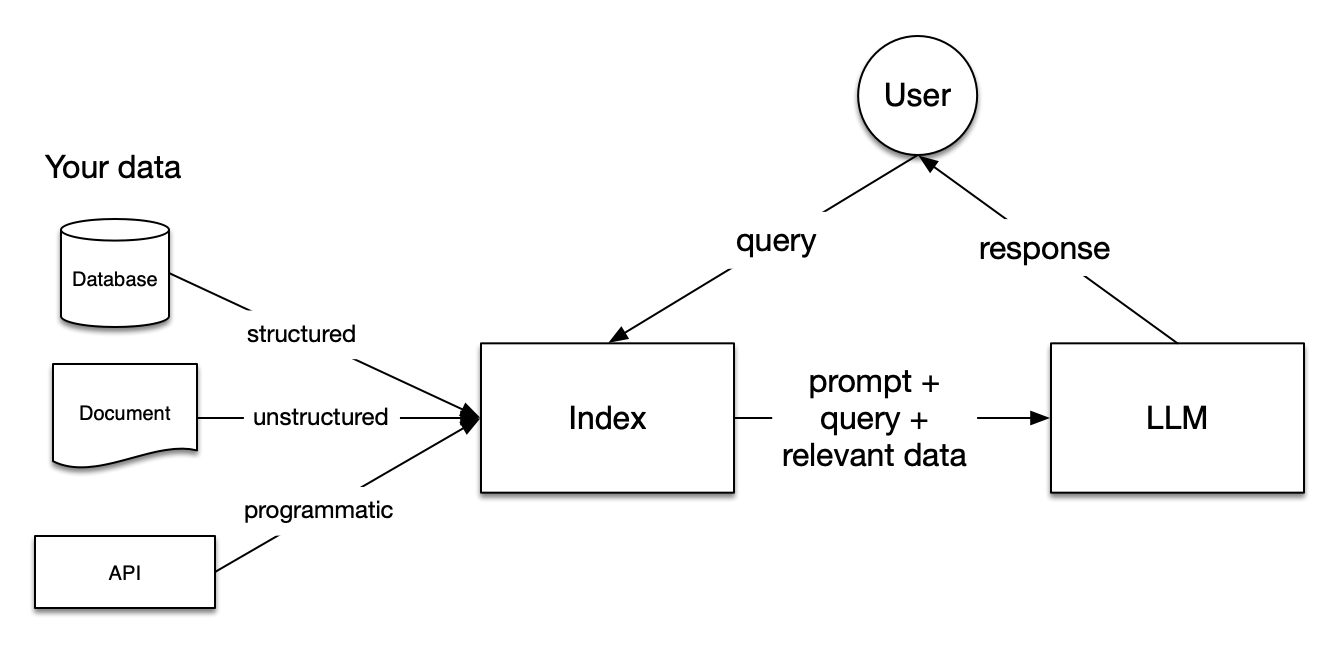

LlamaIndex is a framework that acts as a bridge between your data and LLMs. The LLMs can run locally (for example, with Ollama) or be accessed through an API service such as OpenAI or Gemini. With LlamaIndex, you can build Q&A systems, chat applications, intelligent agents, and other LLM-powered applications. It lays the foundation for Retrieval Augmented Generation (see the image) in three well-defined steps:

- Step One: Knowledge Base (Input)

- Step Two: Trigger/Query (Input)

- Step Three: Task/Action (Output)

In the context of this article, we will build Step Two and Step Three: we give an image as input and retrieve information about the products in that image.
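As a rough illustration of the three steps, the toy sketch below builds a tiny knowledge base, retrieves the most relevant entry for a query, and turns the result into a prompt. It is a deliberate simplification: word overlap stands in for the embeddings and vector index that a real LlamaIndex pipeline would use, and none of the names below are LlamaIndex APIs.

```python
# Toy walk-through of knowledge base -> query -> action.
# Word-overlap scoring is a stand-in for embedding similarity.
import re
from typing import List, Set

# Step One: knowledge base (input), illustrative product descriptions
knowledge_base: List[str] = [
    "Red running shoes with breathable mesh, category: footwear",
    "Blue denim jacket with button front, category: apparel",
    "Black wireless headphones with noise cancelling, category: electronics",
]


def words(text: str) -> Set[str]:
    """Lower-case alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Step Two: trigger/query, ranking documents by words shared with the query."""
    q = words(query)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def act(query: str, context: List[str]) -> str:
    """Step Three: task/action, here only building the prompt an LLM would receive."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {query}"
```

In this article's application, the image plays the role of the query, and the Gemini model performs the action of describing the products.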

Set up the project environment

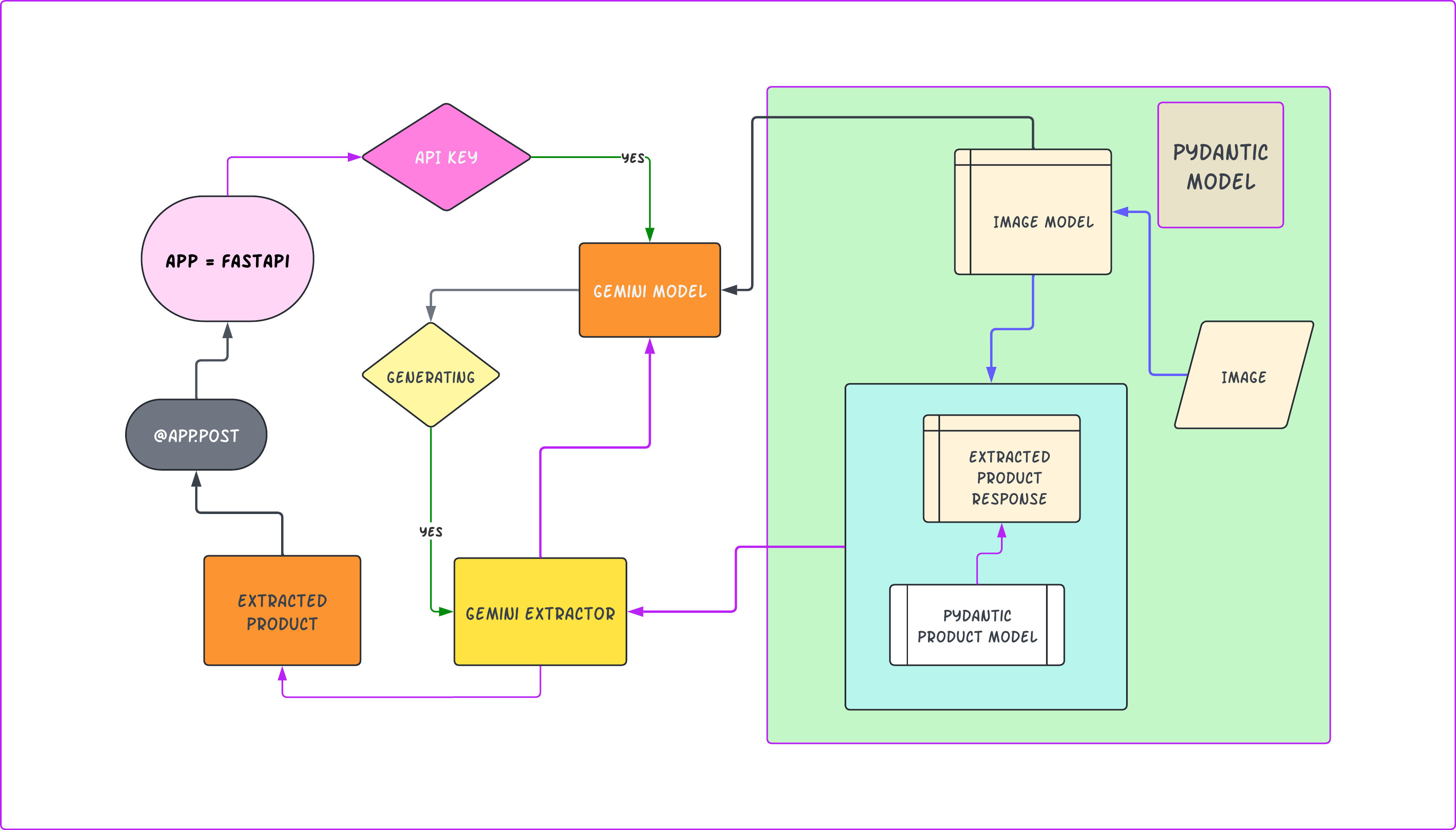

Here is the not-so-good flowchart of the application:

I will use conda to set up the project environment. Follow the steps below.

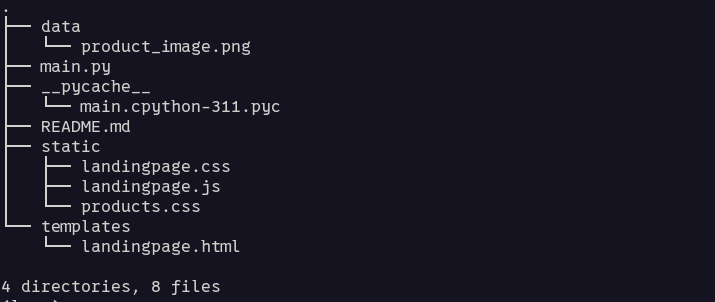

Schematic project structure

Step 1: Create a conda environment

```bash
conda create --name api-dev python=3.11
conda activate api-dev
```

Step 2: Install the necessary libraries

# llamaindex libraries

pip install llama-index llama-index-llms-gemini llama-index-multi-modal-llms-gemini

# google generative ai

pip install google-generativeai google-ai-generativelanguage

# for API development

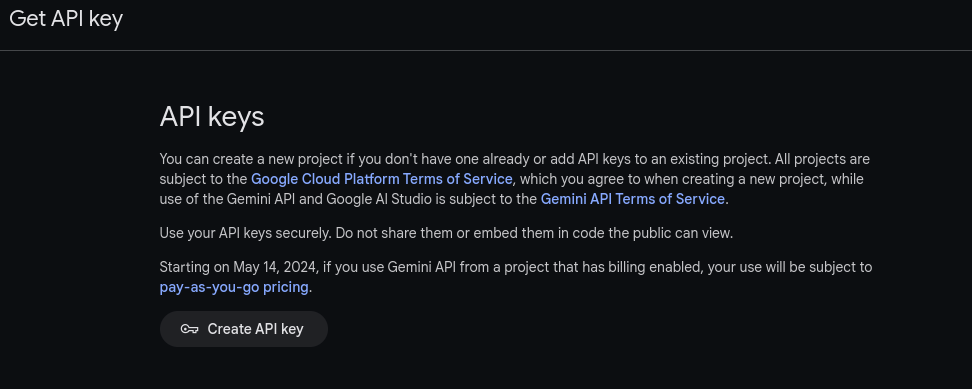

pip install fastapiStep 3: Getting the Gemini API KEY

Go to Google AI and click Get an API key. This takes you to Google AI Studio, where you can click Create API key.

Save the key somewhere safe; we will need it later.
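Before writing any application code, you may want to confirm that the environment can find the packages this article relies on. The script below is a hypothetical helper (`check_setup.py` and `missing_modules` are illustrative names). The module list is an assumed mapping from pip package names to import names; for example, python-dotenv is imported as `dotenv`.

```python
# check_setup.py: hypothetical helper that reports which of the
# article's modules cannot be found in the active environment.
import importlib.util
from typing import Iterable, List

# Assumed import names for the packages used in this article
REQUIRED_MODULES = [
    "llama_index",
    "google.generativeai",
    "fastapi",
    "uvicorn",
    "dotenv",
    "jinja2",
]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that cannot be found in this environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # Raised for dotted names when the parent package (e.g. "google") is absent
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    gaps = missing_modules(REQUIRED_MODULES)
    print("All packages found" if not gaps else f"Missing: {gaps}")
```

Run it with `python check_setup.py` inside the activated `api-dev` environment.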

Implementing REST API

Create a separate folder for the project; let’s name it gemini_productAPI.

```bash
# create an empty project directory and move into it
mkdir gemini_productAPI
cd gemini_productAPI

# activate the conda environment
conda activate api-dev
```

To make sure FastAPI is installed correctly, create a Python file named main.py and add the following code to it.

```python
from fastapi import FastAPI, Request

app = FastAPI()


# Landing page for the application
@app.get("/")
def index(request: Request):
    return "Hello World"
```

Because FastAPI is an ASGI framework, we will use an asynchronous web server to run the application. There are two types of server gateway interfaces: WSGI and ASGI. Both sit between a web server and a Python web application or framework and handle incoming client requests, but they do it differently.

- WSGI, or Web Server Gateway Interface: It is synchronous, which means each worker handles one request at a time and is blocked until that request is completed before it can take the next one. The popular Python web framework Flask is a WSGI framework.

- ASGI, or Asynchronous Server Gateway Interface: It is asynchronous, which means a single worker can handle multiple requests concurrently, switching between them while they wait on I/O. It is more modern and better suited to many clients, long-lived connections, and bidirectional communication such as real-time messaging and video calls.
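To see why the asynchronous model matters for an API that waits on a remote model, consider the sketch below. It uses plain `asyncio`, not FastAPI, to simulate three slow requests. When they are handled one after another, the total time is roughly the sum of the delays. When they run concurrently with `asyncio.gather`, it is roughly the longest delay. The function names are hypothetical, and the one-at-a-time version is only an analogy for a single blocking worker.

```python
# Simulated slow requests: one at a time vs. concurrently on one event loop.
import asyncio
import time
from typing import Callable, List, Sequence, Tuple


async def handle_request(name: str, delay: float, log: List[str]) -> str:
    """Pretend to serve a request that waits `delay` seconds on I/O."""
    await asyncio.sleep(delay)  # non-blocking: other tasks run meanwhile
    log.append(name)            # record the completion order
    return f"{name} done"


async def one_at_a_time(delays: Sequence[float]) -> List[str]:
    # Each request must finish before the next one starts
    log: List[str] = []
    for i, delay in enumerate(delays):
        await handle_request(f"req{i}", delay, log)
    return log


async def concurrently(delays: Sequence[float]) -> List[str]:
    # All requests start together; each finishes when its own wait ends
    log: List[str] = []
    await asyncio.gather(
        *(handle_request(f"req{i}", d, log) for i, d in enumerate(delays))
    )
    return log


def timed(strategy: Callable, delays: Sequence[float]) -> Tuple[List[str], float]:
    """Run a strategy on a fresh event loop and measure wall-clock time."""
    start = time.perf_counter()
    log = asyncio.run(strategy(delays))
    return log, time.perf_counter() - start
```

With delays of 0.3, 0.1, and 0.2 seconds, the one-at-a-time run takes about 0.6 seconds, while the concurrent run takes about 0.3 seconds and finishes the shortest request first. In FastAPI specifically, `async def` endpoints run on the event loop, while plain `def` endpoints are run in a thread pool so they do not block it.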

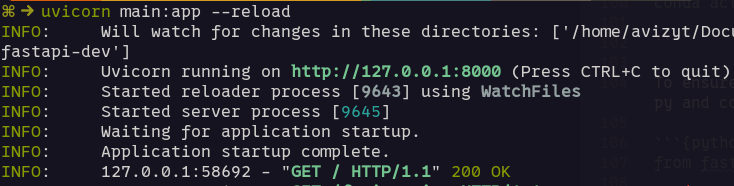

Uvicorn is an ASGI web server implementation for Python. It provides the standard interface between an async-capable Python web server and a framework or application. FastAPI is an ASGI framework, and Uvicorn is the server commonly used to run it.

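Now open a terminal (for example, in VS Code) and start the Uvicorn server:

```bash
uvicorn main:app --reload
```

Then go to http://127.0.0.1:8000 in your browser. You will see Hello World written on it. The --reload flag restarts the server whenever you change the code, which is useful during development.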

Now, we are set to start coding the main project.

Importing Libraries

```python
import os
from typing import List

# environment variables (python-dotenv)
from dotenv import load_dotenv

# fastapi libs
from fastapi import FastAPI, Request, HTTPException
from fastapi.responses import HTMLResponse
from fastapi.staticfiles import StaticFiles
from fastapi.templating import Jinja2Templates

# pydantic libs
from pydantic import BaseModel, ConfigDict, Field, HttpUrl

# llamaindex libs
from llama_index.multi_modal_llms.gemini import GeminiMultiModal
from llama_index.core.program import MultiModalLLMCompletionProgram
from llama_index.core.output_parsers import PydanticOutputParser
from llama_index.core.multi_modal_llms.generic_utils import load_image_urls
```

After importing the libraries, create a .env file in the project root and put the Google Gemini API key you got earlier in it.

```
# put it in the .env file
GOOGLE_API_KEY="AB2CD4EF6GHIJKLM-NO6P3QR6ST"
```

Now instantiate the FastAPI class and load the Google API key from the .env file:

```python
app = FastAPI()

# read the variables in .env into the process environment
load_dotenv()
GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")
```

Create a simple landing page

Create a GET method for our simple landing page for the project.

```python
# Landing page for the application
@app.get("/", response_class=HTMLResponse)
def landingpage(request: Request):
    return templates.TemplateResponse(
        "landingpage.html",
        {"request": request}
    )
```

To render HTML in FastAPI, we use Jinja templates. Create a directory named templates at the root of your project, and a directory named static for static files such as CSS and JavaScript files. Then add the following code to main.py right after `app = FastAPI()`.

```python
# Linking template directory using Jinja Template
templates = Jinja2Templates(directory="templates")

# Mounting static files from the static directory
app.mount("/static", StaticFiles(directory="static"), name="static")
```

The code above links your templates and static directories to the FastAPI application.

Now, create a file called landingpage.html in the templates directory. Go to the GitHub link and copy the code from /template/landingpage.html into your project.

Truncated code snippets

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Product Discovery API</title>
    <link rel="stylesheet" href="{{ url_for('static', path='/landingpage.css') }}">
</head>
<body>
    <header class="header">
        <div class="container header-container">
            <h1 class="content-head is-center">
                Discover Products with Google Gemini Pro Vision
    ....
    </header>
    <main>
    ....
    </main>
    <footer class="footer">
        <div class="container footer-container">
            <p class="footer-text">© 2024 Product Discovery API. All rights reserved.</p>
        </div>
    </footer>
</body>
</html>
```

After that, create two files, landingpage.css and landingpage.js, in your static directory. Now, go to the GitHub link and copy the code from landingpage.js into your project.

Truncated code snippets

```javascript
document.getElementById('upload-btn').addEventListener('click', function() {
    const fileInput = document.getElementById('upload');
    const file = fileInput.files[0];
    ......
document.getElementById('contact-form').addEventListener(
    'submit', function(event) {
        event.preventDefault();
        alert('Message sent!');
        this.reset();
    }
);
```

For the CSS, go to the GitHub link and copy the code from landingpage.css into your project.

Truncated code snippets

```css
body {
    font-family: 'Arial', sans-serif;
    margin: 5px;
    padding: 5px;
    box-sizing: border-box;
    background-color: #f4f4f9;
}

.container {
    max-width: 1200px;
    margin: 0 auto;
    padding: 0 20px;
}
```

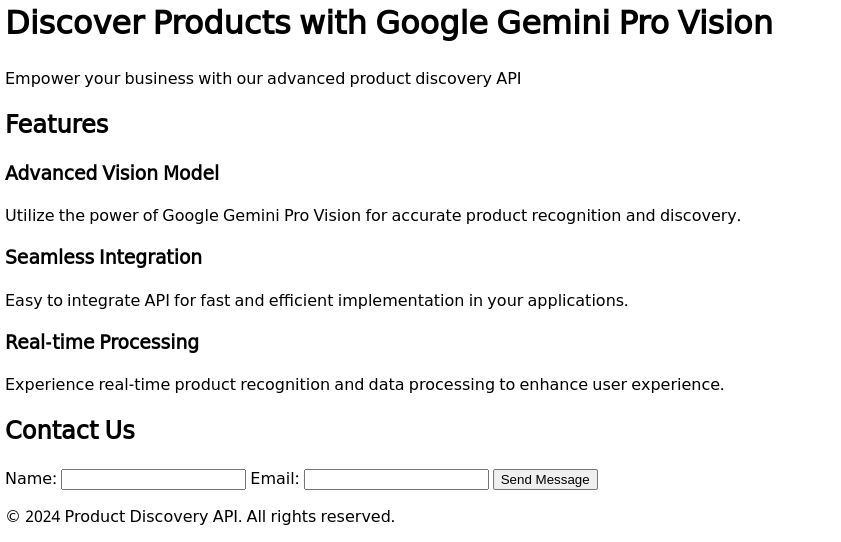

The final page looks like this.

This is a very basic landing page created for this article. You can make it more attractive with additional CSS styling or a UI library such as React.

Implementing an Information Extraction Function

We will use the Google Gemini Pro Vision model to extract product information from an image.

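Create a function named gemini_extractor.

```python
def gemini_extractor(model_name, output_class, image_documents, prompt_template_str):
    """Ask a Gemini multimodal model about the images and parse its answer
    into an instance of output_class (a Pydantic model)."""
    gemini_llm = GeminiMultiModal(api_key=GOOGLE_API_KEY, model_name=model_name)

    # The program combines the prompt, the images, the model, and an output
    # parser that converts the model's text reply into output_class
    llm_program = MultiModalLLMCompletionProgram.from_defaults(
        output_parser=PydanticOutputParser(output_class),
        image_documents=image_documents,
        prompt_template_str=prompt_template_str,
        multi_modal_llm=gemini_llm,
        verbose=True,
    )

    # Calling the program sends the request and returns the parsed object
    response = llm_program()
    return response
```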

In this function, we use LlamaIndex’s GeminiMultiModal class to work with the Google Gemini API. LlamaIndex’s MultiModalLLMCompletionProgram takes the output parser, the image data, the prompt, and the GenAI model, and uses them to get the desired response from the Gemini Pro Vision model.

To extract information from the image, we need to engineer a prompt for this purpose:

```python
# A space before each trailing backslash keeps words from running together,
# because the backslash also removes the line break.
prompt_template_str = """\
You are an expert system designed to extract products from images for \
an e-commerce application. Please provide the product name, product color, \
product category and a descriptive query to search for the product. \
Accurately identify every product in an image and provide a descriptive \
query to search for the product. You just return a correctly formatted \
JSON object with the product name, product color, product category and \
description for each product in the image\
"""
```

With this prompt, we tell the model that it is an expert system that extracts information from images. It will extract the following information from the given input image:

- Name of product

- Color

- Category

- Description

This prompt will be used as an argument in the above gemini_extractor function later.
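A subtlety when writing multi-line prompts like this one: inside a (non-raw) triple-quoted string, a backslash at the end of a line is a line continuation. Both the backslash and the newline are dropped, and the next line is attached directly to the previous one. Unless there is a space before each trailing backslash, words run together; for example, "for" followed by "an" becomes "foran". The short, illustrative strings below show the effect, plus an alternative that makes every space explicit.

```python
# In a triple-quoted string, a trailing backslash removes the line break,
# so the text of the next line is joined directly to the current one.
without_space = """\
extract products from images for\
an e-commerce application\
"""

with_space = """\
extract products from images for \
an e-commerce application\
"""

# Alternative: adjacent string literals are concatenated at compile time,
# which makes every space visible in the source.
concatenated = (
    "extract products from images for "
    "an e-commerce application"
)
```

`without_space` contains "foran e-commerce", while `with_space` and `concatenated` read naturally.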

Generative AI models can produce undesired responses: they do not always follow the prompt exactly, and may, for example, return malformed JSON or leave out fields. This is a problem when building an API on top of them. Pydantic helps mitigate this kind of issue by validating the model’s output against a defined schema. FastAPI itself is built on Pydantic and uses it to validate its API models.
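To illustrate what validating model output involves, here is a simplified sketch that uses only the standard library. It pulls the JSON object out of a model reply, which may include extra prose, and checks every product's fields and types, rejecting anything malformed. It is a stand-in built on `dataclasses`, not how Pydantic or LlamaIndex's `PydanticOutputParser` is implemented, and `parse_products` is a hypothetical name.

```python
# Standard-library stand-in for parsing and validating a model's JSON reply.
import json
import re
from dataclasses import dataclass, fields
from typing import List


@dataclass
class Product:
    id: int
    product_name: str
    color: str
    category: str
    description: str


def parse_products(raw: str) -> List[Product]:
    """Extract the JSON object from a model reply and validate each product."""
    # Models often wrap JSON in prose or markdown code fences, so take the
    # span from the first "{" to the last "}"
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")
    data = json.loads(match.group(0))

    products = []
    for item in data.get("products", []):
        kwargs = {}
        for f in fields(Product):
            if f.name not in item:
                raise ValueError(f"missing field: {f.name}")
            value = item[f.name]
            # f.type is the annotated class (int or str) in this module
            if not isinstance(value, f.type):
                raise ValueError(f"field {f.name} should be {f.type.__name__}")
            kwargs[f.name] = value
        products.append(Product(**kwargs))
    return products
```

Pydantic does this declaratively and with far richer rules (type coercion, nested models, detailed error reports), which is why the article relies on it.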

Creating a Product model using Pydantic

```python
class Product(BaseModel):
    id: int
    product_name: str
    color: str
    category: str
    description: str


class ExtractedProductsResponse(BaseModel):
    products: List[Product]


class ImageRequest(BaseModel):
    url: str

    # example request body shown in the OpenAPI docs
    model_config = ConfigDict(
        json_schema_extra={
            "examples": [
                {
                    "url": (
                        "https://images.pexels.com/photos/356056/pexels-photo-356056.jpeg"
                        "?auto=compress&cs=tinysrgb&w=1260&h=750&dpr=1"
                    )
                }
            ]
        }
    )
```

The Product class above defines the data model for a product, the ExtractedProductsResponse class represents a response structure containing a list of these products, and the ImageRequest class defines the input that clients send: the URL of an image. Pydantic enforces the structure of this data during validation and serialization.

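Creating the extracted products API endpoint with the POST method

```python
# in-memory store of every extraction (lost when the server restarts)
all_products = []


@app.post("/extracted_products")
def extracted_products(image_request: ImageRequest):
    responses = gemini_extractor(
        # Google has retired some older Gemini model names over time; if this
        # one is rejected, switch to a currently available vision-capable model
        model_name="models/gemini-pro-vision",
        output_class=ExtractedProductsResponse,
        image_documents=load_image_urls([image_request.url]),
        prompt_template_str=prompt_template_str,
    )
    all_products.append(responses)
    return responses
```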

In the code above, we create an endpoint in the FastAPI application using the POST method with the decorator @app.post("/extracted_products"), which processes the requested image to extract product information. The extracted_products function handles requests to this endpoint and takes an image_request parameter of type ImageRequest.

We call the gemini_extractor function we created earlier to extract the information and store the response in the all_products list. For simplicity, we use a built-in Python list, so the data is lost when the server restarts. You can add database logic to store the responses in a database instead; MongoDB would be a good choice for this type of JSON data.
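The article suggests MongoDB. As a dependency-free illustration of the same idea, the sketch below swaps in SQLite from Python's standard library and stores each response as a JSON document in a text column. The table name, the `PRODUCTS_DB` environment variable, and the function names are hypothetical.

```python
# Hypothetical persistence layer: SQLite (standard library) stands in for
# MongoDB, storing each extraction result as a JSON document.
import json
import os
import sqlite3
from contextlib import closing
from typing import Any, Dict, List

# Assumed database location, overridable through an environment variable
DB_PATH = os.environ.get("PRODUCTS_DB", "products.db")

SCHEMA = (
    "CREATE TABLE IF NOT EXISTS extractions ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " image_url TEXT NOT NULL,"
    " payload TEXT NOT NULL)"
)


def save_extraction(image_url: str, payload: Dict[str, Any], path: str = DB_PATH) -> int:
    """Insert one extraction result and return its row id."""
    # A short-lived connection per call avoids sharing one sqlite3 connection
    # across the worker threads FastAPI uses for plain `def` endpoints
    with closing(sqlite3.connect(path)) as conn:
        with conn:  # commits the transaction if no exception is raised
            conn.execute(SCHEMA)
            cur = conn.execute(
                "INSERT INTO extractions (image_url, payload) VALUES (?, ?)",
                (image_url, json.dumps(payload)),
            )
        return cur.lastrowid


def load_extractions(path: str = DB_PATH) -> List[Dict[str, Any]]:
    """Return every stored payload, oldest first."""
    with closing(sqlite3.connect(path)) as conn:
        conn.execute(SCHEMA)
        rows = conn.execute("SELECT payload FROM extractions ORDER BY id").fetchall()
    return [json.loads(row[0]) for row in rows]
```

Inside extracted_products, you could call `save_extraction(image_request.url, responses.model_dump())` in place of `all_products.append(responses)`. `model_dump()` is the Pydantic v2 method that converts a model to a dictionary.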

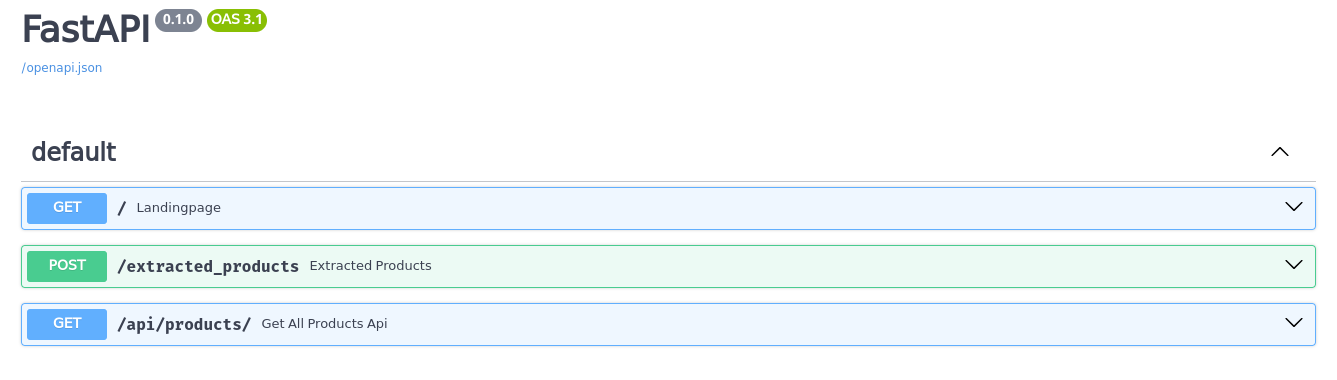

Requesting an image from the OpenAPI docs

Go to http://127.0.0.1:8000/docs in your browser; you will see the OpenAPI docs.

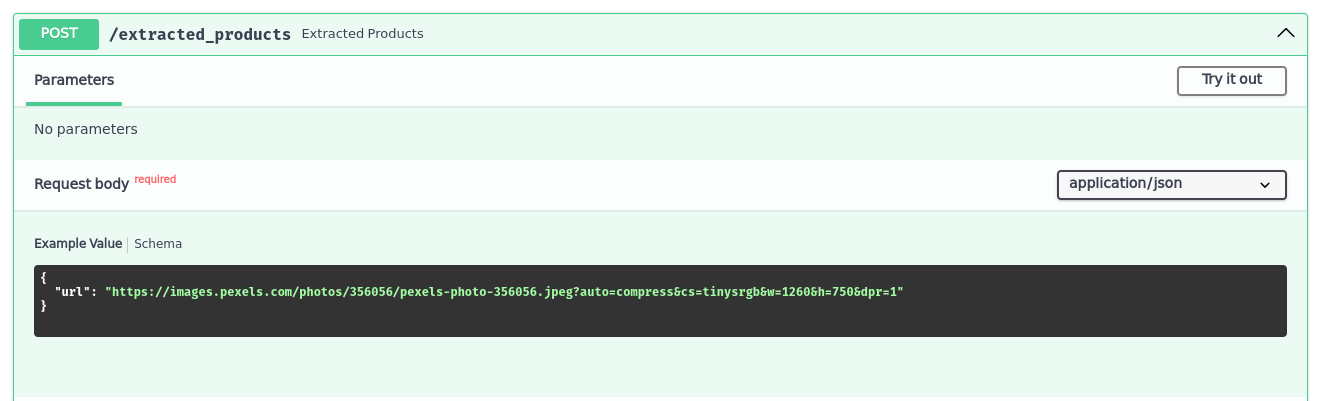

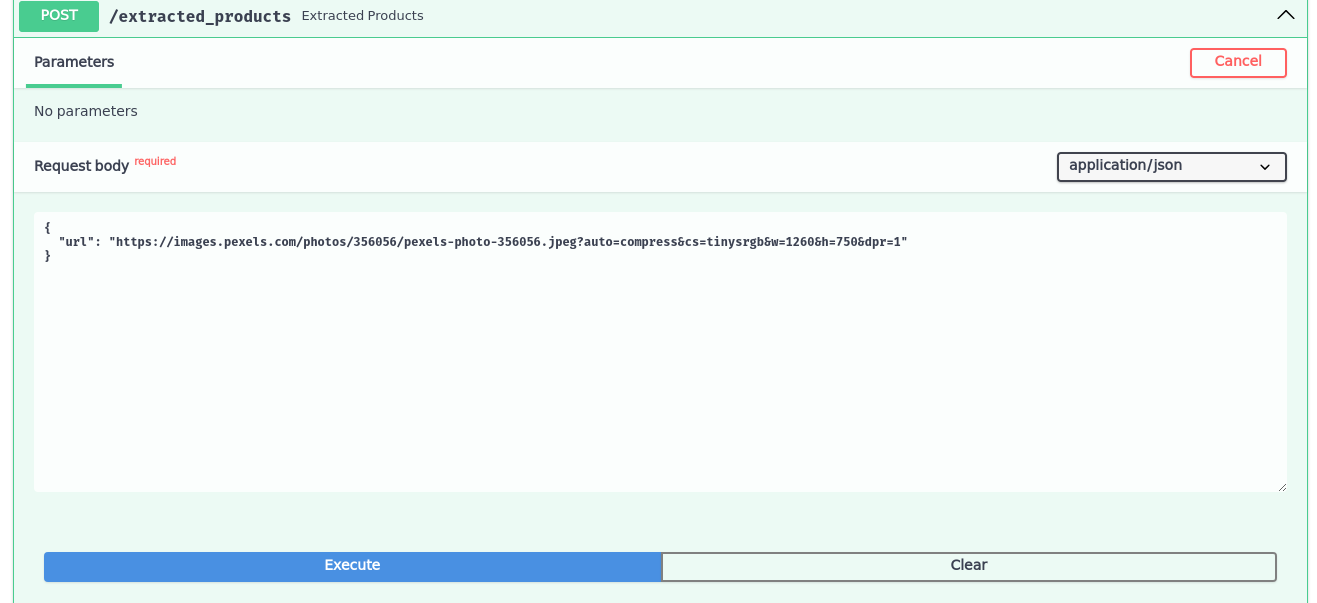

Expand the /extracted_products endpoint and click Try it out on the right.

Then click Execute, and it will extract the product information from the image using the Gemini Pro Vision model.

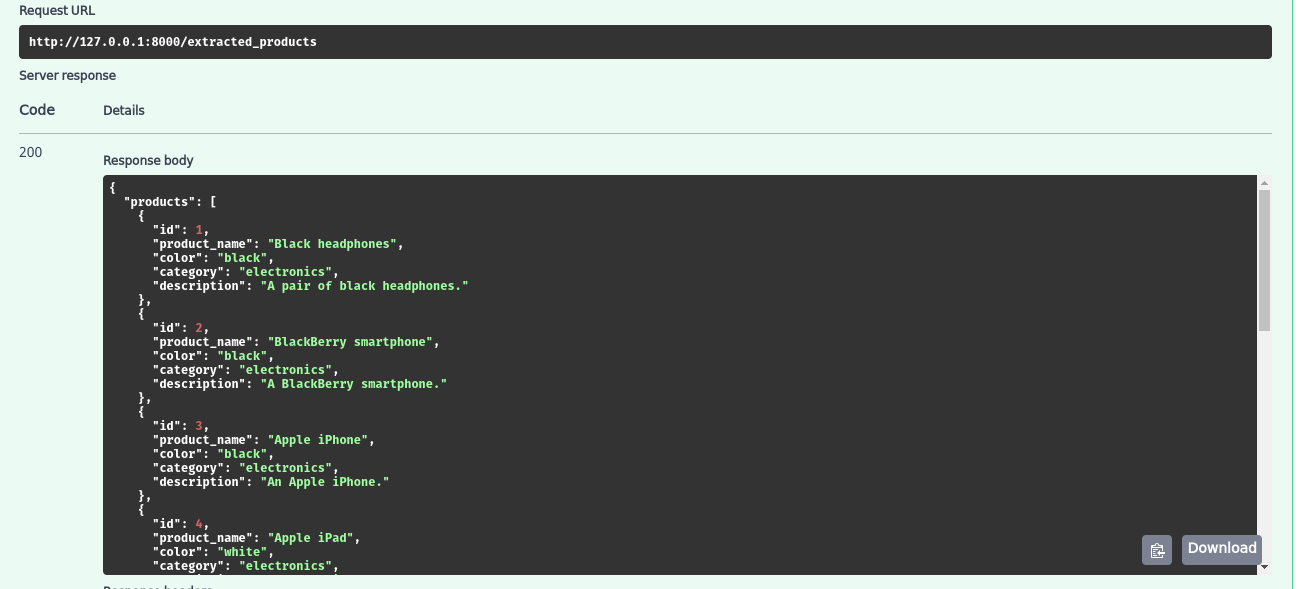

The response from the POST method will look like this.

In the image above, you can see the request URL and the response body, which is the response generated by the Gemini model.
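Besides the Swagger UI, you can call the endpoint from code. The sketch below is a hypothetical standard-library client; the function names and the generous default timeout are assumptions, since vision model calls can be slow. It sends the same JSON body that the ImageRequest model expects.

```python
# Hypothetical standard-library client for POST /extracted_products.
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # assumes the local Uvicorn server


def build_extract_request(image_url: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body matches the ImageRequest model."""
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/extracted_products",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_products(image_url: str, base_url: str = BASE_URL, timeout: float = 120) -> dict:
    """Send the request and decode the JSON response (makes a network call)."""
    request = build_extract_request(image_url, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, calling `extract_products` with the Pexels example URL from the ImageRequest model should return the same kind of JSON body you saw in the docs. Because the request is built separately, you can inspect it without making a network call.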

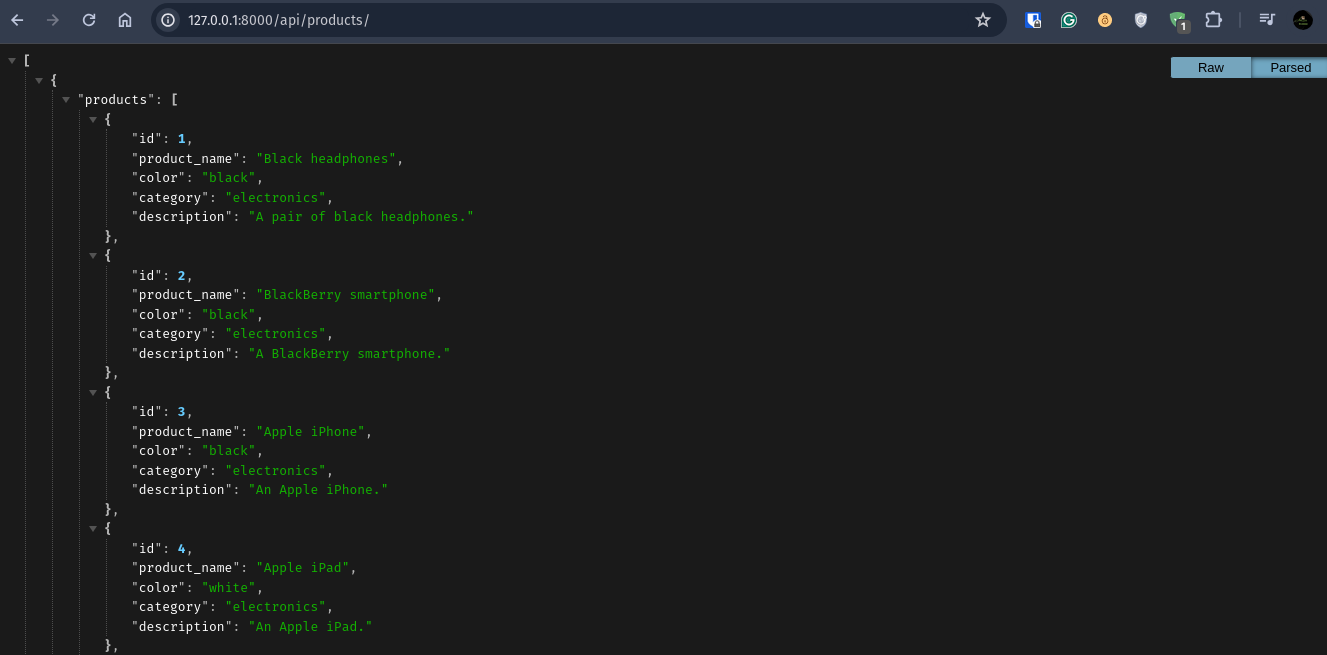

Creating a product endpoint with a GET method for fetching the data

```python
@app.get("/api/products/", response_model=list[ExtractedProductsResponse])
async def get_all_products_api():
    return all_products
```

Go to http://127.0.0.1:8000/api/products/ to see all the products.

In the code above, we created an endpoint that returns the extracted data stored in the all_products list. Others can use this JSON data for their own products, such as e-commerce sites.

All the code used in this article is available in the GitHub repository Gemini-Product-Application.
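Clients consuming this endpoint receive a list of ExtractedProductsResponse objects, each with its own products list. As a hypothetical example of downstream use, the sketch below fetches that JSON with the standard library and groups product names by category. The function names are illustrative, and `fetch_all` assumes the server is running locally.

```python
# Hypothetical consumer of GET /api/products/: flatten every stored
# response and group product names by category.
import json
import urllib.request
from collections import defaultdict
from typing import Dict, List


def fetch_all(base_url: str = "http://127.0.0.1:8000", timeout: float = 10) -> list:
    """Download the stored extractions (requires the server to be running)."""
    with urllib.request.urlopen(f"{base_url}/api/products/", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def group_by_category(responses: list) -> Dict[str, List[str]]:
    """Flatten each response's products into {category: [product names]}."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for response in responses:
        for product in response.get("products", []):
            grouped[product["category"]].append(product["product_name"])
    return dict(grouped)
```

A storefront could call `group_by_category(fetch_all())` to build category pages from everything the API has extracted so far.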

Conclusion

This is a simple yet systematic way to access and use the Gemini multimodal model to build a minimum viable product discovery API. You can use this technique to build a more robust product discovery system that works directly from images. This type of application has real business potential. For example, an Android application could use the camera API to take photos and the Gemini API to extract product information from them, which could then be used to buy the products directly from Amazon, Flipkart, etc.

Key Takeaways

- REST (Representational State Transfer) is an architectural style for building APIs for business applications.

- LlamaIndex has APIs to connect to different GenAI models. In this article, we learned how to use LlamaIndex with the Gemini API to extract image data using the Gemini Pro Vision model.

- Many Python frameworks, such as Flask, Django, and FastAPI, are used to build REST APIs. We learned how to use FastAPI to build a robust REST API.

- Prompt engineering helps get the expected response from the Gemini model.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.

Frequently Asked Questions

A: LlamaIndex uses OpenAI models by default, but if you want to use other models such as Cohere, Gemini, Llama 2, Ollama, or MistralAI, you can install the model-specific integration package with pip. See use cases here.

A: You can use any UI framework you want with FastAPI. One approach is to create a frontend directory and a backend directory for the API application in the project root, then connect your frontend UI to the backend. See the Full Stack application here.

A: The responses from the model are JSON, so a document database such as MongoDB is a good fit for storing the responses and retrieving the data for the application. See MongoDB with FastAPI here.