Introduction

Imagine you are working on a challenging project, such as simulating real-world physical phenomena or building a neural network to forecast weather patterns. Behind the scenes, these computations are powered by tensors: mathematical objects that organize multi-dimensional data so it can be processed efficiently. This article aims to give you a thorough understanding of tensors, their properties, and their applications. Whether you are a researcher, a professional, or a student, a solid grasp of tensors will help you work with complex data and advanced computational models.

Overview

- Define what a tensor is and understand its various forms and dimensions.

- Recognize the properties and operations associated with tensors.

- Apply tensor concepts in different fields such as physics and machine learning.

- Perform basic tensor operations and transformations using Python.

- Understand the practical applications of tensors in neural networks.


What is a Tensor?

Mathematically, tensors are objects that generalize scalars, vectors, and matrices to higher dimensions. They are central to physics, engineering, and computer science, and in particular to machine learning and deep learning.

Put simply, a tensor is an array of numbers with any number of dimensions. The number of dimensions is called the tensor's rank (or order):

- Scalar: A single number (rank 0 tensor).

- Vector: A one-dimensional array of numbers (rank 1 tensor).

- Matrix: A two-dimensional array of numbers (rank 2 tensor).

- Higher-rank tensors: Arrays with three or more dimensions (rank 3 or higher).
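This hierarchy maps directly onto NumPy arrays, one common way to work with tensors in Python. The sketch below assumes NumPy is installed; the variable names and values are illustrative only. The `ndim` attribute reports the rank:

```python
import numpy as np

# One example of each rank; ndim reports the number of dimensions (the rank)
scalar = np.array(37.0)                # rank 0: a single number
vector = np.array([3.0, 4.0, 5.0])     # rank 1: one index
matrix = np.array([[1, 2], [3, 4]])    # rank 2: row and column indices
cube = np.zeros((2, 3, 4))             # rank 3: three indices

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("rank-3", cube)]:
    print(f"{name}: rank={t.ndim}, shape={t.shape}")
```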

Mathematically, a tensor can be represented as follows:

- A scalar $s$ has no indices and is written simply as $s$.

- A vector $v$ is written as $v_i$, where $i$ is an index.

- A matrix $M$ is written as $M_{ij}$, where $i$ and $j$ are indices.

- A higher-rank tensor $T$ is written as $T_{ijk\ldots}$, where $i, j, k, \ldots$ are indices.
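In code, each index in this notation becomes one position in an array subscript, so the element $T_{ijk}$ is `T[i, j, k]`. A small NumPy sketch (assuming NumPy; the tensor `T` is filled with the values 0 to 23 purely for illustration) shows how fixing indices lowers the rank:

```python
import numpy as np

# A rank-3 tensor T with shape (2, 3, 4); entry T_{ijk} is stored at T[i, j, k]
T = np.arange(24).reshape(2, 3, 4)

v = T[0, 0, :]   # fixing i and j leaves a vector v_k with one free index
M = T[1, :, :]   # fixing only i leaves a matrix M_{jk}
s = T[1, 2, 3]   # fixing all three indices gives a scalar

print(v)         # [0 1 2 3]
print(M.shape)   # (3, 4)
print(s)         # 23
```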

Properties of Tensors

Tensors have several properties that make them versatile and powerful tools in various fields:

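- Dimension (axis): Each index of a tensor runs along one dimension, also called an axis.

- Rank (order): The number of dimensions, or equivalently the number of indices needed to pick out a single element. This differs from the rank of a matrix in linear algebra, which counts linearly independent rows or columns.

- Shape: The size of each dimension. For example, a tensor with shape (3, 4, 5) has three dimensions of sizes 3, 4, and 5, and therefore 3 × 4 × 5 = 60 elements.

- Data type: All elements of a tensor share one data type, such as integers or floating-point numbers.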
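These properties can be inspected directly on a NumPy array. The following is a minimal sketch assuming NumPy; the (3, 4, 5) shape matches the example above, and the choice of `float32` is arbitrary:

```python
import numpy as np

# A tensor of shape (3, 4, 5) filled with zeros, stored as 32-bit floats
t = np.zeros((3, 4, 5), dtype=np.float32)

print(t.ndim)    # 3 -> rank (number of dimensions)
print(t.shape)   # (3, 4, 5) -> size of each dimension
print(t.size)    # 60 -> total number of elements, 3 * 4 * 5
print(t.dtype)   # float32 -> data type shared by every element

# Converting the data type creates a new tensor with the same shape
t_int = t.astype(np.int64)
print(t_int.dtype)  # int64
```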

Tensors in Mathematics

In mathematics, tensors generalize concepts like scalars, vectors, and matrices to more complex structures. They are essential in various fields, from linear algebra to differential geometry.

Example of Scalars and Vectors

- Scalar: A single number. For example, the temperature at a point in space can be represented as a scalar value, such as $s = 37$ degrees Celsius.

- Vector: A one-dimensional array of numbers that has both a magnitude and a direction. For example, the vector $v = [3, 4, 5]$ can describe the velocity of a moving object, where each element is the velocity component along one coordinate axis.
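The "magnitude and direction" of the example velocity vector can be made concrete with a short NumPy sketch (assuming NumPy is available): the magnitude is the Euclidean norm, and dividing the vector by it yields a unit vector that gives the direction.

```python
import numpy as np

# Scalar: one number, e.g. a temperature in degrees Celsius
s = 37.0

# Velocity vector with one component per coordinate axis (x, y, z)
v = np.array([3.0, 4.0, 5.0])

# Magnitude (speed) is the Euclidean norm: sqrt(3^2 + 4^2 + 5^2) = sqrt(50)
speed = np.linalg.norm(v)

# Direction is the unit vector pointing the same way as v
direction = v / speed

print(round(speed, 3))                       # 7.071
print(round(np.linalg.norm(direction), 3))   # 1.0
```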

Example of Tensors in Linear Algebra

Consider a matrix $M$, a rank-2 tensor whose entries $M_{ij}$ are indexed by a row $i$ and a column $j$. A matrix like this is commonly used to represent transformations such as rotating or scaling vectors in a plane.

Multi-dimensional data calls for higher-rank tensors. An image with three color channels, for example, is a rank-3 tensor whose dimensions correspond to height, width, and color channels.

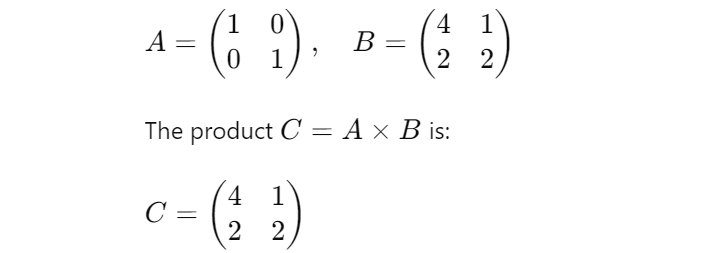
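As an illustration, here is a sketch assuming NumPy, where the 90-degree angle, the scale factors, and the 4×6 image size are arbitrary choices. A 2×2 matrix can rotate or scale a vector in the plane, while a color image needs a third dimension for its channels:

```python
import numpy as np

# A 2x2 rotation matrix M that rotates vectors in the plane by 90 degrees
theta = np.pi / 2
M = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

# A 2x2 scaling matrix that doubles x and halves y
S = np.diag([2.0, 0.5])

v = np.array([1.0, 0.0])
print(np.round(M @ v, 6))   # rotated: [0. 1.]
print(S @ v)                # scaled:  [2. 0.]

# A color image is a rank-3 tensor: (height, width, channels)
image = np.zeros((4, 6, 3))
print(image.ndim, image.shape)   # 3 (4, 6, 3)
```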

Tensor Contraction Example

Tensor contraction generalizes matrix multiplication. For example, multiplying two matrices $A$ and $B$ gives a matrix $C$ with elements

$$C_{ik} = \sum_{j} A_{ij} B_{jk}$$

Here, the index $j$ shared by $A$ and $B$ is summed over to produce each element of $C$. The same idea extends to higher-rank tensors: summing over a pair of matching indices contracts them, enabling complex transformations and operations in multi-dimensional spaces.
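NumPy's `einsum` expresses contractions in exactly this index notation. The sketch below assumes NumPy; the random shapes are arbitrary. It checks that the matrix-multiplication contraction matches `A @ B`, then contracts a rank-3 tensor with a vector and takes a trace:

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.random((2, 3))
B = rng.random((3, 4))

# Matrix multiplication as a contraction: C_ik = sum_j A_ij * B_jk
C = np.einsum("ij,jk->ik", A, B)

# The same idea for higher-rank tensors: contract the shared index j
# of a rank-3 tensor T (i, j, k) with a vector w (j), leaving a matrix (i, k)
T = rng.random((2, 3, 5))
w = rng.random(3)
R = np.einsum("ijk,j->ik", T, w)
print(C.shape, R.shape)   # (2, 4) (2, 5)

# Contracting two indices of one tensor: the trace sums M_ii over i
M = np.arange(9).reshape(3, 3)
print(np.einsum("ii->", M))   # 12  (0 + 4 + 8)
```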

Tensors in Computer Science and Machine Learning

Tensors are crucial for organizing and analyzing multi-dimensional data in computer science and machine learning, especially in deep learning frameworks like PyTorch and TensorFlow.

Data Representation

Tensors are used to represent various forms of data:

- Scalars: Represented as rank-0 tensors. For instance, a single numerical value, such as a learning rate in a machine learning algorithm.

- Vectors: Represented as rank-1 tensors. For example, a list of features for a single data point, such as the pixel intensities of a flattened grayscale image.

- Matrices: Represented as rank-2 tensors. They are frequently used to hold datasets in which each row is a data sample and each column is a feature.

- Higher-rank tensors: Used for more complex data. For instance, a rank-3 tensor with dimensions (height, width, channels) can represent a color image.
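The following NumPy sketch (assuming NumPy; the 4×4 image and the 5-sample dataset are made-up placeholders) shows how flattening turns an image into a feature vector and how a dataset matrix follows the row-per-sample, column-per-feature convention:

```python
import numpy as np

# Rank 1: a 4x4 grayscale image flattened into a feature vector of 16 intensities
gray = np.arange(16, dtype=np.float32).reshape(4, 4)
features = gray.reshape(-1)
print(features.shape)          # (16,)

# Rank 2: a dataset of 5 samples (rows) x 16 features (columns)
dataset = np.stack([features + k for k in range(5)])
print(dataset.shape)           # (5, 16)
print(dataset[2].shape)        # one sample (a row): (16,)
print(dataset[:, 0])           # one feature across all samples: [0. 1. 2. 3. 4.]

# Rank 3: a color image stored as (height, width, channels)
color = np.zeros((4, 4, 3), dtype=np.uint8)
print(color.ndim)              # 3
```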

Tensors in Deep Learning

In deep learning, tensors are used to represent:

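- Input Data: Raw data fed into the neural network. For instance, a batch of images can be represented as a 4-dimensional tensor with shape (batch size, height, width, channels). This channels-last layout is TensorFlow's default; PyTorch instead uses (batch size, channels, height, width).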

- Weights and Biases: Parameters of the neural network that are learned during training. These are also represented as tensors of appropriate shapes.

- Intermediate Activations: Outputs of each layer in the neural network, which are also tensors.
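A minimal NumPy sketch of one fully connected layer shows how input data, weights, biases, and activations are all tensors whose shapes must line up. The layer sizes are illustrative assumptions, and frameworks such as PyTorch manage these tensors for you (PyTorch's `nn.Linear`, for instance, stores its weight as (out_features, in_features) and computes `x @ W.T + b`):

```python
import numpy as np

rng = np.random.default_rng(42)
batch_size, n_in, n_hidden = 4, 8, 16

x = rng.standard_normal((batch_size, n_in))   # input batch: (4, 8)
W = rng.standard_normal((n_in, n_hidden))     # weights: (8, 16)
b = np.zeros(n_hidden)                        # biases: (16,)

# Fully connected layer: matrix multiply, add bias (broadcast over the batch),
# then apply ReLU to get the intermediate activations
h = np.maximum(x @ W + b, 0.0)
print(h.shape)   # (4, 16)
```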

Example

Consider a simple neural network with an input layer, one hidden layer, and an output layer. The data and parameters at each layer are represented as tensors:

```python
import torch

# Input data: a batch of 2 images, each with 3 color channels (RGB) of 3x3 pixels.
# The shape follows PyTorch's (batch, channels, height, width) layout: (2, 3, 3, 3).
# dtype=torch.float32 so the tensor can be multiplied with the float weights below.
input_data = torch.tensor([[[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
                            [[9, 8, 7], [6, 5, 4], [3, 2, 1]],
                            [[0, 0, 0], [1, 1, 1], [2, 2, 2]]],
                           [[[2, 3, 4], [5, 6, 7], [8, 9, 0]],
                            [[0, 9, 8], [7, 6, 5], [4, 3, 2]],
                            [[1, 2, 3], [4, 5, 6], [7, 8, 9]]]], dtype=torch.float32)

# Weights for a simplified layer: a random 3x3 matrix for demonstration
weights = torch.rand((3, 3))

# Output after applying weights (simplified): matmul multiplies the last
# dimension of input_data by weights and broadcasts over the leading (2, 3) dims
output_data = torch.matmul(input_data, weights)

print(output_data.shape)
# Output: torch.Size([2, 3, 3, 3])
```

Here, input_data is a rank-4 tensor representing a batch of two 3×3 RGB images. The weights are a rank-2 tensor, and the output data after applying the weights is another rank-4 tensor.

Tensor Operations

Common operations on tensors include:

- Element-wise operations: Operations applied independently to each element, such as addition and multiplication.

- Matrix multiplication: A special case of tensor contraction where two matrices are multiplied to produce a third matrix.

- Reshaping: Changing the shape of a tensor without altering its data.

- Transposition: Reordering (permuting) the dimensions of a tensor; for a matrix, this swaps rows and columns.
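Each of these operations is a one-liner in NumPy. The sketch below assumes NumPy; the small integer arrays are arbitrary examples:

```python
import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6]])          # shape (2, 3)
b = np.array([[10, 20, 30], [40, 50, 60]])    # shape (2, 3)

# Element-wise operations combine entries at matching positions
sum_ab = a + b      # [[11, 22, 33], [44, 55, 66]]
prod_ab = a * b     # [[10, 40, 90], [160, 250, 360]]

# Matrix multiplication (a contraction): (2, 3) @ (3, 2) -> (2, 2)
gram = a @ a.T      # [[14, 32], [32, 77]]

# Reshaping keeps the same six values in the same row-major order
reshaped = a.reshape(3, 2)   # [[1, 2], [3, 4], [5, 6]]

# Transposition reorders axes; for rank 3+, transpose takes a permutation
t = np.zeros((2, 3, 4))
print(a.T.shape, t.transpose(2, 0, 1).shape)   # (3, 2) (4, 2, 3)
```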

Representing a 3×3 RGB Image as a Tensor

Let’s consider a practical example in machine learning. Suppose we have an image represented as a 3-dimensional tensor with shape (height, width, channels). For a color image, the channels are usually Red, Green, and Blue (RGB).

```python
import numpy as np

# Create a 3x3 RGB image tensor: (height, width, channels), values 0-255
image = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]],
                  [[255, 255, 0], [0, 255, 255], [255, 0, 255]],
                  [[128, 128, 128], [64, 64, 64], [32, 32, 32]]])

print(image.shape)
# Output: (3, 3, 3)
```

Here, image is a tensor with shape (3, 3, 3) representing a 3×3 image with 3 color channels.
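Working with such an image usually means slicing out channels and rearranging axes. This sketch assumes NumPy and rescales pixels to [0, 1], which is a common but not mandatory preprocessing choice; the channels-first reordering matches the layout PyTorch's convolutional layers expect:

```python
import numpy as np

# The same 3x3 RGB image, stored as (height, width, channels)
image = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]],
                  [[255, 255, 0], [0, 255, 255], [255, 0, 255]],
                  [[128, 128, 128], [64, 64, 64], [32, 32, 32]]], dtype=np.uint8)

# Slicing the last axis extracts one color channel as a 3x3 matrix
red = image[:, :, 0]           # [[255, 0, 0], [255, 0, 255], [128, 64, 32]]

# Scale pixel values to [0, 1] floats
scaled = image.astype(np.float32) / 255.0

# Reorder to (channels, height, width), then add a leading batch dimension of 1
chw = scaled.transpose(2, 0, 1)
batch = chw[np.newaxis, ...]
print(batch.shape)             # (1, 3, 3, 3)
```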

Implementing a Basic CNN for Image Classification

In a convolutional neural network (CNN) used for image classification, the input image is represented as a tensor and passed through several layers, each of which transforms the tensor using operations such as convolution and pooling. The final output tensor contains one score (logit) per class; applying a softmax function converts these scores into class probabilities.
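The raw scores produced by a classifier's final layer are usually turned into probabilities with the softmax function, $\mathrm{softmax}(z)_i = e^{z_i} / \sum_j e^{z_j}$. A minimal NumPy sketch (assuming NumPy; the three scores are made-up values for illustration):

```python
import numpy as np

def softmax(logits):
    """Convert a vector of class scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)   # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / exps.sum()

scores = np.array([2.0, 1.0, 0.1])     # hypothetical scores for 3 classes
probs = softmax(scores)
print(probs.round(3))        # [0.659 0.242 0.099]
print(probs.argmax())        # 0 -> the predicted class
```

Subtracting the maximum does not change the result, because softmax is invariant to adding a constant to every score, but it keeps `np.exp` from overflowing on large inputs.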

import torch

import torch.nn as nn

import torch.nn.functional as F # Importing the functional module

# Define a simple convolutional neural network

class SimpleCNN(nn.Module):

def __init__(self):

super(SimpleCNN, self).__init__()

self.conv1 = nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3)

self.pool = nn.MaxPool2d(kernel_size=2, stride=2)

self.fc1 = nn.Linear(16 * 3 * 3, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x))) # Using F.relu from the functional module

x = x.view(-1, 16 * 3 * 3)

x = self.fc1(x)

return x

# Create an instance of the network

model = SimpleCNN()

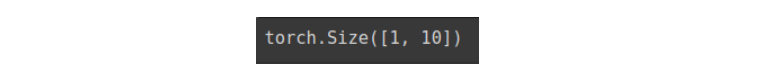

# Dummy input data (e.g., a batch of 1 grayscale image of size 8x8)

input_data = torch.randn(1, 1, 8, 8)

# Forward pass

output = model(input_data)

print(output.shape)

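In this example, input_data is a rank-4 tensor with shape (batch, channels, height, width) = (1, 1, 8, 8), representing a batch containing a single grayscale image. The convolutional, pooling, and fully connected layers transform it step by step: (1, 1, 8, 8) → (1, 16, 6, 6) after convolution → (1, 16, 3, 3) after pooling → (1, 144) after flattening → (1, 10), one score per class.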
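The `16 * 3 * 3` input size of `fc1` follows from the standard output-size formula for convolution and pooling layers (with dilation 1), $\lfloor (n + 2p - k)/s \rfloor + 1$ for input size $n$, kernel size $k$, stride $s$, and padding $p$. A small helper, hypothetically named `conv_output_size`, reproduces the numbers for this network:

```python
def conv_output_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

h = 8                                              # 8x8 input image
h = conv_output_size(h, kernel_size=3)             # conv1: 8 -> 6
h = conv_output_size(h, kernel_size=2, stride=2)   # max pool: 6 -> 3
print(h, 16 * h * h)                               # 3 144
```

Computing sizes this way before writing `nn.Linear` avoids the shape-mismatch errors that occur when the flattened size is guessed.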

Conclusion

Tensors are mathematical structures that generalize scalars, vectors, and matrices to higher dimensions. They are essential in many domains, from theoretical physics to machine learning. For anyone working in deep learning and artificial intelligence, understanding tensors is a prerequisite for using modern computational frameworks effectively in research and engineering.

Frequently Asked Questions

Q1. What is a tensor?

A. A tensor is a mathematical object that generalizes scalars, vectors, and matrices to higher dimensions.

Q2. What is the rank of a tensor?

A. The rank (or order) of a tensor is the number of dimensions it has.

Q3. How are tensors used in machine learning?

A. Tensors are used to represent data and parameters in neural networks, facilitating complex computations.

Q4. What is an example of a tensor operation?

A. One common tensor operation is matrix multiplication, where two matrices are multiplied to produce a third matrix.