Introduction

Imagine you’re in the middle of an intense conversation, and the perfect response slips your mind just when you need it most. Now, imagine if you had a tool that could adapt to every twist and turn of the discussion, offering just the right words at the right time. That’s the power of adaptive prompting, and it’s not just a dream—it’s a cutting-edge technique transforming how we interact with AI. In this article, we’ll explore how you can harness the capabilities of adaptive prompting using DSPy, diving into real-world applications like sentiment analysis. Whether you’re a data scientist looking to refine your models or just curious about the future of AI, this guide will show you why adaptive prompting is the next big thing you need to know about.

Learning Objectives

- Understand the concept of adaptive prompting and its benefits in creating more effective and context-sensitive interactions.

- Get acquainted with dynamic programming principles and how DSPy simplifies their application.

- Follow a practical guide to using DSPy to build adaptive prompting strategies.

- See adaptive prompting in action through a case study, showcasing its impact on prompt effectiveness.

This article was published as a part of the Data Science Blogathon.

Table of contents

- What is Adaptive Prompting?

- Basic Adaptive Prompting Using Language Model

- Use Cases of Adaptive Prompting

- Building Adaptive Prompting Strategies with DSPy

- Step-by-Step Guide to Building Adaptive Prompting Strategies

- Case Study: Adaptive Prompting in Sentiment Analysis

- Benefits of Using DSPy for Adaptive Prompting

- Challenges in Implementing Adaptive Prompting

- Frequently Asked Questions

What is Adaptive Prompting?

Adaptive prompting is a dynamic approach to interacting with models that involves adjusting prompts based on the responses received or the context of the interaction. Unlike traditional static prompting, where the prompt remains fixed regardless of the model’s output or the conversation’s progress, adaptive prompting evolves in real time to optimize the interaction.

In adaptive prompting, prompts are designed to be flexible and responsive. They change based on the feedback from the model or user, aiming to elicit more accurate, relevant, or detailed responses. This dynamic adjustment can enhance the effectiveness of interactions by tailoring prompts to better fit the current context or the specific needs of the task.

Benefits of Adaptive Prompting

- Enhanced Relevance: By adapting prompts based on model responses, you can increase the relevance and precision of the output.

- Improved User Engagement: Dynamic prompts can make interactions more engaging and personalized, leading to a better user experience.

- Better Handling of Ambiguity: Adaptive prompting can help clarify ambiguous responses by refining the prompts to solicit more specific information.

Basic Adaptive Prompting Using Language Model

Below is a Python code snippet demonstrating a basic adaptive prompting system using a language model. The example shows how to adjust prompts based on a model’s response:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    # GPT-3.5-turbo is served through OpenAI's hosted API and has no checkpoint
    # on the Hugging Face Hub, so a small open model (GPT-2) stands in for it here.
    model_name = "gpt2"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)

    def generate_response(prompt, max_new_tokens=50):
        # Tokenize the prompt and generate a continuation
        inputs = tokenizer(prompt, return_tensors="pt")
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            pad_token_id=tokenizer.eos_token_id,
        )
        # Decode only the newly generated tokens, not the echoed prompt
        new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
        return tokenizer.decode(new_tokens, skip_special_tokens=True)

    def adaptive_prompting(initial_prompt, model_response):
        # Adjust the prompt based on the model's response
        if "I don't know" in model_response:
            new_prompt = f"{initial_prompt} Can you provide more details?"
        else:
            new_prompt = f"{initial_prompt} That's interesting. Can you expand on that?"
        return new_prompt

    # Example interaction
    initial_prompt = "Tell me about the significance of adaptive prompting."
    response = generate_response(initial_prompt)
    print("Model Response:", response)

    # Adaptive prompting
    new_prompt = adaptive_prompting(initial_prompt, response)
    print("New Prompt:", new_prompt)
    new_response = generate_response(new_prompt)
    print("New Model Response:", new_response)

In the above code snippet, we use a language model to demonstrate how prompts can be dynamically adjusted based on the model’s responses. GPT-2 stands in for GPT-3.5-turbo, which is served through OpenAI’s hosted API and cannot be loaded with the transformers library. The code initializes the model and tokenizer, then defines a function, generate_response, that takes a prompt, generates a continuation with the model, and returns the generated text. Another function, adaptive_prompting, modifies the initial prompt depending on the model’s response. If the response contains the phrase “I don’t know,” the prompt is refined to request more details. Otherwise, the prompt is adjusted to encourage further elaboration.

For example, if the initial prompt is “Tell me about the significance of adaptive prompting,” and the model responds with an uncertain answer, the adapted prompt becomes the original prompt followed by “Can you provide more details?” The model then generates a new response to this refined prompt. The expected output is therefore the updated prompt, which aims to elicit a more informative and specific answer, followed by the model’s second response.
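The same refine-and-retry loop can be tried without downloading a model. The following is a minimal sketch under stated assumptions: stub_model is a hypothetical stand-in for a real language model call, and the list of uncertainty markers is illustrative rather than exhaustive.

```python
from typing import Callable

# Illustrative phrases treated as signs of an uncertain answer
UNCERTAIN_MARKERS = ("i don't know", "not sure", "unclear")


def refine_prompt(initial_prompt: str, response: str) -> str:
    """Return a follow-up prompt chosen from the previous response."""
    if any(marker in response.lower() for marker in UNCERTAIN_MARKERS):
        return f"{initial_prompt} Can you provide more details?"
    return f"{initial_prompt} That's interesting. Can you expand on that?"


def adaptive_loop(prompt: str, respond: Callable[[str], str],
                  max_turns: int = 3) -> list[tuple[str, str]]:
    """Query `respond` repeatedly, refining the prompt after each turn."""
    history = []
    current = prompt
    for _ in range(max_turns):
        response = respond(current)
        history.append((current, response))
        current = refine_prompt(prompt, response)
    return history


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model call."""
    if "more details" in prompt:
        return "Adaptive prompting changes the prompt after each response."
    return "I don't know."


history = adaptive_loop("Tell me about adaptive prompting.", stub_model, max_turns=2)
for asked, answered in history:
    print(f"Prompt: {asked}\nResponse: {answered}\n")
```

Passing the model as a callable keeps the adaptation logic separate from the model call, so the stub can later be swapped for a real model function without touching the loop.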

Use Cases of Adaptive Prompting

Adaptive prompting can be particularly beneficial in various scenarios, including:

- Dialogue Systems: Adaptive prompting in dialogue systems helps tailor the conversation flow based on user responses. This can be achieved using dynamic programming to manage state transitions and prompt adjustments.

- Question-Answering: Adaptive prompting can refine queries based on initial responses to obtain more detailed answers.

- Interactive Storytelling: Adaptive prompting adjusts the narrative based on user choices, enhancing the interactive storytelling experience.

- Data Collection and Annotation: Adaptive prompting can refine data collection queries based on initial responses to gather more precise information.

By leveraging adaptive prompting, applications can become more effective at engaging users, handling complex interactions, and providing valuable insights. Adaptive prompting’s flexibility and responsiveness make it a powerful tool for improving the quality and relevance of model interactions across various domains.
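To make one of these use cases concrete, here is a small sketch of interactive storytelling in which the next prompt depends on the user’s choice. The STORY graph, scene names, and helper functions are hypothetical and exist only for illustration.

```python
# Each scene maps to its text and the choices that lead to other scenes
STORY = {
    "start": ("You reach a fork in the road.", {"left": "forest", "right": "village"}),
    "forest": ("The forest is quiet and dark.", {}),
    "village": ("The village market is busy.", {}),
}


def next_scene(scene: str, choice: str) -> str:
    """Return the scene reached by `choice`, or stay put on an unknown choice."""
    _, branches = STORY[scene]
    return branches.get(choice.strip().lower(), scene)


def prompt_for(scene: str) -> str:
    """Build the prompt for a scene from its text and available choices."""
    text, branches = STORY[scene]
    if not branches:
        return text
    return f"{text} Choose: {', '.join(branches)}."


scene = next_scene("start", "Left")
print(prompt_for("start"))
print(prompt_for(scene))
```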

Building Adaptive Prompting Strategies with DSPy

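Creating adaptive prompting strategies involves applying dynamic programming (DP) principles to adjust prompts based on model interactions and feedback. The examples in this article manage states, actions, and transitions through State, Action, Transition, and DPAlgorithm classes imported from dspy. These names are not part of the documented API of the Stanford DSPy package, so treat them as an assumed interface and confirm that your installed dspy module provides them. Below is a step-by-step guide on setting up an adaptive prompting strategy with this interface.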

Step-by-Step Guide to Building Adaptive Prompting Strategies

Building an adaptive prompting strategy breaks down into four steps:

- Define the Problem Scope: Determine the specific adaptive prompting scenario you are addressing. For example, you might be designing a system that adjusts prompts in a dialogue system based on user responses.

- Identify States and Actions: Define the states representing different scenarios or conditions in your prompting system. Identify actions that modify these states based on user feedback or model responses.

- Create Recurrence Relations: Establish recurrence relations that dictate how the states transition from one to another based on the actions taken. These relations guide how prompts are adjusted adaptively.

- Implement the Strategy Using DSPy: Utilize the DSPy library to model the defined states, actions, and recurrence relations and implement the adaptive prompting strategy.
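The four steps above can be sketched in plain Python before any library is involved. This is an illustrative sketch only: PromptState, is_unclear, and next_state are hypothetical names, and the prompt texts and the three-word threshold are assumptions.

```python
from enum import Enum


class PromptState(Enum):
    # Step 2: states, each carrying the prompt text shown in that state
    INITIAL = "What do you think about our new product?"
    CLARIFICATION = "Can you clarify your response?"
    DONE = "Thank you for your feedback."


def is_unclear(feedback: str) -> bool:
    """Hypothetical heuristic: very short or explicitly confused feedback."""
    text = feedback.lower()
    return len(text.split()) < 3 or "unclear" in text or "don't understand" in text


def next_state(state: PromptState, feedback: str) -> PromptState:
    """Step 3: the transition rule, next = f(current, feedback)."""
    if state is PromptState.DONE:
        return state
    return PromptState.CLARIFICATION if is_unclear(feedback) else PromptState.DONE


# Step 4: run the rule over one conversation
state = PromptState.INITIAL
for feedback in ["Hmm.", "I like the battery life a lot."]:
    state = next_state(state, feedback)
    print(state.name, "->", state.value)
```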

Defining States and Actions

In adaptive prompting, states typically include the current prompt and user feedback, while actions involve modifying the prompt based on the feedback.

Example:

- States:

  - State_Prompt: Represents the current prompt.

  - State_Feedback: Represents user feedback or model responses.

- Actions:

  - Action_Adjust_Prompt: Adjusts the prompt based on feedback.

Code Example: Defining States and Actions

    # Assumption: the installed dspy module provides State and Action; the
    # Stanford DSPy package does not document these names.
    from dspy import State, Action

    class AdaptivePromptingDP:
        def __init__(self):
            # Define states
            self.states = {
                'initial': State('initial_prompt'),
                'feedback': State('feedback')
            }
            # Define actions
            self.actions = {
                'adjust_prompt': Action(self.adjust_prompt)
            }

        def adjust_prompt(self, state, feedback):
            # Logic to adjust the prompt based on feedback
            if "unclear" in feedback:
                return "Can you clarify your response?"
            else:
                return "Thank you for your feedback."

    # Initialize adaptive prompting
    adaptive_dp = AdaptivePromptingDP()

Creating a Recurrence Relation

Recurrence relations guide how states transition based on actions. Adaptive prompting involves defining how prompts change based on user feedback.

Example: The recurrence relation might specify that if the user provides unclear feedback, the system should transition to a state where it asks for clarification.
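The recurrence described above can be written as a lookup table plus a single step function, so that the state after each feedback item depends only on the previous state and that item. This is a library-free sketch; the TRANSITIONS table and the keyword-based classify helper are assumptions made for illustration.

```python
from functools import reduce

# Transition table: (current state, feedback class) -> next state
TRANSITIONS = {
    ("initial", "unclear"): "clarification",
    ("initial", "clear"): "initial",
    ("clarification", "unclear"): "clarification",
    ("clarification", "clear"): "initial",
}


def classify(feedback: str) -> str:
    """Hypothetical feedback classifier based on a keyword check."""
    return "unclear" if "unclear" in feedback.lower() else "clear"


def step(state: str, feedback: str) -> str:
    """One application of the recurrence s[t+1] = T[s[t], classify(f[t])]."""
    return TRANSITIONS[(state, classify(feedback))]


feedback_sequence = ["That is unclear.", "Still unclear to me.", "Now I get it."]
final_state = reduce(step, feedback_sequence, "initial")
print(final_state)
```

Keeping the transitions in a table makes it easy to review every (state, feedback class) pair and spot a missing case.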

Code Example: Creating a Recurrence Relation

    # Assumption: the installed dspy module provides these classes; the
    # Stanford DSPy package does not document them.
    from dspy import State, Action, Transition

    class AdaptivePromptingDP:
        def __init__(self):
            # Define states
            self.states = {
                'initial': State('initial_prompt'),
                'clarification': State('clarification_prompt')
            }
            # Define actions
            self.actions = {
                'adjust_prompt': Action(self.adjust_prompt)
            }
            # Define transitions
            self.transitions = [
                Transition(self.states['initial'], self.states['clarification'],
                           self.actions['adjust_prompt'])
            ]

        def adjust_prompt(self, state, feedback):
            # Move to the clarification state when the feedback is unclear
            if "unclear" in feedback:
                return self.states['clarification']
            else:
                return self.states['initial']

Implementing with DSPy

The final step is to implement the defined strategy using DSPy. This involves setting up the states, actions, and transitions within DSPy’s framework and running the algorithm to adjust prompts adaptively.

Code Example: Full Implementation

    # Assumption: the installed dspy module provides these classes; the
    # Stanford DSPy package does not document them.
    from dspy import State, Action, Transition, DPAlgorithm

    class AdaptivePromptingDP(DPAlgorithm):
        def __init__(self):
            super().__init__()
            # Define states
            self.states = {
                'initial': State('initial_prompt'),
                'clarification': State('clarification_prompt')
            }
            # Define actions
            self.actions = {
                'adjust_prompt': Action(self.adjust_prompt)
            }
            # Define transitions
            self.transitions = [
                Transition(self.states['initial'], self.states['clarification'],
                           self.actions['adjust_prompt'])
            ]

        def adjust_prompt(self, state, feedback):
            # Treat explicit confusion as unclear feedback
            text = feedback.lower()
            if "unclear" in text or "don't understand" in text:
                return self.states['clarification']
            else:
                return self.states['initial']

        def compute(self, initial_state, feedback):
            # Compute the adapted prompt state based on feedback
            return self.run(initial_state, feedback)

    # Example usage
    adaptive_dp = AdaptivePromptingDP()
    initial_state = adaptive_dp.states['initial']
    feedback = "I don't understand this."
    adapted_prompt = adaptive_dp.compute(initial_state, feedback)
    print("Adapted Prompt:", adapted_prompt)

Code Explanation:

- State and Action Definitions: States represent the current prompt and any changes. Actions define how to adjust the prompt based on feedback.

- Transitions: Transitions dictate how the state changes based on the actions.

- compute Method: This method processes feedback and computes the adapted prompt state using the DP algorithm defined with dspy.

Expected Output:

Given the initial state and feedback like “I don’t understand this,” the system transitions to the ‘clarification_prompt’ state, provided that run applies the transition leaving the initial state. The script prints that state; turning it into a message such as “Can you clarify your response?” requires a separate lookup from state to prompt text.
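For readers who want to run this flow end to end, the sketch below replaces the assumed dspy classes with small local stand-ins. Everything here is hypothetical: State, Transition, and DPAlgorithm are defined in the snippet itself, run simply applies the first transition leaving the current state, and the prompts dictionary supplies the state-to-text lookup.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class State:
    name: str


@dataclass(frozen=True)
class Transition:
    source: State
    target: State
    action: Callable[[State, str], State]


class DPAlgorithm:
    """Hypothetical base class: applies the first transition leaving a state."""

    transitions: list

    def run(self, state: State, feedback: str) -> State:
        for transition in self.transitions:
            if transition.source == state:
                return transition.action(state, feedback)
        return state  # no outgoing transition: stay in the current state


class AdaptivePrompting(DPAlgorithm):
    def __init__(self):
        self.initial = State("initial_prompt")
        self.clarification = State("clarification_prompt")
        self.transitions = [
            Transition(self.initial, self.clarification, self.adjust_prompt)
        ]
        # Prompt text for each state, so callers can show a message
        self.prompts = {
            self.initial: "What do you think about our new product?",
            self.clarification: "Can you clarify your response?",
        }

    def adjust_prompt(self, state: State, feedback: str) -> State:
        text = feedback.lower()
        unclear = "unclear" in text or "don't understand" in text
        return self.clarification if unclear else self.initial


machine = AdaptivePrompting()
next_state = machine.run(machine.initial, "I don't understand this.")
print(next_state.name, "->", machine.prompts[next_state])
```

Frozen dataclasses are hashable, which is what allows State objects to serve as dictionary keys in the prompts lookup.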

Case Study: Adaptive Prompting in Sentiment Analysis

Understanding the nuances of user opinions can be challenging in sentiment analysis, especially when dealing with ambiguous or vague feedback. Adaptive prompting can significantly enhance this process by dynamically adjusting the prompts based on user responses to elicit more detailed and precise opinions.

Scenario

Imagine a sentiment analysis system designed to gauge user opinions about a new product. Initially, the system asks a general question like, “What do you think about our new product?” If the user’s response is unclear or lacks detail, the system should adaptively refine the prompt to gather more specific feedback, such as “Can you provide more details about what you liked or disliked?”

This adaptive approach ensures that the feedback collected is more informative and actionable, improving sentiment analysis’s overall accuracy and usefulness.

Implementation

To implement adaptive prompting in sentiment analysis using DSPy, follow these steps:

- Define States and Actions:

  - States: Represent different stages of the prompting process, such as initial prompt, clarification needed, and detailed feedback.

  - Actions: Define how to adjust the prompt based on the feedback received.

- Create Recurrence Relations: Set up transitions between states based on user responses to guide the prompting process adaptively.

- Implement with DSPy: Use DSPy to define the states, actions, and transitions, and then run the dynamic programming algorithm to adaptively adjust the prompts.

Code Example: Setting Up the Dynamic Program

The steps below set up the dynamic program.

Step 1: Importing Required Libraries

The first step involves importing the necessary libraries. The dspy import supplies the classes for states, actions, and transitions, while matplotlib.pyplot is used to visualize the results.

    # Assumption: the installed dspy module provides these classes; the
    # Stanford DSPy package does not document them.
    from dspy import State, Action, Transition, DPAlgorithm
    import matplotlib.pyplot as plt

Step 2: Defining the SentimentAnalysisPrompting Class

The SentimentAnalysisPrompting class inherits from DPAlgorithm, setting up the dynamic programming structure. It initializes states, actions, and transitions, which represent different stages of the adaptive prompting process.

    class SentimentAnalysisPrompting(DPAlgorithm):
        def __init__(self):
            super().__init__()
            # Define states
            self.states = {
                'initial': State('initial_prompt'),
                'clarification': State('clarification_prompt'),
                'detailed_feedback': State('detailed_feedback_prompt')
            }
            # Define actions
            self.actions = {
                'request_clarification': Action(self.request_clarification),
                'request_detailed_feedback': Action(self.request_detailed_feedback)
            }
            # Define transitions
            self.transitions = [
                Transition(self.states['initial'], self.states['clarification'],
                           self.actions['request_clarification']),
                Transition(self.states['clarification'], self.states['detailed_feedback'],
                           self.actions['request_detailed_feedback'])
            ]

Step 3: Request Clarification Action

This method defines what happens when feedback is unclear or too brief. If the feedback is vague, the system transitions to a clarification prompt, asking for more information.

        # Method of SentimentAnalysisPrompting (indented to sit inside the class)
        def request_clarification(self, state, feedback):
            # Transition to clarification prompt if feedback is unclear or short
            if "not clear" in feedback or len(feedback.split()) < 5:
                return self.states['clarification']
            return self.states['initial']

Step 4: Request Detailed Feedback Action

In this method, if the feedback suggests the need for more details, the system transitions to a prompt specifically asking for detailed feedback.

        # Method of SentimentAnalysisPrompting (indented to sit inside the class)
        def request_detailed_feedback(self, state, feedback):
            # Transition to detailed feedback prompt if feedback indicates a need
            # for more details
            if "details" in feedback:
                return self.states['detailed_feedback']
            return self.states['initial']

Step 5: Compute Method

The compute method is responsible for running the dynamic programming algorithm. It determines the next state and prompt based on the initial state and the given feedback.

        # Method of SentimentAnalysisPrompting (indented to sit inside the class)
        def compute(self, initial_state, feedback):
            # Compute the next prompt state based on the current state and feedback
            return self.run(initial_state, feedback)

Step 6: Initializing and Processing Feedback

Here, the SentimentAnalysisPrompting class is initialized, and a set of sample feedback is processed. The system computes the adapted prompt based on each feedback entry.

    # Initialize sentiment analysis prompting
    sa_prompting = SentimentAnalysisPrompting()
    initial_state = sa_prompting.states['initial']

    # Sample feedback for testing
    feedbacks = [
        "I don't like it.",
        "The product is okay but not great.",
        "Can you tell me more about the features?",
        "I need more information to provide a detailed review."
    ]

    # Process each feedback item and collect results
    results = []
    for feedback in feedbacks:
        adapted_prompt = sa_prompting.compute(initial_state, feedback)
        results.append({
            'Feedback': feedback,
            'Adapted Prompt': adapted_prompt.name
        })

Step 7: Visualizing the Results

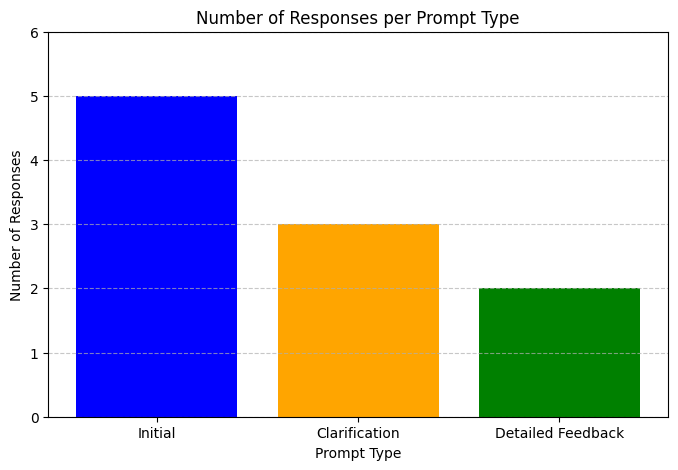

Finally, the results are visualized using a bar chart. The chart displays the number of responses categorized by the type of prompt: Initial, Clarification, and Detailed Feedback.

    # Print results
    for result in results:
        print(f"Feedback: {result['Feedback']}\nAdapted Prompt: {result['Adapted Prompt']}\n")

    # Map each state name stored in results to its chart label
    prompt_labels = {
        'initial_prompt': 'Initial',
        'clarification_prompt': 'Clarification',
        'detailed_feedback_prompt': 'Detailed Feedback'
    }
    prompt_names = list(prompt_labels.values())

    # Count of responses at each prompt stage
    counts = [
        sum(1 for r in results if prompt_labels.get(r['Adapted Prompt']) == name)
        for name in prompt_names
    ]

    # Plotting
    plt.bar(prompt_names, counts, color=['blue', 'orange', 'green'])
    plt.xlabel('Prompt Type')
    plt.ylabel('Number of Responses')
    plt.title('Number of Responses per Prompt Type')
    plt.show()

Expected Output

- Feedback and Adapted Prompt: The results for each feedback item showing which prompt type was selected.

- Visualization: A bar chart (shown under Step 7) illustrating how many responses fell into each prompt category.

The bar chart shows that most responses stay at the ‘Initial’ prompt, fewer trigger a clarification prompt, and requests for ‘Detailed Feedback’ are the least common. With only four hand-written feedback samples, this distribution illustrates how the pipeline works rather than showing that any prompt type is more effective. A larger set of real user feedback is needed before tuning a prompting strategy on counts like these.
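Because the counts depend on how run resolves transitions, it can help to reproduce them without the library or the plot. The sketch below is an assumption-laden approximation: next_prompt is a hypothetical function that mirrors the two actions above, every feedback item starts from the initial state, and collections.Counter replaces the bar chart.

```python
from collections import Counter

# State names used in the case study, mapped to their chart labels
LABELS = {
    "initial_prompt": "Initial",
    "clarification_prompt": "Clarification",
    "detailed_feedback_prompt": "Detailed Feedback",
}


def next_prompt(state: str, feedback: str) -> str:
    """Hypothetical transition rule mirroring the two actions above."""
    text = feedback.lower()
    if state == "initial_prompt":
        unclear = "not clear" in text or len(text.split()) < 5
        return "clarification_prompt" if unclear else "initial_prompt"
    if state == "clarification_prompt":
        return "detailed_feedback_prompt" if "details" in text else "initial_prompt"
    return state


feedbacks = [
    "I don't like it.",
    "The product is okay but not great.",
    "Can you tell me more about the features?",
    "I need more information to provide a detailed review.",
]

# Tally which prompt type each feedback item leads to
counts = Counter(LABELS[next_prompt("initial_prompt", f)] for f in feedbacks)
for label in LABELS.values():
    print(f"{label}: {counts[label]}")
```

Under these assumptions only the four-word reply falls below the length threshold, so a single sample changes the tally between categories.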

Benefits of Using DSPy for Adaptive Prompting

DSPy offers several compelling benefits for implementing adaptive prompting strategies. By leveraging DSPy’s capabilities, you can significantly enhance your adaptive prompting solutions’ efficiency, flexibility, and scalability.

- Efficiency: DSPy streamlines the development of adaptive strategies by providing high-level abstractions. This simplifies the process, reduces implementation time, and minimizes the risk of errors, allowing you to focus more on strategy design rather than low-level details.

- Flexibility: With DSPy, you can quickly experiment with and adjust different prompting strategies. Its flexible framework supports rapid iteration, enabling you to refine prompts based on real-time feedback and evolving requirements.

- Scalability: DSPy’s modular design is built to handle large-scale and complex NLP tasks. As your data and complexity grow, DSPy scales with your needs, ensuring that adaptive prompting remains effective and robust across various scenarios.
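Rapid experimentation of the kind described above does not depend on any particular library. As a rough sketch, two hypothetical clarification strategies (by_length and by_keyword, both invented for this example) can be compared on the same sample feedback by measuring how often each would ask a follow-up question.

```python
from typing import Callable

Strategy = Callable[[str], bool]  # returns True when clarification is needed


def by_length(feedback: str) -> bool:
    """Ask for clarification when the reply has fewer than five words."""
    return len(feedback.split()) < 5


def by_keyword(feedback: str) -> bool:
    """Ask for clarification when the reply contains a hedging phrase."""
    markers = ("unclear", "not sure", "okay", "more information")
    return any(marker in feedback.lower() for marker in markers)


def clarification_rate(strategy: Strategy, samples: list[str]) -> float:
    """Fraction of samples for which the strategy would ask a follow-up."""
    return sum(strategy(s) for s in samples) / len(samples)


samples = [
    "I don't like it.",
    "The product is okay but not great.",
    "Can you tell me more about the features?",
    "I need more information to provide a detailed review.",
]
for name, strategy in {"length": by_length, "keyword": by_keyword}.items():
    print(name, clarification_rate(strategy, samples))
```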

Challenges in Implementing Adaptive Prompting

Despite its advantages, using DSPy for adaptive prompting comes with its challenges. It’s important to be aware of these potential issues and address them to optimize your implementation.

- Complexity Management: Managing numerous states and transitions can be challenging due to their increased complexity. Effective complexity management requires keeping your state model simple and ensuring thorough documentation to facilitate debugging and maintenance.

- Performance Overhead: Dynamic programming introduces computational overhead that may impact performance. To mitigate this, optimize your state and transition definitions and conduct performance profiling to identify and resolve bottlenecks.

- User Experience: Overly adaptive prompting can negatively affect user experience if prompts become too frequent or intrusive. Striking a balance between adaptiveness and stability is crucial to ensure that prompts are helpful and do not disrupt the user experience.
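One simple way to balance adaptiveness against intrusiveness is to cap the number of clarification prompts per session. The sketch below is illustrative: ClarificationBudget is a hypothetical class, and the five-word threshold for vague feedback is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ClarificationBudget:
    """Caps how many clarification prompts one session may receive."""
    max_clarifications: int = 2
    used: int = 0

    def next_prompt(self, feedback: str) -> str:
        unclear = len(feedback.split()) < 5  # hypothetical heuristic
        if unclear and self.used < self.max_clarifications:
            self.used += 1
            return "Can you provide more details about what you liked or disliked?"
        # Either the feedback is usable or the budget is spent: stop asking
        return "Thank you for your feedback."


session = ClarificationBudget(max_clarifications=1)
print(session.next_prompt("It's fine."))  # first vague reply: ask once
print(session.next_prompt("Not sure."))   # budget spent: accept and move on
```

Tracking the budget per session keeps one persistent user from being asked the same follow-up repeatedly, while still allowing at least one clarification attempt.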

Conclusion

We have explored the integration of adaptive prompting with the DSPy library to enhance NLP applications. We discussed how adaptive prompting improves interactions by dynamically adjusting prompts based on user feedback or model outputs. By leveraging DSPy’s dynamic programming framework, we demonstrated how to implement these strategies efficiently and flexibly.

Practical examples, such as sentiment analysis, highlighted how DSPy simplifies complex state management and transitions. While DSPy offers benefits like increased efficiency and scalability, it presents challenges like complexity management and potential performance overhead. Embracing DSPy in your projects can lead to more effective and responsive NLP systems.

Key Takeaways

- Adaptive prompting dynamically adjusts prompts based on user feedback to improve interactions.

- DSPy simplifies the implementation of adaptive prompting with dynamic programming abstractions.

- Benefits of using DSPy include efficient development, flexibility in experimentation, and scalability.

- Challenges include managing complexity and addressing potential performance overhead.

Frequently Asked Questions

Q1. What is adaptive prompting, and why is it important?

A. Adaptive prompting involves dynamically adjusting prompts based on feedback or model outputs to improve user interactions and accuracy. It is important because it allows for more personalized and effective responses, enhancing user engagement and satisfaction in NLP applications.

Q2. How does DSPy help with adaptive prompting?

A. DSPy provides a dynamic programming framework that simplifies the management of states, actions, and transitions in adaptive prompting. It offers high-level abstractions to streamline the implementation process, making experimenting with and refining prompting strategies easier.

Q3. What are the main benefits of using DSPy for adaptive prompting?

A. The main benefits include increased development efficiency, flexibility for rapid experimentation with different strategies, and scalability to handle complex NLP tasks. DSPy helps streamline the adaptive prompting process and improves overall system performance.

Q4. What challenges arise when implementing adaptive prompting with DSPy?

A. Challenges include managing the complexity of numerous states and transitions, potential performance overhead, and balancing adaptiveness with user experience. Effective complexity management and performance optimization are essential to address these challenges.

Q5. How can I get started with DSPy for adaptive prompting?

A. To get started with DSPy, explore its documentation and tutorials to understand its features and capabilities. Implement basic dynamic programming concepts with DSPy, and gradually integrate it into your adaptive prompting strategies. Experiment with different scenarios and use cases to refine your approach and achieve the desired results.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.