LangChain and LlamaIndex are robust frameworks for building applications with large language models. Both are capable, but each has distinct strengths and focuses, which makes them suitable for different NLP application needs. This blog compares LangChain and LlamaIndex and explains when to use each framework.

Learning Objectives

- Differentiate between LangChain and LlamaIndex in terms of their design, functionality, and application focus.

- Recognize the appropriate use cases for each framework (e.g., LangChain for chatbots, LlamaIndex for data retrieval).

- Gain an understanding of the key components of both frameworks, including indexing, retrieval algorithms, workflows, and context retention.

- Assess the performance and lifecycle management tools available in each framework, such as LangSmith and debugging in LlamaIndex.

- Select the right framework or combination of frameworks for specific project requirements.

This article was published as a part of the Data Science Blogathon.


What is LangChain?

You can think of LangChain as a framework rather than just a tool. It provides a wide range of tools right out of the box that enable interaction with large language models (LLMs). A key feature of LangChain is the use of chains, which allow the chaining of components together. For example, you could use a PromptTemplate and an LLMChain to create a prompt and query an LLM. This modular structure facilitates easy and flexible integration of various components for complex tasks.
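To make the idea of chaining concrete, here is a minimal plain-Python sketch. It does not use LangChain: `Step`, `fill_prompt`, and `fake_llm` are hypothetical names invented for illustration, and the "model" is a stub that returns a canned string. It only shows how small components can be composed so that each one's output becomes the next one's input.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be composed with the | operator."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # Run this step first, then feed its output to the next step.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


def fill_prompt(inputs: dict) -> str:
    # Stand-in for a prompt template: fill placeholders from a dict.
    return "Translate the following from English into {language}: {text}".format(**inputs)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; no network request is made.
    return f"[model reply to: {prompt}]"


# Three components chained together: template -> model -> post-processing.
chain = Step(fill_prompt) | Step(fake_llm) | Step(str.upper)
result = chain.invoke({"language": "Italian", "text": "hi!"})
print(result)
```

Because every step has the same shape (one input, one output), a step can be swapped or added without touching the others, which is the property the chain abstraction is meant to provide.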

LangChain simplifies every stage of the LLM application lifecycle:

- Development: Build your applications using LangChain’s open-source building blocks, components, and third-party integrations. Use LangGraph to build stateful agents with first-class streaming and human-in-the-loop support.

- Productionization: Use LangSmith to inspect, monitor and evaluate your chains, so that you can continuously optimize and deploy with confidence.

- Deployment: Turn your LangGraph applications into production-ready APIs and Assistants with LangGraph Cloud.

LangChain Ecosystem

- langchain-core: Base abstractions and LangChain Expression Language.

- Integration packages (e.g. langchain-openai, langchain-anthropic, etc.): Important integrations have been split into lightweight packages that are co-maintained by the LangChain team and the integration developers.

- langchain: Chains, agents, and retrieval strategies that make up an application’s cognitive architecture.

- langchain-community: Third-party integrations that are community maintained.

- LangGraph: Build robust and stateful multi-actor applications with LLMs by modeling steps as edges and nodes in a graph. Integrates smoothly with LangChain, but can be used without it.

- LangGraph Platform: Deploy LLM applications built with LangGraph to production.

- LangSmith: A developer platform that lets you debug, test, evaluate, and monitor LLM applications.
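The "steps as nodes and edges" idea behind LangGraph can be pictured with a small plain-Python sketch. This is not LangGraph's API: the node functions, the `NODES` and `EDGES` tables, and `run_graph` are hypothetical, and the state is just a dictionary passed from node to node.

```python
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]


def draft(state: State) -> State:
    # Node 1: produce a first answer (a stub instead of an LLM call).
    return {**state, "answer": f"Draft answer to: {state['question']}", "revisions": 0}


def review(state: State) -> State:
    # Node 2: revise the answer and count how many revisions happened.
    return {**state, "answer": state["answer"] + " (revised)", "revisions": state["revisions"] + 1}


def next_after_review(state: State) -> Optional[str]:
    # Conditional edge: loop back to review until two revisions are done.
    return "review" if state["revisions"] < 2 else None


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
# Each edge maps a node name to a function that picks the next node (None = stop).
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "draft": lambda state: "review",
    "review": next_after_review,
}


def run_graph(start: str, state: State) -> State:
    current: Optional[str] = start
    while current is not None:
        state = NODES[current](state)
        current = EDGES[current](state)
    return state


final_state = run_graph("draft", {"question": "What is RAG?"})
print(final_state["answer"], final_state["revisions"])
```

The loop from `review` back to itself is the kind of stateful, cyclic flow that a linear chain cannot express directly.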

Building Your First LLM Application with LangChain and OpenAI

Let’s build a simple LLM application using LangChain and OpenAI, and learn how it works along the way.

Let’s start by installing the packages:

!pip install langchain-core "langgraph>0.2.27"

!pip install -qU langchain-openai

Setting up OpenAI as the LLM:

import getpass

import os

from langchain_openai import ChatOpenAI

os.environ["OPENAI_API_KEY"] = getpass.getpass()

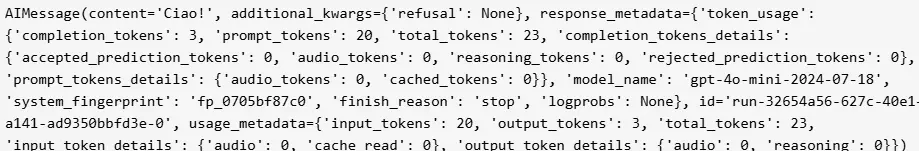

model = ChatOpenAI(model="gpt-4o-mini")

To call the model directly, we can pass a list of messages to the .invoke method.

from langchain_core.messages import HumanMessage, SystemMessage

messages = [
    SystemMessage("Translate the following from English into Italian"),
    HumanMessage("hi!"),
]

model.invoke(messages)

Now let’s create a prompt template. Prompt templates are a LangChain concept for turning raw user input into a prompt: they take in raw user input and return data (a prompt) that is ready to pass into a language model.

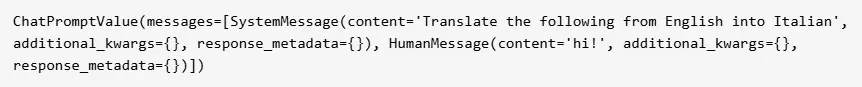

from langchain_core.prompts import ChatPromptTemplate

system_template = "Translate the following from English into {language}"

prompt_template = ChatPromptTemplate.from_messages(
    [("system", system_template), ("user", "{text}")]
)

Here you can see that it takes two variables, language and text. We format the language parameter into the system message, and the user text into a user message. The input to this prompt template is a dictionary. We can play around with this prompt template by itself.

prompt = prompt_template.invoke({"language": "Italian", "text": "hi!"})

prompt

We can see that it returns a ChatPromptValue that consists of two messages. If we want to access the messages directly we do:

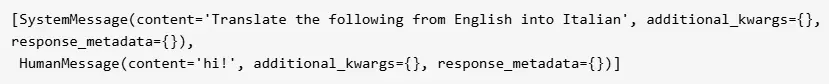

prompt.to_messages()
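Conceptually, the template substitutes the dictionary values into each message and returns role-tagged messages. The sketch below mimics that behaviour with plain string formatting. `render_messages` is a hypothetical helper, not part of LangChain, and it returns simple (role, content) tuples rather than LangChain message objects.

```python
from typing import Dict, List, Tuple

# Same template shape as in the walkthrough: (role, text with {placeholders}).
TEMPLATE: List[Tuple[str, str]] = [
    ("system", "Translate the following from English into {language}"),
    ("user", "{text}"),
]


def render_messages(template: List[Tuple[str, str]], values: Dict[str, str]) -> List[Tuple[str, str]]:
    # Fill every placeholder; a missing key raises KeyError, which surfaces
    # template/input mismatches early.
    return [(role, text.format(**values)) for role, text in template]


messages = render_messages(TEMPLATE, {"language": "Italian", "text": "hi!"})
for role, content in messages:
    print(f"{role}: {content}")
```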

Finally, we can invoke the chat model on the formatted prompt:

response = model.invoke(prompt)

print(response.content)

LangChain is highly versatile and adaptable, offering a wide variety of tools for different NLP applications, from simple queries to complex workflows. You can read more about LangChain components in the LangChain documentation.

What is LlamaIndex?

LlamaIndex (formerly known as GPT Index) is a framework for building context-augmented generative AI applications with LLMs including agents and workflows. Its primary focus is on ingesting, structuring, and accessing private or domain-specific data. LlamaIndex excels at managing large datasets, enabling swift and precise information retrieval, making it ideal for search and retrieval tasks. It offers a set of tools that make it easy to integrate custom data into LLMs, especially for projects requiring advanced search capabilities.

LlamaIndex is highly effective for data indexing and querying. Based on my experience with LlamaIndex, it is an ideal solution for working with vector embeddings and retrieval-augmented generation (RAG).

LlamaIndex imposes no restriction on how you use LLMs. You can use LLMs as auto-complete, chatbots, agents, and more. It just makes using them easier.

It provides tools such as:

- Data connectors ingest your existing data from their native source and format. These could be APIs, PDFs, SQL, and (much) more.

- Data indexes structure your data in intermediate representations that are easy and performant for LLMs to consume.

- Engines provide natural language access to your data. For example:

  - Query engines are powerful interfaces for question-answering (e.g. a RAG flow).

  - Chat engines are conversational interfaces for multi-message, “back and forth” interactions with your data.

- Agents are LLM-powered knowledge workers augmented by tools, from simple helper functions to API integrations and more.

- Observability/Evaluation integrations that enable you to rigorously experiment, evaluate, and monitor your app in a virtuous cycle.

- Workflows allow you to combine all of the above into an event-driven system far more flexible than other, graph-based approaches.

LlamaIndex Ecosystem

Just like LangChain, LlamaIndex too has its own ecosystem.

- llama_deploy: Deploy your agentic workflows as production microservices

- LlamaHub: A large (and growing!) collection of custom data connectors

- SEC Insights: A LlamaIndex-powered application for financial research

- create-llama: A CLI tool to quickly scaffold LlamaIndex projects

Building Your First LLM Application with LlamaIndex and OpenAI

Let’s build a simple LLM application using LlamaIndex and OpenAI, and learn how it works along the way.

Let’s install libraries

!pip install llama-index

Set up the OpenAI key:

LlamaIndex uses OpenAI’s gpt-3.5-turbo by default. Make sure your API key is available to your code by setting it as an environment variable. On macOS and Linux, this is the command:

export OPENAI_API_KEY=XXXXX

and on Windows it is:

set OPENAI_API_KEY=XXXXX

This example uses the text of Paul Graham’s essay, “What I Worked On”.

Download the data via this link and save it in a folder called data.
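Before running the example, it can help to confirm that the key and the data folder are actually visible to Python. The helper below is a small sketch using only the standard library. `check_setup` is a hypothetical name, not part of LlamaIndex, and the default folder name `data` simply follows the instructions above.

```python
import os
from pathlib import Path
from typing import List


def check_setup(data_dir: str = "data", key_name: str = "OPENAI_API_KEY") -> List[str]:
    """Return a list of human-readable problems; an empty list means ready."""
    problems: List[str] = []
    if not os.environ.get(key_name):
        problems.append(f"Environment variable {key_name} is not set.")
    folder = Path(data_dir)
    if not folder.is_dir():
        problems.append(f"Folder '{data_dir}' does not exist.")
    elif not any(path.is_file() for path in folder.iterdir()):
        problems.append(f"Folder '{data_dir}' contains no files.")
    return problems


# Print any issues found; nothing is printed when the setup looks fine.
for problem in check_setup():
    print("Setup issue:", problem)
```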

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

response = query_engine.query("What is this essay all about?")

print(response)

LlamaIndex abstracts the query process, but in essence it compares the query against the vectorized data (the index), retrieves the most relevant pieces, and provides them as context to the LLM.
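The retrieval step described above can be sketched in plain Python. This is only an illustration under simplifying assumptions: the "embedding" is a bag-of-words count rather than a learned vector, the chunks are made up, and `embed`, `cosine`, `top_k`, and `build_prompt` are hypothetical helpers, not LlamaIndex functions.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    # Toy embedding: lowercase word counts (real systems use learned vectors).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: List[str], k: int = 2) -> List[str]:
    # Rank chunks by similarity to the query and keep the k best.
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(query_vec, embed(chunk)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    # The retrieved chunks become the context handed to the LLM.
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


chunks = [
    "The author studied painting in Florence.",
    "The author wrote essays about programming and startups.",
    "The weather was cold that winter.",
]
question = "What did the author write about programming?"
print(build_prompt(question, top_k(question, chunks, k=1)))
```

Only the best-matching chunks reach the model, which keeps the prompt short even when the underlying dataset is large.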

Comparative Analysis: LangChain vs LlamaIndex

LangChain and LlamaIndex cater to different strengths and use cases in the domain of NLP applications powered by large language models (LLMs). Here’s a detailed comparison:

| Feature | LlamaIndex | LangChain |
|---|---|---|
| Data Indexing | Converts diverse data types (e.g., unstructured text, database records) into semantic embeddings. Optimized for creating searchable vector indexes. | Enables modular and customizable data indexing. Utilizes chains for complex operations, integrating multiple tools and LLM calls. |
| Retrieval Algorithms | Specializes in ranking documents based on semantic similarity. Excels in efficient and accurate query performance. | Combines retrieval algorithms with LLMs to generate context-aware responses. Ideal for interactive applications requiring dynamic information retrieval. |
| Customization | Limited customization, tailored to indexing and retrieval tasks. Focused on speed and accuracy within its specialized domain. | Highly customizable for diverse applications, from chatbots to workflow automation. Supports intricate workflows and tailored outputs. |
| Context Retention | Basic capabilities for retaining query context. Suitable for straightforward search and retrieval tasks. | Advanced context retention for maintaining coherent, long-term interactions. Essential for chatbots and customer support applications. |
| Use Cases | Best for internal search systems, knowledge management, and enterprise solutions needing precise information retrieval. | Ideal for interactive applications like customer support, content generation, and complex NLP tasks. |
| Performance | Optimized for quick and accurate data retrieval. Handles large datasets efficiently. | Handles complex workflows and integrates diverse tools seamlessly. Balances performance with sophisticated task requirements. |
| Lifecycle Management | Offers debugging and monitoring tools for tracking performance and reliability. Ensures smooth application lifecycle management. | Provides the LangSmith evaluation suite for testing, debugging, and optimization. Ensures robust performance under real-world conditions. |

Both frameworks offer powerful capabilities, and choosing between them should depend on your project’s specific needs and goals. In some cases, combining the strengths of both LlamaIndex and LangChain might provide the best results.
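One way to picture the combination is a retrieval step (the part LlamaIndex would handle) feeding a prompt-and-model step (the part LangChain would orchestrate). The sketch below uses stand-ins only: `retrieve`, `answer_with_context`, and `fake_llm` are hypothetical functions, the retrieval is naive keyword overlap, and no real framework call is made.

```python
from typing import Callable, List

DOCS = [
    "LangSmith helps debug and evaluate LLM applications.",
    "LlamaHub offers data connectors for many sources.",
]


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    # Stand-in for an index query: rank documents by shared words.
    query_words = set(query.lower().split())
    ranked = sorted(docs, key=lambda doc: len(query_words & set(doc.lower().split())), reverse=True)
    return ranked[:k]


def fake_llm(prompt: str) -> str:
    # Stand-in for a chat model; it only reports the prompt size.
    return f"(answer based on {len(prompt.splitlines())} prompt lines)"


def answer_with_context(query: str, retriever: Callable[[str], List[str]], llm: Callable[[str], str]) -> str:
    # Retrieval output is injected into the prompt before the model call.
    context = "\n".join(retriever(query))
    prompt = f"Use this context:\n{context}\nQuestion: {query}"
    return llm(prompt)


reply = answer_with_context(
    "Which tool offers data connectors?",
    retriever=lambda q: retrieve(q, DOCS),
    llm=fake_llm,
)
print(reply)
```

Passing the retriever and the model in as arguments keeps the two halves independent, so either side could be replaced by a real framework component without changing the other.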

Conclusion

LangChain and LlamaIndex are both powerful frameworks but cater to different needs. LangChain is highly modular, designed to handle complex workflows involving chains, prompts, models, memory, and agents. It excels in applications that require intricate context retention and interaction management, such as chatbots, customer support systems, and content generation tools. Its integration with tools like LangSmith for evaluation and LangServe for deployment enhances the development and optimization lifecycle, making it ideal for dynamic, long-term applications.

LlamaIndex, on the other hand, specializes in data retrieval and search tasks. It efficiently converts large datasets into semantic embeddings for quick and accurate retrieval, making it an excellent choice for RAG-based applications, knowledge management, and enterprise solutions. LlamaHub further extends its functionality by offering data loaders for integrating diverse data sources.

Ultimately, choose LangChain if you need a flexible, context-aware framework for complex workflows and interaction-heavy applications, while LlamaIndex is best suited for systems focused on fast, precise information retrieval from large datasets.

Key Takeaways

- LangChain excels at creating modular and context-aware workflows for interactive applications like chatbots and customer support systems.

- LlamaIndex specializes in efficient data indexing and retrieval, ideal for RAG-based systems and large dataset management.

- LangChain’s ecosystem supports advanced lifecycle management with tools like LangSmith and LangGraph for debugging and deployment.

- LlamaIndex offers robust tools like vector embeddings and LlamaHub for semantic search and diverse data integration.

- Both frameworks can be combined for applications requiring seamless data retrieval and complex workflow integration.

- Choose LangChain for dynamic, long-term applications and LlamaIndex for precise, large-scale information retrieval tasks.

Frequently Asked Questions

Q1. What is the main difference between LangChain and LlamaIndex?

A. LangChain focuses on building complex workflows and interactive applications (e.g., chatbots, task automation), while LlamaIndex specializes in efficient search and retrieval from large datasets using vectorized embeddings.

Q2. Can LangChain and LlamaIndex be used together?

A. Yes, LangChain and LlamaIndex can be integrated to combine their strengths. For example, you can use LlamaIndex for efficient data retrieval and then feed the retrieved information into LangChain workflows for further processing or interaction.

Q3. Which framework is better suited for conversational AI?

A. LangChain is better suited for conversational AI as it offers advanced context retention, memory management, and modular chains that support dynamic, context-aware interactions.

Q4. How does LlamaIndex handle large datasets?

A. LlamaIndex uses vector embeddings to represent data semantically. It enables efficient top-k similarity searches, making it highly optimized for fast and accurate query responses, even with large datasets.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.