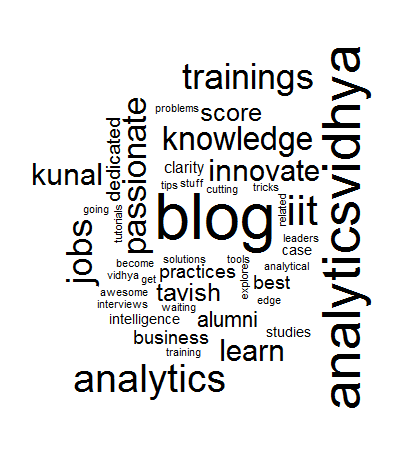

This is how a word cloud of our entire website looks like!

A word cloud is a graphical representation of frequently used words in a collection of text files. The height of each word in this picture is an indication of frequency of occurrence of the word in the entire text. By the end of this article, you will be able to make a word cloud using R on any given set of text files. Such diagrams are very useful when doing text analytics.

[stextbox id=”section”] Why do we need text analytics? [/stextbox]

Analytics is the science of processing raw information to bring out meaningful insights. This raw information can come from variety of sources. For instance, let’s consider a modern multinational bank, who wants to use all the available information to drive the best strategy. What are the sources of information available to the bank?

1. Bank issues different kinds of products to different customers. This information is fed to the system and can be used for targeting new customers, servicing existing customers and forming customer level strategies.

2. Customers of bank would be doing millions of transactions everyday. The information about where these transactions are done, when they are done and what amount of transactions where they helps bank to understand their customer.

There can be other behavioral variables (e.g. cash withdrawal patterns) which can provide the bank with valuable data, which helps the bank build optimal strategy. This analysis gives the bank, a competitive edge over other market players by targeting the right customer, with the right product at the right time. But, given that, at present every competitor is using similar kind of tools and data, analytics have become more of a hygiene factor rather than competitive edge. To gain the edge back, the bank has to find more sources of data and more sophisticated tools to handle this data. All the data, we have discussed till this point is the structured data. There are two other types of data, the bank can use to drive insightful information.

1. System data : Consider a teller carrying out a transaction at one of the counter. Every time he completes a transaction, a log is created in the system. This type of data is called system data. It is obviously enormous in volumes, but still not utilized to a considerable extent in a lot of banks. If we do analyze this data, we can optimize the number of tellers in a branch or scale the efficiency of each branch.

2. Unstructured data : Feedback forms with free text comments, comments on Bank’s Facebook Page, twitter page, etc. are all examples of unstructured data. This data has unique information about customer sentiment. Say, the bank launches a product and found that this product is very profitable in first 3 months. But customers who bought the product found that this product was really a bad choice and started spreading bad words about the product on all social networks and through feedback channels. If the bank has no way to decode this information, this will lead to a huge loss because the bank will never make a proactive effort to stop the negative wave against its image. Imagine, the kind of power analyzing such data hands over to the bank.

[stextbox id=”section”] Installing required packages on R [/stextbox]

Text Mining needs some special packages, which might not be pre-installed on your R software. You need to install Natural Language Processing package to load a library called tm and SnowballC. Follow the instructions written in the box to install the required packages.

[stextbox id=”grey”]

> install.packages(“ctv”)

> library(“ctv”)

> install.views(“NaturalLanguageProcessing”)

[/stextbox]

[stextbox id=”section”] Step by step coding on R [/stextbox]

Following is the step by step algorithm of creating a word cloud on a bunch of text files. For simplicity, we are using files in .txt format.

Step 1 : Identiy & create text files to turn into a cloud

The first step is to identify & create text files on which you want to create the word cloud. Store these files in the location “./corpus/target”. Make sure that you do not have any other file in this location. You can use any location to do this exercise, but for simplicity, try it with this location for the first time.

Step 2 : Create a corpus from the collection of text files

The second step is to transform these text files into a R – readable format. The package TM and other text mining packages operate on a format called corpus. Corpus is just a way to store a collection of documents in a R software readable format.

[stextbox id=”grey”]

> cname <- file.path(".","corpus","target")

> library (tm)

> docs <- Corpus(DirSource(cname))

[/stextbox]

Step 3 : Data processing on the text files

This is the most critical step in the entire process. Here, we will decode the text file by selecting some keywords, which builds up the meaning of the sentence or the sentiments of the author. R makes this step really easy. Here we will make 3 important transformations.

i. Replace symbols like “/” or “@” with a blank space

ii. Remove words like “a”, “an”, “the”, “I”, “He” and numbers. This is done to remove any skewness caused by these commonly occurring words.

iii. Remove punctuation and finally whitespaces. Note that we are not replacing these with blanks because grammatically they will have an additional blank.

[stextbox id=”grey”]

> library (SnowballC)

> for (j in seq(docs))

+ {docs[[j]] <- gsub("/"," ",docs[[j]])

+ docs[[j]] <- gsub("@"," ",docs[[j]])}

> docs <- tm_map(docs,tolower)

> docs <- tm_map(docs,removeWords, stopwords("english"))

> docs <- tm_map(docs,removeNumbers)

> docs <- tm_map(docs,removePunctuation)

> docs <- tm_map(docs,stripWhitespace)

[/stextbox]

Step 4 : Create structured data from the text file

Now is the time to convert this entire corpus into a structured dataset. Note that we have removed all filler words. This is done by a command “DocumentTermMatrix” in R. Execute the following line in your R session to make this conversion.

[stextbox id=”grey”]

> dtm <- DocumentTermMatrix(docs)[/stextbox]

Step 5 : Making the word cloud using the structured form of the data

Once, we have the structured format of the text file content, we now make a matrix of word and their frequencies. This matrix will be finally put into the function to build wordcloud.

[stextbox id=”grey”]

> library(wordcloud)

> m <- as.matrix(dtm)

> v <- sort(colSums(m),decreasing=TRUE)

> head(v,14)

> words <- names(v)

> d <- data.frame(word=words, freq=v)

> wordcloud(d$word,d$freq,min.freq=50)[/stextbox]

Running this code will give you the required output. The order of words is completely random but the length of the words are directly proportional to the frequency of occurrence of the word in text files.Our website has the world “Analytics Vidhya” repeated many times and hence this word has the maximum length. This diagram directly helps us identify the most frequently used words in the text files.

[stextbox id=”section”] End Notes [/stextbox]

Text mining deals with relationships between words and analyzing the sentiment made by the combination of these words and their relationship. Structured data have defined number of variables and all the analysis is done by finding out correlation between these variables. But in text mining the relationship is found between all the words present in the text. This is the reason text mining is rarely used in the industry today. But R offers such tools which make this analysis much simpler. This article covers only the tip of the iceberg. In one of the coming articles, we will cover a framework of analyzing unstructured data.

Did you find the article interesting? Have you worked on text mining before? Did you use R to do text mining or some other softwares? Let us know any other interesting feature of R used for text mining.

If you like what you just read & want to continue your analytics learning, subscribe to our emails, follow us on twitter or like our facebook page.

This is really neat. Thanks for writing about this. One issue I noticed is the ordering of the normalization. I would switch tolower and stopwords like so:

> docs docs <- tm_map(docs,removeWords, stopwords("english"))otherwise an capitalized stop words such as 'The' are not removed. I ran it on a collection of books; many of the sentences must start with 'The' :-)Eric, Thanks for the input. I re ran the code multiple times, which eliminated this issue. Thanks for bringing this up. I have revised the code in the article. Tavish

Hi Tavish, I am planning to do word cloud on a data set, which has the word and respective word count. Is it feasible/ appropriate to do word cloud on such data? If so can you please guide me. Thank you

Avinash, Look at step 5 of the article - I think that is the only thing you need in this case. Regards, Kunal

whoah this blog is excellent i love reading your articles. Keep up the good work! You know, lots of people are searching around for this information, you can help them greatly.