Overview

- Data science hackathons can be a tough nut to crack, especially for beginners

- Here are 12 powerful tips to crack your next data science hackathon!

Introduction

Like any discipline, data science has a lot of “folk wisdom”. This folk wisdom is hard to teach formally or in a structured manner, but it’s still crucial for success, both in industry and in data science hackathons.

Newcomers to data science often get the impression that knowing all the machine learning algorithms is a panacea for every machine learning problem. They tend to believe that once they know the most common algorithms (Gradient Boosting, Extreme Gradient Boosting, Deep Learning architectures), they will perform well in their roles or organizations and top competition leaderboards.

Sadly, that does not happen!

If you’re reading this, there’s a high chance you’ve participated in a data science hackathon (or several of them). I’ve personally struggled to improve my model’s performance in my initial hackathon days and it was quite a frustrating experience. I know a lot of newcomers who’ve faced the same obstacle.

So I decided to put together 12 powerful hacks that have helped me climb to the top echelons of hackathon leaderboards. Some of these hacks are straightforward and a few you’ll need to practice to master.

If you are a beginner in the world of Data Science Hackathons or someone who wants to master the art of competing in hackathons, you should definitely check out the third edition of HackLive – a guided community hackathon led by top hackers at Analytics Vidhya.

The 12 Tips to Ace Data Science Hackathons

- Understand the Problem Statement

- Build your Hypothesis Set

- Team Up

- Create a Generic Codebase

- Feature Engineering is the Key

- Ensemble (Almost) Always Wins

- Discuss! Collaborate!

- Trust Local Validation

- Keep Evolving

- Use Hindsight to Build your Foresight

- Refactor your Code

- Improve Iteratively

Data Science Hackathon Tip #1: Understand the Problem Statement

Seems too simple to be true? And yet, understanding the problem statement is the very first step to acing any data science hackathon:

- Without understanding the problem statement, the data, and the evaluation metric, most of your work is fruitless. Spend time reading as much as possible about them and gain some functional domain knowledge if possible

- Re-read all the available information. It will help you figure out an approach or direction before writing a single line of code. Only once you are very clear about the objective should you proceed to the data exploration stage

Let me show you an example of a problem statement from a data science hackathon we conducted. Here’s the Problem Statement of the BigMart Sales Prediction problem:

The data scientists at BigMart have collected 2013 sales data for 1559 products across 10 stores in different cities. Also, certain attributes of each product and store have been defined. The aim is to build a predictive model and find out the sales of each product at a particular store.

Using this model, BigMart will try to understand the properties of products and stores which play a key role in increasing sales.

The goal, then, is to find the product and store properties that affect a product's sales. At this stage you can already think of factors that might influence sales, based on your own understanding, and turn them into hypotheses without looking at the data.
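Understanding the evaluation metric is much easier once you can compute it yourself. The sketch below assumes the leaderboard scores predictions with root mean squared error (RMSE); that is an assumption for illustration, so check the hackathon's evaluation page for the actual metric. The function name `rmse` and the toy numbers are hypothetical.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error (assumed here to be the leaderboard metric)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Made-up sales figures to see how the metric reacts to errors
actual = [100.0, 200.0, 300.0]
predicted = [110.0, 190.0, 300.0]
print(rmse(actual, predicted))  # errors 10, -10, 0 -> sqrt(200 / 3)
```

Because RMSE squares the errors, a few badly mispredicted products can dominate the score, which is worth knowing before you pick a loss function or decide how to treat outliers.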

Data Science Hackathon Tip #2: Build your Hypothesis Set

- Next, build a comprehensive list of hypotheses. Note that I am asking you to build this set of hypotheses before looking at the data. This ensures you are not biased by what you see in the data

- It also gives you time to plan your workflow better. If you are able to think of hundreds of features, you can prioritize which ones you would create first

- Read more about hypothesis generation here

I encourage you to go through the hypothesis generation stage for the BigMart Sales problem in this article: Approach and Solution to break in Top 20 of Big Mart Sales prediction. We divided the hypotheses into store-level and product-level ones. Let me illustrate a few examples here.

Store-Level Hypotheses:

- City type: Stores located in urban or Tier 1 cities should have higher sales because of the higher income levels of people there

- Population Density: Stores located in densely populated areas should have higher sales because of more demand

- Store Capacity: Stores that are very big in size should have higher sales as they act like one-stop-shops and people would prefer getting everything from one place

- Ambiance: Stores that are well-maintained and managed by polite and humble people are expected to have higher footfall and thus higher sales

Product-Level Hypotheses:

- Brand: Branded products should have higher sales because customers trust them more

- Packaging: Products with good packaging can attract customers and sell more

- Utility: Everyday products should sell more than specialty products

- Advertising: Products that are better advertised in the store should have higher sales in most cases

- Promotional Offers: Products accompanied by attractive offers and discounts will sell more
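Once the data is available, each hypothesis becomes something you can check quickly. Here is a minimal sketch for the city-type hypothesis. It assumes a DataFrame with columns named `Outlet_Location_Type` and `Item_Outlet_Sales` (modeled on the BigMart schema, but verify the names against your files), and the rows are made-up toy values.

```python
import pandas as pd

# Toy rows (invented) standing in for the BigMart training data
train = pd.DataFrame({
    "Outlet_Location_Type": ["Tier 1", "Tier 1", "Tier 2", "Tier 3", "Tier 3"],
    "Item_Outlet_Sales": [3000.0, 2600.0, 2100.0, 1500.0, 1700.0],
})

# Hypothesis: stores in Tier 1 cities sell more.
# Compare mean and median sales per city tier, plus how many rows back each number.
summary = (
    train.groupby("Outlet_Location_Type")["Item_Outlet_Sales"]
    .agg(["mean", "median", "count"])
    .sort_values("mean", ascending=False)
)
print(summary)
```

On real data, look at the counts and the spread as well as the means before accepting or rejecting a hypothesis.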

Data Science Hackathon Tip #3: Team Up!

- Build a team and brainstorm together. Try and find a person with a complementary skillset in your team. If you have been a coder all your life, go and team up with a person who has been on the business side of things

- This would help you get a more diverse set of hypotheses and increase your chances of winning the hackathon. The one caveat: both of you should prefer the same tool/language stack

- It will save you a lot of time, and you will be able to experiment with several ideas in parallel and climb to the top of the leaderboard

- Get a good score early in the competition; it makes it easier to team up with higher-ranked participants

Here are some of the instances where hackathons were won by a team:

- Team Creed won first place in the LTFS Data Science FinHack 2

- Team Mark & SRK won the second position in Lord of the Machines: Data Science Hackathon

Data Science Hackathon Tip #4: Create a Generic Codebase

- Save valuable time in your next hackathon by creating a reusable, generic codebase of functions for your favorite models that you can use in all your hackathons, for example:

- Create a variety of time-based features if the dataset has a time feature

- You can write a function that will return different types of encoding schemes

- You can write functions that will return your results on a variety of different models so that you can choose your baseline model wisely and choose your strategy accordingly
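The third idea, a function that scores several models in one go, might look like the sketch below. It uses scikit-learn's `cross_val_score`; the helper name `compare_baselines`, the choice of models, RMSE as the metric, and the synthetic `make_regression` data are all illustrative assumptions rather than a fixed recipe.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor


def compare_baselines(X, y, models, cv=5):
    """Return {model name: mean cross-validated RMSE}, lowest (best) first."""
    results = {}
    for name, model in models.items():
        # scikit-learn scorers are "greater is better", so RMSE comes back negated
        scores = cross_val_score(model, X, y, cv=cv, scoring="neg_root_mean_squared_error")
        results[name] = -scores.mean()
    return dict(sorted(results.items(), key=lambda item: item[1]))


# Synthetic data in place of a real hackathon dataset
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=0)
models = {
    "linear": LinearRegression(),
    "ridge": Ridge(alpha=1.0),
    "tree": DecisionTreeRegressor(max_depth=5, random_state=0),
    "forest": RandomForestRegressor(n_estimators=50, random_state=0),
}
for name, score in compare_baselines(X, y, models).items():
    print(f"{name:>7}: RMSE {score:.2f}")
```

Keeping the models in a dictionary means adding a new candidate is a one-line change, which is exactly the kind of reuse a generic codebase is for.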

I generally use one such function to encode the train, test, and validation sets of the data in a single call. I wouldn't recommend using exactly the same code, but I do suggest keeping a few functions like this handy, so that you can spend more time brainstorming and experimenting.

To use it, I just provide a dictionary where the keys are the types of encoding I want and the values are the names of the columns I want to encode.
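A helper along these lines can be sketched as follows. This is a hypothetical reconstruction, not the author's original snippet: the name `encode_columns`, the supported scheme names (`label`, `onehot`, `frequency`), and the rule of learning every mapping from the training set only are assumptions.

```python
import pandas as pd


def encode_columns(train, others, schemes):
    """Encode columns in `train` and in every frame in `others` (validation, test, ...).

    `schemes` maps an encoding type to the columns it applies to, e.g.
    {"label": ["Outlet_Size"], "onehot": ["Outlet_Type"]}.
    All mappings are learned from `train` only, to avoid leakage.
    """
    train = train.copy()
    others = [df.copy() for df in others]
    for scheme, columns in schemes.items():
        for col in columns:
            if scheme == "label":
                # Integer code per sorted training category; unseen or missing -> -1
                cats = sorted(train[col].dropna().unique())
                mapping = {cat: i for i, cat in enumerate(cats)}
                for df in [train, *others]:
                    df[col] = df[col].map(mapping).fillna(-1).astype(int)
            elif scheme == "frequency":
                # Share of training rows holding each category; unseen -> 0
                freq = train[col].value_counts(normalize=True)
                for df in [train, *others]:
                    df[col] = df[col].map(freq).fillna(0.0)
            elif scheme == "onehot":
                # One 0/1 column per training category, then drop the original column
                cats = sorted(train[col].dropna().unique())
                for df in [train, *others]:
                    for cat in cats:
                        df[f"{col}_{cat}"] = (df[col] == cat).astype(int)
                    df.drop(columns=col, inplace=True)
            else:
                raise ValueError(f"unknown encoding scheme: {scheme}")
    return train, others


# Toy frames; column names mirror BigMart, values are invented
train = pd.DataFrame({"Outlet_Size": ["Small", "Medium", "High", "Medium"],
                      "Outlet_Type": ["Grocery", "Super1", "Super1", "Grocery"]})
test = pd.DataFrame({"Outlet_Size": ["Medium", "Tiny"],
                     "Outlet_Type": ["Super1", "Super1"]})

train_enc, (test_enc,) = encode_columns(
    train, [test], {"label": ["Outlet_Size"], "onehot": ["Outlet_Type"]}
)
print(test_enc)
```

Learning the mappings on the training set and applying them unchanged everywhere else keeps the encodings consistent across splits; unseen test categories get a fallback value instead of raising an error.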

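- You can also use libraries like pandas-profiling to get an overview of a dataset right after reading it:

```python
import pandas as pd
import pandas_profiling  # newer releases are published as ydata-profiling (import ydata_profiling)

# read the dataset
data = pd.read_csv('bigmart-sales-data.csv')
print(data.head())

# build an exploratory report: it renders inline in a Jupyter notebook,
# and to_file() saves a shareable HTML version
profile = pandas_profiling.ProfileReport(data)
profile.to_file('bigmart_profile.html')
```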

Data Science Hackathon Tip #5: Feature Engineering is Key

“More data beats clever algorithms, but better data beats more data.”

– Peter Norvig

Feature engineering! This is one of my favorite parts of a data science hackathon. I get to tap into my creative juices when it comes to feature engineering – and which data scientist doesn’t like that?

- Feature engineering is the art of extracting more information from existing data. You are not adding any new data here, but you are actually making the data you already have more useful

- For example, let’s say you are trying to predict footfall in a shopping mall based on dates. If you try and use the dates directly, you may not be able to extract meaningful insights from the data. This is because the footfall is less affected by the day of the month than it is by the day of the week. Now this information about the day of the week is implicit in your data. You need to bring it out to make your machine learning model better

- The performance of a predictive model depends heavily on the quality of the features used to train it. If you can create new features that give the model more information about the target variable, its performance will go up

- Spend a considerable amount of time on pre-processing and feature engineering. Concentrate on this, since it can make a huge difference to your scores

- You can also try some automated tools like Featuretools for creating features if you are short of time. Here is an amazing article which will help you start using Featuretools: A Hands-On Guide to Automated Feature Engineering using Featuretools

- I would highly recommend you go through the following articles to learn more about feature engineering:

- Here are some articles on the winning solutions from previous hackathons. Look at the kinds of features the winners created and how they came up with them:
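The footfall example above can be sketched in a few lines of pandas. The column names `date` and `footfall`, the helper name `add_date_features`, and the numbers are invented for illustration. The point is that the day of the week, implicit in the raw date, becomes an explicit feature a model can use.

```python
import pandas as pd


def add_date_features(df, col="date"):
    """Expose calendar information that is implicit in a datetime column."""
    df = df.copy()
    df["day_of_week"] = df[col].dt.dayofweek  # Monday = 0, Sunday = 6
    df["is_weekend"] = (df["day_of_week"] >= 5).astype(int)
    df["day_of_month"] = df[col].dt.day
    df["month"] = df[col].dt.month
    return df


# Toy daily footfall data (invented numbers)
mall = pd.DataFrame({
    "date": pd.to_datetime(["2024-03-01", "2024-03-02", "2024-03-03", "2024-03-04"]),
    "footfall": [5200, 9100, 8700, 4300],
})

features = add_date_features(mall)
print(features)
```

A helper like this is also a natural candidate for the generic codebase from Tip #4, since almost every dataset with a date column benefits from it.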

Data Science Hackathon Tip #6: Ensemble (Almost) Always Wins

- 95% of winners have used ensemble models in their final submissions on DataHack hackathons

- Ensemble modeling is a powerful way to improve the performance of your model. It is the art of combining the diverse results of individual models to improve the stability and predictive power of the final model

- You will rarely find a data science hackathon whose top-finishing solutions don't use ensemble models

- You can learn more about the different ensemble techniques from the following articles:

An example of an advanced ensemble technique is the 3-level stacking used by Marios Michailidis.
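Multi-level stacking is beyond a short example, but the core idea of ensembling shows up in its simplest form: averaging the predictions of two different models. Note that this is a much simpler technique than the 3-level stacking mentioned above; the two models and the synthetic `make_regression` data are illustrative choices.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=500, n_features=10, noise=15.0, random_state=42)
X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.25, random_state=42)

# Two models with different inductive biases tend to make different errors
models = {
    "ridge": Ridge(alpha=1.0),
    "forest": RandomForestRegressor(n_estimators=200, random_state=42),
}
predictions = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    predictions[name] = model.predict(X_valid)
    print(f"{name:>8}: MSE {mean_squared_error(y_valid, predictions[name]):.1f}")

# Simple average ensemble: equal weight for each model
blend = np.mean(list(predictions.values()), axis=0)
blend_mse = mean_squared_error(y_valid, blend)
print(f"   blend: MSE {blend_mse:.1f}")
```

Because squared error is convex, an equal-weight average can never score worse than the mean of the individual models' MSEs; whether it beats the best single model depends on how different their errors are. If you tune blend weights, tune them on validation data rather than on the public leaderboard.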

Data Science Hackathon Tip #7: Discuss! Collaborate!

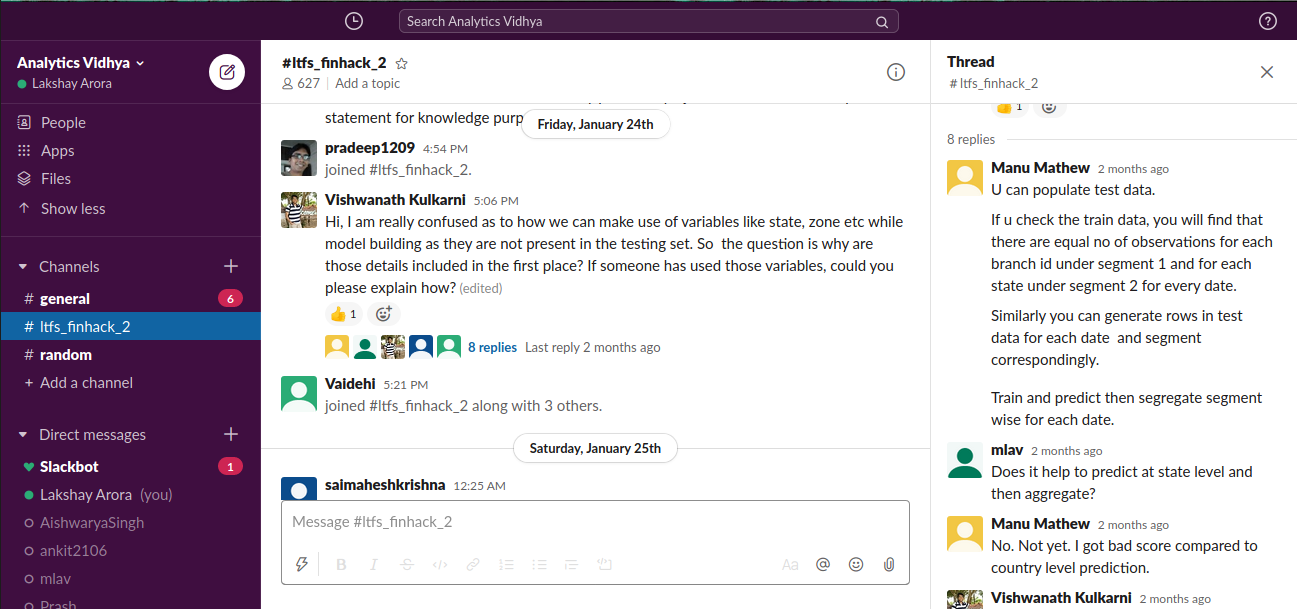

- Stay up to date with forum discussions to make sure that you are not missing out on any obvious detail regarding the problem

- Do not hesitate to ask people questions in the forums or via direct messages

Data Science Hackathon Tip #8: Trust Local Validation

- Do not jump into building models by dumping data into the algorithms. While it is useful to get a sense of basic benchmarks, you need to take a step back and build a robust validation framework

- Without validation, you are just shooting in the dark. You will be at the mercy of overfitting, leakage and other possible evaluation issues

- By replicating the evaluation mechanism locally, you can measure every change against your validation results, improve faster, and make sure your model is robust enough to perform well on different subsets of the train/test data

- Have a robust local validation set and avoid relying too much on the public leaderboard as this might lead to overfitting and can drop your private rank by a lot

- In the Restaurant Revenue Prediction contest, a team that was ranked first on the public leaderboard slipped down to rank 1962 on the private leaderboard

“The first we used to determine which rows are part of the public leaderboard score, while the second is used to determine the correct predictions. Along the way, we encountered much interesting mathematics, computer science, and statistics challenges.”

Source: Kaggle: BAYZ Team
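A local validation loop can be as simple as K-fold cross-validation scored with the same metric as the leaderboard. Below is a minimal sketch, assuming RMSE as the competition metric and using synthetic data; `local_cv_rmse` is a hypothetical helper name. The spread across folds matters as much as the mean: if it is large, a small public-leaderboard gain may be noise.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold


def local_cv_rmse(model, X, y, n_splits=5, seed=0):
    """Return the RMSE of each fold from shuffled K-fold cross-validation."""
    kfold = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    fold_scores = []
    for train_idx, valid_idx in kfold.split(X):
        model.fit(X[train_idx], y[train_idx])
        preds = model.predict(X[valid_idx])
        fold_scores.append(np.sqrt(mean_squared_error(y[valid_idx], preds)))
    return np.array(fold_scores)


X, y = make_regression(n_samples=400, n_features=6, noise=20.0, random_state=1)
scores = local_cv_rmse(Ridge(alpha=1.0), X, y)
print("per-fold RMSE:", np.round(scores, 2))
print(f"mean {scores.mean():.2f} +/- {scores.std():.2f}")
```

If the test set comes from a later period than the training data, a time-ordered split (for example scikit-learn's `TimeSeriesSplit`) mirrors the evaluation better than shuffled folds.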

Data Science Hackathon Tip #9: Keep Evolving

It is not the strongest or the most intelligent who will survive, but those who can best manage change. – often attributed to Charles Darwin

- If you are planning to enter the elite class of data science hackers then one thing is clear – you can’t win with traditional techniques and knowledge.

- Logistic regression or KNN can be a great starting point in a hackathon, but on their own they are unlikely to land you in the top 100.

- Let’s take a simple example: in the early days of NLP hackathons, participants used TF-IDF, then Word2vec came around, and today we have state-of-the-art Transformers. The same goes for computer vision and other fields.

- Keep yourself up to date with the latest research papers and projects on GitHub. This takes a bit of extra effort, but it is worth it.

Data Science Hackathon Tip #10: Use Hindsight to Build your Foresight

- Has it ever happened that after the competition is over, you sit back, relax, maybe think about the things you could have done, and then move on to the next competition? Well, this is the best time to learn!

- Do not stop learning after the competition is over. Read winning solutions of the competition, analyze where you went wrong. After all, learning from mistakes can be very impactful!

- Try to improve your solutions and make notes about what you learn. Share them with friends and colleagues and ask for feedback.

- This will give you a solid head start for your next competition, and you will be much better equipped to tackle the problem statement. DataHack also offers a handy late-submission feature: you can change your code after the hackathon is over, submit the new solution, and check its score.

Data Science Hackathon Tip #11: Refactor your Code

- Imagine living in a room where everything is messy: clothes lying all around, shoes on the shelves, and food on the floor. It's unpleasant, isn't it? The same goes for your code.

- When we start a competition, we are excited and tend to write rough code, copy-pasting snippets from our earlier notebooks and from Stack Overflow. If this continues for the whole notebook, it becomes a mess: understanding your own code starts to consume most of your time, and every change gets harder to make.

- The solution is to refactor your code regularly instead of leaving the cleanup for the end.

- This will also help you team up with other participants and have much better communication.
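One refactoring that pays off quickly is collecting scattered notebook cells into a single, documented preprocessing function that is applied identically to train and test. Below is a minimal sketch; the column names (`Item_Identifier`, `Item_Weight`, `Item_Fat_Content`), the cleaning rules, the helper names, and the toy rows are assumptions for illustration.

```python
import pandas as pd

# Assumed inconsistent labels that should mean the same thing
FAT_CONTENT_MAP = {"LF": "Low Fat", "low fat": "Low Fat", "reg": "Regular"}


def fill_missing_weight(df, reference):
    """Impute Item_Weight in place with the per-item mean learned from `reference`."""
    item_means = reference.groupby("Item_Identifier")["Item_Weight"].mean()
    df["Item_Weight"] = df["Item_Weight"].fillna(df["Item_Identifier"].map(item_means))
    return df


def preprocess(df, reference):
    """All cleaning steps in one place, so train and test get identical treatment."""
    df = df.copy()
    df["Item_Fat_Content"] = df["Item_Fat_Content"].replace(FAT_CONTENT_MAP)
    df = fill_missing_weight(df, reference)
    return df


train = pd.DataFrame({
    "Item_Identifier": ["A1", "A1", "B2"],
    "Item_Weight": [9.3, None, 5.9],
    "Item_Fat_Content": ["Low Fat", "LF", "reg"],
})
test = pd.DataFrame({
    "Item_Identifier": ["A1", "B2"],
    "Item_Weight": [float("nan")] * 2,
    "Item_Fat_Content": ["low fat", "Regular"],
})

train_clean = preprocess(train, reference=train)
test_clean = preprocess(test, reference=train)
print(test_clean)
```

Passing the training frame as `reference` makes it explicit where the imputation statistics come from, which also makes the code easier for a teammate to follow.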

Data Science Hackathon Tip #12: Improve Iteratively

- Many of us follow a linear approach to model building, going through the same sequence every time: data cleaning, EDA, feature engineering, model building, and evaluation. The trick is to understand that it is a circular, iterative process.

- For example, suppose we are building a sales prediction model, get a high MAE, and decide to analyze the predictions sample by sample. It turns out that the model gives poor results for female buyers. We can then take a step back, focus on the Gender feature, do EDA on it, and work out how to improve from there. Here we go back and forth, improving step by step.

- We can also look at some of the strong and important features and combine some of them and check their results. We may or may not see an improvement but we can only figure it out by moving iteratively.

- The key is to be a dynamic learner. Following an iterative process is what can take you into double-digit or even single-digit ranks.
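The error analysis in the sales example above can be sketched as grouping validation errors by a segment. The `Gender` column, the actual and predicted values, and the helper name `error_by_segment` are invented for illustration; with real data you would use validation or out-of-fold predictions.

```python
import pandas as pd


def error_by_segment(df, segment_col, actual_col="actual", pred_col="predicted"):
    """Mean absolute error and row count per value of segment_col, worst first."""
    abs_err = (df[actual_col] - df[pred_col]).abs()
    return (
        abs_err.groupby(df[segment_col])
        .agg(["mean", "count"])
        .rename(columns={"mean": "MAE"})
        .sort_values("MAE", ascending=False)
    )


# Toy validation predictions (invented numbers)
valid = pd.DataFrame({
    "Gender": ["F", "F", "M", "M", "F", "M"],
    "actual": [120.0, 80.0, 100.0, 90.0, 150.0, 110.0],
    "predicted": [90.0, 110.0, 98.0, 94.0, 110.0, 107.0],
})
report = error_by_segment(valid, "Gender")
print(report)
print("overall MAE:", (valid["actual"] - valid["predicted"]).abs().mean())
```

An overall MAE hides where the errors come from; the per-segment table points you at the feature to revisit, which is where the next loop of EDA and feature engineering starts.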

Final Thoughts

These 12 hacks have held me in good stead regardless of the hackathon I’m participating in. Sure, a few tweaks here and there are necessary but having a solid framework and structure in place will take you a long way towards achieving success in data science hackathons.

I would love to hear your frameworks, hacks, and approaches to hackathons. Share your thoughts in the comments section below.

Do you want more hacks, tips, and tricks like these? HackLive is the way to go from zero to hero and master the art of participating in data science competitions. Don’t forget to check out its third edition, HackLive 3.
