Introduction

An easy way to build a video face detection app that detects faces and recognizes happiness through your computer’s camera, using OpenCV in Python

Face detection algorithms have improved at a fast pace over the last few years. Companies and governments are using them in many ways and for all kinds of purposes. Here is a list of my favorites:

- Help the blind

- Aid forensic investigations

- Prevent crime

- Smarter advertising

- Identify people on social media

- Find missing persons

- Protect schools from threats

- Validate identity at ATMs

- Track attendance at church

This tutorial will teach you how to create your own video face detection app in an easy way, so you can get started on this subject. We will use the OpenCV library in Python. In a few minutes, you will have everything you need to start detecting faces. I will provide a link to an article that gives a detailed explanation of the theory behind the algorithms we will be using, so here we will go straight to what really matters, in two sections:

- Code (step by step)

- Bonus (detect happiness)

Let’s begin!

Code

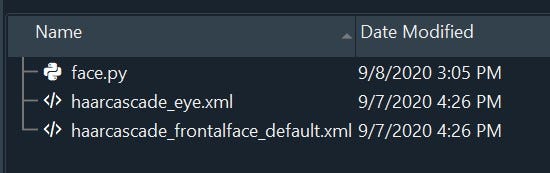

OpenCV uses Haar cascade classifiers to make the detections. Each cascade is an XML file that holds all the parameters your code needs to identify a face or parts of it. You can read more about it in the article I will link at the end with the theory of face detection. Start by copying the face and eye cascades (`haarcascade_frontalface_default.xml` and `haarcascade_eye.xml`, the file names the code below loads) and saving them as XML files in the same folder as your script.

Let’s start coding by importing the library and the XML files.

    import cv2

    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')
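A common stumbling block at this step: `cv2.CascadeClassifier` does not complain when the XML path is wrong, and the error only shows up later, when `detectMultiScale` is called on the empty classifier. The sketch below is one possible pre-flight check, using only the standard library. The helper name `missing_cascades` and the constant `CASCADE_FILES` are made up for this illustration and are not part of OpenCV.

```python
from pathlib import Path

# File names taken from the code in this tutorial.
CASCADE_FILES = ("haarcascade_frontalface_default.xml", "haarcascade_eye.xml")


def missing_cascades(folder, names=CASCADE_FILES):
    """Return the cascade file names that are not present in `folder`."""
    folder = Path(folder)
    return [name for name in names if not (folder / name).is_file()]


# Check the current working directory, where the script expects the files.
missing = missing_cascades(Path.cwd())
if missing:
    print("Missing cascade files:", ", ".join(missing))
else:
    print("All cascade files found.")
```

If the list is not empty, copy the missing files next to your script before going any further.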

Now we will create a function that takes a frame from your camera and looks for faces. After it finds the faces, it looks for eyes inside them. This saves a lot of processing time, because the program never has to look for eyes in the whole frame. I will show the complete function and then explain each step below it.

    # import the necessary packages
    import cv2

    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')

    def detect(gray, frame):
        faces = face_cascade.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            cv2.rectangle(frame, (x, y), (x+w, y+h), (255, 0, 0), 2)
            roi_gray = gray[y:y+h, x:x+w]
            roi_color = frame[y:y+h, x:x+w]
            eyes = eye_cascade.detectMultiScale(roi_gray, 1.1, 18)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex+ew, ey+eh), (0, 255, 0), 2)
        return frame

`def detect(gray, frame):` Most of the time when working with images and machine learning, it’s wise to use grayscale images to deal with less data and improve the efficiency of the code. Here we will do exactly that. This function takes two parameters, gray and frame, where gray is the grayscale version of the last frame obtained from the camera, and frame is the original version of that same frame.

`faces = face_cascade.detectMultiScale(gray, 1.3, 5)` `detectMultiScale` detects objects of different sizes in the input image and returns rectangles positioned on the faces. The first argument is the image, the second is the scaleFactor (how much the image size is reduced at each image scale), and the third is minNeighbors (how many neighboring detections a candidate rectangle needs in order to be kept). The values of 1.3 and 5 are based on experimenting and choosing those that worked best.
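To get a feel for what scaleFactor costs, here is a rough sketch in plain Python that counts how many scales would be tried on one frame. It is a simplified model, not OpenCV's actual implementation: it assumes a square 24-pixel base window and a 640x480 frame, and the function name `detection_scales` is made up for this illustration.

```python
def detection_scales(image_size, scale_factor, window=24):
    """List the scales tried on an image of `image_size` (width, height).

    Simplified model: the search window starts at `window` x `window` pixels
    and grows by `scale_factor` until it no longer fits in the image.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    limit = min(image_size)
    scales = []
    scale = 1.0
    while window * scale <= limit:
        scales.append(scale)
        scale *= scale_factor
    return scales


coarse = detection_scales((640, 480), scale_factor=1.3)
fine = detection_scales((640, 480), scale_factor=1.1)
print(len(coarse), len(fine))
```

A factor closer to 1 means more scales and therefore more work per frame, in exchange for a finer search. That trade-off is why 1.3 is a reasonable choice for the large face search, while 1.1 is affordable inside the much smaller face region.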

`for (x, y, w, h) in faces:` We loop through each rectangle (each face detected) using the coordinates generated by the function we discussed above.

`cv2.rectangle(frame, (x, y), (x+w, y+h), (255, 0, 0), 2)` We are drawing the rectangle on the original image that we captured from the camera (the last frame). The (255, 0, 0) is the color of the rectangle. OpenCV uses BGR order rather than RGB, so this value is blue. The last parameter (2) is the thickness of the rectangle. x is the horizontal initial position, w is the width, y is the vertical initial position, and h is the height.
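Two details of this call are easy to get wrong: `cv2.rectangle` takes two corner points rather than a width and height, and OpenCV orders color channels as blue, green, red. The small sketch below illustrates both with plain tuples. The helper names `rectangle_corners` and `bgr_to_rgb` are made up for this illustration.

```python
def rectangle_corners(x, y, w, h):
    """Convert an (x, y, w, h) detection into top-left and bottom-right corners."""
    return (x, y), (x + w, y + h)


def bgr_to_rgb(color):
    """Reorder a (blue, green, red) tuple into (red, green, blue)."""
    blue, green, red = color
    return (red, green, blue)


top_left, bottom_right = rectangle_corners(50, 80, 100, 120)
print(top_left, bottom_right)   # (50, 80) (150, 200)
print(bgr_to_rgb((255, 0, 0)))  # (0, 0, 255), which is pure blue in RGB
```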

`roi_gray = gray[y:y+h, x:x+w]` Here we are setting roi_gray to be our region of interest. That’s where we will look for the eyes.

`roi_color = frame[y:y+h, x:x+w]` We are getting the same region of interest from the original frame (colored, not black & white).

Now, we apply the same concepts to find the eyes. The difference is that we won’t look inside the whole image. We will be using the region of interest (roi_gray and roi_color), then drawing the rectangles for the eyes in the frame.

            eyes = eye_cascade.detectMultiScale(roi_gray, 1.1, 18)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex+ew, ey+eh), (0, 255, 0), 2)
        return frame
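One thing worth noticing: the eye coordinates are relative to the top-left corner of the face region, not to the full frame. Drawing on roi_color still works because a NumPy slice is a view into frame, so the rectangles land in the right place. If you ever need the eye position in full-frame coordinates, you have to add the face offset yourself. A minimal sketch, with a made-up helper name `roi_to_frame` and made-up sample numbers:

```python
def roi_to_frame(face, detection):
    """Translate a detection found inside a face region into full-frame coordinates.

    face:      (x, y, w, h) of the face in the full frame
    detection: (ex, ey, ew, eh) relative to the top-left corner of the face
    """
    x, y, _, _ = face
    ex, ey, ew, eh = detection
    return (x + ex, y + ey, ew, eh)


face = (200, 100, 160, 160)  # hypothetical face rectangle
eye = (30, 45, 40, 25)       # hypothetical eye rectangle inside that face
print(roi_to_frame(face, eye))  # (230, 145, 40, 25)
```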

Now we will access your camera and use the function we just created. The code will keep getting snapshots from the camera and applying the detect() function to find the face and eyes and draw the rectangles. I will show you the whole block of code and then explain it step by step again.

    video_capture = cv2.VideoCapture(0)
    while True:
        _, frame = video_capture.read()
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        canvas = detect(gray, frame)
        cv2.imshow('Video', canvas)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

`video_capture = cv2.VideoCapture(0)` Here we are accessing your camera. The parameter is the camera index: 0 is usually the computer's built-in camera, and 1 is usually an external camera plugged into your computer.

`while True:` We keep running the code that follows, including the detect function, for as long as the camera is open (until we break out of the loop with a key that we will define).

`_, frame = video_capture.read()` The read() function returns two objects, but we are interested only in the second one, which is the last frame from the camera. So we ignore the first object by assigning it to _, and name the second one frame. We will feed it to our detect() function in a second.
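The first object that read() returns is a success flag. The tutorial code ignores it, which is fine for a quick demo, but if the camera is unavailable the frame is None and the next line fails. Below is one possible way to respect the flag, written as a generator. `frames` and `FakeCapture` are made-up names for this sketch, and `FakeCapture` only stands in for a real camera so the example runs without one.

```python
def frames(capture):
    """Yield frames from `capture` until a read fails.

    `capture` is any object whose read() returns (success, frame),
    as cv2.VideoCapture.read() does.
    """
    while True:
        ok, frame = capture.read()
        if not ok:
            break
        yield frame


class FakeCapture:
    """Hypothetical stand-in for a camera, used only to demonstrate the loop."""

    def __init__(self, items):
        self._items = list(items)

    def read(self):
        if self._items:
            return True, self._items.pop(0)
        return False, None


print(list(frames(FakeCapture(["frame-1", "frame-2"]))))  # ['frame-1', 'frame-2']
```

With a real camera you would write `for frame in frames(video_capture):` in place of the `while True:` loop and keep the rest of the body unchanged.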

`gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)` We take the frame we just read and convert it to grayscale. We name it gray, and we will use it to feed our detect function as well.
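For the curious, the conversion is a weighted sum of the three channels, with the weights OpenCV documents for color-to-gray conversion (0.299 for red, 0.587 for green, 0.114 for blue). The sketch below applies it to a single pixel in plain Python. The function name `bgr_to_gray` is made up for this illustration, and OpenCV's own rounding may differ slightly.

```python
def bgr_to_gray(blue, green, red):
    """Weighted luminance of one pixel, rounded to an integer in 0..255."""
    return round(0.299 * red + 0.587 * green + 0.114 * blue)


print(bgr_to_gray(255, 255, 255))  # 255: white stays white
print(bgr_to_gray(0, 0, 0))        # 0: black stays black
print(bgr_to_gray(255, 0, 0))      # 29: pure blue is dark in grayscale
```

Three values per pixel become one, which is the "less data" the detector benefits from.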

`canvas = detect(gray, frame)` canvas is the frame returned by detect(), with the rectangles drawn on it. This is the image we will display.

`cv2.imshow('Video', canvas)` imshow() displays an image in a window. Here it shows canvas (the output of the detect() function) in a window titled `Video`.

`if cv2.waitKey(1) & 0xFF == ord('q'): break` This piece of code stops the program when you press q on the keyboard.
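The `& 0xFF` part can look mysterious. waitKey() returns an integer key code, or -1 when no key was pressed during the wait, and on some platforms the code carries extra high bits. Masking with 0xFF keeps only the lowest byte, which is then compared with the code for q. A small sketch of the same test in plain Python, with the made-up helper name `is_quit_key`:

```python
QUIT_KEY = ord("q")  # 113


def is_quit_key(key_code):
    """Return True when the low byte of `key_code` is the code for 'q'."""
    return (key_code & 0xFF) == QUIT_KEY


print(is_quit_key(ord("q")))             # True
print(is_quit_key(-1))                   # False: -1 means no key was pressed
print(is_quit_key(0x100000 | ord("q")))  # True: extra high bits are masked off
```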

Now, we just need to turn off the camera and close the window when we stop the program. We use the following syntax:

    video_capture.release()  # Turn off the camera
    cv2.destroyAllWindows()  # Close the window
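These two lines only run if the loop ends normally. If the program crashes or is interrupted, the camera may stay locked until the process exits. One optional way to guard against that is a small context manager that always releases the camera. This is a sketch, not part of the original tutorial: `released` and `FakeCapture` are made-up names, and `FakeCapture` replaces the real camera so the example runs anywhere.

```python
from contextlib import contextmanager


@contextmanager
def released(capture, cleanup=None):
    """Yield `capture`, then always call capture.release() and `cleanup`."""
    try:
        yield capture
    finally:
        capture.release()
        if cleanup is not None:
            cleanup()


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture, used only for the demo."""

    def __init__(self):
        self.is_released = False

    def release(self):
        self.is_released = True


camera = FakeCapture()
try:
    with released(camera):
        raise KeyboardInterrupt  # simulate the user pressing Ctrl+C mid-loop
except KeyboardInterrupt:
    pass
print(camera.is_released)  # True
```

With OpenCV, the call would look like `with released(cv2.VideoCapture(0), cleanup=cv2.destroyAllWindows) as video_capture:` followed by the capture loop.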

That’s it. You are all set to detect faces and eyes. Here is the whole code put together.

    import cv2

    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')

    def detect(gray, frame):
        faces = face_cascade.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            cv2.rectangle(frame, (x, y), (x+w, y+h), (255, 0, 0), 2)
            roi_gray = gray[y:y+h, x:x+w]
            roi_color = frame[y:y+h, x:x+w]
            eyes = eye_cascade.detectMultiScale(roi_gray, 1.1, 18)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex+ew, ey+eh), (0, 255, 0), 2)
        return frame

    video_capture = cv2.VideoCapture(0)
    while True:
        _, frame = video_capture.read()
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        canvas = detect(gray, frame)
        cv2.imshow('Video', canvas)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    video_capture.release()  # Turn off the camera
    cv2.destroyAllWindows()  # Close the window

Now run everything together and a new window will pop up.

Bonus Section

Happiness detection!! Let’s include a few more lines of code in the program so we can detect when someone is smiling.

Start by copying the Haar cascade for smiles and reading the XML file as follows (write it right below the lines where you read the XML files for faces and eyes, at the beginning of your code):

    smile_cascade = cv2.CascadeClassifier('haarcascade_smile.xml')

When we detect faces, we get regions of interest to look for eyes, right? After looking for eyes, let’s look for a smile as well. The code for smiles goes inside the face loop of the detect() function, right after the eye loop and before the return frame. The parameters follow the same concept we saw for faces and eyes, and they were chosen by experimenting to find the values that worked best.

            smiles = smile_cascade.detectMultiScale(roi_gray, 1.1, 22)
            for (sx, sy, sw, sh) in smiles:
                cv2.rectangle(roi_color, (sx, sy), (sx+sw, sy+sh), (0, 0, 255), 2)
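The code above only draws rectangles. If you want a plain yes or no answer for "is this face smiling?", one simple option is to check whether any smile rectangle came back. This is a sketch under that assumption, not something the original code does, and `is_smiling` is a made-up helper name. Using len() matters here: detectMultiScale typically returns an empty tuple when nothing is found and a NumPy array otherwise, and a plain `if smiles:` raises a ValueError on a non-empty array of rectangles.

```python
def is_smiling(smiles):
    """Return True when at least one smile rectangle was detected.

    len() works for an empty tuple, a list, and a NumPy array alike.
    """
    return len(smiles) > 0


print(is_smiling(()))                  # False: nothing detected
print(is_smiling([(40, 90, 80, 30)]))  # True: one (sx, sy, sw, sh) rectangle
```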

Here is the complete code adding happiness detection:

    # Library
    import cv2

    # Haar Cascades
    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')
    smile_cascade = cv2.CascadeClassifier('haarcascade_smile.xml')

    # Detect function
    def detect(gray, frame):
        faces = face_cascade.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            cv2.rectangle(frame, (x, y), (x+w, y+h), (255, 0, 0), 2)
            roi_gray = gray[y:y+h, x:x+w]
            roi_color = frame[y:y+h, x:x+w]
            eyes = eye_cascade.detectMultiScale(roi_gray, 1.1, 18)
            for (ex, ey, ew, eh) in eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex+ew, ey+eh), (0, 255, 0), 2)
            smiles = smile_cascade.detectMultiScale(roi_gray, 1.1, 22)
            for (sx, sy, sw, sh) in smiles:
                cv2.rectangle(roi_color, (sx, sy), (sx+sw, sy+sh), (0, 0, 255), 2)
        return frame

    # Accessing the camera and applying the detect() function
    video_capture = cv2.VideoCapture(0)
    while True:
        _, frame = video_capture.read()
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        canvas = detect(gray, frame)
        cv2.imshow('Video', canvas)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Closing everything
    video_capture.release()  # Turn off the camera
    cv2.destroyAllWindows()  # Close the window


There you go! This was a simple way to detect faces, eyes, and happiness. There are more robust approaches to face detection, though they are more complicated, and I will cover them in tutorials over the next couple of months. For now, enjoy your new skills detecting objects in videos!