In the field of machine learning, optimizing model performance often necessitates selecting the most relevant features to accurately predict the target variable. Forward Feature Selection and Backward Elimination emerge as pivotal techniques, providing systematic approaches to incrementally build models by either adding informative features from the feature set (Forward Feature Selection) or removing irrelevant features (Backward Elimination). This article delves into the essence of Forward Feature Selection, exemplifying its application through a practical fitness level prediction scenario.

Table of contents

- What is Forward Feature Selection in Machine Learning?

- Forward Feature Selection in Python Example

- Steps to Perform Forward Feature Selection

- Feature Importance of Forward Feature Selection

- Forward Feature Selection in Python Tutorial

- Importing Necessary Libraries

- Loading and Exploring the Dataset

- Defining Target and Independent Variables

- Installing Required Libraries

- Importing Models and Feature Selector

- Training the Model Using Forward Feature Selection

- Printing Selected Feature Names

- Creating a New DataFrame with Selected Features

- Checking Shape of Original and New Datasets

- Conclusion

- Frequently Asked Questions

What is Forward Feature Selection in Machine Learning?

Forward Feature Selection is a feature selection technique that iteratively builds a model by adding one feature at a time, selecting the feature that maximizes model performance. It starts with an empty set of features and adds the most predictive feature in each iteration until a stopping criterion is met. This method is particularly useful when dealing with a large number of features, as it incrementally builds the model based on the most informative features. This process involves assessing new features, evaluating combinations of features, and selecting the optimal subset of features that best contribute to model accuracy. Similarly, Backward Elimination systematically removes features from the model one at a time, evaluating the impact on model performance until no further improvement is observed, ultimately identifying the subset of features that yield the best predictive power.

Forward Feature Selection in Python Example

We’ll use an example of fitness level prediction based on three independent variables: Calories_Burnt, Gender, and Plays_Sport.

The first step in Forward Feature Selection is to train n models, one per feature, and check the performance of each. With three independent variables, we train three models, each using a single feature. Let’s say we train a model using the Calories_Burnt feature and the target variable, Fitness_Level, and get an accuracy of 87%.

Next, we train a model using the Gender feature and get an accuracy of 80%.

Similarly, the Plays_Sport variable gives us an accuracy of 85%.

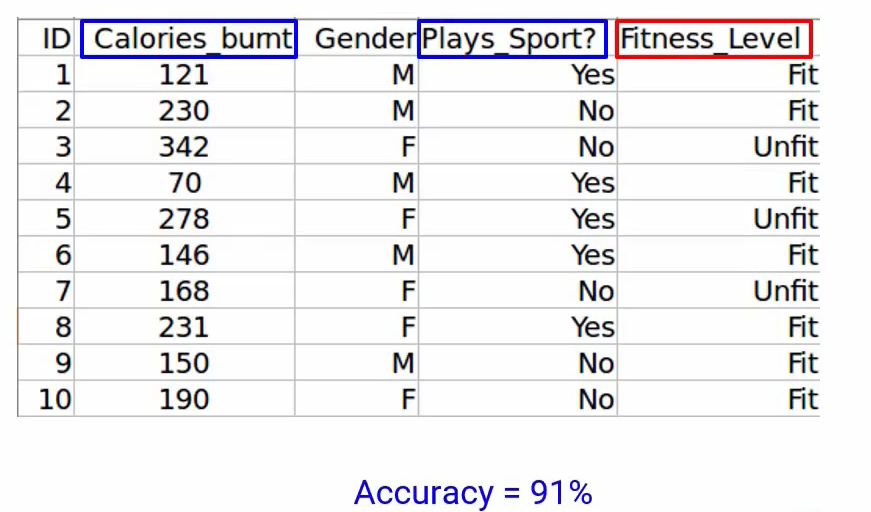

Now we choose the variable that gives the best performance. Calories_Burnt alone gives an accuracy of 87%, Gender gives 80%, and Plays_Sport gives 85%. Comparing these values, Calories_Burnt produced the best result, so we select this variable.

Next, we repeat this process, adding one variable at a time. We keep the Calories_Burnt variable and try each remaining variable alongside it. Adding Gender gives an accuracy of 88%.

Taking Plays_Sport along with Calories_Burnt gives an accuracy of 91%. The variable that produces the highest improvement is retained, which makes intuitive sense. Since Plays_Sport gives better accuracy when combined with Calories_Burnt, we retain it and select it for our model. We repeat the entire process until there is no significant improvement in the model’s performance.
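The two rounds of the walkthrough can be replayed in a few lines. This is only a sketch of the selection logic: it looks up the accuracies quoted in the text instead of training any model, and the dictionary names `round_1` and `round_2` are made up for illustration.

```python
# Accuracies quoted in the walkthrough (no model is trained here).
round_1 = {
    ("Calories_Burnt",): 0.87,
    ("Gender",): 0.80,
    ("Plays_Sport",): 0.85,
}
round_2 = {
    ("Calories_Burnt", "Gender"): 0.88,
    ("Calories_Burnt", "Plays_Sport"): 0.91,
}

# Round 1: keep the single feature with the highest accuracy.
best_1 = max(round_1, key=round_1.get)

# Round 2: keep the pair (best feature plus one more) with the highest accuracy.
best_2 = max(round_2, key=round_2.get)

# Improvement gained by adding the second feature.
gain = round_2[best_2] - round_1[best_1]

print("Round 1 winner:", best_1)
print("Round 2 winner:", best_2)
print("Accuracy gain:", round(gain, 2))
```

In a real run, each dictionary value would come from fitting and scoring a model on that feature subset.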

Summary:

Steps to Perform Forward Feature Selection

- Train n models, each using a single feature, and check the performance of each.

- Choose the variable that gives the best performance.

- Repeat the process, adding one variable at a time to the variables already selected.

- Retain the variable that produces the highest improvement.

- Repeat the entire process until there is no significant improvement in the model’s performance.
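The steps above can be sketched from scratch with NumPy. This is a minimal illustration under stated assumptions, not the implementation used later in this article: the data is synthetic, the model is ordinary least squares scored by mean squared error on a held-out split, and the helper names (`holdout_mse`, `forward_select`) and the `min_improvement` threshold are hypothetical.

```python
import numpy as np


def holdout_mse(X_train, y_train, X_test, y_test, cols):
    """Fit least squares (with intercept) on the given columns; return test MSE."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train[:, cols]])
    A_test = np.column_stack([np.ones(len(X_test)), X_test[:, cols]])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((y_test - A_test @ coef) ** 2))


def forward_select(X_train, y_train, X_test, y_test, min_improvement=0.05):
    """Greedy forward selection. Stops when the best remaining candidate
    lowers held-out MSE by less than min_improvement (an assumed threshold)."""
    selected = []
    remaining = list(range(X_train.shape[1]))
    # Baseline: predict the training mean for every test row.
    best_mse = float(np.mean((y_test - y_train.mean()) ** 2))
    while remaining:
        # Score each candidate together with the features already kept.
        scores = {
            j: holdout_mse(X_train, y_train, X_test, y_test, selected + [j])
            for j in remaining
        }
        best_j = min(scores, key=scores.get)
        if best_mse - scores[best_j] < min_improvement:
            break  # no significant improvement: stop
        selected.append(best_j)
        remaining.remove(best_j)
        best_mse = scores[best_j]
    return selected, best_mse


# Synthetic data (an assumption): only columns 0 and 3 carry signal.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(400, 5))
y_demo = 5 * X_demo[:, 0] + 2 * X_demo[:, 3] + rng.normal(scale=0.1, size=400)

selected, final_mse = forward_select(
    X_demo[:300], y_demo[:300], X_demo[300:], y_demo[300:]
)
print("Selected columns:", selected)
print("Held-out MSE:", final_mse)
```

A single held-out split keeps the sketch short; cross-validation is the more common choice in practice because it gives a less noisy score for each candidate subset.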

Feature Importance of Forward Feature Selection

Important features selected through Forward Feature Selection are chosen iteratively based on their individual contributions to the model’s predictive performance. In the fitness level prediction example, potential features could include:

- Calories Burnt: The amount of calories burned during physical activity, likely a significant predictor of fitness levels.

- Gender: Biological sex may influence fitness levels, making it a relevant feature.

- Plays Sport: Engagement in sports activities could correlate with higher fitness levels, making it another valuable predictor.

These important features are evaluated individually and in combination to determine their impact on model accuracy, guiding the selection process during Forward Feature Selection.

Forward Feature Selection in Python Tutorial

In this tutorial, we implement Forward Feature Selection in Python to systematically select features for a machine learning model. We’ll walk through selecting a subset of informative features from the full feature set, including how to choose the desired number of features (k_features in the selector used here), with the aim of good accuracy and generalization.

Importing Necessary Libraries

In this step, we import the Pandas library, which provides data structures and functions for data manipulation and analysis. We’ll use Pandas to read and explore the dataset.

#importing the libraries

import pandas as pd

Loading and Exploring the Dataset

Here, we load the dataset into a Pandas DataFrame using the pd.read_csv() function. We then display the first few rows of the dataset using data.head() to get an initial overview.

#reading the file

data = pd.read_csv('forward_feature_selection.csv')

# first 5 rows of the data

data.head()

We have the ‘count’ target variable and the other independent variables. Let’s check out the shape of our data:

#shape of the data

data.shape

The data has 12,980 observations and 9 columns. Perfect! Are there any missing values?

# checking missing values in the data

data.isnull().sum()

Nope! There are none, we can move on.

Defining Target and Independent Variables

In this step, we separate the dataset into independent variables (features) and the target variable. The X variable contains all the independent features except ‘ID’ and ‘count’, while y contains the target variable ‘count’. We check the shapes of X and y to ensure they have been defined correctly.

# creating the training data

X = data.drop(['ID', 'count'], axis=1)

y = data['count']

Let’s look at the shapes of both:

X.shape, y.shape

Installing Required Libraries

Here, we install the mlxtend library, which provides implementations of various feature selection algorithms, including Sequential Feature Selector (SFS), which we’ll use for forward feature selection.

!pip install mlxtend

Importing Models and Feature Selector

# importing the models

from mlxtend.feature_selection import SequentialFeatureSelector as sfs

from sklearn.linear_model import LinearRegression

Let’s go ahead and set up our model. Here, similar to what we did in the backward elimination technique, we first create the Linear Regression model. Then we define the feature selector:

# calling the linear regression model

lreg = LinearRegression()

sfs1 = sfs(lreg, k_features=4, forward=True, verbose=2, scoring='neg_mean_squared_error')

Let me quickly recap the parameters of the feature selector. The first argument is the estimator, lreg, which is our linear regression model.

k_features tells the selector how many features to select. We’ve passed 4, so the search runs until 4 features have been selected.

Here’s the difference between implementing Backward Elimination and Forward Feature Selection: the forward parameter is set to True, which tells the selector to add features one at a time. We set it to False for the backward feature elimination technique.

Next, verbose=2 prints a progress summary at each iteration.

Finally, since this is a regression model evaluated with mean squared error, we set scoring='neg_mean_squared_error'. The metric is negated because the selector treats a higher score as better, so maximizing the negative MSE is the same as minimizing the MSE.
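For comparison, scikit-learn (version 0.24 and later) ships its own SequentialFeatureSelector with slightly different parameter names: n_features_to_select instead of k_features, and direction="forward" or "backward" instead of the forward flag. The sketch below is an alternative to the mlxtend call used in this tutorial, not a replacement for it. It runs on synthetic data (an assumption, since the tutorial's CSV is not reproduced here) and also shows that neg_mean_squared_error scores are never positive.

```python
import numpy as np
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

# Synthetic data (an assumption): only columns 1 and 4 carry signal.
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(300, 6))
y_toy = 5 * X_toy[:, 1] + 2 * X_toy[:, 4] + rng.normal(scale=0.1, size=300)

# Forward selection of 2 features, scored by cross-validated negative MSE.
selector = SequentialFeatureSelector(
    LinearRegression(),
    n_features_to_select=2,
    direction="forward",
    scoring="neg_mean_squared_error",
    cv=5,
)
selector.fit(X_toy, y_toy)

# Column indices of the selected features.
chosen = selector.get_support(indices=True)
print("Selected columns:", chosen)

# The scorer returns negated MSE, so values closer to zero are better.
scores = cross_val_score(
    LinearRegression(),
    X_toy[:, chosen],
    y_toy,
    scoring="neg_mean_squared_error",
    cv=5,
)
print("Negative MSE per fold:", scores)
```

Setting direction="backward" in the same call gives backward elimination, mirroring forward=False in mlxtend.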

Training the Model Using Forward Feature Selection

Let’s go ahead and fit the model. Here we go!

sfs1 = sfs1.fit(X, y)

Printing Selected Feature Names

We can see that the model was trained until four features were selected. Let me print the feature names:

feat_names = list(sfs1.k_feature_names_)

print(feat_names)

These look pretty familiar: holiday, working day, temp and humidity. These are exactly the features that were selected by the backward elimination method. Awesome, right?

Creating a New DataFrame with Selected Features

But keep in mind that this might not be the case when you’re working on a different problem. This is not a rule; it just happened to occur with our particular data. So let’s put these features into a new data frame and print the first five observations:

# creating a new dataframe using the above variables and adding the target variable

new_data = data[feat_names].copy()  # copy so the column assignment below does not raise SettingWithCopyWarning

new_data['count'] = data['count']

# first five rows of the new data

new_data.head()

Checking Shape of Original and New Datasets

Perfect! Lastly, let’s have a look at the shape of both datasets:

# shape of new and original data

new_data.shape, data.shape

A quick look at the shape of the two subsets does confirm that we have indeed selected four variables from our original data.

Hope this tutorial was fun!

Conclusion

In conclusion, Forward Feature Selection emerges as a valuable technique for incrementally constructing models by incorporating informative features, thereby enhancing prediction accuracy and simplifying model interpretation. By iteratively selecting features based on their individual performance, this method provides a systematic approach to feature selection, especially advantageous for datasets with numerous variables. Through practical implementation and example scenarios, we’ve showcased the efficacy of Forward Feature Selection in optimizing model performance.

As you go deeper into data science, this technique gives you a practical tool for refining predictive models and extracting meaningful insights from data. Regularization can further improve model robustness, and evaluating on a held-out test set checks how the model performs on unseen data.

Key Takeaways

- Forward Feature Selection iteratively adds features, optimizing model accuracy by selecting informative features incrementally.

- It enhances model interpretability and reduces dimensionality, aiding in understanding and explaining model predictions.

- Forward selection adds features sequentially to maximize model performance, while backward selection removes features iteratively to reduce model complexity.

- Forward model selection starts with an empty feature subset and adds the most predictive feature in each iteration.

- Benefits include improved prediction accuracy, reduced overfitting, and optimized classifier performance.

- Various methods like backward elimination, recursive feature elimination, and filter methods complement forward selection, improving classifier performance and reducing dimensionality.

- Wrapper methods such as Recursive Feature Elimination (RFE) and Sequential Feature Selector (SFS) are available in scikit-learn (this tutorial used the mlxtend implementation of SFS). Pair them with an appropriate evaluation metric, such as AUC for classifiers, to check robustness and generalization.

Frequently Asked Questions

A. Forward feature selection involves iteratively adding features to a model based on their performance, thereby optimizing model accuracy by selecting the most informative features incrementally. This method helps in reducing dimensionality and improving the interpretability of the model.

A. Forward selection adds features one by one to maximize model performance, while backward selection iteratively removes features to minimize model complexity. Both techniques aim to enhance model accuracy and interpretability while reducing dimensionality.

A. To conduct forward model selection, begin with an empty subset of features and sequentially add the most predictive feature in each iteration. Assess model improvement until a stopping criterion is met, such as reaching a predefined accuracy threshold or a specified number of features.

A. The benefits of forward feature selection include enhanced model interpretability, improved prediction accuracy by selecting informative features, and mitigation of overfitting by incrementally building the model. This approach aids in reducing dimensionality and optimizing classifier performance, selecting the best features for the task.

A. Feature selection methods encompass forward selection, backward elimination, recursive feature elimination, and filter methods like variance thresholding and correlation-based selection. These techniques assist in selecting subsets of relevant features, thereby improving classifier performance and reducing dimensionality to identify the best features.
