This article was published as a part of the Data Science Blogathon.

Introduction to Centroid Tracker

In this article, we are going to design a counter system using OpenCV in Python that tracks moving objects using Euclidean distance tracking and contour detection.

You might have worked on computer vision before. Have you ever thought of tracking a single object wherever it goes?

Object tracking is the task of following the same object across video frames and keeping its identity consistent wherever it moves.

There are multiple techniques for implementing object tracking with OpenCV, and they fall into two categories:

- Single Object Tracking

- Multiple Object Tracking

In this article, we will build a multiple object tracker, since our goal is to count the number of vehicles that pass during a given time frame.

Popular Tracking Algorithms

Tracking algorithms vary in complexity and computational cost. Two popular options are:

- DEEP SORT: DEEP SORT is one of the most widely used tracking algorithms, and it is very effective because it uses deep learning techniques. It is typically paired with a YOLO object detector and uses a Kalman filter to predict object motion.

- Centroid Tracking algorithm: The centroid tracking algorithm is a multi-step process. It tracks each object's center point using a simple Euclidean distance calculation, and it can easily be implemented with custom code.

In this article, we will use the centroid tracking algorithm to build our tracker.

Steps Involved in Centroid Tracking Algorithm

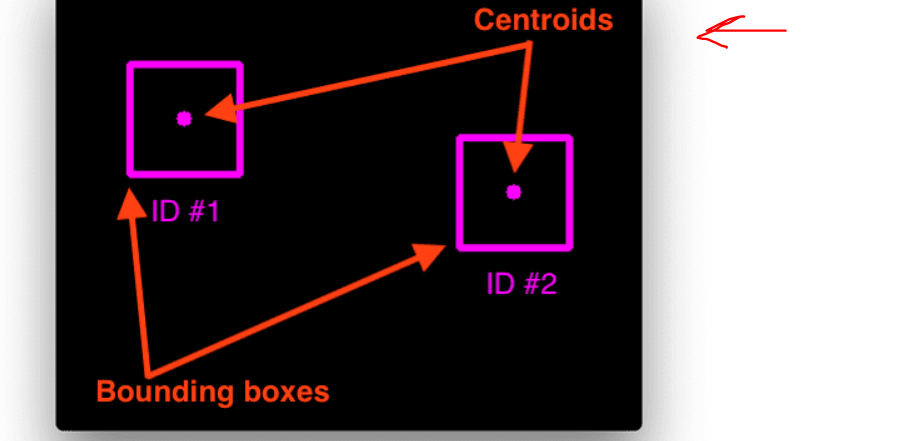

Step 1. Calculate the centroid of each detected object from its bounding box coordinates.
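As a small illustration of Step 1, the centroid is simply the midpoint of the bounding box. The helper name centroid is hypothetical; it uses the same integer floor division as the tracker code later in this article, so the result is a whole-pixel coordinate.

```python
def centroid(box):
    """Return the integer centre (cx, cy) of an (x, y, w, h) bounding box."""
    x, y, w, h = box
    # Midpoint of the left/right and top/bottom edges, floored to whole pixels
    return (x + x + w) // 2, (y + y + h) // 2


print(centroid((10, 20, 30, 40)))  # (25, 40)
```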

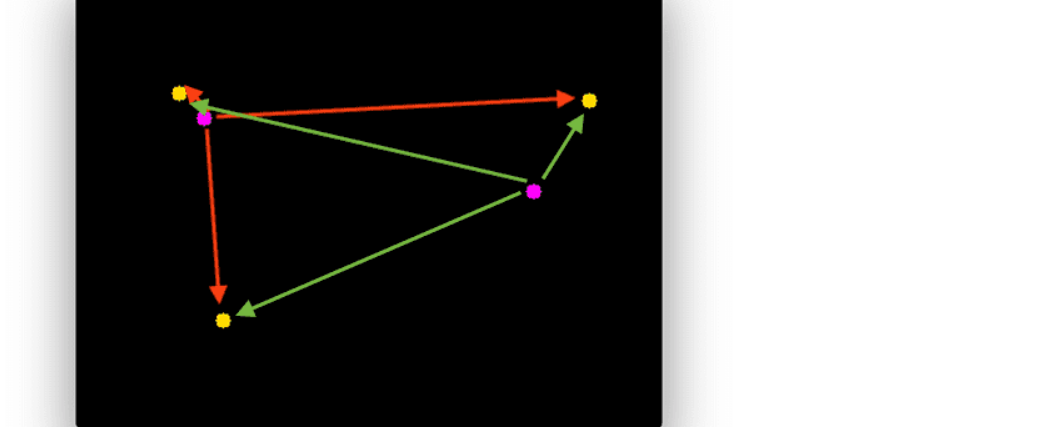

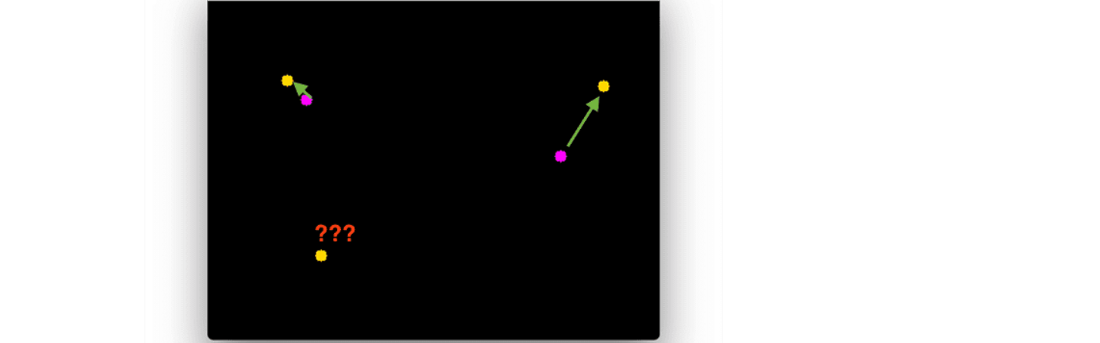

Step 2. For every subsequent frame, the algorithm does the same: it computes the centroid from the bounding box coordinates and assigns an ID to every bounding box it detects. It then computes the Euclidean distance between every possible pair of centroids from the previous and current frames.

Step 3. We assume that an object moves only a short distance between frames, so the pair of centroids with the minimum distance across subsequent frames is considered to be the same object.

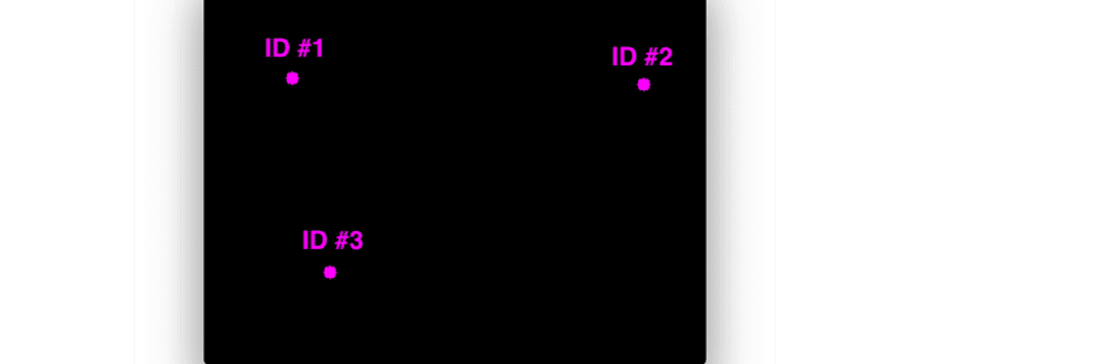

Step 4. Assign IDs to the moved centroids: each matched centroid keeps the ID of the object it was matched to, and a centroid with no match is registered as a new object with a new ID.
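Steps 2 to 4 can be sketched as a greedy nearest-centroid matcher. This is a minimal illustration under stated assumptions, not the article's tracker: the function name match_centroids and its signature are hypothetical, and max_distance=25 simply mirrors the 25-pixel threshold used in the tracker code below. Each new centroid is matched to the closest old centroid that has not been claimed yet; unlike a globally optimal assignment (for example the Hungarian algorithm), detections are handled one at a time.

```python
import math


def match_centroids(old_points, new_points, next_id, max_distance=25):
    """Greedy nearest-centroid matching between two frames (hypothetical helper).

    old_points: dict {object_id: (cx, cy)} from the previous frame
    new_points: list of (cx, cy) centroids detected in the current frame
    next_id: first ID to hand out to newly appearing objects
    Returns (assignments, next_id), where assignments is {object_id: (cx, cy)}.
    """
    unmatched_old = dict(old_points)
    assignments = {}
    for point in new_points:
        # Step 2: Euclidean distance to every old centroid not yet claimed
        best_id, best_dist = None, float("inf")
        for obj_id, old_point in unmatched_old.items():
            dist = math.dist(point, old_point)
            if dist < best_dist:
                best_id, best_dist = obj_id, dist
        if best_id is not None and best_dist < max_distance:
            # Steps 3-4: the closest old centroid is the same object, so reuse its ID
            assignments[best_id] = point
            del unmatched_old[best_id]
        else:
            # Step 4: nothing close enough, so this is a new object with a new ID
            assignments[next_id] = point
            next_id += 1
    return assignments, next_id


old = {0: (100, 50), 1: (300, 80)}
new = [(305, 84), (104, 52), (500, 200)]
assignments, next_id = match_centroids(old, new, next_id=2)
print(assignments)  # {1: (305, 84), 0: (104, 52), 2: (500, 200)}
print(next_id)      # 3
```

Passing next_id in explicitly, rather than deriving it from the old IDs, avoids reusing the ID of an object that left the scene.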

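We will use the frame subtraction technique to detect moving objects: the absolute difference between consecutive frames, |F(t+1) - F(t)|, highlights the pixels that changed, which belong to the moving object.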
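A minimal NumPy sketch of frame subtraction on two synthetic grayscale frames; the helper name frame_difference is hypothetical. In the OpenCV pipeline this step is done by cv2.absdiff, which gives the same values for 8-bit images. Note that where the object overlaps itself in both frames the difference is zero, so mainly its leading and trailing edges show up.

```python
import numpy as np


def frame_difference(prev_frame, next_frame):
    """Absolute per-pixel difference |F(t+1) - F(t)| for uint8 images."""
    # Widen to int16 so the subtraction cannot wrap around below zero
    diff = next_frame.astype(np.int16) - prev_frame.astype(np.int16)
    return np.abs(diff).astype(np.uint8)


# Two synthetic 5x5 frames: a bright 2x2 "object" moves one pixel to the right
f_t = np.zeros((5, 5), dtype=np.uint8)
f_t[1:3, 1:3] = 200
f_t1 = np.zeros((5, 5), dtype=np.uint8)
f_t1[1:3, 2:4] = 200

moved = frame_difference(f_t, f_t1)
print(np.argwhere(moved > 0).tolist())  # [[1, 1], [1, 3], [2, 1], [2, 3]]
```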

Object Tracking Use-Cases

Object tracking is becoming more robust thanks to growing computational resources and ongoing research. It is used extensively in several major applications:

- Traffic Tracking and Collision Protection Services

- Tracking Unwanted Behaviours

- Vehicle Tracking

- Tracking in Malls and Shopping Complexes

- Security Systems

Implementation of Euclidean Distance Tracker in Python

We have built a class, EuclideanDistTracker, that combines all the steps we learned above.

It contains all the mathematical calculations behind the Euclidean distance tracker.

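```python
import math


class EuclideanDistTracker:
    def __init__(self):
        # Centre points of the objects tracked so far: {object_id: (cx, cy)}
        self.center_points = {}
        # Next ID to hand out; incremented every time a new object is detected
        self.id_count = 0

    def update(self, objects_rect):
        # Bounding boxes and IDs for the current frame: [x, y, w, h, object_id]
        objects_bbs_ids = []

        for rect in objects_rect:
            x, y, w, h = rect
            # Step 1: centre of the bounding box
            center_x = (x + x + w) // 2
            center_y = (y + y + h) // 2

            # Check whether this object was already detected in the previous frame
            same_object_detected = False
            for object_id, pt in self.center_points.items():
                # Euclidean distance between the new centre and a stored centre
                distance = math.hypot(center_x - pt[0], center_y - pt[1])

                # Within 25 px: treat it as the same object (first match wins)
                if distance < 25:
                    self.center_points[object_id] = (center_x, center_y)
                    objects_bbs_ids.append([x, y, w, h, object_id])
                    same_object_detected = True
                    break

            # No stored centre is close enough: register a new object ID
            if not same_object_detected:
                self.center_points[self.id_count] = (center_x, center_y)
                objects_bbs_ids.append([x, y, w, h, self.id_count])
                self.id_count += 1

        # Keep only the centres of objects seen in this frame; drop the rest
        new_center_points = {}
        for _, _, _, _, object_id in objects_bbs_ids:
            new_center_points[object_id] = self.center_points[object_id]

        self.center_points = new_center_points.copy()
        return objects_bbs_ids
```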

You can download all the source code used in this article using this link. To avoid copy-paste mistakes, I suggest downloading the tracker file from the link.

Save the code above in a Python file named tracker.py. We will import our tracker class from it in the detection script. The tracker.py file can also be downloaded using this link.

- update accepts a list of bounding box coordinates, each in the form [x, y, w, h].

- The tracker returns a list of [x, y, w, h, object_id] entries.

- x, y, w, h are the bounding box coordinates, and object_id is the ID assigned to that particular bounding box.

- After creating the tracker, we need to implement an object detector whose detections are fed into the tracker.
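Since the goal is a vehicle counter, one simple approach is to count the distinct object IDs the tracker reports over time. This is a hedged sketch, not necessarily how the author counts vehicles: the helper count_unique_objects and the sample frames are hypothetical, and the count is only as reliable as the tracker, because an object that is lost and re-detected receives a new ID and is counted twice.

```python
def count_unique_objects(tracker_outputs):
    """Count distinct object IDs across frames (hypothetical helper).

    tracker_outputs: iterable of per-frame lists, each entry [x, y, w, h, object_id]
    """
    seen_ids = set()
    for boxes_ids in tracker_outputs:
        for _, _, _, _, object_id in boxes_ids:
            seen_ids.add(object_id)
    return len(seen_ids)


# Made-up tracker output for three frames: object 0, then objects 0 and 1, then object 1
frames = [
    [[10, 20, 30, 40, 0]],
    [[14, 22, 30, 40, 0], [200, 50, 25, 25, 1]],
    [[210, 55, 25, 25, 1]],
]
print(count_unique_objects(frames))  # 2
```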

Importing Libraries and Video Feed

Importing the necessary packages along with our tracker class which we recently made.

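```python
import cv2
import numpy as np

from tracker import EuclideanDistTracker

# Create the tracker object defined in tracker.py
tracker = EuclideanDistTracker()

# Open the video and read the first two frames
cap = cv2.VideoCapture('highway.mp4')
ret, frame1 = cap.read()
ret, frame2 = cap.read()
```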

We are using a highway video that comes with OpenCV as a sample video.

cap.read() reads the next frame and returns two values: a boolean that is True if the frame was read successfully, and the frame itself.
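When cap.read() returns False (for example at the end of the video), the frame is None, so it is worth checking the boolean before using the frame. A minimal sketch, assuming only that the capture object has a read() method returning (ret, frame) as cv2.VideoCapture does; frame_pairs is a hypothetical helper, and FakeCapture is a hypothetical stand-in so the example runs without a video file.

```python
def frame_pairs(cap):
    """Yield (previous, current) frame pairs until cap.read() fails.

    cap: any object whose read() returns (ret, frame), e.g. cv2.VideoCapture.
    """
    ret, prev = cap.read()
    if not ret:
        return
    while True:
        ret, curr = cap.read()
        if not ret:  # end of video or read error: the frame would be None
            return
        yield prev, curr
        prev = curr


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture that replays a list of frames."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None


print(list(frame_pairs(FakeCapture(["f0", "f1", "f2"]))))  # [('f0', 'f1'), ('f1', 'f2')]
```

With OpenCV, the same generator could drive the main loop, e.g. for frame1, frame2 in frame_pairs(cv2.VideoCapture('highway.mp4')).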

Video Feed in OpenCV

In this section, we will detect moving objects by comparing two consecutive frames and passing the detections to our tracker object.

The tracker takes the coordinates of the bounding boxes detected around the moving objects. We will filter out noise by requiring a minimum contour area.
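cv2.contourArea returns the area of the polygon traced by a contour's points. The pure-Python sketch below illustrates the minimum-area filter with the shoelace formula; polygon_area and keep_large_contours are hypothetical helpers, contours are plain lists of (x, y) tuples rather than OpenCV arrays, and min_area=300 mirrors the threshold used in this article.

```python
def polygon_area(points):
    """Shoelace formula: area of the polygon traced by (x, y) vertices."""
    total = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def keep_large_contours(contours, min_area=300):
    """Drop contours whose enclosed area is below min_area (treated as noise)."""
    return [c for c in contours if polygon_area(c) >= min_area]


big = [(0, 0), (20, 0), (20, 20), (0, 20)]    # area 400, kept
small = [(0, 0), (10, 0), (10, 10), (0, 10)]  # area 100, dropped as noise
print(len(keep_large_contours([big, small])))  # 1
```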

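```python
while cap.isOpened():
    # Difference between two consecutive frames highlights moving pixels
    diff = cv2.absdiff(frame1, frame2)
    gray = cv2.cvtColor(diff, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (5, 5), 0)

    # Binary mask of changed pixels (the threshold value 20 is an assumed value)
    _, threshold = cv2.threshold(blur, 20, 255, cv2.THRESH_BINARY)
    # Dilate to fill small holes; None uses OpenCV's default 3x3 kernel
    dilated = cv2.dilate(threshold, None, iterations=1)
    # OpenCV 4.x returns (contours, hierarchy)
    contours, _ = cv2.findContours(dilated, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    detections = []
    for contour in contours:
        # Ignore small contours, which are usually noise
        if cv2.contourArea(contour) < 300:
            continue
        (x, y, w, h) = cv2.boundingRect(contour)
        detections.append([x, y, w, h])

    # Assign IDs to the detected boxes and draw them
    boxes_ids = tracker.update(detections)
    for box_id in boxes_ids:
        x, y, w, h, object_id = box_id
        cv2.putText(frame1, str(object_id), (x, y - 15), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 0, 255), 2)
        cv2.rectangle(frame1, (x, y), (x + w, y + h), (0, 255, 0), 2)

    cv2.imshow('frame', frame1)

    # Move to the next pair of frames; stop when the video ends
    frame1 = frame2
    ret, frame2 = cap.read()
    if not ret:
        break

    key = cv2.waitKey(30)
    if key == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```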

- cv2.absdiff computes the absolute difference between two consecutive frames, which highlights the changes between them.

- Before thresholding and contour detection, we convert the difference image to grayscale and blur it to suppress noise.

- We then apply thresholding, followed by contour detection for all the detected regions.

- After finding the contours, we discard those with an area below 300; every remaining contour is considered a valid object.

- boxes_ids, returned by the tracker, contains [x, y, w, h, id] for each bounding box: its coordinates and the ID associated with it.
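The thresholding step turns the blurred difference image into a black-and-white mask: pixels that changed enough become white, everything else black. A NumPy sketch of what cv2.threshold does with cv2.THRESH_BINARY (output is maxval where the source is strictly greater than thresh, else 0); binary_threshold is a hypothetical helper, and the threshold value 20 is an assumed value, not one taken from the article.

```python
import numpy as np


def binary_threshold(gray, thresh=20, maxval=255):
    """NumPy equivalent of cv2.threshold(gray, thresh, maxval, cv2.THRESH_BINARY)[1]."""
    # Pixels strictly greater than thresh become maxval; everything else becomes 0
    return np.where(gray > thresh, maxval, 0).astype(np.uint8)


# Small differences (0, 5, 20) are treated as noise; larger ones count as motion
diff = np.array([[0, 5, 30],
                 [21, 20, 200]], dtype=np.uint8)
mask = binary_threshold(diff)
print(mask.tolist())  # [[0, 0, 255], [255, 0, 255]]
```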

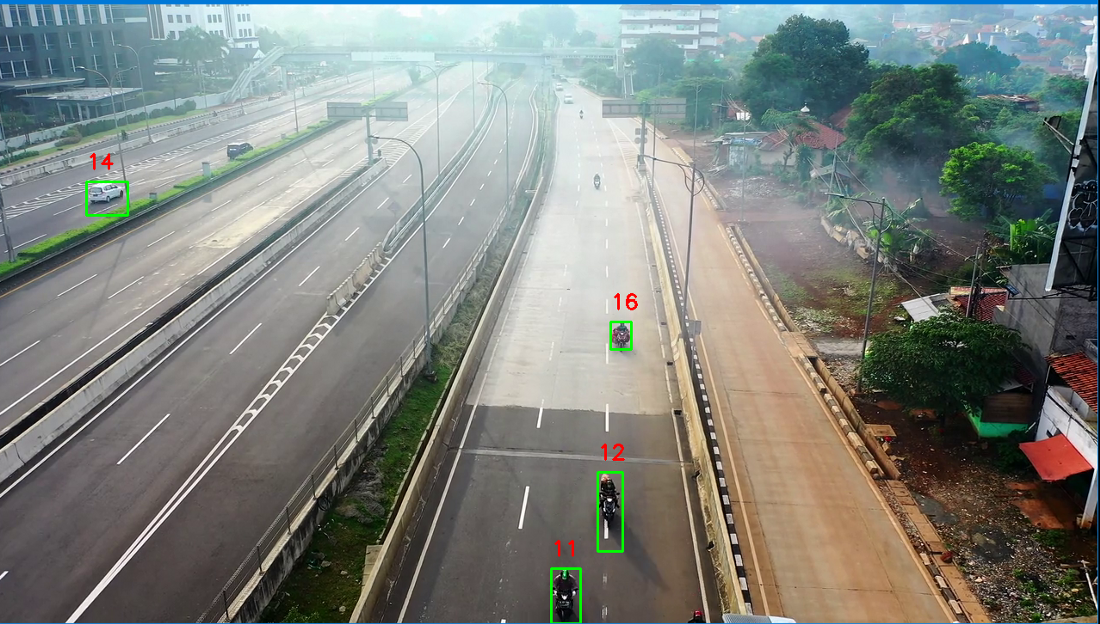

Output Frame:

Conclusion

In this article, we talked about object detection and tracking using OpenCV, and we used a Euclidean tracker to track our objects.

Deep learning-based trackers, such as YOLO combined with DEEP SORT, can give better results, but they are computationally more expensive.

The centroid tracker also does not take the camera angle into account; to address this, we would need to transform the frames to a bird's-eye view before calculating distances.

We built a vehicle counter system using the concept we discussed in this article.

- Centroid tracking is a multi-step process that uses simple math to calculate the distance between all pairs of centroids.

- The pair of centroids with the minimum distance between them is considered to be the same object.

- DEEP SORT is a more robust technique for object tracking, at a higher computational cost.

- On its own, a centroid tracker is often not robust enough for real-world projects.

- Because it requires little computation, the centroid tracker runs smoothly on edge devices.

I hope you enjoyed reading this article!

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.