Introduction

Knowledge is a treasure, but practice is the key to it.

Lao Tzu's philosophy matches the thinking behind AV hackathons. We believe knowledge is only useful when it is applied and tested time and again. The motive behind AV hackathons is to challenge yourself and realize your true potential.

Our recent hackathon, “The Smart Recruits”, was a phenomenal success, and we are deeply thankful to our community for their participation. More than 2,500 data aspirants made close to 10,000 submissions over a weekend, competing for the coveted top spot.

It was a 48-hour data science hackathon in which we challenged our community with a real-life machine learning problem. The competition was fierce, with top data scientists competing against each other.

As you know, the best part of our community is that people share their knowledge and approaches with fellow members. Read on to find the winners' secret recipes, which should help you improve further.

The Competition

The competition launched at midnight on 23rd July with more than 2,500 registrations and 48 hours on the clock, and, as expected, our Slack channel was soon buzzing with discussion. Participants were pleasantly surprised by the data set and quickly realized it would not be a cakewalk. Overnight, the heat rose and the hackathon platform was bustling with ideas and execution strategies.

Participants were required to help Fintro, a financial distribution company, assess which potential agents would be profitable for the company. The evaluation metric was AUC-ROC.
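AUC-ROC measures how well a model ranks positive cases (here, agents who turn out to be profitable) above negative ones, independent of the absolute predicted scores. It equals the probability that a randomly chosen positive scores higher than a randomly chosen negative. Below is a minimal standard-library sketch of that rank-sum (Mann-Whitney U) formulation; the function name `roc_auc` is our own, and in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
def roc_auc(y_true, y_score):
    """AUC-ROC via the rank-sum (Mann-Whitney U) formulation.

    y_true: iterable of 0/1 labels; y_score: predicted scores (higher = more likely positive).
    Tied scores receive the average of the ranks they span.
    """
    pairs = sorted(zip(y_score, y_true))  # sort rows by predicted score
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j to cover a block of tied scores.
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for the tie block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1

    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC is undefined when only one class is present")

    # Sum of ranks of the positives, minus the smallest possible such sum,
    # gives the number of (positive, negative) pairs ranked correctly.
    rank_sum_pos = sum(r for r, (_, y) in zip(ranks, pairs) if y == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Because only the ordering of scores matters, any feature that helps the model put positives ahead of negatives lifts AUC, which is worth keeping in mind when reading the winners' approaches.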

The Problem Set

Fintro is an offline financial distribution company that has operated across India for the past 10 years. It sells financial products to consumers with the help of agents. Managers at Fintro identify the right talent and recruit these agents. Once hired, candidates undergo 7 days of training and must clear an assessment to become agents with Fintro. The agents work as freelancers and are paid a commission on each product they sell.

However, Fintro is struggling to generate enough business from its agents.

The problem – Who are the best agents?

Fintro invests considerable time and money in recruiting and training agents. It expects agents to have selling skills and to generate as much business for the company as possible. But some agents do not perform as expected.

Fintro has shared the details of all the agents it recruited from 2007 to 2009. The data contains demographics of the agents hired and of the managers who hired them. Fintro wants data scientists to draw insights from this past recruitment data and help it identify and hire potential agents.

Winners

The winners used different approaches to climb the leaderboard. Below are the top 3 teams:

Rank 1 – Rohan Rao

Rank 2 – Sudalai Rajkumar and Mark Landry

Rank 3 – Yaasna Dua and Kanishk Agarwal (Qwerty Team)

Here’s the final ranking of all participants on the leaderboard.

For the benefit of the rest of the community, all three winning teams shared the approach and code they used in The Smart Recruits.

Rank 3: Yaasna Dua (New Delhi, India) and Kanishk Agarwal (Bengaluru, India) – Qwerty Team


Yaasna Dua is an Associate Data Scientist at Info Edge and Kanishk Agarwal is an Associate Data Scientist at Sapient Global Networks. They participated together as the Qwerty Team and were the first to find the time-based insight about applications received on a given day.

They said:

This was our first hackathon on Analytics Vidhya. We followed CRISP-DM and concentrated on feature engineering. We ended up creating the following features:

- Applicant’s age and manager’s age on the day the application was received

- Manager’s experience in Fintro when the application was received

- Whether the manager was promoted

- PIN code difference, which was a proxy for how far the applicant’s city was from the office
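The features above can be sketched roughly as follows. This is not the team's code (theirs is linked below); the column names (`application_date`, `applicant_birth_date`, `manager_joining_designation`, `office_pin`, and so on) are hypothetical stand-ins for the Fintro data. We also assume that "promoted" means the manager's current designation differs from the joining designation, and that the PIN difference is the absolute numeric gap between the two PIN codes. Indian PIN codes are hierarchical (leading digits encode region and district), so a small gap loosely suggests nearby locations. Missing values, which real recruitment data usually has, are not handled here.

```python
from datetime import date


def years_between(earlier: date, later: date) -> float:
    """Approximate elapsed years between two dates (365.25-day years)."""
    return (later - earlier).days / 365.25


def engineer_features(row: dict) -> dict:
    """Build the listed features for one application (hypothetical column names)."""
    received = row["application_date"]
    return {
        # Ages measured on the day the application was received.
        "applicant_age": years_between(row["applicant_birth_date"], received),
        "manager_age": years_between(row["manager_birth_date"], received),
        # Manager's tenure at Fintro when the application arrived.
        "manager_experience": years_between(row["manager_joining_date"], received),
        # Assumption: a designation change since joining counts as a promotion.
        "manager_promoted": int(
            row["manager_joining_designation"] != row["manager_current_designation"]
        ),
        # Rough distance proxy: absolute gap between the two PIN codes.
        "pin_difference": abs(row["office_pin"] - row["applicant_city_pin"]),
    }


example = {
    "application_date": date(2008, 1, 1),
    "applicant_birth_date": date(1980, 1, 1),
    "manager_birth_date": date(1970, 1, 1),
    "manager_joining_date": date(2005, 1, 1),
    "manager_joining_designation": "Level 1",
    "manager_current_designation": "Level 2",
    "office_pin": 842001,
    "applicant_city_pin": 844120,
}
print(engineer_features(example))
```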

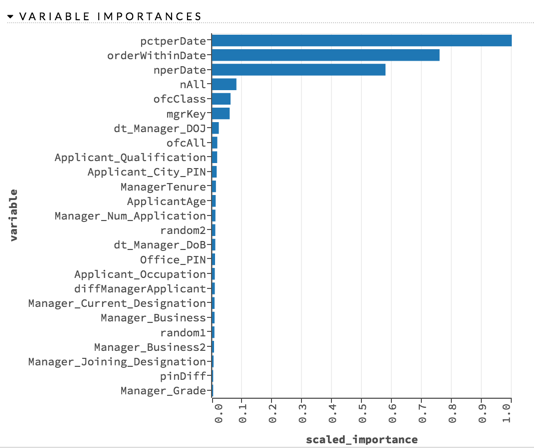

But feature engineering did not give us much of a gain. Then we struck gold: Kanishk discovered that the target variable, when grouped by application date, gave a constant percentage! We incorporated this feature into our model and our score shot up. We tried random forest, XGBoost and extra trees; extra trees gave us the best result. Unfortunately, we did not have enough time to tune the model. Nevertheless, it was a good learning experience, and we want to thank Analytics Vidhya for it.
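The grouping Kanishk describes, looking at the target variable per application date, can be sketched as a simple aggregation over the training rows. This is only an illustration of the analysis step, not the team's feature code; the function name and the toy dates and labels below are made up for the example.

```python
from collections import defaultdict


def positive_rate_by_date(dates, targets):
    """Group rows by application date.

    Returns {date: (number_of_applications, share_of_positive_targets)}.
    """
    counts = defaultdict(int)
    positives = defaultdict(int)
    for d, y in zip(dates, targets):
        counts[d] += 1
        positives[d] += y
    return {d: (counts[d], positives[d] / counts[d]) for d in counts}


# Toy, made-up rows: two application dates with 0/1 targets.
toy_dates = ["2007-04-16"] * 4 + ["2007-04-17"] * 2
toy_targets = [1, 1, 0, 0, 1, 0]
for day, (n, rate) in sorted(positive_rate_by_date(toy_dates, toy_targets).items()):
    print(day, n, rate)
```

If the per-day share of positives barely varies across dates, as the team observed, the date (and a row's place within it) carries information that a model can exploit.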

Solution: Link to Code

Rank 2: Sudalai Rajkumar (Chennai, India) and Mark Landry (San Francisco, USA)


Sudalai Rajkumar is Lead Data Scientist at FreshDesk and Mark Landry is a Competitive Data Scientist and Product Manager at H2O.ai. They worked together as a team to finish second in The Smart Recruits. Their deep knowledge of machine learning and analytics has earned them high rankings on Kaggle.

They shared:

The solution progression comes in two stages: before the application structure was identified, and after it.

Solution: Link to Code


Rank 1: Rohan Rao (Bengaluru, India)

Rohan Rao is a Lead Data Scientist at AdWyze. Rohan is adept at machine learning and also won our last hackathon (Rank 1 in Seer’s Accuracy).

He says:

The cross-validation (CV) and leaderboard (LB) scores weren’t moving in sync. This made things tricky, and with scores so close, a shake-up between the public and private leaderboards was expected. I dug back into the data looking for a feature or pattern that could boost my model, and this proved to be a great decision.

While plotting the target variable for a sample of days, I found a glaring pattern: within a day, a large proportion of the positive samples appeared in the first half, and vice versa. At first, it seemed too good to be true. I quickly created a feature based on this ordering and saw a big jump in CV score, crossing the 0.8 mark. On exploring the pattern in detail, I was unsure whether it was leakage or simply a pattern in which applications received earlier in the day are more likely to be accepted than later ones. Either way, the data showed it, and I used it in my model.
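An ordering feature of the kind Rohan describes can be sketched as each row's relative position among the applications received on the same date: 0.0 for the first, 1.0 for the last. This is an illustrative reconstruction, not his code. It assumes the rows are listed in the order in which applications were received on each day, which the article does not confirm, and the function name is our own.

```python
from collections import defaultdict


def within_day_position(dates):
    """Relative position of each row among rows sharing its application date.

    Rows are assumed to be in order of receipt. Returns a list aligned with
    `dates`: 0.0 for the first application of a day, 1.0 for the last, and
    0.0 when a day has only one application.
    """
    totals = defaultdict(int)
    for d in dates:
        totals[d] += 1

    seen = defaultdict(int)  # how many rows of each date we have passed so far
    positions = []
    for d in dates:
        idx = seen[d]
        seen[d] += 1
        n = totals[d]
        positions.append(idx / (n - 1) if n > 1 else 0.0)
    return positions


print(within_day_position(["a", "a", "b", "a", "b"]))  # [0.0, 0.5, 0.0, 1.0, 1.0]
```

Given the pattern described above, lower values of this feature would tend to go with positive targets. As Rohan notes, a feature like this may reflect leakage from how the data was assembled rather than genuine applicant quality, so it is worth treating with caution outside a competition setting.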
