Introduction

A large number of E-Commerce and tech companies rely on real time training and predictions for their products. Google predicts real time click-through rates for their ads. This is used as an input to their auction mechanism, apart from a bid from the advertiser to decide which ads to show to the user.

Stackoverflow uses real time predictions to automatically tag a question with the correct programming language so that they reach the right asker. An election management team might want to predict real time sentiment using Twitter to assess the impact of their campaign.

Such datasets are also characterized by their large size. For training the model, we can always take a sub-sample of the data, but its a rather unreliable method. There’s a good chance we might miss out on a significant amount of information.

Is there a solution that tackles both these problems? As it turns out, there is. In this article, we will discuss a comparison of batch learning and online learning. In the second section, we’ll look at an example of text classification using an online learning framework called Vowpal Wabbit (VW).

Table of Contents

- Online Learning Vs Batch Learning

- Vowpal Wabbit (VW) – A framework for online learning

- Input Format

- VW for text classification

- Training

- Testing

- n-grams

- Model Interpretability

- Regularization

Online Learning Vs Batch Learning

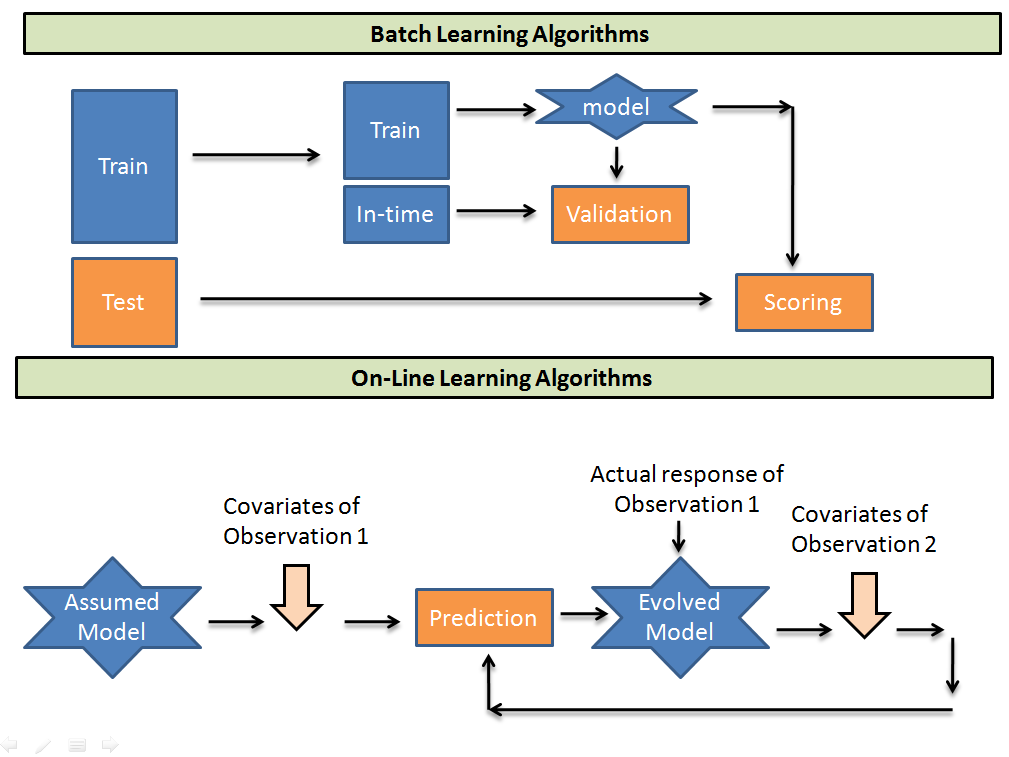

- Online learning is a method of machine learning that stores and processes only one training example at a time sequentially. It assumes an initial predictive model and updates its parameters for future predictions at each step. Other algorithms based on batch learning perform training on the whole batch.

- Batch learning requires the whole batch to be stored in the memory and once we have some new training data, the model has to retrain on the entire set again. Since online learning processes only one training example at a time, its storage requirements are met even by an average system that is able to fit at least one training example in memory.

- In online learning, we update our parameters by adding (minus sign) the gradient computed on a single instance of the dataset. Since it’s based on one random data point, it’s very noisy and may go off in a direction far from the batch gradient. Batch learning updates are based on a batch of training examples and hence intermediate updates are much more stable.

Let’s say we want to build a model that identifies spam emails. One way is to download a large corpus of emails, train a model on it and subsequently test it on unseen examples. This is called offline learning. Another way is to take in each email sequentially and continuously update the parameters in a classifier. You guessed it – this is online learning.

Going back to our example of spam classification, imagine a situation where the spammers have found a work-around and started bypassing the existing spam classifier. Two teams have been tasked to solve the problem – The Batch Learning Team and the Online Learning team.

- This scenario will be a nightmare for the Batch Learning Team. They will first have to label the new spam emails as spam. Following that, they will retrain the model on the entire dataset to get the new model and then deploy it in production.

- The Online Learning Team will still have to label the new spam, but they can do away with retraining the model on the whole dataset. Instead, they can feed the stream of freshly labelled data to an already existing online learning model. They can sit back as it updates the existing model and learns new parameters from each new sample sequentially.

Vowpal Wabbit – A Framework for Online Learning

SkLearn has SGDClassifier and SGDRegressor from sklearn.linear_model. These are nice implementations of SGD/online learning but we’ll focus on Vowpal Wabbit as it’s superior to sklearn’s SGD models in many aspects, including computational performance. The Vowpal Wabbit (VW) project is a fast online learning framework sponsored by Microsoft Research and (previously) Yahoo! Research.

When it comes to categorical variables, label encoding or one hot encoding are popular methods of dealing with it. These are good, if we are able to store and process the whole dataset. But online learning does not have the liberty of looking at the complete dataset. Also, real data can be volatile and we cannot guarantee that new values of categorical features will not be added at some point. This issue hampers the use of the trained model when some new data is introduced,

This is where Vowpal Wabbit comes into the picture.

The idea is very simple: convert data into a vector of features. When this is done using hashing, we call the method “feature hashing” or “the hashing trick”.

I’ll explain how it works with a simple example using text as data.

Let’s say our text is:

“the great blue whale”

We would like to represent this as a vector. The first thing we need to do is fix the length of the vector, i.e., the number of dimensions we are going to use. For the purpose of this example, we will take 5 dimensions.

Once we fix the number of dimensions, we will need a hash function that will take a string and return a number between 0 and n-1 (in our case between 0 and 4). Any good hash function can be used. We will use h(string) mod n to make it return a number between 0 and n-1. h(string) generates a large number based on the number of bits allotted.

I’ll compute the results for each word in our text:

h(the) mod 5 = 0

h(great) mod 5 = 1

h(blue) mod 5 = 1

h(whale) mod 5 = 3

Once we have this, we can simply construct our vector as:

(1,2,0,1,0)

Notice that we just add 1 to the nth dimension of the vector each time our hash function returns that dimension for a word in the text.

Vowpal Wabbit is so incredibly fast in part due to the hashing trick. With many features and a small-sized hash, collisions (i.e. when we have the same hash for 2 different features) start occurring. These collisions may influence the results. Often for the worse, but not necessarily: Multiple features sharing the same hash can have a PCA-like effect of dimensionality reduction.

If you feel hashing is really hurting the performance of your model, you could increase the number of bits required to produce the hash. Read more about hash functions here.

It’s friendly to online learning where you can train on a dataset that doesn’t fit in memory because you need to see each example only once. One-hot encoding/Label Encoding will not work with online learning because to prepare dictionaries you need to see whole dataset first.

Input Format

Vowpal Wabbit reads data from files or from standard input stream (stdin) assuming the following format:

[Label] [Importance] [Tag]|Namespace Features |Namespace Features … |Namespace FeaturesNamespace=String[:Value]

Features=(String[:Value] )*

here [] denotes non-mandatory elements, and (…)* means some repeats.

- Label is a number. In case of classification it is usually 1 and -1, in case of regression it is some real float value

- Importance is a number, it denotes sample weight during training. Setting this helps when working with imbalanced data

- Tag is some string without spaces – it is a “name” of sample, VW saves it upon prediction. In order to separate Tag and Importance it is better to start Tag with the ‘ character

- Namespace is for creation of separate feature spaces

- Features are object features inside Namespace. Features have weight 1.0 by default, but it can be changed, for example – feature:0.1

VW for text classification

Consider a setting where a movie production company wants to build a real time IMDB review extraction and prediction system. This is a binary classification problem and the task is to predict whether a particular review is positive or negative. The data provided has only the actual reviews as text. Here, I will show you how easy it is to work with text data using Vowpal Wabbit.

Installing Vowpal Wabbit is easy and can be done using the documentation provided at this link.

VW can only be used via command line syntax. Help related to all the functions can be seen using the following command:

!vw --help

The review dataset is provided in the form of text files at the provided link. The following piece of python code helps to read all the text files and combine it into a single file.

import os

import numpy as np

import re

from sklearn.datasets import load_files

from sklearn.metrics import accuracy_score, roc_auc_score

# Read the train Data

path_to_movies = os.path.expanduser('/home/analyticsvidhya/IMDB/')

reviews_train = load_files(os.path.join(path_to_movies, 'train'))

text_train, y_train = reviews_train.data, reviews_train.target

Next, we will convert the text to the Vowpal Wabbit format described above.

Here, it is difficult to put the words (features) in different namespaces and hence we will just consider all the words separately without considering the interaction features.

We have done a very basic removal of all the words with less than three characters (like ‘is’, ‘in’ etc.) because these do not add any valuable information to the model.

def to_vw_format(document, label=None):

return str(label or '') + ' |text ' + ' '.join(re.findall('\w{3,}', document.lower())) + '\n'

to_vw_format(str(text_train[1]), 1 if y_train[0] == 1 else -1)

# Splitting train data to train and validation sets

train_size = int(0.7 * len(text_train))

train, train_labels = text_train[:train_size], y_train[:train_share]

valid, valid_labels = text_train[train_size:], y_train[train_share:]

# Convert and save in vowpal wabbit format

with open('movie_reviews_train.vw', 'w') as vw_train_data:

for text, target in zip(train, train_labels):

vw_train_data.write(to_vw_format(str(text), 1 if target == 1 else -1))

with open('movie_reviews_valid.vw', 'w') as vw_train_data:

for text, target in zip(valid, valid_labels):

vw_train_data.write(to_vw_format(str(text), 1 if target == 1 else -1))

# Splitting train data to train and validation sets

train_size = int(0.7 * len(text_train))

train, train_labels = text_train[:train_size], y_train[:train_share]

valid, valid_labels = text_train[train_size:], y_train[train_share:]

# Convert and save in vowpal wabbit format

with open('movie_reviews_train.vw', 'w') as vw_train_data:

for text, target in zip(train, train_labels):

vw_train_data.write(to_vw_format(str(text), 1 if target == 1 else -1))

with open('movie_reviews_valid.vw', 'w') as vw_train_data:

for text, target in zip(valid, valid_labels):

vw_train_data.write(to_vw_format(str(text), 1 if target == 1 else -1))

VW can be used at the terminal or command line. If you prefer using the python notebook, you can use ‘!’ before the command to run it like any other python code.

Training

# Fitting a logistic regression for predicting the sentiment of a review !vw -d movie_reviews_train.vw --loss_function logistic -f movie_reviews_model.vw

Now, let’s examine each of the syntax elements in the above command line function.

- -d This is used to include the path to train the data for the command line.

- –loss_function This is used to declare the loss function (e.g. squared, logistic, hinge etc.).

- -f This is used to save the model for using it on a different dataset.

Testing

!vw -i movie_reviews_model.vw -t -d movie_reviews_valid.vw -p movie_valid_pred.txt --quiet

- -i Read the model from the given file

- -t -d Declare that we are dealing with dataset without labels (test) at this path

- -p Save predictions to this file

- –quiet Do not print any steps taken for prediction

with open('movie_valid_pred.txt') as pred_file:

valid_prediction = [float(label) for label in pred_file.readlines()]

print("Accuracy: {}".format(round(accuracy_score(valid_labels, [int(pred_prob > 0) for pred_prob in valid_prediction]), 5)))

print("AUC: {}".format(round(roc_auc_score(valid_labels, valid_prediction), 5)))

That is quite an impressive accuracy for such a simple model and it only gets better as we stream more data to VW.

We have built a decent model by just feeding it the actual words and a label. And we did this in a fraction of a second on an average system! Now, let’s try hinge loss as the loss function to check if we can improve the results.

Hinge loss did not show any significant improvement. Let’s move on to adding more features via n-grams.

n-grams

VW also supports other functionalities specifically for text data.

For those new to the Natural Language Processing landscape, n-grams are a continuous sequence of n words inside a text. For example, in the sentence:

“Hi, I don’t like dark places”

‘don’t like’ and ‘dark places’ are examples of bi-grams (n =2).

Let us briefly discuss how n-grams can provide a boost to accuracy. We’ll take the phrase – ‘not the best experience’. This phrase has a definite negative sentiment, but if we don’t use n-grams, a positive weight will be learnt owing to the presence of the word ‘best’. However, if we include bi-grams, ‘not best’ will be a separate feature and will have a more negative weight which is appropriate in this case.

VW supports n-grams via command line tags. Let us try including bi-grams in our model to see whether they improve the overall accuracy.

!vw -d movie_reviews_train.vw --loss_function logistic --ngram 2 -f movie_reviews_model_bigram.vw --quiet

![]()

Indeed, we get a better accuracy and a higher AUC after including the bi-grams in the model. We can also try including tri-grams or higher to see if we can get a further boost in the accuracy.

Model Interpretability

How do we interpret the model we just created? VW has a one line solution for it. We just need to replace -f with –invert_hash and it will produce a model file that is human readable.

!vw -d movie_reviews_train.vw --loss_function logistic --ngram 2 --invert_hash movie_reviews_readable_model_bigram.vw

We can see the weights learnt for various bi-grams in the above screenshot. ‘abominably, being a strictly negative word, produces mostly negative weights except in a few cases where the other word is highly positive, like ‘best’.

Regularization

L1 and L2 regularization may be added with the –l1 and –l2 options respectively.

!vw -d movie_reviews_train.vw --l1 0.00005 --l2 0.00005 --loss_function logistic --ngram 2 -f movie_reviews_model_bigram.vw

End Notes

Text data is not the only domain where online learning is useful. It performs really well for CTR (Click-through Rate) predictions and supports many different optimization methods. I plan to cover these in a future article.

Meanwhile, please let me know in the comments if you have ever used VW or online learning to solve a problem. Detailed documentation on VW can be viewed here.

Thank you so much for this excellent write-up. Learned a lot and got motivated to learn more.

Thanks for the kind words!

Thanks!. Great content. Could you provide an example of how to update an existing vowpal model with more additional data? I think it would provide great value to the post. Would it be something like this: !vw -i existing.model -f new.model more_observations.dat (from stackoverflow: https://stackoverflow.com/a/31324121/6413835 )

That is correct Luis. Thanks for the kind words!

Thanks for the kind words. Yes, I will include this in my next article.