Introduction

TensorFlow is one of the most popular open source libraries in the Machine Learning and Deep Learning community. We see breakthroughs in diverse fields on a weekly basis and more often than not, TensorFlow is at the heart of the final model.

Since its release, the TensorFlow team has continuously worked on improving the library by making it simpler and more interactive for users. The TensorFlow Developer Summit is an event that brings together TensorFlow users from all over the globe to see the new products, tools, libraries, and use cases presented by the TensorFlow team and industry leaders.

Following the overwhelming response to the first TensorFlow Developer Summit in 2017, this year’s TensorFlow Developer Summit was held on March 30th, 2018, at the Computer History Museum in Mountain View, CA. More than 500 TensorFlow users attended the summit and thousands of others connected via a live stream.

The Summit had many exciting announcements, demos and tech talks. Below we have provided the highlights from every session presented at the summit.

Sessions presented at the Summit

- Keynote (by Anitha Vijayakumar, Rajat Monga, Megan Kacholia and Jeff Dean)

- The tf.data Library (by Derek Murray)

- Eager Execution (by Alexandre Passos)

- ML in JavaScript: TensorFlow.js (by Daniel Smilkov and Nikhil Thorat)

- Training Performance (by Brennan Saeta)

- Practitioner’s guide with TF High Level API (by Mustafa Ispir)

- Distributed TensorFlow (by Igor Saprykin)

- Debugging TensorFlow with TensorBoard plugins (by Justine Tunney and Shanqing Cai)

- TensorFlow Lite (by Sarah Sirajuddin and Andrew Selle)

- Searching over Ideas (by Vijay Vasudevan)

- Reconstructing Fusion Plasmas (by Ian Langmore)

- Nucleus: TensorFlow toolkit for Genomics (by Cory McLean)

- Opensource Collaboration (by Edd Wilder-James)

- Swift for TensorFlow (by Chris Lattner and Richard Wei)

- TensorFlow Hub (by Andrew Gasparovic and Jeremiah Harmsen)

- TensorFlow Extended (by Clemens Mewald and Raz Mathias)

- Applied AI at the Coca-Cola Company (by Patrick Brandt)

- Real World Robot Learning (by Alexander Irpan)

- Project Magenta (by Sherol Chen)

1. Keynote

Speakers: Anitha Vijayakumar, Rajat Monga, Megan Kacholia and Jeff Dean

Anitha Vijayakumar, technical program manager for TensorFlow, kicked off the summit by talking about the various fields where machine learning is currently being used extensively. A few examples she cited were:

- Astrophysicists and engineers used machine learning to analyze data from the Kepler mission and discovered a new planet, Kepler-90i.

- In healthcare, TensorFlow and machine learning techniques are used to determine a person’s risk of cardiovascular (CV) disease by analyzing retina scans. You can read more about it here.

- Air traffic controllers and engineers in Europe are using TensorFlow to predict flight trajectories to ensure safe landing.

- Machine learning, along with deep neural networks, is used to learn the characteristics of sound and create new music.

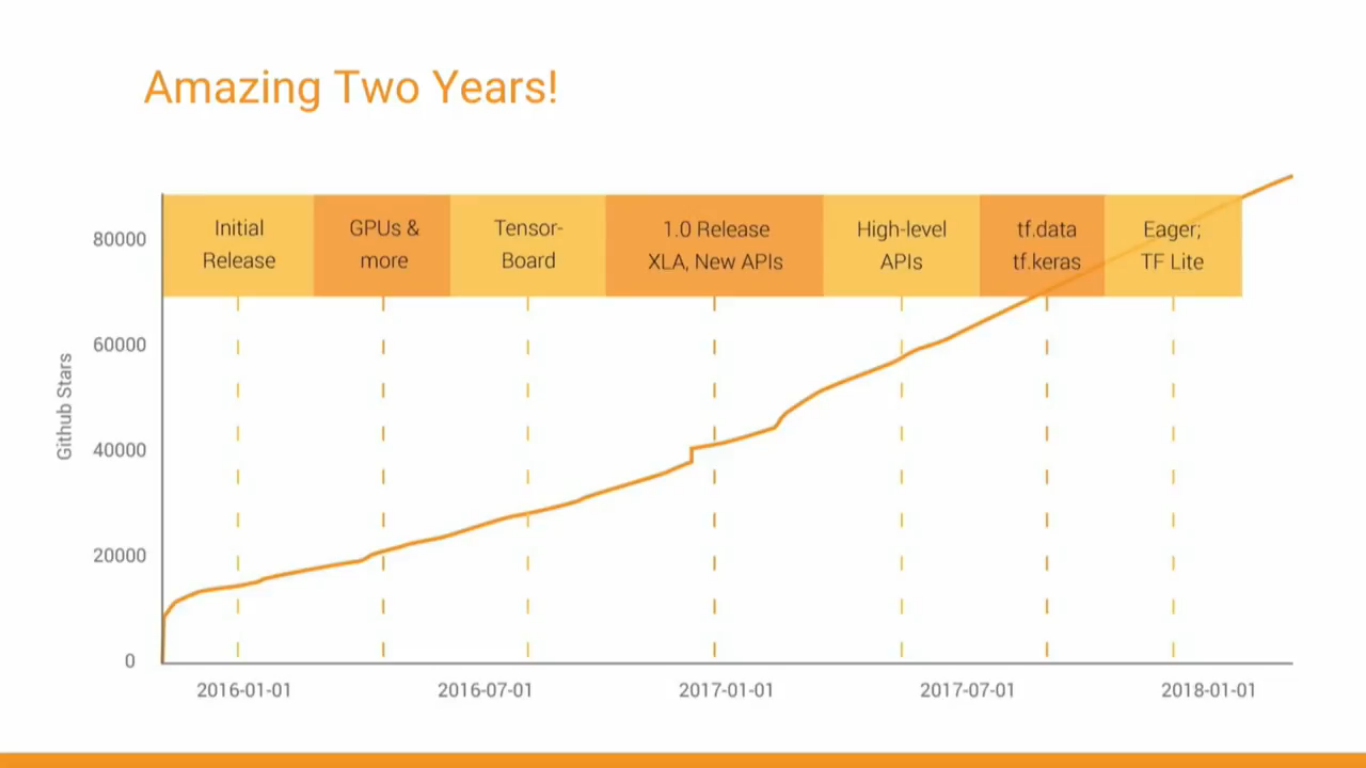

Following this, Rajat Monga, director of engineering at TensorFlow, spoke about the growth of TensorFlow over the past two years, as highlighted in the graph below:

Here are a few takeaways from his talk:

- Release of a new TensorFlow Blog and a new YouTube channel

- Release of several additions to the TensorFlow ML toolkit, which consists of various machine learning algorithms and statistics tools

- A brief discussion of the machine learning crash course that is being made available to everyone

- TensorFlow contains a full implementation of Keras. tf.keras provides building blocks for constructing models, along with utilities to train them.

Taking the discussion forward, Megan Kacholia, engineering director at Google Brain, introduced the latest update to TensorFlow Lite, which lets users work across platforms, including CPUs, Android and iOS. She also mentioned the various platforms TensorFlow works on, which now include Cloud TPU. A beta version of Cloud TPU was launched in February, and it provides 180 teraflops of computation per device. Have a look at the reference models and tools for Cloud TPUs here.

Jeff Dean, leader of the Brain team, talked about how TensorFlow addresses real problems, focusing on two themes: advancing health informatics and engineering the tools for scientific discovery. The main idea is to make machine learning easier to use and to replace ML expertise with computation.

2. The tf.data Library

Speaker: Derek Murray

tf.data is a new library for building input pipelines that get data into TensorFlow. Derek Murray introduced the library and discussed its performance, explaining in detail the flexibility, ease of use and speed that it provides. He also announced the launch of a performance guide for tf.data on the TensorFlow website.
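
To make the input-pipeline idea concrete, here is a minimal sketch in plain Python. It is not the tf.data API: `source`, `map_stage`, `batch_stage` and `build_pipeline` are hypothetical names we made up for illustration. The point it shows is that each stage is lazy, so records are read, transformed and grouped into batches only as the consumer asks for them.

```python
from itertools import islice


def source(n):
    """Yield n raw records lazily (a stand-in for reading files)."""
    for i in range(n):
        yield i


def map_stage(records, fn):
    """Apply a per-record transformation lazily."""
    for record in records:
        yield fn(record)


def batch_stage(records, batch_size):
    """Group consecutive records into lists of at most batch_size items."""
    it = iter(records)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def build_pipeline(n, batch_size):
    """Chain the stages: read -> transform -> batch."""
    doubled = map_stage(source(n), lambda x: x * 2)
    return batch_stage(doubled, batch_size)


# Consume the pipeline the way a training loop would, one batch at a time.
batches = list(build_pipeline(5, 2))
```

A real pipeline would typically add shuffling and prefetching stages; those are left out here to keep the sketch short.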

You can watch the complete talk here.

3. Eager Execution

Speaker: Alexandre Passos

Focusing on making TensorFlow simpler to use, the team introduced an intuitive programming model, Eager Execution. With Eager Execution, the distinction between constructing the execution graph and running it is removed: operations execute immediately. The same code can still be used to generate the equivalent graph for training at scale using the Estimator high-level API. This was launched in the TensorFlow 1.5.0 update, which we covered here.

Alexandre Passos, Software Engineer at TensorFlow, talks about eager execution in detail, along with demo code, in the following video.
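
The difference between the two styles can be sketched in a few lines of plain Python. This is a toy illustration, not TensorFlow code: the `Node` class is a hypothetical stand-in for a graph operation. In the graph style you first build a description of the computation and run it later; in the eager style each operation returns its value right away.

```python
class Node:
    """A deferred operation: records what to compute, not the result."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs

    def run(self):
        # Evaluate the inputs recursively, then apply this node's function.
        args = [i.run() if isinstance(i, Node) else i for i in self.inputs]
        return self.fn(*args)


def add(a, b):
    return a + b


def mul(a, b):
    return a * b


# Graph style: build the whole expression first, execute it afterwards.
graph = Node(mul, Node(add, 2, 3), 4)
graph_result = graph.run()

# Eager style: every call runs immediately and hands back a value.
eager_result = mul(add(2, 3), 4)
```

Both paths compute (2 + 3) * 4; the eager version is easier to inspect and debug step by step, while the deferred version keeps a structure that can be optimized or shipped elsewhere before it runs.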

4. ML in JavaScript: TensorFlow.js

Speakers: Daniel Smilkov and Nikhil Thorat

Inspired by the JavaScript library deeplearn.js, which was released in August 2017, the team has now launched TensorFlow.js, which brings machine learning to JavaScript. TensorFlow.js allows the user to build and train models in the browser itself. A user can also import TensorFlow and Keras models trained offline for inference using WebGL acceleration. You can read more about this in Analytics Vidhya’s AVBytes article here.

Here is the talk by Daniel Smilkov and Nikhil Thorat on TensorFlow.js.

5. Training Performance

Speaker: Brennan Saeta

In this talk, Brennan Saeta provided an overview of how users can optimize the training speed of their models on GPUs and TPUs. Starting with an introduction to the ML training loop, he described improving performance in three basic steps: find the bottleneck, optimize the bottleneck, and repeat. He also provided a few pointers about its future impact.
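
The "find the bottleneck" step can be sketched with nothing more than a timer. The snippet below is a plain-Python illustration, not a TensorFlow profiling tool: `profile_stages` and `find_bottleneck` are hypothetical helpers, and the two lambdas are stand-ins for the phases of a training step.

```python
import time


def profile_stages(stages, repeats=3):
    """Return the average wall-clock seconds per call for each named stage."""
    timings = {}
    for name, fn in stages.items():
        start = time.perf_counter()
        for _ in range(repeats):
            fn()
        timings[name] = (time.perf_counter() - start) / repeats
    return timings


def find_bottleneck(timings):
    """Return the name of the stage with the largest average time."""
    return max(timings, key=timings.get)


# Hypothetical stand-ins for the phases of one training step.
stages = {
    "load_input": lambda: sum(range(1000)),
    "forward_backward": lambda: sum(i * i for i in range(1000)),
}

timings = profile_stages(stages)
slowest = find_bottleneck(timings)
```

Once the slowest stage is known, you optimize only that stage and measure again, since the bottleneck often moves after each fix.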

6. Practitioner’s guide with TF High Level API

Speaker: Mustafa Ispir

Mustafa Ispir spoke about high-level APIs and how ML practitioners can use them to run modeling experiments with just a few lines of code. Using a case study, he explained how high-level APIs help practitioners be more efficient and effective. The discussion focused mainly on the high-level APIs built for each step in an ML pipeline. In brief, these cover Estimators, features, premade Estimators, modeling, scaling and serving.

7. Distributed TensorFlow

Speaker: Igor Saprykin

Distributed TensorFlow is a method by which models can train faster by working in parallel. In his talk, Igor Saprykin discussed the different ways to train a model on a single machine with multiple GPUs. tf.contrib.distribute is a module that handles distributed computing in TensorFlow. It uses all-reduce across multiple GPUs to perform in-graph replication with synchronous training.
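
To see what synchronous training with all-reduce means, consider the toy sketch below. It is plain Python and does not use tf.contrib.distribute; `all_reduce_mean` and `sync_step` are hypothetical names. Each replica (think: one GPU) computes gradients on its own slice of the batch, the gradients are averaged across replicas, and every replica applies the same update so the weights stay identical.

```python
def all_reduce_mean(per_replica_grads):
    """Average gradients element-wise across replicas.

    In a real all-reduce every replica ends up holding this same result.
    """
    n = len(per_replica_grads)
    return [sum(values) / n for values in zip(*per_replica_grads)]


def sync_step(weights, per_replica_grads, lr):
    """Apply one synchronous SGD update using the averaged gradients."""
    avg = all_reduce_mean(per_replica_grads)
    return [w - lr * g for w, g in zip(weights, avg)]


weights = [1.0, 2.0]
# One gradient list per replica, each computed on a different data shard.
grads = [[0.5, 1.0], [1.5, 3.0]]

new_weights = sync_step(weights, grads, lr=0.5)
```

Because every replica waits for the averaged gradient before updating, the step is synchronous: no replica ever trains on stale weights.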

8. Debugging TensorFlow with TensorBoard plugins

Speakers: Justine Tunney and Shanqing Cai

To make debugging models easier, the TensorFlow team has released a new interactive graphical debugger plug-in as part of the TensorBoard visualization tool. In the following video, Justine Tunney and Shanqing Cai give a demo of the TensorFlow Debugger. It helps the user inspect graph nodes, set breakpoints and step through the graph in real time. This was definitely one of our favorite things from the conference!

9. TensorFlow Lite

Speakers: Sarah Sirajuddin and Andrew Selle

TensorFlow Lite was initially launched last year, and many new features have been added since then to improve it. Sarah Sirajuddin, software engineer on the TensorFlow Lite team, talked about TensorFlow Lite and the benefits of running machine learning models on mobile and other edge devices. The tool also provides support for Raspberry Pi and for ops and models (including custom ops). Here is the general workflow:

The talk on TensorFlow Lite by Sarah Sirajuddin and Andrew Selle can be viewed below:

10. Searching over Ideas

Speaker: Vijay Vasudevan

Most machine learning algorithms require extensive tuning of hyperparameters to obtain the best results. In his talk, Vijay Vasudevan discussed how TensorFlow can be useful in hyperparameter optimization. He suggested that automated machine learning techniques can be used to evaluate our ideas more efficiently. In the following video, Vijay explains the hyperparameter optimization process in detail:
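
As a point of reference, the simplest automated approach to hyperparameter tuning is random search. The sketch below is our own plain-Python illustration of that baseline, not the method presented in the talk: `random_search` and `toy_loss` are hypothetical names, and the objective is a made-up function whose minimum sits at a learning rate of 0.1.

```python
import random


def random_search(objective, space, trials, seed=0):
    """Sample hyperparameters uniformly and keep the best-scoring set.

    space maps each hyperparameter name to a (low, high) range.
    Lower objective values are better.
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_loss(params):
    """Hypothetical validation loss, minimized at lr = 0.1."""
    return (params["lr"] - 0.1) ** 2


best, score = random_search(toy_loss, {"lr": (0.0, 1.0)}, trials=200)
```

In practice the objective would train and evaluate a model for each sampled configuration, which is why smarter search strategies that need fewer trials are attractive.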

11. Reconstructing Fusion Plasmas

Speaker: Ian Langmore

Starting with a brief introduction to nuclear fusion and plasma, Ian Langmore explained how Google and TAE Technologies together reconstruct plasma attributes from measurements using Bayesian inference. This is a Bayesian inverse problem, and it uses TensorFlow’s distributions and tensorflow_probability libraries.
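
The core idea of a Bayesian inverse problem, inferring a hidden quantity from noisy measurements, can be shown with a one-dimensional toy case. The sketch below is our own illustration and has nothing to do with the actual plasma model; it does not use tensorflow_probability. We assume a Gaussian prior on the unknown value and Gaussian measurement noise, in which case the posterior has a closed form. `gaussian_posterior` is a hypothetical helper name.

```python
def gaussian_posterior(prior_mean, prior_var, measurements, noise_var):
    """Posterior over an unknown scalar x given noisy readings y_i = x + noise.

    Assumes a Gaussian prior N(prior_mean, prior_var) and independent
    Gaussian noise with variance noise_var on each measurement.
    Returns the posterior (mean, variance).
    """
    # Precisions (inverse variances) add up across prior and measurements.
    precision = 1.0 / prior_var + len(measurements) / noise_var
    mean = (prior_mean / prior_var + sum(measurements) / noise_var) / precision
    return mean, 1.0 / precision


# Prior belief: x is around 0 with variance 1. Two readings of 2.0 arrive.
post_mean, post_var = gaussian_posterior(0.0, 1.0, [2.0, 2.0], 1.0)
```

The posterior mean lands between the prior mean and the measurements, and the posterior variance is smaller than the prior variance: each measurement narrows down what the hidden value could be.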

12. Nucleus: TensorFlow toolkit for Genomics

Speaker: Cory McLean

Cory McLean, an engineer on the Genomics team at Google Brain, announced the launch of Nucleus, a Python library for reading, writing, and filtering common genomics file formats for conversion to TensorFlow examples. In his talk, he gave a basic introduction to genomics and the opportunities for deep learning in this field. He also spoke about Nucleus’ interoperable data representation with Variant Transforms, an open source tool from Google Cloud.

13. Opensource Collaboration

Speaker: Edd Wilder-James

Edd Wilder-James gave a brief talk on the TensorFlow community. He shared the number of TensorFlow users and contributors which, as you can see in the image below, is further evidence of the growth of the TensorFlow community.

With the new additions and improvements in TensorFlow, he expects the numbers to increase rapidly. Here is the video where Edd talks about how they are planning to engage and collaborate with their users.

14. Swift for TensorFlow

Speakers: Chris Lattner and Richard Wei

Swift for TensorFlow (TFiwS) is an early-stage open source project that aims to improve the usability of TensorFlow. It has many design advantages, and will be released with a technical whitepaper, code, and an open design approach in April 2018. The Swift for TensorFlow team strongly believes that Swift could be the future of data science and machine learning development.

You can have a look at the talk by Chris Lattner and Richard Wei here:

15. TensorFlow Hub

Speakers: Andrew Gasparovic and Jeremiah Harmsen

TensorFlow Hub, a built-in library, was launched to foster the publication, discovery, and consumption of reusable parts of machine learning models. Jeremiah Harmsen and Andrew Gasparovic explained how TensorFlow Hub lets you build, share and use machine learning modules. A module can be easily integrated into your model with a single line of code. You can look at the image below for a better understanding of this and visit their blog.

Below is the video from the summit where Andrew Gasparovic and Jeremiah Harmsen discuss TF Hub:

16. TensorFlow Extended

Speakers: Clemens Mewald and Raz Mathias

Clemens Mewald and Raz Mathias announced the roadmap for TensorFlow Extended (TFX), which is an end-to-end ML platform built around TensorFlow. Also, TensorFlow Model Analysis (TFMA), a library to visualize evaluation metrics, was launched. Here is the video where a demo of the same has been presented. Have a look!

17. Applied AI at the Coca-Cola Company

Speaker: Patrick Brandt

In 2016, Coca-Cola updated its core loyalty marketing program so that, to enter promotions, buyers need to input a 14-character proof-of-purchase code. Typing this manually would be tedious, so the team built a mobile app for recognizing the codes (which were embedded in the bottle’s cap). In essence, Coca-Cola built an Optical Character Recognition (OCR) model that uses Convolutional Neural Networks and TensorFlow to perform the task. Watch this fascinating talk below:

18. Real World Robot Learning

Speaker: Alexander Irpan

Addressing real-world research problems, Alex Irpan from the Google Brain Robotics team explained his approach to one such problem, in which a neural network commands a robot arm to grasp objects. The robotics team combined feature-level domain adaptation and pixel-level domain adaptation to train the model. You can watch the video below to understand how simulators and other ML techniques are used to reduce the amount of real-world data required.

19. Project Magenta

Speaker: Sherol Chen

Project Magenta is a smart tool that allows artists to create music using pre-trained models. In her talk, Sherol Chen explained how these models can be tuned and controlled. She also gave a glimpse of this on a keyboard, tuning the outputs as she played. The combination of music and machine learning is always a positive and has been well received by the TensorFlow community.

End Notes

There were quite a few new things launched at this year’s summit. While we had already seen Eager Execution before, new tools and libraries like TensorFlow.js and TensorFlow Model Analysis were introduced and will surely be incorporated into ML models soon.

You can watch the full live stream of the TensorFlow Developer Summit 2018 here! What did you find most exciting about this summit? Let us know your thoughts and feedback in the comments below!
