Overview

- MLPerf is a benchmarking tool for machine learning hardware and software

- It is supported by major companies, including Google, Baidu and Intel

- The first version is expected to come out in August

Introduction

What tools make up a data scientist’s skillset? How do you figure out which tool is best, and is there a benchmark for it? So far, these questions have had a variety of answers, none of them conclusive. Companies launch new tools and libraries and tout them as faster than their competitors’ previous offerings. It’s all a matter of perspective and how you frame the comparison.

Until now.

A number of tech giants, including Google, Baidu and Intel, have collaborated to create a benchmarking tool, called MLPerf, which lets users understand and improve the performance of machine learning tools and techniques.

AI pioneer and leader Andrew Ng has previously said, “AI is transforming multiple industries, but for it to reach its full potential, we still need faster hardware and software.” MLPerf aims to help close this gap by becoming the standard benchmark for measuring the speed and performance of existing ML tools.

The main goal of MLPerf is to accelerate progress in machine learning through a fair and useful measurement system. The approach is to select a set of problems, each defined by a dataset and a target quality level, and then measure the time taken to train a model to that target for each problem separately.
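To make the time-to-train idea concrete, here is a minimal sketch in Python on a toy problem. The `time_to_train` helper, the single-weight model and the 0.95 quality target are hypothetical illustrations, not MLPerf’s reference code or official rules: the helper simply runs training epochs, checks a quality metric after each one, and stops the clock once the target is reached.

```python
import time
from typing import Callable, Tuple


def time_to_train(train_one_epoch: Callable[[], None],
                  evaluate: Callable[[], float],
                  quality_target: float,
                  max_epochs: int = 100) -> Tuple[float, int]:
    """Train until evaluate() reaches quality_target.

    Returns (wall-clock seconds, epochs used). Hypothetical helper for
    illustration only; not part of MLPerf's reference code.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        # Stop the clock as soon as the model hits the quality target.
        if evaluate() >= quality_target:
            return time.perf_counter() - start, epoch
    raise RuntimeError("quality target not reached within max_epochs")


# Toy "problem" (hypothetical): learn a single weight w whose true value
# is 3.0 by gradient descent on the squared loss (w - 3)^2.
state = {"w": 0.0}
TRUE_W = 3.0
LEARNING_RATE = 0.25


def train_one_epoch() -> None:
    grad = 2.0 * (state["w"] - TRUE_W)  # derivative of (w - 3)^2
    state["w"] -= LEARNING_RATE * grad


def evaluate() -> float:
    # Higher is better; 1.0 means w exactly matches TRUE_W.
    return 1.0 - abs(state["w"] - TRUE_W) / TRUE_W


seconds, epochs = time_to_train(train_one_epoch, evaluate, quality_target=0.95)
print(f"reached target in {epochs} epochs ({seconds:.6f} s)")
```

With these toy settings the loop stops after 5 epochs. Measuring time to a fixed quality target, rather than time per epoch, rewards systems that are both fast and able to converge, which is the spirit of the approach described above.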

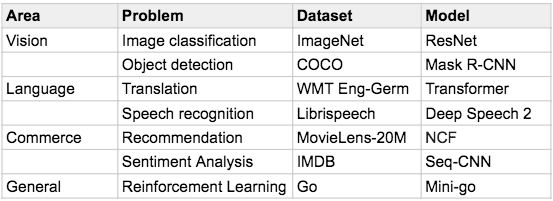

A few examples of where MLPerf has been tested are mentioned in the table below:

As the team mentioned, the first release of MLPerf (MLPerf version 0.5, as they are calling it for now) will focus on training jobs on a range of systems, from workstations to large data centers. Later releases will expand to include inference jobs, eventually extending to inference run on embedded client systems. An initial version will be ready for use in August.

The complete specifications, along with reference code and examples, are available on the official MLPerf site. MLPerf is also available on GitHub.

Our take on this

This is a constructive development: a common benchmark for comparing software and hardware systems should encourage innovation and improvement. How much easier would life be for data scientists if they could compare tools and techniques from different domains in one place? Sounds awesome.

MLPerf combines best practices from previous benchmarks, including the use of a suite of programs by SPEC (the Standard Performance Evaluation Corporation). After SPEC’s release in 1988, CPU performance improved 1.6x per year for the next 15 years, and we are hoping to witness a similar improvement with the release of MLPerf!
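To put the 1.6x-per-year figure in perspective, compounding it over 15 years gives 1.6^15, roughly a 1,150-fold improvement overall. A quick check of that arithmetic (it uses only the numbers quoted above):

```python
# Compound growth: a 1.6x speedup every year, sustained for 15 years.
annual_speedup = 1.6
years = 15
cumulative_speedup = annual_speedup ** years
print(f"cumulative speedup after {years} years: about {cumulative_speedup:,.0f}x")
```

This prints `cumulative speedup after 15 years: about 1,153x`.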

This is a tool to keep your eye on, if only because of the names backing this project.

Subscribe to AVBytes here to get regular data science, machine learning and AI updates in your inbox!