Introduction

How do we build interpretable machine learning models? In other words, how do we build trust in the models we design? This is a critical question in every machine learning project, yet we tend to overlook it in our haste to build more accurate models.

Take a moment to think about this – how many times have you turned to complex techniques like ensemble learning and neural networks to improve your model’s accuracy while sacrificing interpretability? In a real-world industry or business setting, this is unacceptable.

We need to find a way to use these powerful ML algorithms and still make them work in a business setting. So in episode #20 of our DataHack Radio podcast, we welcome Christoph Molnar, author of the popular book “Interpretable Machine Learning”. Who better to talk about this fundamental and critical topic?

This DataHack Radio episode is full of essential machine learning aspects every data scientist, manager, team lead and senior executive must be aware of. Kunal Jain and Christoph had a multi-layered conversation on several topics, including:

- Christoph’s interest and foray into interpretable machine learning

- His research on interpretable ML, specifically model-agnostic methods

- The ‘Interpretable Machine Learning’ book, and much more!


Christoph Molnar’s Background

Statistics is at the core of data science. You cannot simply waltz into the machine learning world without building a solid base in statistics first.

Christoph’s background, especially his university education, embodies that thought. He has a rich background in statistics: both his Bachelor’s and Master’s degrees are in statistics, from the Ludwig-Maximilians Universität München in Germany.

During this stretch, Christoph came across machine learning and was instantly fascinated with it. He started taking part in online ML competitions and hackathons. It didn’t take him long to figure out that linear regression, useful as it was for learning, wasn’t going to cut it in these hackathons.

So, he delved deeper into the domain. Decision trees, random forest, ensemble learning – Christoph didn’t leave any stone unturned in his quest to learn and master these algorithms. Once he finished his Master’s, he worked as a Statistical Consultant for a couple of years in the medical domain before stints at a few other organizations.

During this period, Christoph was also doing his own research on the side. Any thoughts on what his area of interest was? You guessed it – interpretable machine learning.

Interest in Interpretable Machine Learning

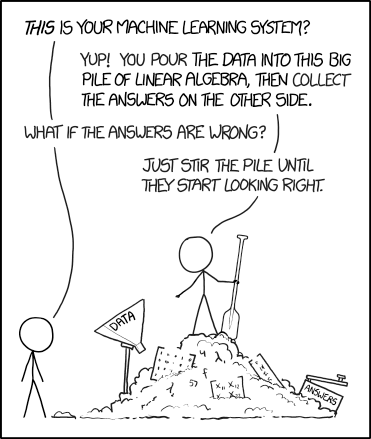

Source: xkcd

Interpretable machine learning is not a topic we come across often when we’re learning this domain (or even working on it). Everyone knows about it yet only a few truly discuss it. So what triggered Christoph’s interest in this area of research?

For Christoph, it all hearkens back to his university days, where interpretability was taught as part of his statistics education. When he learned a topic like linear or logistic regression, he learned it from the ground up. That meant learning not just how to build a model, but also how to interpret the inner workings that produced the final output.

A big reason for delving into interpretable ML was Christoph’s experience with non-machine learning folks (and I’m sure everyone would have experienced this at some point):

“I used to ask people, ‘Why don’t you use machine learning for the problem you’re working on?’ The answer was always, ‘We can’t explain how it works. Management will not accept a black box model.’”

If that sounds familiar, you’re not alone! This inability to understand how models work is quite prevalent in the industry. No wonder a lot of “machine learning projects” fail before they’ve had a chance to pick up steam.

All of this learning naturally carried over into Christoph’s machine learning forays. He started exploring methods to make machine learning models interpretable, including looking at projects and reading research papers. One of the methods he came across is called LIME, or Local Interpretable Model-agnostic Explanations. We have an excellent article on this that you should check out.
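To make the idea behind LIME a little more concrete, here is a minimal Python sketch of a LIME-style local surrogate. It is not the API of the `lime` package or of Christoph’s work; the function `lime_explain` and its parameters (`n_samples`, `scale`, `kernel_width`) are hypothetical names of our own. It assumes numeric tabular features, Gaussian perturbations around the instance and an exponential proximity kernel: it samples points near the instance, asks the black-box model to predict them, weights each sample by how close it is to the instance, and fits a weighted linear regression whose coefficients act as the local explanation.

```python
import numpy as np


def lime_explain(predict_fn, x, n_samples=1000, scale=1.0, kernel_width=None, seed=0):
    """LIME-style sketch: fit a weighted linear surrogate around instance x.

    Assumptions (ours, not the lime package's): Gaussian perturbations,
    exponential kernel on Euclidean distance, plain weighted least squares.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # assumed default width
    # 1. Sample the neighborhood of x by adding Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, d))
    # 2. Query the black-box model on the perturbed samples.
    y = predict_fn(Z)
    # 3. Weight each sample by its proximity to x.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 4. Weighted least squares: y ~ b0 + Z @ b, via sqrt-weight rescaling.
    A = np.hstack([np.ones((n_samples, 1)), Z])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # local feature effects; the intercept is dropped


def black_box(Z):
    # Hypothetical "black-box" model: linear in feature 0, quadratic in feature 1.
    return 3 * Z[:, 0] + Z[:, 1] ** 2


x0 = np.array([1.0, 2.0])
print(lime_explain(black_box, x0, n_samples=5000))
```

Around `[1.0, 2.0]` the local slopes of this model are 3 and 4, so the surrogate’s coefficients should land close to those values (not exactly, because of sampling noise). Fitting a simple linear model is the design choice that makes the explanation readable; the trade-off is that it is only trusted near one instance, not globally.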

According to Christoph, there wasn’t one specific blog or tutorial which emphasized interpretable machine learning across techniques. And that was how the idea of writing a book on the topic was born.

Machine Learning Interpretability Research

Christoph’s research into machine learning interpretability focuses on model-agnostic methods (as opposed to model-specific methods). The former can be applied to any model, while the latter dig into the internals of the particular model at hand.

Model-agnostic methods work by changing the features of the input data and observing how the predictions change. For example, how much does the performance of a model drop if we remove a feature? The answer tells us how important that feature is. You may have come across this concept while learning the random forest technique.
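The feature-removal question can be sketched in a few lines of Python. Instead of retraining the model without the feature, this sketch uses permutation feature importance, a common model-agnostic variant: it shuffles one feature column at a time and measures how much the error grows. It assumes the model is exposed as a plain `predict_fn` and uses mean squared error as the performance measure; `permutation_importance` here is a hypothetical helper of our own, not scikit-learn’s function of the same name.

```python
import numpy as np


def permutation_importance(predict_fn, X, y, n_repeats=5, seed=0):
    """Sketch of permutation feature importance using mean squared error."""
    rng = np.random.default_rng(seed)

    def mse(y_true, y_pred):
        return float(np.mean((y_true - y_pred) ** 2))

    baseline = mse(y, predict_fn(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffle column j to break its link with the target,
            # leaving every other feature untouched.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            increases.append(mse(y, predict_fn(X_perm)) - baseline)
        # Importance = average increase in error after shuffling feature j.
        importances[j] = np.mean(increases)
    return baseline, importances


def model(data):
    # Hypothetical fitted model that only uses features 0 and 1.
    return 5 * data[:, 0] + data[:, 1]


rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = 5 * X[:, 0] + X[:, 1]
baseline, imp = permutation_importance(model, X, y)
# Feature 2 is never used by the model, so its importance is exactly 0.
print(baseline, imp)
```

Because the sketch only ever calls `predict_fn`, the same code works unchanged for a linear model, a random forest or a neural network, which is precisely the appeal of model-agnostic methods.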

You might be inclined to think – wouldn’t model-specific methods be better? It’s a fair question. The advantage of model-agnostic methods is that they keep pace with the evolving spectrum of machine learning models. They apply equally to complex techniques like neural networks, and even to those that haven’t become mainstream yet.

If you’re an R user, make sure you check out Christoph’s interpretable machine learning package, ‘iml’, which is available on CRAN.

Christoph also raised a valid point about the definition of interpretability: everyone seems to understand the concept differently. A business user might be happy with an overview of how the model works, while another user might want to fully grasp each step the model took to produce the final result. That fuzziness is a challenge for any researcher.

End Notes

I strongly believe this topic should be covered in every machine learning course or training. We simply cannot walk into an industry setting and start building a complex web of models without being able to explain how they work.

Can you imagine a self-driving car malfunctioning and the developers struggling to understand where their code went wrong? Or a model detecting an illness where none exists?

I hope to see more traction on this in the coming days and weeks. Until then, make sure you listen to this episode and share it with your network. I look forward to hearing your thoughts and feedback in the comments section below.