I am fascinated by self-driving cars. The sheer complexity and mix of different computer vision techniques that go into building a self-driving car system is a dream for a data scientist like me.

So, I set about trying to understand the computer vision technique behind how a self-driving car potentially detects objects. A simple object detection framework might not work because it simply detects an object and draws a fixed shape around it.

That’s a risky proposition in a real-world scenario. Imagine if there’s a sharp turn in the road ahead and our system draws a rectangular box around the road. The car might not be able to understand whether to turn or go straight. That’s a potential disaster!

Instead, we need a technique that can detect the exact shape of the road so our self-driving car system can safely navigate the sharp turns as well.

Overview

- Mask R-CNN is a state-of-the-art framework for Image Segmentation tasks

- We will learn how Mask R-CNN works in a step-by-step manner

- We will also look at how to implement Mask RCNN in Python and use it for our own images

The latest state-of-the-art framework that we can use to build such a system? That’s Mask R-CNN!

So, in this article, we will first quickly look at what image segmentation is. Then we’ll look at the core of this article—Mask R-CNN framework for image segmentation. Finally, we will dive into implementing our own Mask R CNN model in Python. with that also you will clarified about the Mask R CNN Pytorch Implementation So, Let’s begin!

Table of contents

What is Mask R-CNN?

Mask R-CNN, which stands for Mask Region-based Convolutional Neural Network, is a deep learning model that tackles computer vision tasks like object detection and instance segmentation. It builds upon an existing architecture called Faster R-CNN.

Two main types of image segmentation of Mask R-CNN

- Semantic Segmentation involves categorizing every pixel in an image into a distinct class. Picture a scene where individuals are strolling along a road. Semantic segmentation is able to categorize all the pixels belonging to individuals as a single group, despite there being numerous people.

- Instance Segmentation: This method goes beyond semantic segmentation by not only classifying pixels but also differentiating between objects of the same class. In the same image with people walking, instance segmentation would create a separate mask for each individual person.

Also Read: A Comprehensive Tutorial to learn Convolutional Neural Networks

A Brief Overview of Image Segmentation

We learned the concept of image segmentation in part 1 of this series in a lot of detail. We discussed what is image segmentation and its different techniques, like region-based segmentation, edge detection segmentation, and segmentation based on clustering.

I would recommend checking out that article first if you need a quick refresher (or want to learn image segmentation from scratch).

I’ll quickly recap that article here. Image segmentation creates a pixel-wise mask for each object in the image. This technique gives us a far more granular understanding of the object(s) in the image. The image shown below will help you to understand what image segmentation is:

Here, you can see that each object (which are the cells in this particular image) has been segmented. This is how image segmentation works.

We also discussed the two types of image segmentation: Semantic Segmentation and Instance Segmentation. Again, let’s take an example to understand both of these types:

All 5 objects in the left image are people. Hence, semantic segmentation will classify all the people as a single instance. Now, the image on the right also has 5 objects (all of them are people). But here, different objects of the same class have been assigned as different instances. This is an example of instance segmentation.

Part one covered different techniques and their implementation in Python to solve such image segmentation problems. In this article, we will be implementing a state-of-the-art image segmentation technique called Mask RCNN to solve an instance segmentation problem.

Also Read: A Step-by-Step Guide to Image Segmentation Techniques

Understanding Mask R-CNN

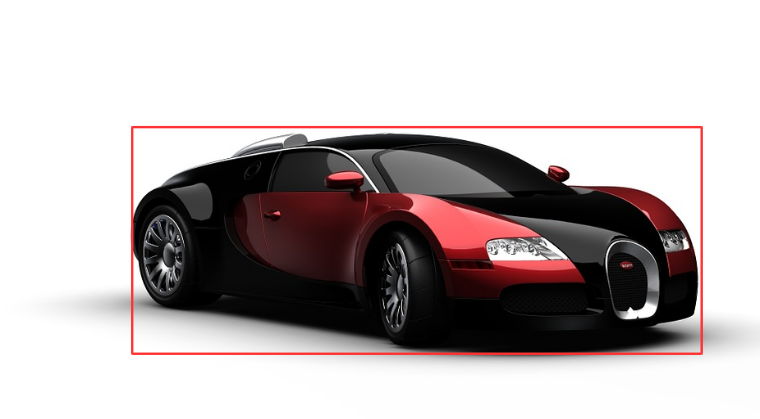

Mask R CNN is basically an extension of Faster R-CNN. Faster R-CNN is widely used for object detection tasks. For a given image, it returns the class label and bounding box coordinates for each object in the image. So, let’s say you pass the following image:

The Fast R-CNN model will return something like this:

The Mask R-CNN framework is built on top of Faster R-CNN. So, for a given image, Mask R-CNN, in addition to the class label and bounding box coordinates for each object, will also return the object mask.

Let’s first quickly understand how Faster R-CNN works. This will help us grasp the intuition behind Mask R-CNN as well.

- Faster R-CNN first uses a ConvNet to extract feature maps from the images

- These feature maps are then passed through a Region Proposal Network (RPN) which returns the candidate bounding boxes

- We then apply an RoI pooling layer on these candidate bounding boxes to bring all the candidates to the same size

- And finally, the proposals are passed to a fully connected layer to classify and output the bounding boxes for objects

Once you understand how Faster R-CNN works, understanding Mask R-CNN will be very easy. So, let’s understand it step-by-step starting from the input to predicting the class label, bounding box, and object mask.

Backbone Model

Similar to the ConvNet that we use in Faster R-CNN to extract feature maps from the image, we use the ResNet 101 architecture to extract features from the images in Mask RCNN. So, the first step is to take an image and extract features using the ResNet 101 architecture. These features act as an input for the next layer.

Region Proposal Network (RPN)

Now, we take the feature maps obtained in the previous step and apply a region proposal network (RPM). This basically predicts if an object is present in that region (or not). In this step, we get those regions or feature maps which the model predicts contain some object.

Region of Interest (RoI)

The regions obtained from the RPN might be of different shapes, right? Hence, we apply a pooling layer and convert all the regions to the same shape. Next, these regions are passed through a fully connected network so that the class label and bounding boxes are predicted.

Till this point, the steps are almost similar to how Faster R-CNN works. Now comes the difference between the two frameworks. In addition to this, Mask R-CNN also generates the segmentation mask.

For that, we first compute the region of interest so that the computation time can be reduced. For all the predicted regions, we compute the Intersection over Union (IoU) with the ground truth boxes. We can computer IoU like this:

IoU = Area of the intersection / Area of the union

Now, only if the IoU is greater than or equal to 0.5, we consider that as a region of interest. Otherwise, we neglect that particular region. We do this for all the regions and then select only a set of regions for which the IoU is greater than 0.5.

Let’s understand it using an example. Consider this image:

Here, the red box is the ground truth box for this image. Now, let’s say we got 4 regions from the RPN as shown below:

Here, the IoU of Box 1 and Box 2 is possibly less than 0.5, whereas the IoU of Box 3 and Box 4 is approximately greater than 0.5. Hence. we can say that Box 3 and Box 4 are the region of interest for this particular image whereas Box 1 and Box 2 will be neglected.

Next, let’s see the final step of Mask RCNN.

Segmentation Mask

Once we have the RoIs based on the IoU values, we can add a mask branch to the existing architecture. This returns the segmentation mask for each region that contains an object. It returns a mask of size 28 X 28 for each region which is then scaled up for inference.

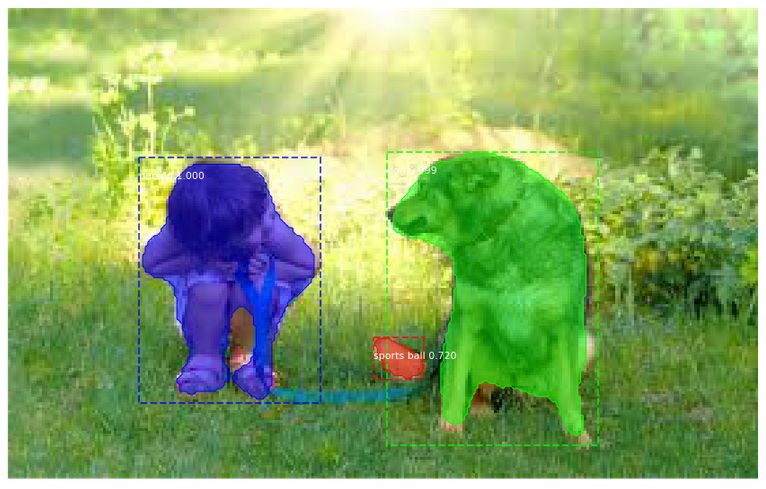

Again, let’s understand this visually. Consider the following image:

The segmentation mask for this image would look something like this:

Here, our model has segmented all the objects in the image. This is the final step in Mask R-CNN where we predict the masks for all the objects in the image.

Keep in mind that the training time for Mask R-CNN is quite high. It took me somewhere around 1 to 2 days to train the Mask R-CNN on the famous COCO dataset. So, for the scope of this article, we will not be training our own Mask R CNN model.

We will instead use the pretrained weights of the Mask R-CNN model trained on the COCO dataset. Now, before we dive into the Python code, let’s look at the steps to use the Mask R CNN model to perform instance segmentation.

Steps to implement Mask R-CNN

It’s time to perform some image segmentation tasks! We will be using the mask rcnn framework created by the Data scientists and researchers at Facebook AI Research (FAIR).

Let’s have a look at the steps which we will follow to perform image segmentation using Mask RCNN.

Step 1: Clone the repository

First, we will clone the mask rcnn repository which has the architecture for Mask R-CNN. Use the following command to clone the repository:

git clone https://github.com/matterport/Mask_RCNN.git

Once this is done, we need to install the dependencies required by Mask RCNN.

Step 2: Install the dependencies

Here is a list of all the dependencies for Mask R CNN:

- numpy

- scipy

- Pillow

- cython

- matplotlib

- scikit-image

- tensorflow>=1.3.0

- keras>=2.0.8

- opencv-python

- h5py

- imgaug

- IPython

You must install all these dependencies before using the Mask R-CNN framework.

Step 3: Download the pre-trained weights (trained on MS COCO)

Next, we need to download the pretrained weights. You can use this link to download the pre-trained weights. These weights are obtained from a model that was trained on the MS COCO dataset. Once you have downloaded the weights, paste this file in the samples folder of the Mask_RCNN repository that we cloned in step 1.

Step 4: Predicting for our image

Finally, we will use the Mask R-CNN architecture and the pretrained weights to generate predictions for our own images.

Once you’re done with these four steps, it’s time to jump into your Jupyter Notebook! We will implement all these things in Python and then generate the masks along with the classes and bounding boxes for objects in our images.

What is Convolutional Neural Networks (CNN)?

Convolutional Neural Networks (CNNs) are a type of deep learning neural network that excel at analyzing visual imagery like photos and videos. They are inspired by the structure of the animal visual cortex, which is the part of the brain responsible for processing vision.

Implementing Mask R-CNN in Python

Sp, are you ready to dive into Python and code your own image segmentation model? Let’s begin!

To execute all the code blocks which I will be covering in this section, create a new Python notebook inside the “samples” folder of the cloned Mask_RCNN repository.

Let’s start by importing the required libraries:

import os

import sys

import random

import math

import numpy as np

import skimage.io

import matplotlib

import matplotlib.pyplot as plt

# Root directory of the project

ROOT_DIR = os.path.abspath("../")

import warnings

warnings.filterwarnings("ignore")

# Import Mask RCNN

sys.path.append(ROOT_DIR) # To find local version of the library

from mrcnn import utils

import mrcnn.model as modellib

from mrcnn import visualize

# Import COCO config

sys.path.append(os.path.join(ROOT_DIR, "samples/coco/")) # To find local version

import coco

%matplotlib inline

Next, we will define the path for the pretrained weights and the images on which we would like to perform segmentation:

# Directory to save logs and trained model

MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Local path to trained weights file

COCO_MODEL_PATH = os.path.join('', "mask_rcnn_coco.h5")

# Download COCO trained weights from Releases if needed

if not os.path.exists(COCO_MODEL_PATH):

utils.download_trained_weights(COCO_MODEL_PATH)

# Directory of images to run detection on

IMAGE_DIR = os.path.join(ROOT_DIR, "images")

If you have not placed the weights in the samples folder, this will again download the weights. Now we will create an inference class which will be used to infer the Mask R-CNN model:

class InferenceConfig(coco.CocoConfig):

# Set batch size to 1 since we'll be running inference on

# one image at a time. Batch size = GPU_COUNT * IMAGES_PER_GPU

GPU_COUNT = 1

IMAGES_PER_GPU = 1

config = InferenceConfig()

config.display()

view rawinference.py hosted with ❤ by GitHubWhat can you infer from the above summary? We can see the multiple specifications of the Mask R-CNN model that we will be using.

So, the backbone is resnet101 as we have discussed earlier as well. The mask shape that will be returned by the model is 28X28, as it is trained on the COCO dataset. And we have a total of 81 classes (including the background).

We can also see various other statistics as well, like:

- The input shape

- Number of GPUs to be used

- Validation steps, among other things.

You should spend a few moments and understand these specifications. If you have any doubts regarding these specifications, feel free to ask me in the comments section below.

Loading Weights

Next, we will create our model and load the pretrained weights which we downloaded earlier. Make sure that the pretrained weights are in the same folder as that of the notebook otherwise you have to give the location of the weights file:

# Create model object in inference mode.

model = modellib.MaskRCNN(mode="inference", model_dir='mask_rcnn_coco.hy', config=config)

# Load weights trained on MS-COCO

model.load_weights('mask_rcnn_coco.h5', by_name=True)

Now, we will define the classes of the COCO dataset which will help us in the prediction phase:

# COCO Class names

class_names = ['BG', 'person', 'bicycle', 'car', 'motorcycle', 'airplane',

'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird',

'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear',

'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie',

'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',

'kite', 'baseball bat', 'baseball glove', 'skateboard',

'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup',

'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',

'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed',

'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote',

'keyboard', 'cell phone', 'microwave', 'oven', 'toaster',

'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors',

'teddy bear', 'hair drier', 'toothbrush']

Let’s load an image and try to see how the model performs. You can use any of your images to test the model.

# Load a random image from the images folder

image = skimage.io.imread('sample.jpg')

# original image

plt.figure(figsize=(12,10))

skimage.io.imshow(image)

This is the image we will work with. You can clearly identify that there are a couple of cars (one in the front and one in the back) along with a bicycle.

Making Predictions

It’s prediction time! We will use the Mask R-CNN model along with the pretrained weights and see how well it segments the objects in the image. We will first take the predictions from the model and then plot the results to visualize them:

# Run detection

results = model.detect([image], verbose=1)

# Visualize results

r = results[0]

visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'], class_names, r['scores'])

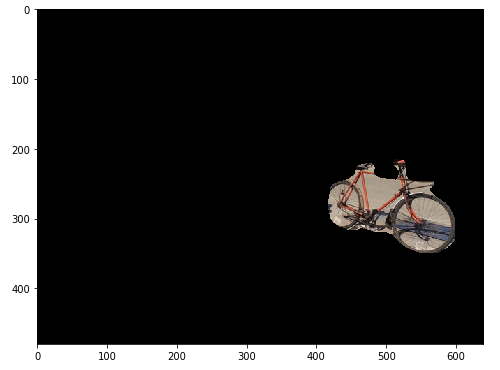

Interesting. The model has done pretty well to segment both the cars as well as the bicycle in the image. We can look at each mask or the segmented objects separately as well. Let’s see how we can do that.

I will first take all the masks predicted by our model and store them in the mask variable. Now, these masks are in the boolean form (True and False) and hence we need to convert them to numbers (1 and 0). Let’s do that first:

mask = r['masks']

mask = mask.astype(int)

mask.shape

Output:

(480,640,3)

This will give us an array of 0s and 1s, where 0 means that there is no object at that particular pixel and 1 means that there is an object at that pixel. Note that the shape of the mask is similar to that of the original image (you can verify that by printing the shape of the original image).

However, the 3 here in the shape of the mask does not represent the channels. Instead, it represents the number of objects segmented by our model. Since the model has identified 3 objects in the above sample image, the shape of the mask is (480, 640, 3). Had there been 5 objects, this shape would have been (480, 640, 5).

We now have the original image and the array of masks. To print or get each segment from the image, we will create a for loop and multiply each mask with the original image to get each segment:

for i in range(mask.shape[2]):

temp = skimage.io.imread('sample.jpg')

for j in range(temp.shape[2]):

temp[:,:,j] = temp[:,:,j] * mask[:,:,i]

plt.figure(figsize=(8,8))

plt.imshow(temp)

This is how we can plot each mask or object from the image. This can have a lot of interesting as well as useful use cases. Getting the segments from the entire image can reduce the computation cost as we do not have to preprocess the entire image now, but only the segments.

Inferences

Below are a few more results which I got using our Mask R-CNN model:

Looks awesome! You have just built your own image segmentation model using Mask R-CNN – well done.

How to implement Mask R-CNN using PyTorch:

Install Required Libraries:

- Ensure you have

torch,torchvision, andpycocotoolsinstalled. If not, install them using:

pip install torch torchvision pycocotools

- Load the Pre-trained Mask R-CNN Model:

- PyTorch’s

torchvisionlibrary provides a pre-trained Mask R-CNN model.

- PyTorch’s

- Prepare the Dataset:

- Mask R-CNN is typically trained on the COCO dataset, but you can prepare your own dataset following the COCO format.

- Inference with Mask R-CNN:

- Load an image, preprocess it, and pass it through the model to get the predictions.

Here’s a sample code snippet demonstrating these steps:

import torch

import torchvision

from PIL import Image

import matplotlib.pyplot as plt

import torchvision.transforms as T

# Load a pre-trained Mask R-CNN model

model = torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=True)

model.eval() # Set the model to evaluation mode

# Load an image

img_path = 'path_to_your_image.jpg'

img = Image.open(img_path).convert("RGB")

# Preprocess the image

transform = T.Compose([T.ToTensor()])

img = transform(img)

# Add a batch dimension

img = img.unsqueeze(0)

# Perform inference

with torch.no_grad():

prediction = model(img)

# Visualize the results

def plot_image(image, masks, boxes):

fig, ax = plt.subplots(1, figsize=(12,9))

ax.imshow(image)

for i in range(len(masks)):

mask = masks[i].cpu().numpy()

box = boxes[i].cpu().numpy()

ax.imshow(mask, alpha=0.5)

rect = plt.Rectangle((box[0], box[1]), box[2] - box[0], box[3] - box[1], fill=False, color='red')

ax.add_patch(rect)

plt.show()

# Convert image back to numpy

img_np = img.squeeze().permute(1, 2, 0).cpu().numpy()

# Plot the image with masks and bounding boxes

plot_image(img_np, prediction[0]['masks'], prediction[0]['boxes'])

Conclusion

Exploring the table of contents provides a comprehensive understanding of image segmentation, particularly focusing on the implementation of Mask R-CNN in Python. The step-by-step guide outlined elucidates the process from cloning the repository to predicting for customized images. Through understanding Mask R-CNN and following the prescribed steps, users can effectively utilize pre-trained weights and execute segmentation tasks efficiently. This structured approach empowers practitioners to harness the capabilities of advanced segmentation techniques, enhancing their proficiency in image analysis and fostering innovation in various fields reliant on computer vision technologies. Also, you will how to implement mask r CNN pytorch.

Key Takeaways :

- Mask R-CNN extends Faster R-CNN to perform instance segmentation, providing object detection, classification, and pixel-wise masks.

- The framework uses a backbone model (ResNet 101) for feature extraction, followed by Region Proposal Network and Region of Interest alignment.

- Implementation involves using pre-trained weights, setting up the environment, and running inference on images using Python libraries.

- Mask R-CNN can be implemented using both TensorFlow/Keras and PyTorch, with pre-trained models available in both frameworks.

Frequently Asked Questions

A. Mask R-CNN is used for object detection, segmentation, and instance segmentation in images, identifying objects and delineating their exact shapes.

A. CNN (Convolutional Neural Network) is primarily used for image classification and feature extraction, while Mask R-CNN extends Faster R-CNN by adding a branch for predicting segmentation masks on each detected object.

A. R-CNN stands for Region-based Convolutional Neural Network, which focuses on identifying regions of interest in an image for object detection.

A. Mask R-CNN uses multiple loss functions: classification loss for object categories, bounding box regression loss for object localization, and mask loss for pixel-wise object segmentation.

Hi Pulkit, great article I was looking for the same. Thanks for sharing.

Glad you found it useful Vaibhav!

i wanna know about the weights

hey really nice article...I am trying to recognize and classify SSD boards is this something i can employ in my research

Hi Praketa, You can surely try this Mask R-CNN framework for your use case. Do share the results here with the community which will help them in learning.

Hello Pulkit, Yet again, nice way to explain the key concepts.

Glad that you liked it Pankaj! Stay tuned for more such articles on computer vision.