Overview

- A comprehensive look at the top machine learning highlights from 2019, including an exhaustive dive into NLP frameworks

- Check out the machine learning trends in 2020 – and hear from top experts like Sudalai Rajkumar and Dat Tran!

Introduction

2020 is almost upon us! It’s time to welcome the new year with a splash of machine learning sprinkled into our brand new resolutions. Machine learning will continue to be at the heart of what we do and how we do it.

And what about 2019? What a year it has been! The sheer amount of developments we saw in Natural Language Processing (NLP) blew us away. It was the year of fine-tuning language models and frameworks like Google’s BERT and OpenAI’s GPT-2 (more of all of this later!).

What we loved about 2019 was the community’s embrace of open source releases. They have further lowered the access barriers into machine learning as more and more folks from the community aim to break into this field in 2020. Here’s to all your ambitions and this wonderful career choice!

So, as we get set for the new year, we wanted to take some time and pen down this wide-ranging and thought-provoking article. We will look at the top machine learning developments in 2019 in a technical review manner. We will also look at what we can expect from the different machine learning domains in 2020.

And the cherry on top – hear from top machine learning experts and practitioners like Sudalai Rajkumar (SRK), Dat Tran, Sebastian Ruder and Xander Steenbrugge as they pick out their top trends in 2020!

Areas we’ll cover in this article

- AI and ML for Business Leaders

- Natural Language Processing (NLP)

- Deep Learning and Computer Vision

- The Matter of Ethics in Machine Learning

- Analytics Vidhya’s Take on Machine Learning Trends in 2020

The Business Side of Machine Learning – How Leaders Approach It

The rise of machine learning has disrupted multiple and diverse industries across the globe. In fact, our job roles and functions are getting impacted to a large extent right now (we’re sure you can relate to this!).

Executives, leaders, and CxOs are lining up to integrate machine learning solutions in their organizations. This was almost impossible a few years ago when businesses had to do a lot of things manually infrastructure wise. It’s no coincidence that machine learning projects had a higher chance of failure in 2015 than in 2019.

This uptick in machine learning investment (and board-level buy-in) has happened thanks in large part to the rise of cloud-based platforms. Yes, we’re talking about Google Cloud Platform, Amazon Web Services, etc.

These platforms have made adopting machine learning far easier than ever before thanks to their out-of-the-box solutions.

Let’s look at a few hardcore numbers by Forrester (taken from their report here):

- 53% of global data and analytics decision-makers say they have implemented, are in the process of implementing, or are expanding their implementation of some form of machine learning

- 29% of global developers (manager level and up) have worked on machine learning software in the past year (that’s a BIG number)

Top Trends to Expect in the Business Side of Machine Learning in 2020

So, how do we see 2020 planning out for machine learning? The current level of investment and interest in this field will only intensify! That’s great news for all you machine learning enthusiasts and freshers hoping to make a career in this field.

As we’ll see later in this article, the effort to integrate Natural Language Processing (NLP) based applications will multiply in 2020. So far, we have seen a lot of research in this field – 2020 should see that research become reality in the real-world.

Picking the top trends from the Forrester report we mentioned above:

- 25% of the Fortune 500 will add AI building blocks (e.g. text analytics and machine learning)

- In 2020, senior executives like chief data and analytics officers (CDAOs) who are serious about machine learning will see to it that data science teams have what they need in terms of data

- Expect another new peak in AI funding in 2020!

Natural Language Processing (NLP)

Natural Language Processing took a giant leap in 2019. This is one domain that REALLY took off this year. The sheer amount of breakthroughs and developments that happened – unparalleled.

2018 was a watershed year for NLP. 2019 was essentially about building on that and taking the field forward by leaps and bounds.

If you’re a newcomer to NLP and want to get started with this burgeoning field, I recommend checking out the below comprehensive course:

And with that, let’s check out the top NLP highlights from 2019!

Top Natural Language Processing (NLP) Highlights from 2019

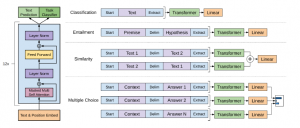

Transformers ruled the NLP landscape

We got the Transformer architecture in 2017 from the “Attention is all you Need” paper. This eventually led to Google open-sourcing BERT, the State-of-Art NLP model. And ever since then, the Transformer has time and again ruled the latest SOTA results in the NLP space.

Google’s Transformer-XL, which was another Transformer based model, outperformed BERT in Language Modeling. This was followed by OpenAI’s GPT-2 – a model that became famous for generating very realistic human-like text by itself:

2019 saw many innovations on BERT itself, like CMU’s XLNet, Facebook AI’s RoBERTa and mBERT (multilingual BERT) in the latter part of the year.

Pre-training of Large Language Models was the norm

Transfer learning in NLP is another trend that picked up in 2019. We started seeing multiple language models that were pre-trained on large unlabelled text corpora thereby enabling them to learn the underlying nuances of language itself.

These models can then be fine-tuned for almost any NLP task and would work well with comparatively fewer data. Major flag bearers for this trend were GPT-2, Transformer-XL, etc.

There was good progress in the method of pre-training itself with models like Baidu’s ERNIE 2.0 that introduced the concept of Continual pre-training. In this framework, different customized tasks can be incrementally introduced at any time:

Increase interest in NLP engineering & deployment

Another cool development in 2019 – the StanfordNLP library! This was open-sourced by Christopher Manning’s StanfordNLP group. This library provides neural network-based models for common text processing tasks like POS tagging, NER, etc.

This was aided by the launch of HuggingFace’s Transformers library. This made huge waves in the community by providing pre-trained models for all the major SOTA models like BERT, XLNet, GPT-2 etc. in a simple Pythonic way.

One of the major positive impacts it had was evident from the fact that spaCy utilized this library to create spacy-transformers, an industry-grade library for text processing that provides SOTA models like BERT, GPT-2, etc. for these tasks.

The Stanford NLP library made it easier to deploy Transformer-based models into production.

Going hand in hand with the large language models that we trained in 2019, there was also a focus towards optimizing these models.

The issue with larger models like BERT, Transformer-XL, GPT-2 is that they are so compute-intensive, it’s almost impractical to use them in a real-life product.

DistilBERT by HuggingFace showed that it is possible to reduce the size of a BERT model by 40% while retaining 97% of its language understanding capabilities and being 60% faster. This was a welcome surprise for the NLP community which was starting to believe that the only way to perform well in NLP is to train larger models:

Another successful and intriguing approach to decrease the size of BERT models came in the form of ALBERT by Google and Toyota Research. ALBERT achieved SOTA on 3 NLP benchmarks (GLUE, SQuAD, RACE):

Rising interest in Speech

The NLP community rekindled its interest in working with audio data with developments like NVIDIA’s NeMo framework which makes it blazingly easy to train models for an end-to-end Automatic Speech Recognition (ASR) system.

These models are called end-to-end because they take speech samples (audio) as input and produce transcripts (text) without any additional information.

Apart from NeMo, NVIDIA also open-sourced QuartzNet, another new end-to-end ASR model architecture based on Jasper, a small, highly efficient model for speech recognition:

More focus towards multilingual models in NLP

How can NLP be truly useful until it’s able to work with multilingual data?

This year saw a renewed interest in exploring multilingual avenues of NLP libraries like StanfordNLP that came with pre-trained models for processing text in 50+ human languages. This, as you can imagine, made a huge impact on the community.

Then, there were successful attempts to make large language models like BERT multilingual by projects like Facebook AI’s XLM mBERT (100+ languages) and CamemBERT which is BERT variant fine-tuned for French:

Top Natural Language Processing Trends to Expect in 2020

So what can we expect from NLP in 2020? This will still be THE field to work in next year.

Here are the key trends Sudalai Rajkumar (SRK), NLP expert and Kaggle Grandmaster, sees happening in NLP:

- Continuing with the current trend, much bigger deep learning models trained on much larger datasets to get state-of-the-art results

- I also think more production applications will be built using NLP. Smaller NLP models with similar performance as that of Transformer models will be helpful for this. So there could be more focus on this area

- Manual annotation of text data is costly (transfer learning models also need tagged data in target domain for fine tuning) and so semi-supervised tagging methods might become prominent

- Explanations / Interpretability of NLP models to understand what the models have learnt under the hood to take unbiased / fair decisions

And this is what Sebastian Ruder, co-creator of ULMFiT and an NLP maestro, has to say about NLP in 2020:

- Rather than just learning from huge datasets, we’ll see more models being evaluated in terms of their their sample efficiency, how well they can learn with tens or hundreds of examples

- We’ll see an increasing emphasis of sparsity and efficiency in models

- We’ll see a focus on multilinguality and more datasets that cover multiple languages

Deep Learning and Computer Vision

This is an interesting section. Have deep learning and computer vision peaked? Have we hit the ceiling when it comes to making breakthroughs in this space?

This is a slight concern right now in the deep learning community. This came out during NeurIPS 2019 as well. 2019, in terms of progress in deep learning and computer vision, was all about fine tuning previous approaches.

But it’s not all concerning news! There were still some amazing open-source deep learning projects that came out this year.

Remember the DeOldify project that sparked a lot of interest from the deep learning community? Its author, Jason Antic, implemented techniques from a number of papers in the generative modeling field, including self-attention GANs, progressively growing GANs and a two time-scale update rule.

Let’s see two more powerful computer vision frameworks that came out in 2019!

Top Deep Learning and Computer Vision Highlights from 2019

SlimYoloV3 for Real-Time Object Detection

Humans can pick out objects in our line of vision in a matter of milliseconds. In fact – just look around you right now. That is real-time object detection. How cool would be it if we could get machines to do that?

Now we can! Thanks primarily to the recent surge of breakthroughs in deep learning and computer vision, we can lean on object detection algorithms to not only detect objects in an image – but to do that with the speed and accuracy of humans.

Real-time object detection models should be able to sense the environment, parse the scene and finally react accordingly. The model should be able to identify what all types of objects are present in the scene. Once the types of objects have been identified, the model should locate the position of these objects by defining a bounding box around each object.

There are multiple components or connections in the model. Some of these connections, after a few iterations, become redundant and hence we can remove these connections from the model. Removing these connections is referred to as pruning.

Pruning will not significantly impact the performance of the model and the computation power will reduce significantly. Hence, in SlimYOLOv3, pruning is performed on convolutional layers. After pruning, we fine-tune the model to compensate for the degradation in the model’s performance.

A pruned model results in fewer trainable parameters and lower computation requirements in comparison to the original YOLOv3 and hence it is more convenient for real-time object detection.

SlimYOLOv3 is the modified version of YOLOv3. The convolutional layers of YOLOv3 are pruned to achieve a slim and faster version.

Facebook AI’s Detectron 2

Detectron2 is Facebook AI Research’s next-generation software system that implements state-of-the-art object detection algorithms. It is a ground-up rewrite of the previous version, Detectron, and it originates from the maskrcnn-benchmark.

- It is powered by the PyTorch deep learning framework

- Includes more features such as panoptic segmentation, densepose, Cascade R-CNN, rotated bounding boxes, etc.

- Can be used as a library to support different projects on top of it. Facebook AI plans to open source more research projects in this way

- It trains much faster!

Top Deep Learning and Computer Vision Trends to Expect in 2020

Here’s Dat Tran, Head of AI at Axel Springer and a Speaker at DataHack Summit 2019, with his thoughts on what we can expect in deep learning and computer vision in 2020:

- Detecting DeepFake will become increasingly important as it’s become easier to generate very realistic faces especially with new approaches such as StyleGAN2. More research will be going into this direction for both visual and (audio) actually.

- Meta-learning and semi-supervised learning: the goal is to continue develop models that generalizes well with less data

No surprise to see that the focus will remain on GANs next year as well.

Top Reinforcement Learning Trends to Expect in 2020

For all those of you who are interested in Reinforcement learning – here are the star-studded trends in the area of RL in 2020 as stated by Xander Steenbrugge.

- For RL to work at scale, current approaches need enormous amounts of training data which can only be realistically harvested if there is a sufficiently accurate and fast simulation environment available. This is the case for many video games (hence these successes) but not for most real-world problems.

- Because of this training paradigm, RL at scale feels almost like we’re simply overfitting a policy on a dense sampling of the problem space, rather than having it learn the underlying causal factors in the environment and generalizing intelligently.

- Similarly, almost all existing Deep RL approaches are incredibly brittle in terms of adversarial examples, out-of-domain generalization, and one-shot-learning; problems that are fundamental to all neural-network based learning paradigms and currently don’t have good solutions.

- OpenAI released a new suite of gym-like environments that uses procedural level generation to test the generalization abilities of Deep RL algorithms

- Many researchers are starting to question and re-evaluate the very definition of what we actually mean by “Intelligence”

- We’re starting to better understand the uncovered weaknesses of neural networks and using that knowledge to make better models.

- Learning from limited data and generalization will become central theme’s of RL research

- Breakthroughs in this domain will be closely tied to advances in the Deep Learning field in general, as the shortcomings they address are fundamental to neural networks as function approximators rather than to the Reinforcement Learning paradigm.

- We will see a growing body of research that tries to leverage the strength of generative models to augment various training processes (from normal classifiers to RL)

The Matter of Ethics in Machine Learning

Data is driving every business and enterprise in today’s world. Beneath this lies the potential to constructively channelize its direction to achieve harmonious growth.

On the flip side, there is also a high potential for its direction to become a mode of destruction for masses. It’s all in our hands, so let’s constructively use the power of machine learning for good.

Ethics is not much talked about in the machine learning realm because people often assume that a computer can’t be biased, prejudiced or harbor stereotypes – right?

Not true! The machine learning techniques themselves might be unbiased, but once we feed in the data, assumptions, etc., there’s a high chance of bias appearing in the model. The reason? These are conditions set by us humans, who may project their biases into the results, even if they weren’t intending to do so (unconscious bias).

How do we Focus on Reducing Bias and Building ‘Good Ethics’?

Our machine learning community is In the process of realizing the massive potential of this field. And as the saying goes – Those that work with data have a lot of power; and with that power, comes great responsibility! This sentence is a hard-hitting one.

There is a dire need for us to set goals to design ethical evidence-based decision-making frameworks. We believe this can only be achieved by understanding the morality, law, and politics of data and artificial intelligence, drawing on world-class research in data science, law, philosophy and beyond.

One way we can measure ethical practices in machine learning is by using a moral compass. This compass diverges around 4 C’s – clarity, consistency, control, and consequences. In each of these C’s, if you can put in foreseeable thoughts, the impact of any implementation going in the wrong direction can potentially be stopped. Here is a very interesting solution to how do we can reduce bias in machine learning by Ideo. Check it out!

Yoshua Bengio, the co-recipient of the 2018 ACM A.M. Turing Award for his work in deep learning, shares his valuable thoughts on Ethics in machine learning:

We are sure you must have heard about Neural Information Processing Systems (NeurIPS) – Its the largest conference in AI. A very interesting paper on ethics in AI which I suggest you should read:

Analytics Vidhya’s Take on Machine Learning Trends in 2020

We’ve covered a lot of trends in this article! From the expected growth areas (such as NLP) to the slight concern around deep learning, there’s a lot to look forward to in 2020. Here are a few key trends Analytics Vidhya predicts in 2020 that we haven’t covered yet in this article:

- The number of jobs in machine learning will continue to rise exponentially in 2020. Thanks in large part to developments in NLP, a lot of organizations will be looking to expand their teams to employ NLP experts. Great time to get into this space

- Speaking of jobs, we feel the role of a data engineer will take on even more importance. Building machine learning pipelines is no easy feat – and amateur data scientists are not exposed to this side of the lifecycle. A data engineer is crucial to a machine learning project and we should see that reflecting in 2020

- AutoML – This took off in 2018 but did not quite scale the heights we expected in 2019. Next year, we should see more of a focus on that as off-the-shelf solutions from AWS and Google Cloud become even more prominent

- Will 2020 be the year we finally see reinforcement learning breakthrough? It’s been in the doldrums for a few years now as transferring research solutions to the real-world has proven a major obstacle. Here’s hoping we see this trend changing in the next few months!

Now it’s your turn to tell us your favorite developments from 2019 in machine learning and where you see the field heading in 2020. Use the comments section below and spark the discussion!