Iterators – One at a Time!

Python is a beautiful programming language. I love the flexibility and the incredible functionality it provides. I love diving into the various nuances of Python and understanding how it responds to different situations.

During my time working with Python, I have come across a few features that are used far less than the amount of complexity they remove would justify. I like to call these “hidden gems” in Python. Not many people know about them, but they’re super useful for analytics and data science professionals.

Python Iterators and Generators fit right into this category. Their potential is immense!

If you’ve ever struggled with handling huge amounts of data (who hasn’t?!) and watched your machine run out of memory, then you’ll love the concept of iterators and generators in Python.

Rather than loading all the data into memory in one go, wouldn’t it be better to work with it in bits, dealing only with the data required at that moment? This would reduce the load on our computer’s memory tremendously. And this is exactly what iterators and generators do!

So let’s dive into the article and explore the world of Python iterators and generators.

I assume you are familiar with the basics of Python.

Here’s what we’ll cover

- What are Iterables?

- What are Python Iterators?

- Creating an Iterator in Python

- Getting Familiar with Generators in Python

- Implementing Generator Expressions in Python

- Why Should you Use Iterators?

What are Iterables?

“Iterables are objects that are capable of returning their members one at a time”.

This is usually done using a for-loop. Objects like lists, tuples, sets, dictionaries, strings, etc. are called iterables. In short, anything you can loop over is an iterable.

We can return the elements of an iterable one-by-one using a for-loop. Here, we iterate over the elements of a list using a for-loop:

```python
# iterables
sample = ['data science', 'business analytics', 'machine learning']

for i in sample:
    print(i)
```

Now that we know what iterables are, how are we actually looping over the values? And how does our loop know when to stop? Enter the iterator!

What are Python Iterators?

An iterator is an object representing a stream of data. Iterators implement something known as the iterator protocol in Python. What is that?

Well, the iterator protocol lets us loop over the items of an iterable using two methods: __iter__() and __next__(). Iterables and iterators both have the __iter__() method, which returns an iterator.

An iterator keeps track of its current position in the iterable, that is, its current state.

What sets iterators apart from iterables is the __next__() method, which only iterators have. It lets an iterator return the next value from the iterable whenever it is asked for one.

Let’s see how this works by creating a simple iterable, a list, and an iterator from it using the __iter__() method:
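The original snippet is not reproduced here, so this is a minimal sketch that reuses the sample list from earlier. It checks which of the two protocol methods each object has, and what happens when we call __next__() on the list itself:

```python
sample = ['data science', 'business analytics', 'machine learning']

# __iter__() on an iterable returns a fresh iterator over it
sample_iter = sample.__iter__()
print(type(sample_iter))  # <class 'list_iterator'>

# both objects have __iter__(), but only the iterator has __next__()
print(hasattr(sample, '__iter__'), hasattr(sample, '__next__'))            # True False
print(hasattr(sample_iter, '__iter__'), hasattr(sample_iter, '__next__'))  # True True

# calling __next__() on the list itself fails with an AttributeError
try:
    sample.__next__()
except AttributeError as err:
    print('AttributeError:', err)
```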

Yes, as I said, iterables have the __iter__() method for creating an iterator, but they do not have the __next__() method; that is what sets them apart from iterators. So let’s try once again, this time retrieving the values through the iterator:
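A minimal sketch of retrieving the values one by one, assuming the same sample list: each __next__() call on the iterator hands back one item and moves the iterator forward.

```python
sample = ['data science', 'business analytics', 'machine learning']
sample_iter = sample.__iter__()

# each __next__() call returns one item and advances the iterator
first = sample_iter.__next__()
second = sample_iter.__next__()
third = sample_iter.__next__()

print(first)   # data science
print(second)  # business analytics
print(third)   # machine learning
```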

Perfect! But wait, didn’t I say iterators also have the __iter__() method? That’s because iterators are also iterables (but not vice versa), and an iterator’s __iter__() simply returns the iterator itself. Let me show you this concept by looping over an iterator:
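Here is a small sketch of that idea, again with the sample list. Because an iterator returns itself from __iter__(), a for-loop can consume it directly, and it picks up from wherever the iterator currently is:

```python
sample = ['data science', 'business analytics', 'machine learning']
sample_iter = sample.__iter__()

# an iterator's __iter__() returns the very same object
print(sample_iter.__iter__() is sample_iter)  # True

# consume the first item by hand, then let a for-loop take the rest
first = sample_iter.__next__()
remaining = []
for item in sample_iter:
    remaining.append(item)

print(first)      # data science
print(remaining)  # ['business analytics', 'machine learning']

# the iterator is now exhausted, so a second pass produces nothing
leftover = list(sample_iter)
print(leftover)   # []
```

Note the last line: unlike a list, an iterator can only be traversed once.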

Cool! But instead of calling the __iter__() and __next__() methods directly, you can use the built-in iter() and next() functions, which provide a neater way to do the same thing:

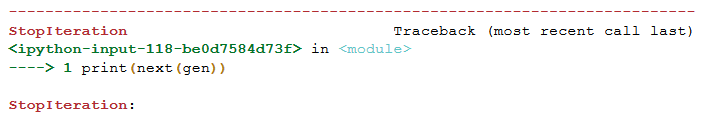
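In code, the built-in functions are a drop-in replacement for the dunder calls. A minimal sketch with the same sample list:

```python
sample = ['data science', 'business analytics', 'machine learning']

# iter(sample) calls sample.__iter__() for us
sample_iter = iter(sample)

# next(sample_iter) calls sample_iter.__next__() for us
first = next(sample_iter)
second = next(sample_iter)
third = next(sample_iter)

print(first)   # data science
print(second)  # business analytics
print(third)   # machine learning
```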

But what if we call next() more times than there are items? What will happen then?

That’s right, we get an error! If we try to access the next value after reaching the end of an iterable, a StopIteration exception will be raised which simply says “you can’t go further!”.
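A short sketch of hitting the end of the iterator. It also shows the optional second argument of the built-in next(), a default value that is returned instead of raising StopIteration:

```python
sample = ['data science', 'business analytics', 'machine learning']
sample_iter = iter(sample)

for _ in range(len(sample)):
    next(sample_iter)  # consume all three items

hit_stop = False
try:
    next(sample_iter)  # one call too many
except StopIteration:
    hit_stop = True
    print('StopIteration: no more items')

# next() also accepts a default, returned instead of raising StopIteration
fallback = next(sample_iter, 'no more items')
print(fallback)  # no more items
```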

We can deal with this error using exception handling. In fact, we can build a loop ourselves to step through the iterable’s items:
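A minimal hand-made loop along those lines: keep calling next() inside a while loop, and leave the loop when StopIteration signals that the items have run out.

```python
sample = ['data science', 'business analytics', 'machine learning']
sample_iter = iter(sample)
collected = []

while True:
    try:
        item = next(sample_iter)  # ask the iterator for the next item
    except StopIteration:
        break                     # nothing left: leave the loop
    collected.append(item)
    print(item)
```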

If you take a step back, you will realize that this is precisely how the for-loop works under the hood: it does automatically what our hand-made loop does manually. That is why for-loops are the preferred way to iterate over iterables; they handle the StopIteration exception for you.

Whenever we iterate over an iterable, the for-loop calls iter() on it to get an iterator, then calls next() on that iterator to fetch each subsequent item until StopIteration is raised.

Creating an Iterator in Python

Now that we know how Python iterators work, we can dive deeper and create one ourselves from scratch just to get a better understanding of how things work.

I am going to create a simple iterator for printing all the even numbers:
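The original class listing is not reproduced here, so below is a minimal sketch reconstructed from the description that follows: a class named Sequence whose num attribute starts at 2 and grows by 2. The exact method bodies are an assumption, not necessarily the author’s code.

```python
class Sequence:
    """Sketch: an iterator over the even numbers 2, 4, 6, ... (no upper bound)."""

    def __init__(self):
        self.num = 2  # the first value the iterator will return

    def __iter__(self):
        return self  # an iterator is its own iterator

    def __next__(self):
        val = self.num   # the current value
        self.num += 2    # advance the state for the next call
        return val
```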

Let’s break down this chunk of Python code:

- The __init__() method initializes each new object and runs automatically when we create an instance of the class. It is used to assign any initial values the object will need later. Here, I have initialized the num variable to 2

- The __iter__() and __next__() methods are what make this class an iterator

- The __iter__() method returns the iterator object. Since an object of this class is itself an iterator, it returns itself

- The __next__() method returns the current value and updates the state for the next call. We increase num by 2 since we only want even numbers

We can step through the Sequence class by creating an object from it and then calling next() on that object:
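A minimal usage sketch. The class is repeated (with the same assumed method bodies) so the snippet runs on its own:

```python
class Sequence:
    """Sketch: even-number iterator with no stop condition."""

    def __init__(self):
        self.num = 2

    def __iter__(self):
        return self

    def __next__(self):
        val = self.num
        self.num += 2
        return val


seq = Sequence()                      # create an object from the class
values = [next(seq) for _ in range(3)]
print(values)      # [2, 4, 6]
print(next(seq))   # 8, and it would keep going for as long as we keep asking
```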

Since I did not specify any condition that ends the sequence, the iterator will keep returning the next value forever. But we can easily add a stop condition:

I have just included an if statement that stops the iteration, by raising StopIteration, once the value goes past 10:
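A sketch of the bounded version, under the same assumptions about the original class. The only change is the check at the top of __next__(), which raises StopIteration once num has passed 10, plus a for-loop to consume it:

```python
class Sequence:
    """Sketch: even-number iterator that stops after 10."""

    def __init__(self):
        self.num = 2

    def __iter__(self):
        return self

    def __next__(self):
        if self.num > 10:      # stop condition: the value has passed 10
            raise StopIteration
        val = self.num
        self.num += 2
        return val


evens = []
for n in Sequence():           # the for-loop calls iter() and next() for us
    evens.append(n)
print(evens)  # [2, 4, 6, 8, 10]
```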

Here, instead of calling next() to get the values from the iterator, I have used a for-loop, which does the same work for us.

Getting Familiar with Generators in Python

Generators are also iterators, but they are much more elegant. With a generator, we can achieve the same thing as with an iterator without writing the __iter__() and __next__() methods in a class. Instead, a simple function does the job:
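As a sketch, here is a generator function that produces the same values as the bounded Sequence iterator. The name even_numbers is hypothetical; the original function is not reproduced here.

```python
def even_numbers():
    """Sketch: generator version of the bounded even-number iterator."""
    num = 2
    while num <= 10:
        yield num   # hand back num and pause here
        num += 2    # resume from this line on the next request


evens = list(even_numbers())
print(evens)  # [2, 4, 6, 8, 10]
```

The state that the class stored in self.num now lives in an ordinary local variable, and Python supplies __iter__() and __next__() for us.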

Did you notice the difference between this generator function and a normal function? Yes, the yield keyword!

Normal functions return values using the return keyword, whereas generator functions produce values using the yield keyword. Syntactically, that is what sets a generator function apart from a normal function, but it changes how the function runs: calling a generator function does not execute its body right away; it returns a generator object instead.

The yield keyword works like return but with an additional capability: it remembers the state of the function. So the next time a value is requested from the generator, it doesn’t start from scratch but resumes from where it left off.

Let’s see how it works:
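The original fib() listing is not reproduced here, so this is a minimal sketch consistent with the description below: a generator named fib with prev and curr variables that stops once prev exceeds 5. The loop condition is an assumption.

```python
def fib():
    """Sketch: yields Fibonacci numbers while prev is at most 5."""
    prev, curr = 0, 1
    while prev <= 5:
        yield prev
        prev, curr = curr, prev + curr


f = fib()
print(type(f))  # <class 'generator'>

first_values = [next(f) for _ in range(4)]
print(first_values)  # [0, 1, 1, 2]
```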

Generators are of the ‘generator’ type, a special kind of iterator, so they are lazy workers too. They won’t produce any value until explicitly asked for one with next().

When we call fib(), we get a generator object, but none of the function body has run yet. The first next() call starts executing the body, which initializes the prev and curr variables, computes a value, and returns it at the yield while remembering the state of the function. So the next time next() is called, the function picks up from where it left off last time and resumes from there.

The function will keep generating values every time next() asks for one until prev becomes greater than 5, at which point a StopIteration exception is raised.
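A sketch of running the generator dry, using the same assumed fib() definition (repeated so the snippet runs on its own). Once the while loop ends, any further next() call raises StopIteration:

```python
def fib():
    """Sketch: yields Fibonacci numbers while prev is at most 5."""
    prev, curr = 0, 1
    while prev <= 5:
        yield prev
        prev, curr = curr, prev + curr


f = fib()
values = list(f)   # drains the generator
print(values)      # [0, 1, 1, 2, 3, 5]

stopped = False
try:
    next(f)        # the generator has finished, so there is nothing left
except StopIteration:
    stopped = True
    print('StopIteration raised')
```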

Implementing Generator Expressions in Python

You don’t have to write a function every time you want a generator. You could instead use a generator expression, which looks much like a list comprehension. The only syntactic difference is that a generator expression is enclosed in parentheses instead of square brackets:

But generator expressions are still lazy, so their values have to be requested with next(). However, you know by now that a for-loop is a better way to get the values:
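A small sketch comparing the two side by side (the squares are just an illustrative example):

```python
# list comprehension: square brackets, every value computed immediately
squares_list = [x * x for x in range(5)]

# generator expression: parentheses, values computed only when requested
squares_gen = (x * x for x in range(5))

print(squares_list)       # [0, 1, 4, 9, 16]
print(type(squares_gen))  # <class 'generator'>

first = next(squares_gen)
second = next(squares_gen)
print(first, second)      # 0 1
```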
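A sketch of consuming a generator expression with a for-loop. It also shows that a generator expression can be passed straight into a function such as sum(), which pulls the values one at a time:

```python
squares_gen = (x * x for x in range(5))

collected = []
for sq in squares_gen:  # the for-loop calls next() until StopIteration
    collected.append(sq)
print(collected)        # [0, 1, 4, 9, 16]

# when it is the only argument, the extra parentheses can be dropped
total = sum(x * x for x in range(5))
print(total)            # 30
```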

Generator expressions are very useful when you want to write simple code because they are easy to read and understand. But they quickly become hard to follow as the logic grows more complex. That is where you will find yourself falling back on generator functions, which give you the flexibility to write more sophisticated logic.
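For example, keeping a running total needs state that carries over from one item to the next, which a generator expression cannot express cleanly, while a generator function handles it naturally. The name running_totals is hypothetical; the standard library’s itertools.accumulate does the same job and is used here as a cross-check.

```python
from itertools import accumulate


def running_totals(values):
    """Sketch: yields the cumulative sum after each item."""
    total = 0
    for v in values:
        total += v   # state carried across items
        yield total


totals = list(running_totals([3, 1, 4, 1, 5]))
print(totals)  # [3, 4, 8, 9, 14]

# the standard library equivalent
print(list(accumulate([3, 1, 4, 1, 5])))  # [3, 4, 8, 9, 14]
```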

Why Should you Use Iterators?

The big question – why should you lean on iterators in the first place?

I mentioned this at the start of the article: iterators save us a ton of memory. This is because lazy iterators such as generators don’t compute their items when they are created, but only when each item is requested.

If I create a list containing 10 million items and a generator producing the same number of items, the difference in their sizes is shocking:

Even though the list and the generator describe exactly the same items, their sizes differ enormously: the list holds a reference to every element, while the generator only keeps the state it needs to produce the next one. That is the beauty of iterators.
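A sketch of that comparison using sys.getsizeof(). It is scaled down to 1 million items (an assumption, so it runs quickly on any machine); the gap only grows with more items.

```python
import sys

N = 1_000_000  # scaled down from 10 million for a quick run

numbers_list = [i for i in range(N)]  # every item is created and stored now
numbers_gen = (i for i in range(N))   # items will be created only on request

list_size = sys.getsizeof(numbers_list)
gen_size = sys.getsizeof(numbers_gen)

print(f'list:      {list_size:,} bytes')
print(f'generator: {gen_size:,} bytes')
```

Keep in mind that sys.getsizeof() counts only the list object itself (its array of references), not the integer objects it points to, so the real memory gap is even larger than these numbers suggest.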

And not just that, you could use iterators to read text from a file line-by-line instead of reading everything in one go. This will again save you a lot of memory especially if the file is huge.

Here, let’s use generators to read a file iteratively. We can create a simple generator expression that reads the file lazily, that is, one line at a time:
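A minimal sketch, assuming a small hypothetical text file that the snippet creates itself so it runs anywhere. A file object already yields one line per iteration; the generator expression wraps it and strips each line only when the loop asks for it.

```python
import os
import tempfile

# hypothetical sample file, created here so the sketch is self-contained
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write('first line\nsecond line\nthird line\n')
    path = tmp.name

try:
    with open(path) as file:
        # lazy: nothing is read until the for-loop requests a line
        lines = (line.rstrip('\n') for line in file)
        collected = []
        for line in lines:
            print(line)
            collected.append(line)
finally:
    os.remove(path)  # clean up the sample file
```

The generator must be consumed while the file is still open; once the with block closes the file, the generator can no longer read from it.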

All this is great, but for a data scientist or an analyst, it all boils down to working with huge datasets in Pandas dataframes. Think of the times you had to deal with huge datasets, with thousands or even millions of rows. If only Pandas had something to deal with this ordeal, life as a data scientist would be so much easier.

Well, you are lucky, because the Pandas read_csv() function has a chunksize parameter that deals with this problem. It lets you load data in chunks of a specified size instead of loading the whole dataset into memory. With chunksize set, read_csv() returns an iterator over dataframes (a TextFileReader object) rather than a single dataframe, so when you are done working with one chunk, you can call next() on it to load the next one. It’s that simple!

I am going to read the Black Friday dataset, which contains 550,068 rows, in chunks of 10 just to demonstrate how this works:
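The Black Friday file itself is not included here, so this sketch uses a small synthetic CSV (25 rows, with hypothetical columns row_id and value) read from memory via io.StringIO. The chunking behaviour is the same for a file on disk.

```python
import io

import pandas as pd

# synthetic stand-in for a large CSV file
csv_data = 'row_id,value\n' + '\n'.join(f'{i},{i * 100}' for i in range(25))

# with chunksize set, read_csv returns a TextFileReader: an iterator of DataFrames
reader = pd.read_csv(io.StringIO(csv_data), chunksize=10)

first_chunk = next(reader)  # only the first 10 rows are parsed here
print(first_chunk.shape)    # (10, 2)

chunk_sizes = [len(first_chunk)]
for chunk in reader:        # the loop picks up where next() left off
    chunk_sizes.append(len(chunk))
print(chunk_sizes)          # [10, 10, 5]
```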

Pretty useful, isn’t it?

End Notes

I am sure by now you are quite accustomed to using iterators and must be thinking of converting all your functions to generators! Got to love the power of Python programming.

Have you used Python iterators and generators before? Or do you want to share some other “hidden gems” with the community? Let us know in the comments section below!
