Introduction to Artificial Intelligence and Machine Learning

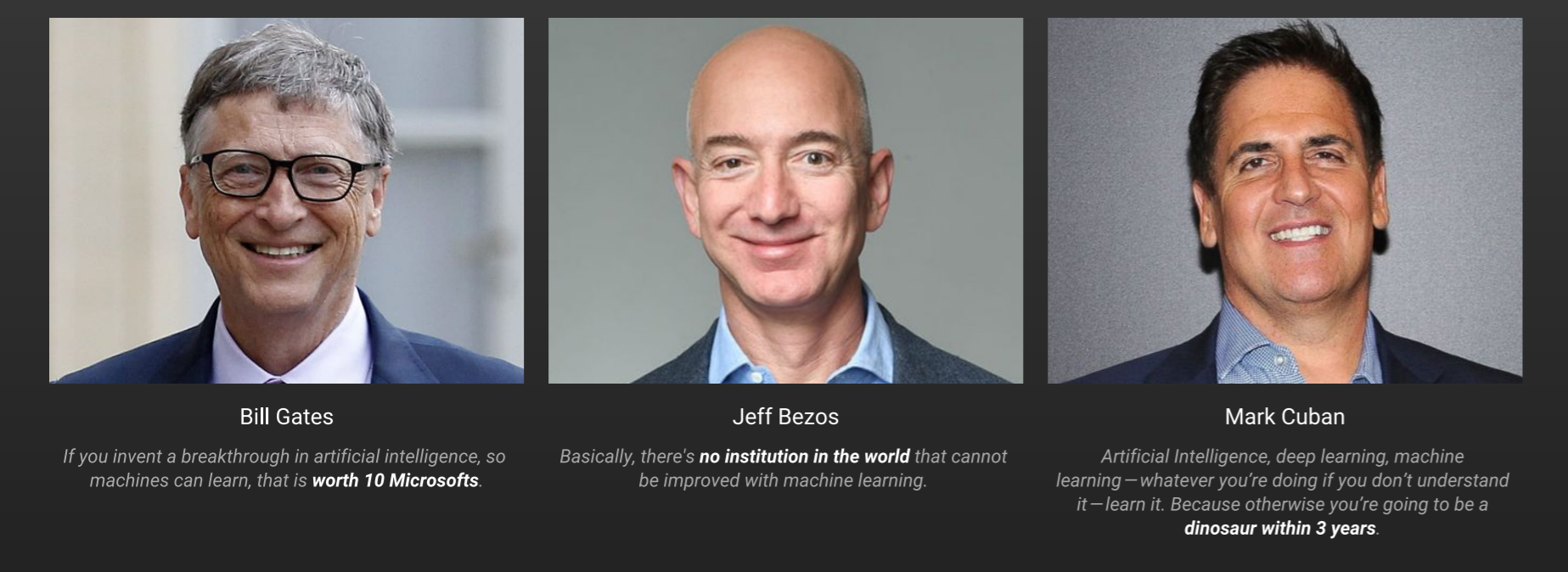

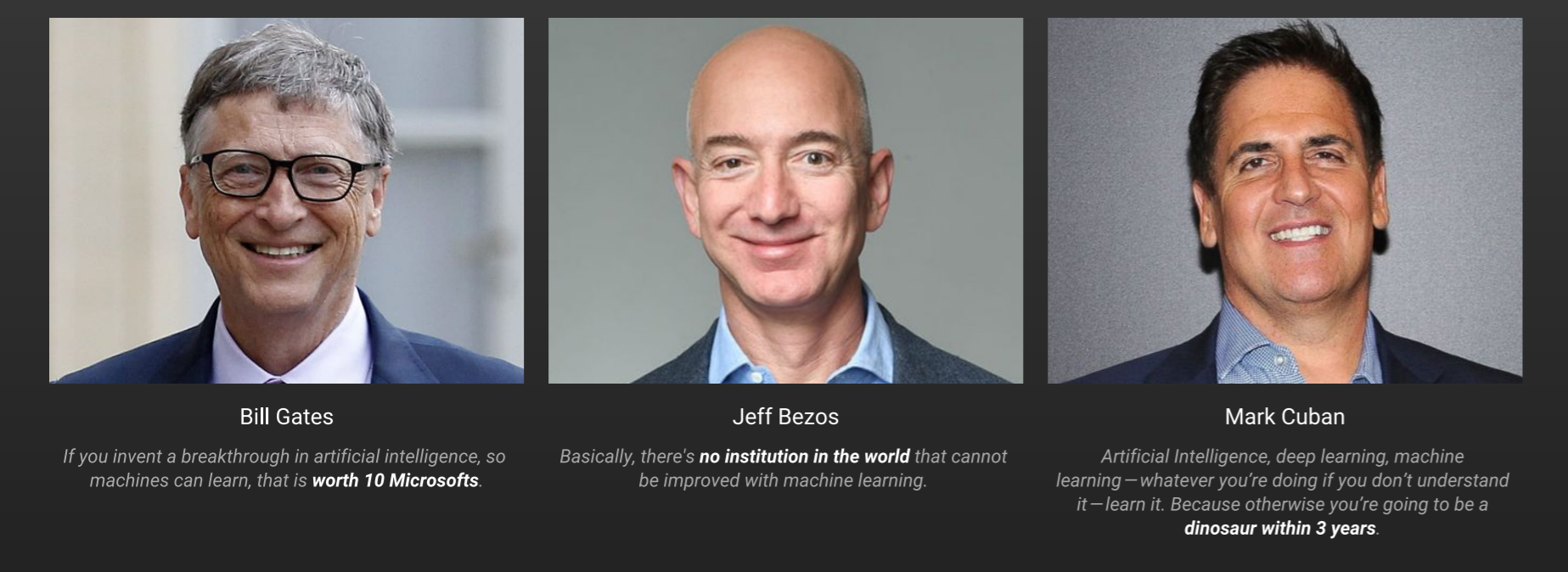

Artificial Intelligence (AI) and its sub-field Machine Learning (ML) have taken the world by storm, powering everything from face-recognition cameras and smart personal assistants to self-driving cars. We are moving towards a world enhanced by these emerging technologies.

It’s the most exciting time to be in this career field! The global Artificial Intelligence market is expected to grow to $400 billion by 2025. From startups to big organizations, everyone wants to join the AI and ML bandwagon to acquire cutting-edge technology.

A recent Gartner report projects that approximately 2.3 million new jobs will be created in Artificial Intelligence and Machine Learning by 2020.

Artificial Intelligence and Machine Learning have become the centerpiece of strategic decision-making for organizations. They are disrupting the way industries and roles function – from sales and marketing to finance and HR, companies are betting big on AI and ML to give them a competitive edge.

Artificial Intelligence can be defined as the field concerned with developing intelligent machines that work and react like humans.

However, there is a caveat. There are plenty of job roles available, but the industry is facing a massive shortage of skilled AI and ML professionals! So how can you fill this gap? You can follow different paths to becoming a skilled professional: read blogs, watch tutorials, or take up a certification course.

But simply taking these courses doesn’t make you a skilled professional; what counts are the skills you acquire and the projects you complete along the way. Analytics Vidhya’s Certified AI & ML BlackBelt Accelerate course focuses on making you an industry-ready data science professional.

Let us look at how you can become an industry-ready full-stack data science professional in just one go!

What if you could master every one of these elements in one place?

The entire AI and ML journey (covered in the next section) is part of Analytics Vidhya’s Certified AI & ML BlackBelt Accelerate program. It includes everything you will need to become an industry-ready professional! But courses curated by data science experts aren’t the program’s only specialty. It also offers one-on-one mentorship calls that customize and align the course just for you. Let’s see how you can become a master of data science in one go –

- 14+ courses

- 25+ projects

- 1:1 Mentorship calls

Excited yet? Let’s deep-dive into the granular skills you need to master to become an industry-ready full-stack AI and ML professional –

Skills you must master!

1. Data Science Tool Kit

– Master Microsoft Excel – If you want to work with numbers, there is no better tool to start with than Microsoft Excel, which is still the most popular tool around. Get comfortable with it.

– Explore Important Formulas and Functions – Master Excel features like pivot tables, quick charts, VLOOKUP, HLOOKUP, IF, FIND and SEARCH, CONCATENATE, SUM, and AVERAGE. You’ll need to have these handy when you’re working on real-world analytics projects.

– Create Charts and Visualization using MS Excel – There is no one-size-fits-all chart, so to create intuitive visualizations you need to understand the different types of charts and when to use each. Excel is a great tool for building advanced yet impactful charts for your analytics audience.

– Get Familiar with MySQL – SQL is a must-have skill for every data science professional. Get familiar with one of the most widely used tools for data querying and analysis; the majority of companies use SQL to make data-driven business decisions.

– Creating and updating reports in SQL – Learn how to create and update records in SQL. This is one of the most frequent tasks you will perform.

– Performing Data Analysis using SQL – The data won’t speak for itself. In business scenarios, you’ll need to analyze data to answer questions such as “Which cities brought in the most revenue?” or “How many users want to become data scientists or business analysts?” and then present the answers to stakeholders.

– Explore Python for Data Science – Python has rapidly become the go-to language in the data science space and is among the first things recruiters search for in a data scientist’s skill set. Mastering Python is essential.

– Important libraries and functions in Python – Python itself isn’t a machine learning language; libraries such as Pandas, NumPy, Scikit-learn, and TensorFlow are what make it so powerful.

– Reading files and manipulating data in Python – Data is rarely found in a clean format. If you feed dirty, untidy data into a model, the model will return garbage, so you must be well-versed in outlier treatment and missing value imputation.

– Working with data frames, lists, and dictionaries – Python offers a range of easy-to-understand options for storing your data. For better data handling you must know them all; they are also frequently asked about in interviews.
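As a rough illustration of the cleaning steps above, the sketch below stores a hypothetical table as a dictionary of lists, imputes missing values with the median, and caps outliers using the common 1.5 * IQR rule. Median imputation and IQR capping are assumptions chosen for illustration; the code uses only the standard library, whereas with pandas you would typically reach for fillna and clip.

```python
import statistics

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def cap_outliers_iqr(values, k=1.5):
    """Clip values outside [Q1 - k*IQR, Q3 + k*IQR] to the nearest bound."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]

# Hypothetical data: a dictionary mapping column names to lists of values
data = {
    "city": ["Delhi", "Mumbai", "Pune", "Delhi", "Pune", "Mumbai", "Delhi", "Pune", "Mumbai"],
    "age": [25, None, 31, 29, 27, 33, 28, 30, 240],  # None = missing, 240 = likely typo
}

data["age"] = cap_outliers_iqr(impute_median(data["age"]))
print(data["age"])
```

The missing age becomes the median of the observed ages (29.5), and the implausible 240 is pulled down to the upper IQR bound instead of being dropped, so the row count is preserved.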

2. Data Exploration and Statistical Inference

– Working with pandas and other Python libraries for data exploration – Pandas is amongst those elite libraries that draw instant recognition from programmers of all backgrounds, from developers to data scientists. According to a recent survey by Stack Overflow, Pandas is the 4th most used library/framework in the world.

– Use Matplotlib and Seaborn for data visualization – These are essential libraries to master at the beginning of your data science journey, and you will rely on them constantly.

– Creating charts to visualize data and generate insights – No Data Science project can be started or ended without data visualization. A good data scientist is always a good storyteller. And a storyteller needs tools to visualize the facts and data.

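– Univariate and Bivariate analysis using Python – Getting a feel for the data is important. Univariate analysis examines one variable at a time (its distribution, central tendency, and spread), while bivariate analysis examines the relationship between two variables. Together they uncover patterns hidden in the dataset that will help you in later stages as well.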

– Perform Statistical Analysis on real-world datasets – Machine learning builds on statistical analysis, and it is good practice to perform basic statistical tests at the beginning to understand the quality of the dataset.

– Build and Validate hypotheses using statistical tests – This is probably the most underrated yet most important initial step in a machine learning project, because every data science project starts out as a hypothesis.

– Generating useful insights from the data – Converting data into insights is the goal of a data scientist. If you follow all of the above steps properly, you will uncover some hidden gems in your data.
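To make the hypothesis-testing step concrete, here is a minimal sketch of an exact two-sided permutation test for a difference in means, written with only the standard library. The technique is swapped in for illustration (scipy.stats.ttest_ind is a common alternative), and the two samples are hypothetical session durations for two page designs. Under the null hypothesis that the design makes no difference, every relabeling of the pooled values is equally likely, so the p-value is the share of relabelings at least as extreme as the observed split.

```python
import itertools
import statistics

def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means (returns the p-value)."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    extreme = 0
    total = 0
    # Enumerate every way of choosing which pooled values form "group A"
    for idx in itertools.combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = abs(statistics.fmean(a) - statistics.fmean(b))
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
        total += 1
    return extreme / total

# Hypothetical session durations (minutes) for two page designs
design_a = [10, 11, 12, 13, 14]
design_b = [20, 21, 22, 23, 24]
p_value = permutation_test(design_a, design_b)
print(round(p_value, 4))  # 0.0079 (2 of the 252 relabelings)
```

A p-value this small suggests rejecting the null hypothesis. Exact enumeration grows combinatorially, so for larger samples you would draw random permutations instead.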

– Getting familiar with the Tableau interface – Tableau is the gold standard when it comes to building industry-level dashboards and performing elite-level storyboarding. In fact, Tableau has changed the way industries analyze and present data. Get comfortable with the Tableau interface.

– Build bubble charts, waterfall charts, geo-location charts, and many others – As an AI and ML professional, you need effective and aesthetic charts for storytelling. Tableau offers everything from basic charts like scatter plots and histograms to bubble charts and waterfall charts. All you need to do is drag and drop!

– Learn to create Dashboards in Tableau – Dashboards are an amazing way to present your analysis, and they give viewers the freedom to break it down themselves. Tableau dashboards are interactive, so viewers can select various parameters.

– Master storyboarding in Tableau – People usually use PowerPoint or Word to present their findings. There’s a better way: you can create slides directly in Tableau and place visualizations on them. You can even add an already built dashboard to the storyboard without ever leaving the tool!

– Perform feature engineering in Tableau – Most people think Tableau is just a drag-and-drop tool that doesn’t offer flexibility and customization, but it offers much more than that. You can build new features in Tableau, for example with calculated fields, to understand the analysis better.

– Dealing with ambiguous business problems – Business problems do not come pre-defined; they are unstructured and full of uncertain ideas. It is the job of a data scientist to understand the needs of the stakeholders.

– Structure a business problem into a data science problem – Once the needs of the stakeholders are defined, you can apply structured thinking to break down the business goals and convert them into data science problems.

– Present analysis and business insights in an impactful manner – Business leaders need confidence in order to make any business decisions. Data Scientists need to present their analysis in an impactful yet simple-to-understand manner.

– Communicate ideas and insights to the stakeholders – Communication skills are the hidden trait of a data scientist. No matter how insightful your analysis and results are, if you can’t communicate them, it all goes in vain.

– Learn Important Machine Learning concepts – Start by understanding the basics of machine learning, such as supervised and unsupervised learning, structured and unstructured data, and other concepts that form the backbone of machine learning.

– Perform data cleaning and Preprocessing – Data cleaning and preprocessing are often said to take about 80% of the time in a data science project, so mastering these techniques is a necessity. Typical tasks include missing value imputation, outlier detection, and data transformation.

– In-depth understanding of Basic ML models – Now it’s time to move on to the most exciting step of machine learning: the models themselves. Learn about basic machine learning models such as linear regression, logistic regression, and k-nearest neighbors (KNN).

– Math Behind each Machine Learning Algorithm – Implementing a machine learning model in Python takes only two or three lines of code, but the mathematics behind it is complex. To become a good data scientist, you must understand the mathematics at work inside the model. Only then will you be able to build better models for your own scenario.

– Building Classification and Regression Models – It’s time to get your hands a little dirty. Solve different types of problems to get good exposure. Start by building classification and regression models and getting to know their evaluation metrics, such as accuracy for classification and RMSE for regression.

– Hyperparameter Tuning to improve model – Building a model is an iterative process, and hyperparameter tuning is an art acquired through experience. There are several ways to tune hyperparameters, such as grid search and Bayesian optimization.

– Solving real-world business problems using Machine Learning – Now it is time to put all the pieces of the puzzle together and solve a real-life problem statement end to end. This step will polish everything you have learned.
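The model-building, evaluation, and tuning steps above can be illustrated with a small from-scratch sketch: a k-nearest-neighbors classifier, validation accuracy as the metric, and a grid search over the hyperparameter k. The toy data, including one deliberately mislabeled training point, is hypothetical; in practice scikit-learn’s KNeighborsClassifier and GridSearchCV (which adds cross-validation) would do this work.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Predict a label by majority vote among the k nearest training points."""
    neighbours = sorted(train, key=lambda row: math.dist(row[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

def accuracy(train, valid, k):
    """Fraction of validation points whose label is predicted correctly."""
    correct = sum(knn_predict(train, x, k) == y for x, y in valid)
    return correct / len(valid)

def grid_search(train, valid, k_values):
    """Score every candidate k; return the best k and all scores."""
    scores = {k: accuracy(train, valid, k) for k in k_values}
    best_k = max(scores, key=scores.get)  # first k that reaches the top score
    return best_k, scores

# Hypothetical toy data: two clusters plus one mislabeled point near cluster "a"
train = [
    ((1, 1), "a"), ((1, 2), "a"), ((2, 1), "a"), ((1.5, 1.6), "b"),
    ((8, 8), "b"), ((8, 9), "b"), ((9, 8), "b"),
]
valid = [((1.5, 1.5), "a"), ((8.5, 8.5), "b")]

best_k, scores = grid_search(train, valid, [1, 3, 5])
print(best_k, scores)  # 3 {1: 0.5, 3: 1.0, 5: 1.0}
```

With k = 1 the classifier is fooled by the single noisy point, while larger k smooths it out; that trade-off is exactly what hyperparameter tuning explores.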

6. Feature Selection and Engineering

– Learn the art of Feature engineering – Like hyperparameter tuning, feature engineering is an art: it is acquired through experience and executed over multiple iterations. Many data science competition winners have credited feature engineering for their results.

– Extracting Features from the image and text-based data – Machine learning models only understand numbers, so we must extract numerical features from text and image data before building a model.

– Automated Feature Engineering Tool – With the advent of Automated Machine Learning tools, you can also take the help of automated feature engineering tools like Featuretools, Autofeat, Tsfresh, and so on.

– Concept of dimensionality reduction – When working with real-world data, especially text and images, the number of features can grow to thousands of columns. That is where dimensionality reduction becomes important.

– Feature Selection and Elimination Techniques – One way of reducing the dimensions is simply to select the important features and eliminate the others. Scikit-learn’s sklearn.feature_selection module offers several tools for this task.

– Detailed Understanding of Principal Component Analysis (PCA) – PCA is a method of obtaining important variables (in the form of components) from a large set of variables in a dataset. It extracts a low-dimensional set of features by projecting the high-dimensional data onto the directions of greatest variance, with the aim of capturing as much information as possible.

– Concept of Factor Analysis – Factor analysis is one of the unsupervised machine learning algorithms which is used for dimensionality reduction. This algorithm creates factors from the observed variables to represent the common variance i.e. variance due to correlation among the observed variables.
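To build intuition for PCA, here is a from-scratch sketch for two-dimensional data. It computes the sample covariance matrix, takes the largest eigenvalue and its eigenvector using the closed-form solution for a symmetric 2 × 2 matrix, and projects the centered points onto that first principal component. Restricting it to 2-D and the sample points are assumptions to keep it dependency-free; for real work, scikit-learn’s PCA class handles any number of dimensions.

```python
import math
import statistics

def pca_2d(points):
    """First principal component of 2-D points, plus projections and explained variance."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    n = len(points)
    # Sample covariance matrix [[a, b], [b, c]]
    a = sum((x - mx) ** 2 for x in xs) / (n - 1)
    c = sum((y - my) ** 2 for y in ys) / (n - 1)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    # Closed-form eigenvalues of a symmetric 2x2 matrix (lam1 >= lam2)
    mid = (a + c) / 2
    spread = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    lam1, lam2 = mid + spread, mid - spread
    # Eigenvector belonging to the largest eigenvalue lam1
    if b != 0:
        vx, vy = lam1 - c, b
    elif a >= c:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    vx, vy = vx / norm, vy / norm
    # Project the centered points onto the first component
    projected = [(x - mx) * vx + (y - my) * vy for x, y in zip(xs, ys)]
    total = lam1 + lam2
    explained = lam1 / total if total > 0 else 0.0
    return (vx, vy), projected, explained

points = [(0, 0), (1, 2), (2, 4), (3, 6)]  # hypothetical points on the line y = 2x
direction, projected, explained = pca_2d(points)
print(direction, round(explained, 6))
```

Because these points lie exactly on a line, one component captures essentially all of the variance (explained ratio of about 1.0), so the two columns could be replaced by the single projected column without losing information.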

– Explore the Advanced ML concepts and Algorithms – So far, you have built and experienced the basic machine learning pipeline. Over the past decade, machine learning has seen a new class of algorithms that deliver more efficient and more accurate results. Get accustomed to advanced concepts like ensemble learning and its variants.

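– Use Ensemble Learning Techniques (Stacking and Blending) – Stacking and blending are basic ensemble techniques that combine the predictions of several models using a second-level model. Also learn how multiple decision trees work together in parallel (bagging, as in random forests) and in series (boosting), along with their important hyperparameters.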

– Understand and Implement Boosting Algorithms – Boosting algorithms feature among the winning solutions of a great many data science competitions, so boosting is an essential advanced concept to keep at your fingertips. Start by understanding the algorithm and the math behind it, move on to its hyperparameter optimization, and then apply it to real-world problems.

– Learn to handle Text data and Image Data – There are many types of data; the most common is numerical, followed by text and image data. With the advent of the internet and social media, the volume of text and image data has exploded. Get started with text data using libraries like NLTK, gensim, and TextBlob.

– Working with structured and unstructured data – Structured data is organized in tables of rows and columns, whereas unstructured data (such as free text, images, and audio) is not. Unstructured data usually calls for algorithms that can find and extract patterns from it.

– Dealing with unsupervised learning problems – Unsupervised learning algorithms are applied to unlabeled data: there is no target variable. Instead, they work by finding common patterns within the data.

– Clustering Algorithms including k-means and Hierarchical clustering – Clustering algorithms form the backbone of unsupervised learning. K-means and hierarchical clustering are used to group similar data points together.
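The sketch below implements the classic (Lloyd’s) version of k-means from scratch: assign each point to its nearest centroid, move each centroid to the mean of its assigned points, and repeat until the centroids stop changing. Seeding by sampling k data points and the toy points are assumptions; scikit-learn’s KMeans adds smarter initialization (k-means++) and multiple restarts.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's k-means on tuples of coordinates; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                new_centroids.append(tuple(sum(coord) / len(cluster) for coord in zip(*cluster)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: centroids (and so assignments) no longer change
        centroids = new_centroids
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Hypothetical 2-D points forming two well-separated groups
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, labels = kmeans(points, k=2)
print(centroids, labels)
```

The label numbers themselves (0 or 1) are arbitrary; what matters is that points in the same group share a label.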

– Important concepts of Deep Learning – Learn the fundamental concepts of deep learning: what a neuron is, forward propagation, backpropagation, the role of gradient descent, activation functions, and biases.

– Working with Neural Network from Scratch – Build a neural network from scratch. Although you won’t need to build models from scratch in practice, this exercise will help clarify your concepts. You can do it with NumPy or TensorFlow.

– Explore different types of Neural Networks (CNN, RNN, and more) – There is no one-size-fits-all approach in deep learning. Learn the different types of neural networks, such as ANN, CNN, RNN, and LSTM, and the applications of each.

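– Activation Functions and Optimizers for Deep Learning – Activation functions introduce non-linearity, which lets a network learn complex patterns rather than only linear relationships. Go through different activation functions like linear, sigmoid, ReLU, and softmax, as well as the optimizers (such as stochastic gradient descent and Adam) that update the network’s weights during training.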

– Build Deep Learning models to tackle real-life problems – What’s more exciting than applying everything you have learned to real-world problems? Select the type of problem you want to work on (image, time-series, or text data) and get started.

– Learn to tune the hyperparameters of Neural Networks – Hyperparameters are settings chosen before training rather than learned from the data, such as the number of hidden layers and the learning rate.

– Explore various Deep Learning Frameworks – The fast adoption of deep learning owes much to frameworks like TensorFlow, Keras, and PyTorch, which have enabled rapid innovation. Try out different frameworks and adopt the one that suits your application.

– Get familiar with the world of Computer Vision – Computer Vision is one of the hottest topics in machine learning. Start by understanding basic concepts like object detection, object classification, face detection, and image segmentation.

– Transfer Learning for Computer Vision – Transfer learning has transformed the world of Computer Vision. It means taking a model that was pre-trained on one task and reusing it as the starting point for a new, related task. A pre-trained model is a model already designed and trained by a person or team to solve a specific problem.

– Work with a popular Deep Learning Framework – PyTorch – PyTorch is one of the most popular up-and-coming deep learning frameworks, and it lets you build complex neural networks. It is rapidly growing in the research community, and companies like Facebook and Uber use it as well.

– Learn State-of-the-art Algorithms like YOLO, SSD, RCNN, and more – The deep learning landscape is changing rapidly. If you want a high rank in your next hackathon, or more accuracy in your deep learning projects, you must keep up with state-of-the-art algorithms, since improved ones are released regularly.

– Work on different types of problems – There isn’t just a single CV problem out there. Done with image detection? Move on to image recognition. Perhaps try out object recognition in a video. There is an endless number of problems you can try in Computer vision.

– Advanced Computer Vision Problems like Image Segmentation and Image Generation – With the advent of self-driving cars, we are moving to a much more advanced set of computer vision problems. Object detection alone cannot reveal the exact location and shape of an object; it only returns a bounding box. We move to image segmentation, which labels individual pixels, for more granular information about the object.

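– Understand how GANs work – Generative models and GANs are at the core of recent progress in computer vision applications. GANs, or Generative Adversarial Networks, generate new data by training two networks against each other: a generator that produces synthetic samples and a discriminator that tries to tell them apart from real ones.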

– Handling Text Data (Cleaning and Pre-processing) – Text data comes with its own set of challenges. For example, if you work on social media data, you might come across incomplete words and acronyms that may be gibberish to a machine learning model. Before moving on to advanced topics, understand basic cleaning steps like lowercasing, punctuation removal, stopword removal, frequent and rare word removal, spelling correction, tokenization, stemming, and lemmatization.

– Use spaCy, Rasa, and Regex for exploring and processing Text Data – These tools provide modules and functions for complex text preprocessing steps, which cuts down the time and effort taken in exploring the dataset.

– Information Extraction and Retrieval from text-based data – The task of Information Extraction (IE) involves extracting meaningful information from unstructured text data and presenting it in a structured format. Using information extraction, you can retrieve pre-defined information such as the name of a person, the location of an organization, or identify a relation between entities, and save this information in a structured format such as a database.

– Understand Language Modelling – A language model assigns probabilities to sequences of words, and it is the main ingredient in many modern NLP tasks such as machine translation, text summarization, and speech recognition. You can start with statistical language models such as n-gram models or delve into neural language models.

– Learn Advanced Feature Engineering techniques – The next step is Feature Engineering in which the raw dataset is transformed into flat features that can be used in a machine learning model. This step also includes the process of creating new features from the existing data. You can go with count vectors, TF-IDF features, or word embeddings.

– Build NLP models for Text Classification – The goal of text classification is to automatically classify text documents into one or more predefined categories. It mainly consists of four parts: dataset preparation, feature engineering, model building, and improving the performance of the model.

– Understand Topic modeling – As the name suggests, topic modeling is a process that automatically identifies the topics present in a text object and derives hidden patterns exhibited by a text corpus, thus assisting better decision-making. For example, a good topic model might group “health”, “doctor”, “patient”, and “hospital” into a Healthcare topic. LDA (Latent Dirichlet Allocation) is a popular algorithm for topic modeling.

– Work on Industry Relevant Projects – Projects are the most important component of learning NLP and carry extra weight during an interview. So go to DataHack and get started with an NLP problem statement.

– Understand the concept of Sequence-to-Sequence Modeling – In these models, both the input and the output are sequences, which can be of different lengths. You will also need a thorough understanding of the encoder-decoder architecture along with the attention mechanism.

– Build a Deep Learning Model for Language translation in PyTorch – Work on a project where you apply sequence-to-sequence modeling to build a deep learning model that automatically translates one language into another using PyTorch.

– Learn to use the Transformers library by Hugging Face – The Transformer architecture achieved state-of-the-art results on sequence modeling tasks. The amazing library by Hugging Face has democratized the use of Transformers. It offers a large number of Transformer-based pre-trained models that can also be fine-tuned.

– Use Transformers to perform transfer learning in NLP – Understand Transformer models in detail and learn how they are used to perform transfer learning in NLP by working hands-on with the Hugging Face Transformers library.

– Build and Deploy your own chatbot – Conversational agents, or chatbots, have been instrumental across several industries. Work on a project in which you build a chatbot using Rasa, an open-source tool. In Blackbelt+, you will also learn to deploy the chatbot in Slack.
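The text cleaning and feature-engineering steps above can be sketched in plain Python: lowercasing, punctuation removal, whitespace tokenization, and stopword removal, followed by TF-IDF weighting. The tiny stopword list and the sample sentences are hypothetical, and this uses the textbook formula tf × log(N / df); scikit-learn’s TfidfVectorizer uses a smoothed variant by default, so its numbers will differ.

```python
import math
import re
from collections import Counter

# A tiny, hypothetical stopword list; real projects use a fuller list (e.g. from NLTK)
STOPWORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in"}

def preprocess(text):
    """Lowercase, strip punctuation, tokenize on whitespace, drop stopwords."""
    text = text.lower()
    text = re.sub(r"[^\w\s]", " ", text)  # replace punctuation with spaces
    return [token for token in text.split() if token not in STOPWORDS]

def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [preprocess(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors

docs = [
    "The doctor treats the patient.",
    "The patient visits the hospital.",
    "Stocks and bonds are investments.",
]
vectors = tf_idf(docs)
print(preprocess(docs[0]))  # ['doctor', 'treats', 'patient']
print(vectors[0])
```

In the first document, “doctor” outweighs “patient” because “patient” also appears in the second document, so its inverse document frequency is lower; that is the intuition behind TF-IDF as a feature.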

– Learn to work with audio-based data – A typical project here is to build a deep learning system that converts speech input to text. Such a system can be used for tasks such as controlling home automation systems with just a few voice commands.

– Recommender Systems in the industry – From Amazon to Netflix, Google to Goodreads, recommendation engines are one of the most widely used applications of machine learning techniques. A recommendation engine filters the data using different algorithms and recommends the most relevant items to users. It first captures a customer’s past behavior and, based on that, recommends products the user is likely to buy.

– Detailed Taxonomy of types of Recommender Systems – Understand the various types of recommender systems used in the industry. What are the pros and cons of each of the methods?

– Collaborative Filtering Methods – The collaborative filtering algorithm uses “User Behavior” for recommending items. It is one of the most commonly used algorithms in the industry because it does not depend on any additional information about the items.

– Content-Based Recommender Systems – This algorithm recommends products that are similar to the ones that a user has liked in the past. For example, if a person has liked the movie “Inception”, then this algorithm will recommend movies that fall under the same genre. But how does the algorithm understand which genre to pick and recommend movies from?

– Knowledge-Based & Hybrid Recommender Systems – The Hybrid Recommender combines the ranks provided by the collaborative and content-based recommender system and makes final recommendations based on the combined rankings.

– Market Basket Analysis & Association Rules – Market Basket Analysis (MBA) is a widely used technique among marketers to identify the combinations of products or services that customers frequently buy together. It is also called product association analysis. Association analysis is most commonly done with the Apriori algorithm, and its outcome is a set of association rules. Marketers use these rules to strategize their recommendations.
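As a small illustration of association rules, the sketch below computes support (share of baskets containing both items), confidence (how often the right-hand item appears given the left-hand one), and lift (confidence divided by the right-hand item’s own support) for item pairs. It implements only the first two levels of Apriori, keeping frequent single items and then counting pairs built from them; a full Apriori implementation keeps growing itemsets level by level. The baskets and thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations

def association_rules(transactions, min_support=0.3, min_confidence=0.6):
    """Return (lhs, rhs, support, confidence, lift) rules for item pairs."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in set(t))
    # Apriori pruning: a pair can only be frequent if both of its items are frequent
    frequent = {item for item, c in item_counts.items() if c / n >= min_support}
    pair_counts = Counter(
        pair
        for t in transactions
        for pair in combinations(sorted(set(t) & frequent), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]  # estimate of P(rhs | lhs)
            if confidence >= min_confidence:
                lift = confidence / (item_counts[rhs] / n)  # > 1 suggests a positive association
                rules.append((lhs, rhs, support, confidence, lift))
    return rules

# Hypothetical shopping baskets
baskets = [
    ["bread", "milk"],
    ["bread", "butter"],
    ["bread", "milk", "butter"],
    ["milk"],
    ["bread", "milk"],
]
rules = association_rules(baskets)
for lhs, rhs, support, confidence, lift in rules:
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}, lift={lift:.2f}")
```

Here “butter -> bread” holds in every butter basket (confidence 1.0, lift 1.25), while “bread -> butter” falls below the confidence threshold; rules are directional.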

13. Time Series

– Important concepts of Time Series Forecasting – Time series analysis is an extremely important concept in business, with examples ranging from forecasting next year’s sales to analyzing website traffic. Learn the core concepts of time series forecasting, like its properties, components, and seasonality.

– Machine Learning techniques for Time Series forecasting – We cannot always take standard machine learning techniques and apply them directly to time series data, because the observations are ordered in time and depend on one another. Instead, there are specialized methods like moving averages, exponential smoothing, Holt’s linear trend model, and other advanced techniques.

– Exponential Smoothing Methods for forecasting – This technique assigns exponentially decreasing weights to past observations, so recent observations count more than those from the distant past.

– ARIMA and SARIMA Model – ARIMA stands for AutoRegressive Integrated Moving Average. For a stationary time series (or one made stationary by differencing), an ARIMA forecast is simply a linear equation, much like linear regression, in past values and past forecast errors. The SARIMA model additionally takes into account the seasonality of the time series.

– Tuning Parameters for ARIMA – Choosing the model’s input parameters is perhaps the most important step. ACF (Autocorrelation Function) and PACF (Partial Autocorrelation Function) plots are used to determine them: the PACF typically guides the autoregressive order p, and the ACF the moving-average order q.

– Deep Learning for time series – Deep learning offers promising results on time series data; one such deep learning architecture is the LSTM.

– Solve Real-world business problems – Your time series learning is incomplete without real-world problems. DataHack offers plenty of examples to try out.
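Two of the time series ideas above can be sketched in a few lines of plain Python. The first function is simple exponential smoothing: each smoothed level is alpha × current value + (1 − alpha) × previous level, so an observation k steps back ends up with weight alpha × (1 − alpha)^k. The second computes the sample autocorrelation that ACF plots display. The smoothing factor and the series are hypothetical; statsmodels provides production versions of both (SimpleExpSmoothing and acf).

```python
import statistics

def simple_exponential_smoothing(series, alpha):
    """Return smoothed levels; the last level is the one-step-ahead forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    levels = [level]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels

def autocorrelation(series, lag):
    """Sample autocorrelation of the series at the given lag (1.0 at lag 0)."""
    mean = statistics.fmean(series)
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    num = sum(dev[t] * dev[t - lag] for t in range(lag, len(series)))
    return num / denom

sales = [10, 20, 30]  # hypothetical monthly sales
print(simple_exponential_smoothing(sales, alpha=0.5))  # [10, 15.0, 22.5]
print(autocorrelation([1, 2, 3, 4, 5], lag=1))  # 0.4
```

A larger alpha makes the forecast react faster to recent changes; a smaller alpha smooths out more noise. Choosing it is itself a tuning decision.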

– Dos and Don’ts for Resume Building – Building a resume sounds simple, yet it is one of the most complex tasks for anyone. Search online for a list of dos and don’ts and follow along with the points.

– Tips and strategies to build the perfect resume – There are certain tips and tricks you can employ in your resume to get a better shot at an interview. Data science is a field built on solving data-driven problems, so mention projects that support your skills.

– Sample resumes for Data Science profiles – Definitely take a look at sample resumes of data scientists; they will give you a clear picture for your next resume.

– Preparing for Data Science Interviews – Once your resume has landed you an interview, it is time to seize the opportunity. Make sure you review your resume thoroughly, along with all the topics and skills you have mentioned on it.

– Understanding the important skills required – Writing Machine Learning in the skills section won’t help you get the job. Try to paint a clear picture of yourself, mention the algorithms you know or even the libraries you are comfortable with. Also, don’t forget to mention important soft skills like communication skills and structured thinking.

– How to build your digital Presence – The world is going digital, and it’s really important to get noticed in the crowd. Build your GitHub profile and move all your projects there with proper descriptions. You can include these GitHub links in your resume. Also, keep your LinkedIn profile updated; it is where recruiters will find you.

– List of Interview Questions for Data Science – Data Science interviews can run smoothly if you prepare for some frequently asked questions. These questions also help you clear your concepts.

That is a lot of skills, and you may feel exhausted or even intimidated looking at the list. But don’t worry: with the right mentor, the right course, and dedication, you can achieve it all!

Let’s take a look at all the courses included in the Certified AI & ML BlackBelt Accelerate program.

End-to-End courses to become a Blackbelt in AI and ML

Data Science and Machine Learning

- Applied Machine Learning – Beginner to Professional: This course starts from the basics of Python and statistics and provides you with all the tools and techniques you need to apply Data Science & Machine Learning to solve business problems. We will cover the basics of machine learning and how to build, improve, and deploy your machine learning models.

- Retail Demand Prediction using Machine Learning – This course provides an end-to-end case study to build retail demand prediction for a large retailer. Starting from the business problem, converting it into a data problem, and applying machine learning to solve the problem – you see an end-to-end case study and project in Machine Learning.

- Fundamentals of Deep Learning – Starting from the basics of Neural Networks and Deep Learning, this course provides you with all the basics of Deep Learning, its various architectures, and its applications to build intelligence on images and text.

- AI & ML for Business Leaders – If you are a Business Leader and want to understand how Machine Learning and Artificial Intelligence are applicable to various business functions – this is just the right course for you.

Machine Learning and Deep Learning Specializations

- Natural Language Processing (NLP) using Python: Want to become an NLP expert? This course is the perfect starting point, covering the core components you need to start your NLP journey.

- Natural Language Processing (NLP) using PyTorch: This course teaches you the latest tools and techniques using the cutting-edge library PyTorch.

- Computer Vision using Deep Learning: Computer vision is the future – the number of jobs that will open up in deep learning in the coming years will be staggering. In this course, you will work on real-world computer vision case studies, learn the fundamentals of deep learning, and get familiar with tips and tricks to improve your computer vision models.

Master the Tools and Languages

- Tableau 2.0: Master Tableau from Scratch: Convert your data into actionable insights, create dashboards to impress your clients, and learn Tableau tips, tricks, and best practices for your analytics, business intelligence, or data science role!

- Microsoft Excel: Beginners to Advanced: MS Excel is still the most widely used tool for day-to-day analysis across the world. By the end of this course, you will have mastered Excel, Pivot Tables, and Conditional Formatting, and will be ready to crunch numbers like a pro!

- Structured Query Language (SQL) for Data Science: SQL is a must-have skill for every data science professional. This course will start from the basics of databases and structured query language (SQL) and teach you everything you would need in any data science profession.

Preparing for your next Data Science Interview

- Ace Data Science Interviews: Data science interviews can be daunting if you don’t know what to expect. You might feel you have all the knowledge and yet you keep getting rejected. This course will guide you on how to navigate data science interviews, lay down a comprehensive 7-step process, and help you land your dream data science role!

- Structured Thinking and Communication for Data Science Professionals: Whether you are creating dashboards for your business customers or solving cutting-edge machine learning problems, structured thinking and communication are must-have skills for every data professional.

- Up-Level your Data Science Resume: Crafting the perfect data science resume is critical to landing your first data science role. Learn the various aspects of designing a resume that will give you the best chance of landing that interview you’ve been looking for.

There are plenty of data scientists with a certification from XYZ institute, but that doesn’t make them special; it is the skills acquired and projects completed that matter. Analytics Vidhya’s AI & ML BlackBelt Accelerate course makes sure that you become an industry-ready professional. Here’s an exciting list of what you get –

- Upskill yourself for the AI Revolution: Artificial Intelligence has already started making a huge impact in various industries, roles, and functions. The time to upskill yourself and become familiar with artificial intelligence and machine learning is NOW. This comprehensive program will enable you to do just that.

- Easy-to-understand content: Understanding data science concepts can be difficult. That’s why all the courses in this program have been curated and designed for people from all walks of life. We don’t assume anything – this is AI from scratch.

- Experienced Instructors: All the material in this program was created by instructors who bring immense industry experience. Combined, we have multiple decades of teaching experience.

- Industry Relevant: All the courses in this program have been vetted by industry experts. This ensures relevance in the industry and gives you the content that matters most.

- Real-life problems: All projects in the program are modeled on real-world scenarios. We mean it when we say “industry-relevant”!

Let’s get down to the actual benefits of this program –

- End to End Approach – Our programs have no prerequisites; they start from the basics and prepare you for the industry by building your portfolio of industry-relevant projects.

- A monthly 30-minute call with a mentor to track your progress and provide guidance

- Shareable digital certificate enabled by Blockchain for each course and for the complete program on completion of the program

- 18-month access with complete support. More time lets you do more projects and pace your learning.

- The benefit of bulk pricing – get up to 30% off compared to buying the courses individually

- No-questions-asked money-back guarantee (7 days)

End Notes

It is a wonderful time to be in the AI and ML industry. Becoming a full-stack, industry-ready AI and ML professional is difficult, but the BlackBelt Accelerate program helps you reach your goal with its expert-curated content and one-on-one mentorship calls.

Wondering if the BlackBelt Accelerate program is for you? Check out the page to know more.

Product Growth Analyst at Analytics Vidhya. I'm always curious to deep dive into data, process it, polish it so as to create value. My interest lies in the field of marketing analytics.
