This article was published as a part of the Data Science Blogathon

Introduction

Model Building in Machine Learning is an important component of the Data Science Project Life Cycle where we will try to train our dataset with different types of Machine Learning models. However, without proper model validation, the confidence that the trained model will generalize well i.e, perform well on the unseen data can never be high. There are a lot of things we can achieve using model validation. Some of these are:

👉 Helps in ensuring that the model is generalized i.e, performs well on the unseen data.

👉 Helps in finding the best model, the model parameters, and the accuracy metrics.

Table of Contents

👉 Why are Evaluation metrics not sufficient to find the best ML model for a given problem statement?

👉 Introduction to Cross-Validation

👉 Different types of Cross-Validation Techniques

Non Exhaustive Methods

- Hold out validation approach

- K-fold Cross-validation

- Stratified K-Fold Cross-validation

Exhaustive Methods

- Leave one out Cross-validation (LOOCV)

👉 Implementation of Cross-Validation Techniques in Python

Why are Evaluation metrics not sufficient to find the best model for a given problem statement?

To solve a particular business problem statement, we have a lot of models in hand to solve it but we need to ensure that whatever model we select at the end of this phase should be performing well on the unseen data, which is our major objective. So, we cannot just go with the evaluation metrics to select the best performing model on our problem statement.

We go to the next level from the metric which can help us to decide on our final Machine Learning model which we can deploy to production.

Let’s first understand what Validation exactly means?

Validation is the process of verifying whether the mathematical results calculating relationships between variables are acceptable as descriptions of the data. Just after model building, an error estimation for the model is made on the training dataset, which is called the Evaluation of residuals.

In this step i.e, Evaluate Residuals Step, we find the training Error by finding the difference between predicted output and the original output. But this metric cannot be true because it works well only with the training data. There is more likely that the model is Underfitting or Overfitting the data.

So, the problem with these evaluation techniques is that they do not indicate how well the model will perform to an unseen dataset. Now, to address this issue, a new technique comes into the picture, which is known as Cross-Validation.

What is Cross-Validation?

It is a statistical method that is used to find the performance of machine learning models. It is used to protect our model against overfitting in a predictive model, particularly in those cases where the amount of data may be limited.

In cross-validation, we partitioned our dataset into a fixed number of folds (or partitions), run the analysis on each fold, and then averaged the overall error estimate.

The main question around which our discussion is going on this article is,

When dealing with a real-life Machine learning task, our first task is to properly identify the problem so that we can choose the most suitable algorithm that can give you the best performance. But how do we compare the models?

Let’s you have trained the model with the dataset available and now it’s time to check the performance of our model. For this, one approach may be that you are going to test our model on the training dataset, but this may not be a good practice.

Why testing our model on a training dataset is not a good practice?

If we do so, we assume that our training data covers all the possible scenarios of the real world and this will surely never be the case in real-life problems. Our main objective is that the model should be able to work well on the real-world data, although the training dataset is also real-world, it represents a small set of all the possible data points out there.

So to know the real score or performance of the model, it should be tested on the data that it has never seen before and this set of data is usually known as a testing set.

Types of Cross-Validation Techniques

Let us discuss the different types of cross-validation techniques in a detailed manner:

Cross-validation techniques can be divided into two broad categories:

- Non-Exhaustive methods

- Exhaustive methods

Non-exhaustive Methods

These methods do not include all the ways of splitting the original dataset.

👉 Hold out Validation approach:

To use this approach, firstly we have to separate our initial dataset into two parts – training data and testing data. Then, we train the model on the training data and then see the performance on the unseen data.

To implement this approach, we first shuffled the data randomly before splitting. This approach can cause instability since we trained the model on a different combination of data points, and the model can give different results every time after training. Also, we can never ensure that our train set is representative of the whole dataset.

Fig. Picture Showing Hold out Technique

Image Source: link

Pros

- This approach is Fully independent of the data.

- This approach only needs to be run once so has lower computational costs.

Cons

- The Performance leads to a higher variance if we have a dataset of smaller size.

👉 k-Fold Cross-Validation:

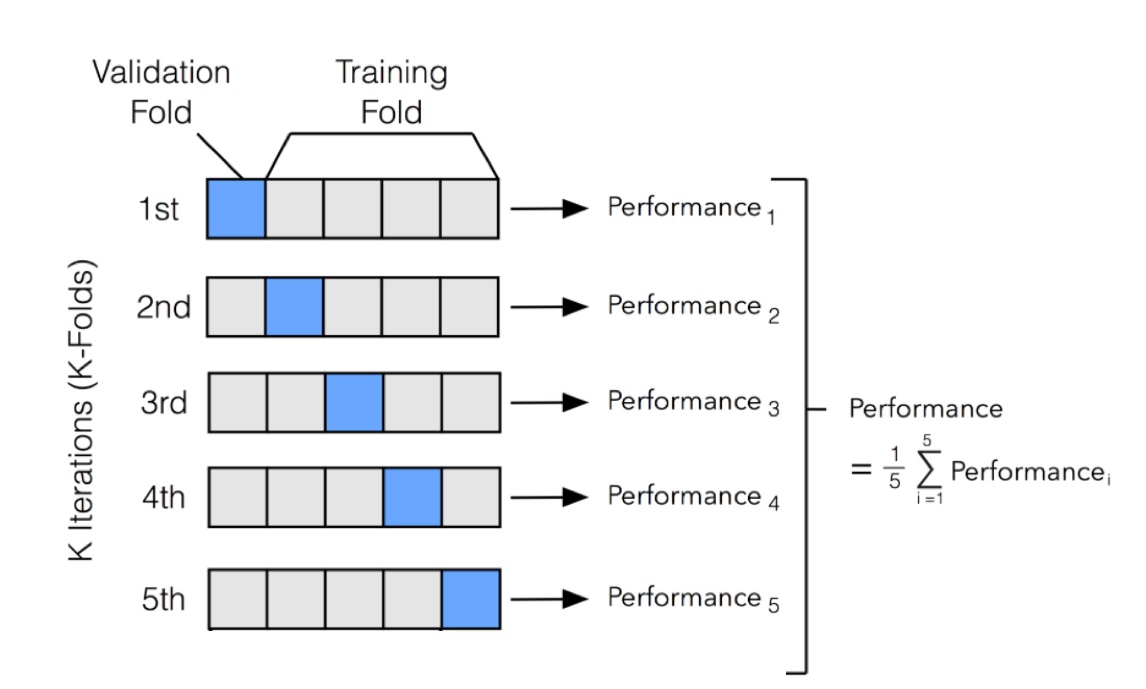

It tries to address the problem of the holdout method. It ensures that the score of our model does not depend on the way we select our train and test subsets. In this approach, we divide the data set into k number of subsets and the holdout method is repeated k number of times.

Here is the algorithm you should have to follow:

- Randomly divide your entire dataset into k numbers of folds.

- For each fold in your dataset, build your model on k – 1 folds of the dataset and test the model to find the performance for the kth fold.

- Repeat this until each of the k-folds has become the test set exactly once.

- Finally, the average of your k accuracies is called the cross-validation accuracy and it will serve as our performance metric for the model.

Fig. Picture showing 5 fold approach

Image Source: link

Pros

- Models may not be affected much if there are some outliers present in the dataset.

- It helps us to overcome the problem of variability.

- This method results in a less biased model compared to other methods since every observation has the chance of appearing in both train and test sets.

- The Best approach if we have a limited amount of input data.

Cons

- Imbalanced datasets will impact our model.

- Requires Computation k times as much as to evaluate since training algorithm has to be rerun from start K times to complete the k folds.

👉 Stratified K-Fold Cross Validation:

It tries to address the problem of the K-Fold approach.

Since In our previous approach, we first randomly shuffled the data and then divided it into folds, in some cases there is a chance that we may get highly imbalanced folds which may cause our model to be biassed towards a particular class.

For example, let us somehow get a fold that contains the majority of the samples from the positive class and only a few samples from the negative class. This will certainly affect our training and to avoid this we make the stratified folds using the stratification process.

Let’s first understand the term “Stratification” before going to deep dive into this technique,

Stratification It is the process of rearranging the data such that each of the folds is a good representative of the whole dataset wrt different classes.

Fig. Picture showing Stratified K-Fold approach

Image Source: link

Pros

- It can improve different models using hyper-parameter tuning.

- Helps us compare models.

- It helps in reducing both Bias and Variance.

Exhaustive Methods

These methods test our model on all the possible ways to divide our original dataset into a training and a validation set.

👉 Leave one out Cross-Validation (LOOCV):

In this approach, we take out only one data point from the available dataset for each iteration to test the model and train the model on the rest of the data. This process iterates for each of the data points.

Fig. Picture showing LOOCV approach

Image Source: link

Pros

- Since we make use of all data points, hence the bias will be less.

Cons

- Higher execution time since we repeat the cross-validation process n times (where n is the number of observations in the dataset).

- This leads to higher variation in testing model effectiveness because we test our model against only one data point. So, our results get highly influenced by the data point. For Example, If the data point is an outlier, it can lead to a higher variation.

This ends our discussion on different Techniques of Cross-Validation!

Implementation of Cross-Validation Techniques in Python

Step-1: Import Necessary Dependencies

import numpy as np import pandas as pd

Step-2: Read and Load the Dataset

df=pd.read_csv('cancer_dataset.csv')

df.head()

Step-3: Separate Dependent and Independent columns

X=df.iloc[:,2:] y=df.iloc[:,1]

Step-4: Drop the independent columns having missing values

X=X.dropna(axis=1)

Step-5: Split train and test dataset from initial Dataset

from sklearn.model_selection import train_test_split X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=4)

Step-6: Use the Hold-out Cross-Validation approach

from sklearn.tree import DecisionTreeClassifier model = DecisionTreeClassifier() model.fit(X_train, y_train) result = model.score(X_test, y_test) print(result)

Output:

0.9064327485380117

Step-7: Use the k-fold Cross-Validation approach

from sklearn.model_selection import KFold model=DecisionTreeClassifier() kfold_validation=KFold(10) from sklearn.model_selection import cross_val_score results=cross_val_score(model,X,y,cv=kfold_validation) print(results) print(np.mean(results))

Output:

[0.89473684, 0.9122807, 0.89473684, 0.9122807, 0.92982456, 0.98245614,0.9122807, 0.94736842, 0.92982456, 0.85714286]

0.9172932330827068

Step-8: Use stratified K-fold Cross-validation approach

from sklearn.model_selection import StratifiedKFold skfold=StratifiedKFold(n_splits=5) model=DecisionTreeClassifier() scores=cross_val_score(model,X,y,cv=skfold) print(scores) print(np.mean(scores))

Output:

[0.9122807, 0.92982456, 0.92982456, 0.93859649, 0.89380531]0.9208663251047973

Step-9: Use Leave one out Cross-Validation (LOOCV) approach

from sklearn.model_selection import LeaveOneOut model=DecisionTreeClassifier() leave_validation=LeaveOneOut() results=cross_val_score(model,X,y,cv=leave_validation) print(np.mean(results))

Output:

0.9380116959064327

This ends our implementation of different Techniques of Cross-Validation!

End Notes

Thanks for reading!

If you liked this and want to know more, go visit my other articles on Data Science and Machine Learning by clicking on the Link

Please feel free to contact me on Linkedin, Email.

Something not mentioned or want to share your thoughts? Feel free to comment below And I’ll get back to you.

About the author

Chirag Goyal

Currently, I am pursuing my Bachelor of Technology (B.Tech) in Computer Science and Engineering from the Indian Institute of Technology Jodhpur(IITJ). I am very enthusiastic about Machine learning, Deep Learning, and Artificial Intelligence.

The media shown in this article are not owned by Analytics Vidhya and is used at the Author’s discretion.