This article was published as a part of the Data Science Blogathon

1. Understanding the Transformers Package

2. Text Classification

3. Question Answering

4. Text Summarization

5. Language Translation

Introduction

This article introduces a package that lets you perform common NLP tasks in just a couple of lines of code. Even as a beginner, you can run these tasks and fine-tune the models for your own use case.

Running NLP inference from scratch is tedious because it requires several steps:

1. Process the raw text data using a tokenizer

2. Convert the data into the model’s input format

3. Design the model using pre-trained layers or custom layers

4. Training and validation

5. Inference
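The steps above can be sketched with a toy example in plain Python. This is only an illustration under loud assumptions: the vocabulary, the hand-set weights, and the helper names (`tokenize`, `encode`, `predict`) are all made up for this sketch and are not part of any library, and training (step 4) is skipped entirely.

```python
import math

# Toy vocabulary (assumption). Id 0 is padding, id 1 stands for unknown words.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "this": 2, "restaurant": 3,
         "is": 4, "awesome": 5, "awful": 6}

# Hand-set weights per token id, standing in for a trained model (assumption).
WEIGHTS = {5: 2.0, 6: -2.0}


def tokenize(text):
    """Step 1: split the raw text into lowercase tokens."""
    return text.lower().split()


def encode(tokens, max_len=6):
    """Step 2: map tokens to ids, then truncate or pad to a fixed length."""
    ids = [VOCAB.get(tok, VOCAB["[UNK]"]) for tok in tokens][:max_len]
    return ids + [VOCAB["[PAD]"]] * (max_len - len(ids))


def predict(ids):
    """Steps 3 and 5: score the ids with the stand-in model and return a label."""
    score = sum(WEIGHTS.get(i, 0.0) for i in ids)
    prob_positive = 1.0 / (1.0 + math.exp(-score))  # sigmoid
    label = "POSITIVE" if prob_positive >= 0.5 else "NEGATIVE"
    return {"label": label, "score": max(prob_positive, 1.0 - prob_positive)}


for sentence in ["This restaurant is awesome", "This restaurant is awful"]:
    ids = encode(tokenize(sentence))
    print(sentence, ids, predict(ids))
```

Even this toy version needs three helpers and a fixed vocabulary, which gives a feel for how much bookkeeping a real model requires.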

The Transformers package removes this hassle: it provides pre-trained models and a simple interface for applying them to NLP tasks.

The Transformers Package

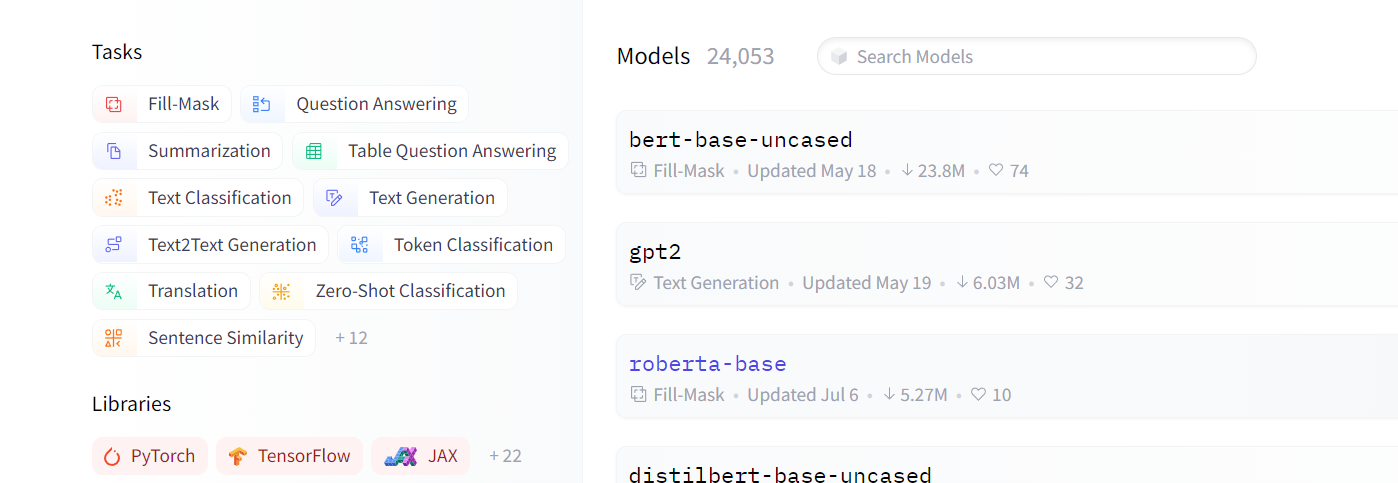

The Transformers package is provided by Hugging Face (huggingface.co). It addresses many of the challenges we face in NLP by providing pre-trained models, tokenizers, configs, various APIs, and ready-made pipelines for inference. It lets us use pre-trained language models together with their data-processing tools. Most of the models are available directly in the library for both PyTorch and TensorFlow.

The Transformers package requires TensorFlow or PyTorch to work. With it, you can pre-process text data and train models in just a few lines of code.

Image Source: huggingface.co

The Transformers library comes with more than 30 pre-trained models and supports up to 100 languages, along with 8 major architectures for natural language understanding (NLU) and natural language generation (NLG):

- BERT (from Google);

- GPT-2 (from OpenAI);

- GPT (from OpenAI);

- Transformer-XL (from Google/CMU);

- XLNet (from Google/CMU);

- RoBERTa (from Facebook);

- XLM (from Facebook);

- DistilBERT (from HuggingFace).

Getting Started with Pipeline

The easiest way to use a pre-trained model for prediction on a given NLP task is pipeline() from the Transformers package. Pipelines abstract away most of the complex code for data pre-processing and inference, so creating one for an NLP task takes very little code.

Getting Pipelines from Package:

First, install the Transformers package and import the pipeline function from it:

!pip install transformers

from transformers import pipeline

In the Transformers package, pipeline() is a wrapper around the task-specific pipelines for Named Entity Recognition, Masked Language Modeling, Sentiment Analysis, Feature Extraction, Question Answering, etc.

How to load a Pipeline for a specific Task:

The pipeline also works with custom models: if you have a trained model, you can pass it to the pipeline. Different models need different tokenizers, so pass the tokenizer that matches the model.

pipe_task = pipeline("task_name", model="model_name", tokenizer="tokenizer_name")

If you don’t specify the model and tokenizer, the pipeline loads the default model and default tokenizer for the task.
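As a rough sketch of the two loading modes, the helper below builds the keyword arguments for pipeline() and only passes a model and tokenizer when they are given. The helper names `pipeline_kwargs` and `load_pipeline` are hypothetical, and the checkpoint name in the commented usage is a Hub model that does not appear in this article, so treat both as assumptions.

```python
def pipeline_kwargs(model_name=None, tokenizer_name=None):
    """Build keyword arguments for pipeline(); an empty dict means 'use the task defaults'."""
    kwargs = {}
    if model_name is not None:
        kwargs["model"] = model_name
        # A model and its tokenizer must match, so fall back to the model's own name.
        kwargs["tokenizer"] = tokenizer_name or model_name
    elif tokenizer_name is not None:
        # A tokenizer without a model cannot be matched to anything.
        raise ValueError("pass a model together with the tokenizer")
    return kwargs


def load_pipeline(task, model_name=None, tokenizer_name=None):
    """Create the pipeline; transformers is imported lazily, only when this is called."""
    from transformers import pipeline

    return pipeline(task, **pipeline_kwargs(model_name, tokenizer_name))


print(pipeline_kwargs())                 # task defaults
print(pipeline_kwargs("my-model-name"))  # explicit model, matching tokenizer

# Usage (downloads models, so it is left commented out):
# default_pipe = load_pipeline("sentiment-analysis")
# custom_pipe = load_pipeline(
#     "sentiment-analysis", "distilbert-base-uncased-finetuned-sst-2-english"
# )
```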

Sentiment Analysis

This pipeline classifies a text as positive or negative and returns a confidence score.

The default model is trained only for binary classification, so it won’t work for multi-class classification.

Calling the pipeline

pipe = pipeline("text-classification")

pipe = pipeline("sentiment-analysis")  # alias of "text-classification", so either call works

pipe(["This restaurant is awesome", "This restaurant is aweful"])

output:

[{'label': 'POSITIVE', 'score': 0.9998743534088135},

 {'label': 'NEGATIVE', 'score': 0.9996669292449951}]
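The pipeline returns a list with one dictionary per input text, so it is easy to post-process. Here is a small sketch that pairs each text with its label and marks low-confidence predictions as "UNCERTAIN". The helper name `label_with_threshold` and the 0.9 threshold are assumptions chosen for illustration; the sample results are copied from the output above.

```python
# Inputs and results copied from the run above.
texts = ["This restaurant is awesome", "This restaurant is aweful"]
results = [
    {"label": "POSITIVE", "score": 0.9998743534088135},
    {"label": "NEGATIVE", "score": 0.9996669292449951},
]


def label_with_threshold(texts, results, threshold=0.9):
    """Pair each text with its label, or "UNCERTAIN" if the score is below the threshold."""
    labelled = []
    for text, res in zip(texts, results):
        label = res["label"] if res["score"] >= threshold else "UNCERTAIN"
        labelled.append((text, label, round(res["score"], 4)))
    return labelled


for row in label_with_threshold(texts, results):
    print(row)
```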

Loading Pipeline with a given Model

If you have trained a model and want to use it in the pipeline, you only need to pass its name (or local path) as the model argument.

pipe = pipeline("text-classification", model="roberta-large-mnli")

Text Summarization

Summarize a given text by generating new sentences.

This is abstractive (generative) text summarization based on deep learning. At the time of writing, the summarization pipeline supports only T5 and BART models.

context = r"""

The Mars Orbiter Mission (MOM), also called Mangalyaan ("Mars-craft", from Mangala, "Mars" and yāna, "craft, vehicle")

is a space probe orbiting Mars since 24 September 2014? It was launched on 5 November 2013 by the Indian Space Research Organisation (ISRO). It is India's first interplanetary mission

and it made India the fourth country to achieve Mars orbit, after Roscosmos, NASA, and the European Space Company. and it made India the first country to achieve this in the first attempt.

The Mars Orbiter took off from the First Launch Pad at Satish Dhawan Space Centre (Sriharikota Range SHAR), Andhra Pradesh, using a Polar Satellite Launch Vehicle (PSLV) rocket C25 at 09:08 UTC on 5 November 2013.

The launch window was approximately 20 days long and started on 28 October 2013.

The MOM probe spent about 36 days in Earth orbit, where it made a series of seven apogee-raising orbital maneuvers before trans-Mars injection

on 30 November 2013 (UTC).[23] After a 298-day long journey to Mars orbit, it was put into Mars orbit on 24 September 2014."""

Calling the pipeline:

summarizer = pipeline("summarization", model="t5-base", tokenizer="t5-base", framework="tf")

summary = summarizer(context, max_length=130, min_length=60)

print(summary)

output:

[{'summary_text': "the mars Orbiter Mission (MOM) is a space probe orbiting Mars since 24 September 2014. it is India's first interplanetary mission and the fourth space Company to achieve Mars orbit. the probe spent about 36 days in Earth orbit before trans-Mars injection on 30 November 2013 ."}]

Here max_length=130 and min_length=60 bound the length of the summary, and the model is t5-base.
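The two length limits are counted in tokens, so fixed values that suit one text may be too long or too short for another. One possible approach, sketched below, is to size them relative to the input. The helper `summary_length_bounds`, its ratios, and the use of a whitespace word count as a stand-in for the real token count are all assumptions made for this sketch; a real tokenizer will usually produce more tokens than words.

```python
def summary_length_bounds(text, min_ratio=0.2, max_ratio=0.5, floor=10):
    """Suggest (min_length, max_length) from a rough whitespace word count."""
    approx_tokens = len(text.split())  # crude proxy for the tokenizer's count
    max_length = max(floor, int(approx_tokens * max_ratio))
    # Keep min_length at least 1 and strictly below max_length.
    min_length = max(1, min(int(approx_tokens * min_ratio), max_length - 1))
    return min_length, max_length


sample = "word " * 100
min_len, max_len = summary_length_bounds(sample)
print(min_len, max_len)

# The pair could then be passed on, for example:
# summarizer(context, max_length=max_len, min_length=min_len)
```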

Question Answering

In Question Answering, a context is provided, and the model tries to find the answer hidden in the passage.

context = r"""

The Mars Orbiter Mission (MOM), also called Mangalyaan ("Mars-craft", from Mangala, "Mars" and yāna, "craft, vehicle")

is a space probe orbiting Mars since 24 September 2014? It was launched on 5 November 2013 by the Indian Space Research Organisation (ISRO). It is India's first interplanetary mission

and it made India the fourth country to achieve Mars orbit, after Roscosmos, NASA, and the European Space Company. and it made India the first country to achieve this in the first attempt.

The Mars Orbiter took off from the First Launch Pad at Satish Dhawan Space Centre (Sriharikota Range SHAR), Andhra Pradesh, using a Polar Satellite Launch Vehicle (PSLV) rocket C25 at 09:08 UTC on 5 November 2013.

The launch window was approximately 20 days long and started on 28 October 2013.

The MOM probe spent about 36 days in Earth orbit, where it made a series of seven apogee-raising orbital maneuvers before trans-Mars injection

on 30 November 2013 (UTC).[23] After a 298-day long journey to the Mars Orbit, it was put into Mars orbit on 24 September 2014."""

Calling pipeline:

nlp = pipeline("question-answering")

result = nlp(question="When did Mars Mission Launched?", context=context)

print(result['answer'])

output:

5 November 2013

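No model is specified here, so the pipeline's default question-answering model is used for the inference (in the library's defaults this is a DistilBERT checkpoint fine-tuned on SQuAD, not T5).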
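Besides 'answer', the result dictionary of the question-answering pipeline also carries 'score', 'start', and 'end', where 'start' and 'end' are character offsets into the context. The sketch below shows how those offsets can be used to display the answer with some surrounding text. To keep it runnable without downloading a model, the result is built by hand: its score is a placeholder, and the helper name `answer_with_window` is an assumption.

```python
context = (
    "It was launched on 5 November 2013 by the Indian Space Research "
    "Organisation (ISRO)."
)

# Hand-built stand-in for a pipeline result (assumption); the score is a placeholder.
answer = "5 November 2013"
start = context.find(answer)
result = {"score": 0.0, "start": start, "end": start + len(answer), "answer": answer}


def answer_with_window(context, result, width=20):
    """Return the answer span plus up to `width` characters of context on each side."""
    left = max(0, result["start"] - width)
    right = min(len(context), result["end"] + width)
    return context[left:right]


# The offsets point back at the answer inside the context.
print(context[result["start"]:result["end"]])
print(answer_with_window(context, result, width=8))
```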

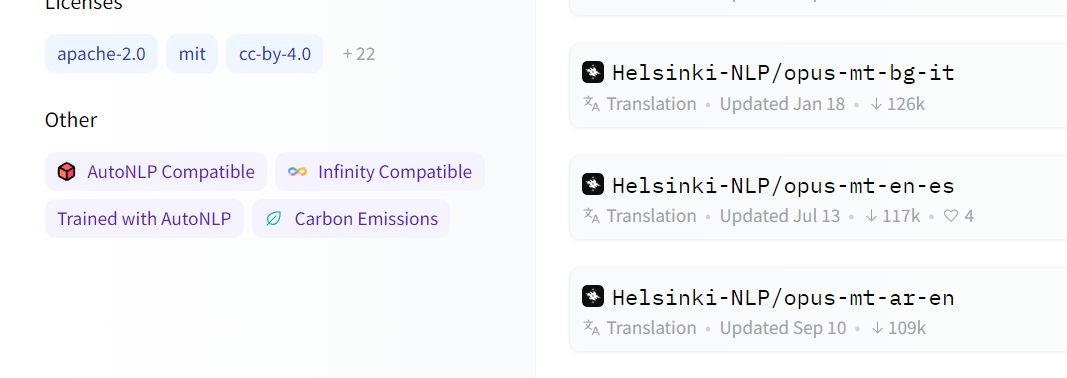

Language Translation

The Transformers pipeline can also translate text from one language into another.

Available languages for language translator model:

Auto Classes:

The Transformers package provides Auto classes such as AutoTokenizer, AutoModel, AutoConfig, and AutoModelForSeq2SeqLM.

These classes load the matching model, tokenizer, or config when given a checkpoint name.

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, pipeline

tokenizer = AutoTokenizer.from_pretrained("Helsinki-NLP/opus-mt-en-ru")

model = AutoModelForSeq2SeqLM.from_pretrained("Helsinki-NLP/opus-mt-en-ru")

"Helsinki-NLP/opus-mt-en-ru" is a model for English-to-Russian translation.

Loading into the pipeline

translator = pipeline("translation", model=model, tokenizer=tokenizer)

translated = translator("this is me and my name.you know me very well")[0].get("translation_text")

print(translated)

output:

Это я и мое имя, вы меня очень хорошо знаете.

Saving and Loading Model

The first time we load a pipeline, it downloads the model and tokenizer from the internet, which takes time and bandwidth. To avoid depending on that download, we can save the model and tokenizer to a directory of our choice and load them from there in the future.

First load the model and tokenizer :

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("Helsinki-NLP/opus-mt-en-hi")

model = AutoModelForSeq2SeqLM.from_pretrained("Helsinki-NLP/opus-mt-en-hi")

After loading, save both the model and the tokenizer. Each has its own save_pretrained() method, and both can write to the same directory:

model.save_pretrained("./model/clf")

tokenizer.save_pretrained("./model/clf")

Loading the saved model:

model = AutoModelForSeq2SeqLM.from_pretrained("./model/clf")

tokenizer = AutoTokenizer.from_pretrained("./model/clf")
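Saving and loading can be combined into one helper that uses the local copy when it exists and falls back to the download otherwise. This is only a sketch: the helper names `resolve_checkpoint` and `load_translation_model` are hypothetical, and the check relies on the assumption that a saved model directory contains a config.json file. Only the path-resolution step runs here, since it needs no download.

```python
import os
import tempfile


def resolve_checkpoint(local_dir, hub_name):
    """Return local_dir if it already holds a saved model, otherwise the Hub name."""
    if os.path.isfile(os.path.join(local_dir, "config.json")):
        return local_dir
    return hub_name


def load_translation_model(local_dir, hub_name):
    """Load from the local copy when present; otherwise download once and save."""
    # Imported lazily so the helpers can be defined without the package installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    source = resolve_checkpoint(local_dir, hub_name)
    tokenizer = AutoTokenizer.from_pretrained(source)
    model = AutoModelForSeq2SeqLM.from_pretrained(source)
    if source == hub_name:
        # First run: keep a local copy of both pieces for next time.
        tokenizer.save_pretrained(local_dir)
        model.save_pretrained(local_dir)
    return model, tokenizer


# Demonstrate only the path resolution, using a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    print(resolve_checkpoint(tmp, "Helsinki-NLP/opus-mt-en-hi"))  # nothing saved yet
    with open(os.path.join(tmp, "config.json"), "w") as f:
        f.write("{}")
    print(resolve_checkpoint(tmp, "Helsinki-NLP/opus-mt-en-hi") == tmp)
```

A call such as load_translation_model("./model/clf", "Helsinki-NLP/opus-mt-en-hi") would then download the files on the first run and read them from disk afterwards.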

Conclusion

In this article, we have seen several NLP tasks solved with pre-trained models. I highly encourage you to explore the Transformers package, its pre-trained NLP models, and the features they provide. Other tasks, such as NER and filling in masked words, can also be solved using pipelines.

Tasks like question answering, language translation, and text summarization are not easy to implement from scratch, so it makes sense to use the power of pre-trained models for them.

In the next article, we will implement a language translation model from scratch.

Read more about transformer packages on our blog.

Source Code here

If you have anything to ask me, reach out to me on LinkedIn.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
