This article was published as a part of the Data Science Blogathon.

Introduction

In this article, we build a vehicle counter system with OpenCV in Python, using Euclidean distance tracking and contours. The previous article covered object detection in OpenCV using Haar cascades; if you haven’t read it yet, it can be found on this page.

Object Tracking

Object tracking means keeping track of the identity of a moving object as it moves from frame to frame. There are various techniques for object tracking in OpenCV. Object tracking can be performed in two cases:

- Tracking a single object

- Tracking multiple objects

Here we use multiple-object tracking, since several vehicles appear in the same frame.

Popular Tracking Algorithms

Deep SORT: One of the most widely used and effective object tracking algorithms. It needs an object detector to supply bounding boxes and is commonly paired with YOLO. It uses Kalman filters for tracking.

Centroid tracking algorithm: The centroid tracking algorithm is easy to understand and effective in simple scenes. It is a multi-step process.

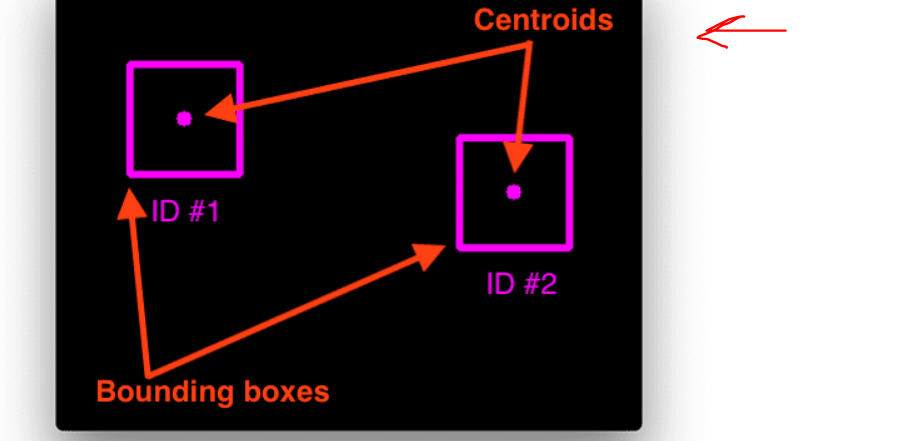

Step 1. Take the bounding box coordinates of each detected object and compute its centroid from them.

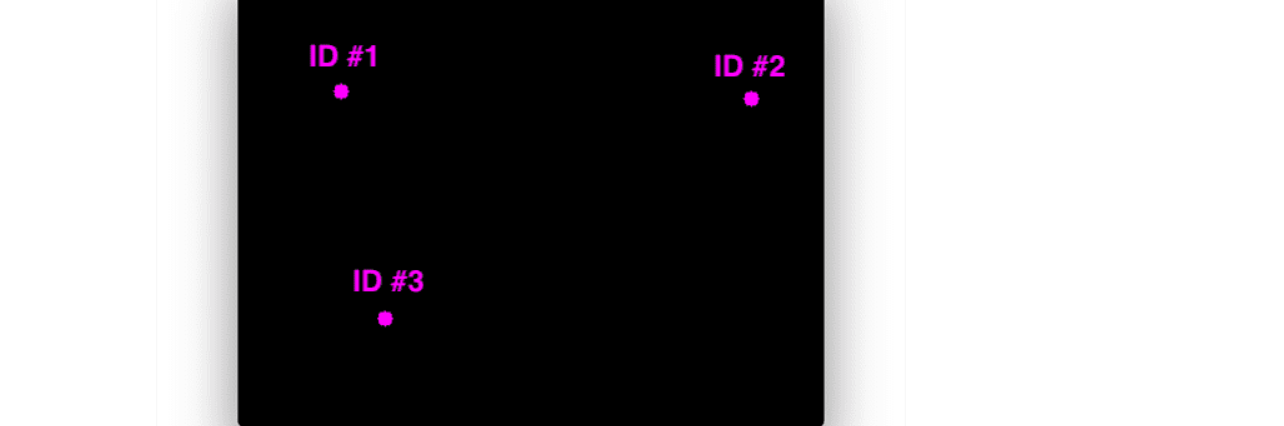

Step 2. For every subsequent frame, compute the centroids from the new bounding box coordinates, assign an ID to each bounding box, and compute the Euclidean distance between every pair of existing and new centroids.

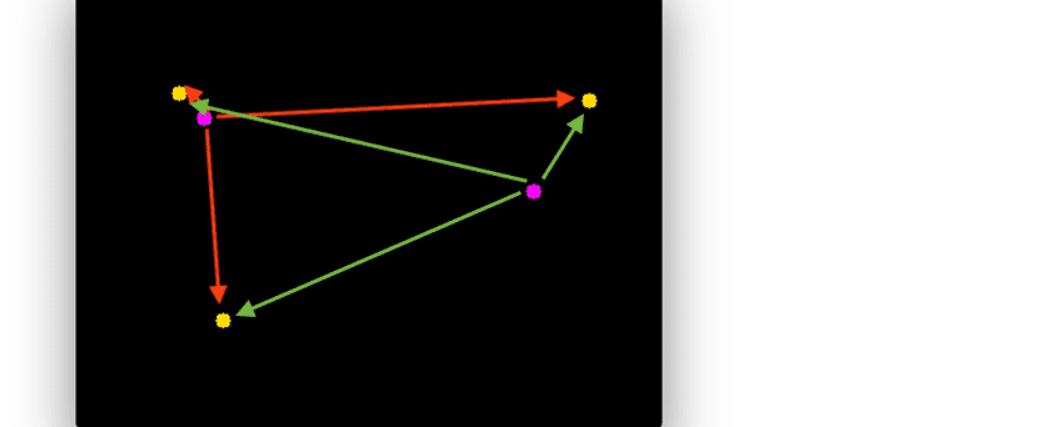

Step 3. We assume that an object moves only a short distance between consecutive frames, so the Euclidean distance between its centroids in the two frames will be smaller than its distance to the centroid of any other object.

Step 4. Assign the same ID to the pair of centroids with the minimum distance between consecutive frames.
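The four steps can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the helper names `centroid` and `match_nearest` are hypothetical, every new box is matched greedily to its nearest known centroid, and there is no handling for new objects or for two boxes claiming the same ID.

```python
import math


def centroid(box):
    """Return the integer center (cx, cy) of an (x, y, w, h) bounding box."""
    x, y, w, h = box
    return (x + w // 2, y + h // 2)


def match_nearest(prev_centroids, new_boxes):
    """Map each new box index to the ID of the closest previous centroid.

    prev_centroids: dict of object ID -> (cx, cy) from the previous frame.
    new_boxes: list of (x, y, w, h) boxes detected in the current frame.
    Assumes prev_centroids is not empty.
    """
    matches = {}
    for index, box in enumerate(new_boxes):
        cx, cy = centroid(box)
        # Steps 2 and 3: distance to every known centroid, keep the smallest
        nearest_id = min(
            prev_centroids,
            key=lambda oid: math.hypot(cx - prev_centroids[oid][0],
                                       cy - prev_centroids[oid][1]),
        )
        # Step 4: the new box inherits the ID of the nearest centroid
        matches[index] = nearest_id
    return matches


previous = {0: (50, 50), 1: (200, 120)}
current_boxes = [(190, 100, 40, 40), (35, 40, 40, 30)]
print(match_nearest(previous, current_boxes))  # {0: 1, 1: 0}
```

The first box has its center at (210, 120), which is 10 pixels from object 1, so it keeps ID 1. The second box has its center at (55, 55), which is closest to object 0.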

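To detect moving objects, we can subtract frame(t) from frame(t+1) and look at the absolute difference: pixels that did not change come out close to zero, while pixels covered by a moving object do not.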
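A toy illustration of frame differencing, using nested lists as stand-ins for tiny grayscale frames. The function names are hypothetical, and the default threshold of 23 is simply the value used later in this article.

```python
def abs_diff(frame_a, frame_b):
    """Per-pixel absolute difference of two equally sized grayscale frames."""
    return [
        [abs(a - b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def moving_pixels(frame_a, frame_b, threshold=23):
    """Return (row, col) positions whose intensity changed by more than threshold."""
    diff = abs_diff(frame_a, frame_b)
    return [
        (r, c)
        for r, row in enumerate(diff)
        for c, value in enumerate(row)
        if value > threshold
    ]


# A bright "object" (value 200) moves one pixel to the right between frames
frame_t = [
    [10, 10, 10, 10],
    [10, 200, 10, 10],
    [10, 10, 10, 10],
]
frame_t1 = [
    [10, 12, 10, 10],   # the small change (10 -> 12) is sensor-like noise
    [10, 10, 200, 10],
    [10, 10, 10, 10],
]
print(moving_pixels(frame_t, frame_t1))  # [(1, 1), (1, 2)]
```

Both the position the object left and the position it arrived at show up in the difference, while the small noise at (0, 1) falls below the threshold.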

Application of Object Tracking

Object tracking keeps advancing as computing power grows. Some major use cases of object tracking are:

- Traffic Tracking and avoiding collisions.

- Crowd Tracking

- Pet tracking while no one is at home

- Missile tracking

- Air Canvas Pen

Implementing Euclidean Distance Tracker

The source file of all codes used in this article and the test video can be downloaded using this link.

All the steps discussed above can be implemented with a few simple calculations.

We have built a class named EuclideanDistTracker for object tracking.

import math

class EuclideanDistTracker:

def __init__(self):

# Storing the positions of center of the objects

self.center_points = {}

# Count of ID of boundng boxes

# each time new object will be captured the id will be increassed by 1

self.id_count = 0

def update(self, objects_rect):

objects_bbs_ids = []

# Calculating the center of objects

for rect in objects_rect:

x, y, w, h = rect

center_x = (x + x + w) // 2

center_y = (y + y + h) // 2

# Find if object is already detected or not

same_object_detected = False

for id, pt in self.center_points.items():

dist = math.hypot(center_x - pt[0], center_y - pt[1])

if dist < 25:

self.center_points[id] = (center_x, center_y)

print(self.center_points)

objects_bbs_ids.append([x, y, w, h, id])

same_object_detected = True

break

# Assign the ID to the detected object

if same_object_detected is False:

self.center_points[self.id_count] = (center_x, center_y)

objects_bbs_ids.append([x, y, w, h, self.id_count])

self.id_count += 1

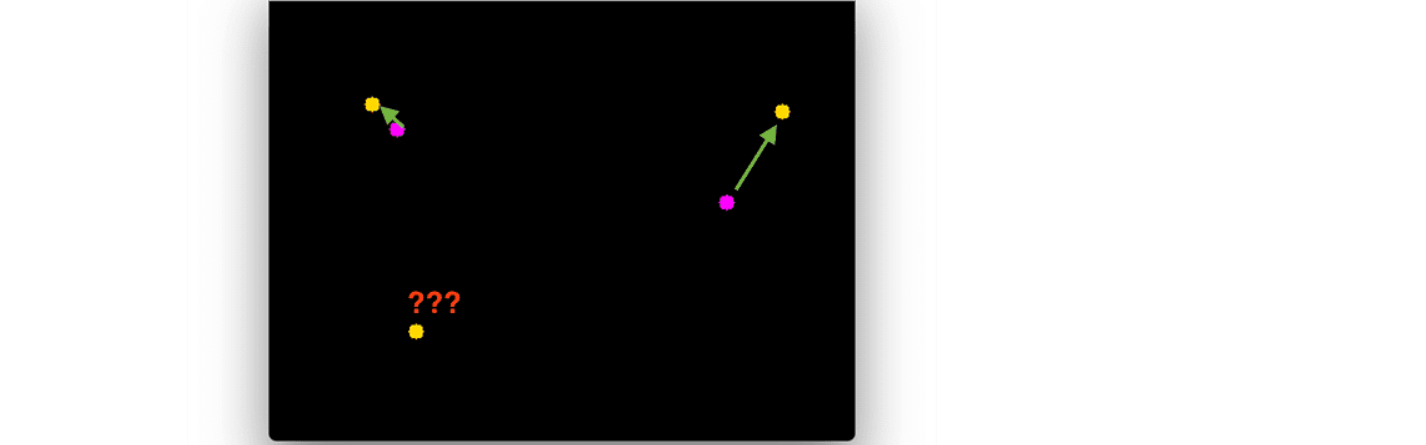

# Cleaning the dictionary ids that are not used anymore

new_center_points = {}

for obj_bb_id in objects_bbs_ids:

var,var,var,var, object_id = obj_bb_id

center = self.center_points[object_id]

new_center_points[object_id] = center

# Updating the dictionary with IDs that is not used

self.center_points = new_center_points.copy()

return objects_bbs_ids

You can either create a file named tracker.py and paste the tracker’s code or directly download the tracker file using this link.

- update: The update method expects an array of all bounding box coordinates.

- The tracker returns an array of entries of the form [x, y, w, h, object_id]. Here x, y, w, h are the coordinates of the bounding box and object_id is the ID associated with that bounding box.
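To make the update contract concrete, here is a small usage sketch. So that it runs on its own, it uses `MiniTracker`, a hypothetical condensed stand-in that follows the same logic as EuclideanDistTracker (including the 25-pixel threshold). With tracker.py in place you would import the real class instead.

```python
import math


class MiniTracker:
    """Condensed stand-in for EuclideanDistTracker with the same update() contract."""

    def __init__(self, max_distance=25):
        self.center_points = {}
        self.id_count = 0
        self.max_distance = max_distance

    def update(self, rects):
        results = []
        for x, y, w, h in rects:
            cx, cy = x + w // 2, y + h // 2
            for obj_id, (px, py) in self.center_points.items():
                if math.hypot(cx - px, cy - py) < self.max_distance:
                    break  # close enough to a known center: reuse its ID
            else:
                obj_id = self.id_count  # no match found: allocate a new ID
                self.id_count += 1
            self.center_points[obj_id] = (cx, cy)
            results.append([x, y, w, h, obj_id])
        # Keep only the IDs that were seen in this frame
        self.center_points = {r[4]: self.center_points[r[4]] for r in results}
        return results


tracker = MiniTracker()
frame1 = tracker.update([(100, 100, 40, 40), (300, 100, 40, 40)])
frame2 = tracker.update([(110, 100, 40, 40), (300, 112, 40, 40)])
frame3 = tracker.update([(160, 100, 40, 40)])

print(frame1)  # [[100, 100, 40, 40, 0], [300, 100, 40, 40, 1]]
print(frame2)  # [[110, 100, 40, 40, 0], [300, 112, 40, 40, 1]]
print(frame3)  # [[160, 100, 40, 40, 2]]
```

In the second frame both boxes moved less than 25 pixels, so they keep IDs 0 and 1. In the third frame the box jumped 50 pixels, which exceeds the threshold, so it is treated as a new object and gets ID 2. Fast vehicles or low frame rates can therefore cause ID switches with this approach.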

With the tracker file ready, we implement our object detector and then connect the tracker to it.

Loading the Libraries and Videos

Import our EuclideanDistTracker class from the tracker.py file we had already created.

    import cv2
    import numpy as np
    from tracker import EuclideanDistTracker

    tracker = EuclideanDistTracker()
    cap = cv2.VideoCapture('highway.mp4')

    # Read the first two frames so that consecutive frames can be compared
    ret, frame1 = cap.read()
    ret, frame2 = cap.read()

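cap.read() returns a boolean success flag and the frame itself. The flag is False when no frame could be read, for example at the end of the video. We read two frames up front so that we can compare consecutive frames.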
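Because cap.read() reports failure through its boolean flag, it helps to stop cleanly when the video ends. The sketch below shows one way to iterate over consecutive frame pairs. `frame_pairs` and `FakeCapture` are hypothetical names, and `FakeCapture` only imitates the read() behaviour of cv2.VideoCapture so the example runs without a video file.

```python
def frame_pairs(cap):
    """Yield (previous, current) frame pairs until cap.read() reports failure."""
    ok, previous = cap.read()
    while ok:
        ok, current = cap.read()
        if not ok:
            break  # no more frames: stop instead of passing None downstream
        yield previous, current
        previous = current


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture, returning canned frames."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None


pairs = list(frame_pairs(FakeCapture(["f0", "f1", "f2"])))
print(pairs)  # [('f0', 'f1'), ('f1', 'f2')]
```

With a real video, passing a cv2.VideoCapture object to `frame_pairs` should behave the same way, since it only relies on read() returning a (flag, frame) tuple.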

Getting Video Frames in OpenCV

The idea is to get the absolute difference between two subsequent frames in order to detect the moving objects.

    while cap.isOpened():
        # Stop when the video runs out of frames
        if not ret:
            break

        # Absolute difference between two consecutive frames
        diff = cv2.absdiff(frame1, frame2)
        gray = cv2.cvtColor(diff, cv2.COLOR_BGR2GRAY)
        blur = cv2.GaussianBlur(gray, (5, 5), 0)

        # A region of interest could be applied here to count cars in a single lane

        _, threshold = cv2.threshold(blur, 23, 255, cv2.THRESH_BINARY)
        dilated = cv2.dilate(threshold, (1, 1), iterations=1)
        # OpenCV 4.x returns (contours, hierarchy)
        contours, _ = cv2.findContours(dilated, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

        detections = []
        for contour in contours:
            # Skip small contours, which are mostly noise
            if cv2.contourArea(contour) < 300:
                continue
            (x, y, w, h) = cv2.boundingRect(contour)
            detections.append([x, y, w, h])

        # Track the detections and draw each bounding box with its ID
        boxes_ids = tracker.update(detections)
        for box_id in boxes_ids:
            x, y, w, h, id = box_id
            cv2.putText(frame1, str(id), (x, y - 15), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 0, 255), 2)
            cv2.rectangle(frame1, (x, y), (x + w, y + h), (0, 255, 0), 2)

        cv2.imshow('frame', frame1)

        # Advance to the next pair of frames
        frame1 = frame2
        ret, frame2 = cap.read()

        key = cv2.waitKey(30)
        if key == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

- cv2.absdiff: This method is used to get the absolute difference between two frames.

- After getting the frame difference, we convert it to grayscale and then apply thresholding and contour detection.

- The contours found are the contours of all moving objects.

- To filter out noise, we keep only the contours with an area of at least 300.

- boxes_ids: It contains entries of the form [x, y, w, h, id].

- cv2.putText: It is used to write the ID on the frame.

- cv2.rectangle(): It is used to draw the bounding boxes.

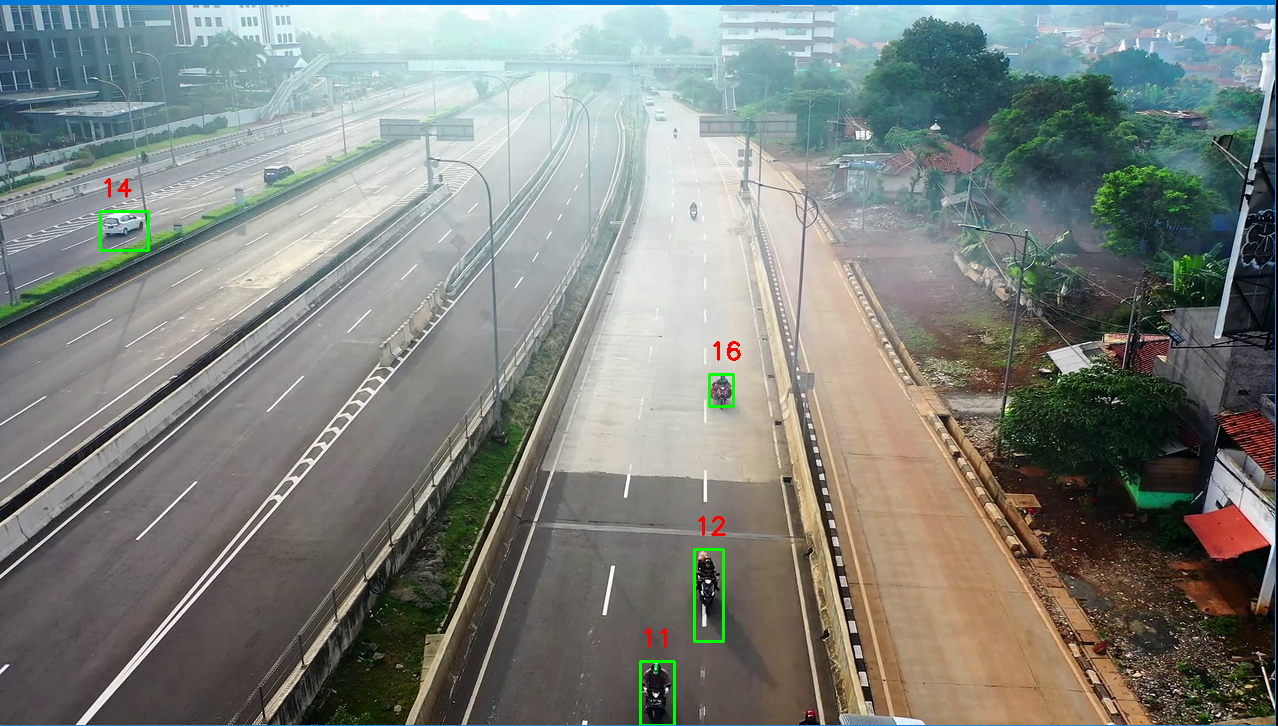

Output: Vehicle Counter System
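The loop above labels each vehicle with an ID but does not compute a total. One possible way to turn the tracked boxes into a count is to count the distinct IDs whose center crosses a horizontal line. This is a sketch under assumptions, not part of the original code: the function name `count_crossings` and the `line_y` parameter are hypothetical, and vehicles are assumed to move downward in the image.

```python
def count_crossings(frames_boxes, line_y):
    """Count distinct IDs whose box center moves from above line_y to on or below it.

    frames_boxes: one list per frame of [x, y, w, h, id] entries,
    in the format returned by the tracker's update method.
    """
    last_center_y = {}
    counted = set()
    for boxes in frames_boxes:
        for x, y, w, h, obj_id in boxes:
            center_y = y + h // 2
            previous_y = last_center_y.get(obj_id)
            # Count the ID once, on the frame where it crosses the line
            if previous_y is not None and previous_y < line_y <= center_y:
                counted.add(obj_id)
            last_center_y[obj_id] = center_y
    return len(counted)


# Three frames of tracker output: ID 0 crosses y = 100, ID 1 never reaches it
tracked = [
    [[10, 80, 20, 20, 0], [60, 20, 20, 20, 1]],
    [[10, 95, 20, 20, 0], [60, 40, 20, 20, 1]],
    [[10, 110, 20, 20, 0], [60, 60, 20, 20, 1]],
]
print(count_crossings(tracked, line_y=100))  # 1
```

Counting line crossings rather than reading off the highest ID is a deliberate choice here: the tracker hands out a fresh ID whenever a vehicle jumps more than 25 pixels between frames, so the raw ID count tends to overestimate the number of vehicles.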

Conclusion

In this article, we discussed:

- The idea of object tracking

- A use case of object tracking: the vehicle counter

- Some applications of object tracking

- The steps involved in the centroid tracking algorithm, which we implemented for vehicle counting

Deep learning-based object tracking algorithms such as Deep SORT, paired with a detector like YOLO, can be expected to perform more accurately than this simple approach in a case like ours.

The media shown in this article is not owned by Analytics Vidhya and are used at the Author’s discretion.