If you’re familiar with Python, you’ve likely encountered Pandas, a robust data manipulation and analysis library. Created by Wes McKinney in 2008, Pandas has become the go-to tool for data scientists, analysts, and researchers worldwide. With efficient data structures and powerful manipulation tools, Pandas has revolutionized data work. After more than three years of development, Pandas 2.0 was released on March 16, 2023, and made available to the general public on April 3. This blog explores some of the new features of Pandas 2.0. Read on to learn how it can take your work to the next level.

What is Pandas Library?

Pandas is an open-source Python library that provides data manipulation and analysis tools. It provides a set of efficient and flexible data structures, including Series (1-dimensional) and DataFrame (2-dimensional), that allow users to manipulate and analyze data in various ways.
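As a minimal sketch of the two structures described above (the weather values and labels are made up for illustration):

```python
import pandas as pd

# A Series is a one-dimensional labelled array.
temperatures = pd.Series(
    [21.5, 23.0, 19.8], index=["mon", "tue", "wed"], name="temp_c"
)

# A DataFrame is a two-dimensional table whose columns are Series.
weather = pd.DataFrame(
    {"temp_c": [21.5, 23.0, 19.8], "rain_mm": [0.0, 1.2, 5.4]},
    index=["mon", "tue", "wed"],
)

print(temperatures["tue"])            # label-based lookup in a Series
print(weather.loc["wed", "rain_mm"])  # row label, then column label
print(weather.shape)                  # (number of rows, number of columns)
```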

What is Pandas 2.0?

Pandas is a vital Python library that has been in use for over a decade. The new release, Pandas 2.0, is an essential upgrade to the library’s capabilities. This section discusses some significant improvements in Pandas 2.0.

Enhanced Performance and Memory Efficiency

The new 2.0 release improves performance, fixes bugs, and makes Pandas more efficient. Much of this comes from the option to use Apache Arrow (through the PyArrow package) as a backend. Apache Arrow is an open-source, cross-language development platform for in-memory data. It defines a columnar in-memory data representation, a format that is popular for data processing and storage in many big data systems.

Opting into this backend can make Pandas faster and more interoperable, and it supports more data types, especially strings.

Moreover, data interchange has been a big problem for Pandas, especially when moving tabular data. But now, with Arrow’s zero-copy data access, mapping tabular data to memory will become very convenient, even if it takes a terabyte of memory space. This makes the entire system much more memory efficient.
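Here is a small sketch of an Arrow-backed string column. It assumes the optional pyarrow package is installed and falls back to the default nullable string type if it is not. The sample names are invented.

```python
import pandas as pd

raw_names = ["ada", "grace", None]

try:
    # Arrow-backed strings; requires the optional pyarrow package.
    names = pd.Series(raw_names, dtype="string[pyarrow]")
except ImportError:
    # Fallback so the sketch still runs without pyarrow.
    names = pd.Series(raw_names, dtype="string")

upper = names.str.upper()  # string methods work the same on either backend
print(names.dtype)
print(upper)
```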

Improved Support for Time-Series Data

Until now, time-series or time-stamped data was represented in nanosecond resolution in pandas. Because of this, dates before 21 September 1677 and after 11 April 2262 could not be represented, causing several pain points for researchers who analyze time series data spanning long historical or geological time scales.

However, Pandas 2.0 supports other resolutions as well, including milliseconds, microseconds, and seconds. With second resolution, the representable range widens to roughly +/-2.9e¹¹ years!

The new update also allows you to pass an arbitrary date to the Timestamp constructor without resulting in an error (unlike the previous versions), irrespective of the specified time unit. For example,

- Input [0]: pd.Timestamp("1567-11-10", unit="s")

- Output [0]: Timestamp('1567-11-10 00:00:00')

Here, specifying the unit “s” means that the timestamp is stored with second resolution; finer precision, such as microseconds, is not kept.

While this is an excellent starting point for timestamp resolution updates, it is a challenging work in progress, and you could still encounter some bugs.
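The sketch below shows the wider date range in practice. It assumes pandas 2.0 or later and uses the Timestamp.as_unit() method to pick a resolution explicitly rather than relying on how a given version infers one.

```python
import pandas as pd

# This date lies outside the old nanosecond-only range;
# pandas 1.x raised OutOfBoundsDatetime for it.
old_date = pd.Timestamp("1567-11-10")

# as_unit() converts to an explicit resolution: "s", "ms", "us", or "ns".
in_seconds = old_date.as_unit("s")

print(old_date)
print(in_seconds.unit)
```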

Introduction to Nullable Data Types

Pandas 2.0 significantly improves nullable data types. A nullable data type can hold a dedicated missing-value marker (pd.NA) alongside the regular values of that type, so a column of integers can contain missing entries without being converted to float.

The release makes it easier to opt into nullable data types by adding a new keyword, dtype_backend. For most I/O functions, setting this keyword to “numpy_nullable” returns a DataFrame with nullable data types. Columns that would otherwise use the object data type can also be returned with the pandas StringDtype.

Many internal operations can now use nullable semantics (operations/comparisons that yield unknown values) for data types like Int64, boolean, and Float64 rather than casting them to object. This update ensures that the results have appropriate data types.

Even the groupby algorithms (used to group/categorize data) can now use nullable semantics, improving accuracy and performance. Before the 2.0 version, the input was cast to float, often leading to a loss of precision.
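A minimal sketch of the dtype_backend keyword and nullable groupby behavior, assuming pandas 2.0 or later. The CSV text and column names are invented for the example.

```python
import io

import pandas as pd

# One score is missing on purpose.
csv_text = "team,score\na,1\na,\nb,3\n"

# dtype_backend="numpy_nullable" asks read_csv for nullable dtypes.
df = pd.read_csv(io.StringIO(csv_text), dtype_backend="numpy_nullable")
print(df.dtypes)

# The missing score shows up as <NA>, and the column stays integer (Int64).
print(df["score"])

# groupby keeps the nullable integer dtype instead of casting to float.
totals = df.groupby("team")["score"].sum()
print(totals)
```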

Expanded Support for Data Types and File Formats

Pandas 2.0 offers extended support for more methods, containers, and data types. For instance, it provides decorators such as pandas.api.extensions.register_dataframe_accessor(), pandas.api.extensions.register_series_accessor(), and pandas.api.extensions.register_index_accessor() for registering custom accessors that add “namespaces” to pandas objects.

Moreover, libraries can define custom arrays and data types using pandas.api.extensions.ExtensionDtype; pandas handles these properly instead of coercing them to a NumPy array of Python objects. ExtensionDtype works similarly to numpy.dtype, i.e., it simply describes the data type.
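As an illustration of a custom accessor, here is a small sketch. The accessor name "geo", the class GeoAccessor, and the lat/lon columns are hypothetical choices for this example.

```python
import pandas as pd


@pd.api.extensions.register_dataframe_accessor("geo")
class GeoAccessor:
    """Hypothetical accessor that adds a `.geo` namespace to DataFrames."""

    def __init__(self, pandas_obj):
        self._obj = pandas_obj

    @property
    def center(self):
        # Mean latitude and longitude of all rows.
        return (float(self._obj["lat"].mean()), float(self._obj["lon"].mean()))


points = pd.DataFrame({"lat": [0.0, 10.0], "lon": [20.0, 40.0]})
print(points.geo.center)
```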

New Data Manipulation Functions

Pandas 2.0 ships with a broad set of data manipulation functions, many of which predate this release. Data manipulation functions help in preparing raw data according to the requirement. This may include removing discrepancies like “Null”/“NA” values, additional rows/columns, etc. It can also mean reshaping or modifying the data to make it an efficient input for the model. Some of these are:

- melt(frame[, id_vars, value_vars, var_name, …]) – unpivots a DataFrame from wide format to long format.

- pivot(data, *, columns[, index, values]) – returns a reshaped DataFrame organized by the given index and column values.

- pivot_table(data[, values, index, columns, …]) – creates a spreadsheet-style pivot table as a DataFrame.

- crosstab(index, columns[, values, rownames, …]) – computes a simple cross-tabulation of two or more factors.

Head over to the Pandas 2.0 documentation page to see more data manipulation functions.
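The four reshaping functions listed above can be sketched on a tiny, made-up sales table:

```python
import pandas as pd

wide = pd.DataFrame(
    {"city": ["Oslo", "Rome"], "2022": [10, 20], "2023": [11, 22]}
)

# melt: wide -> long, one row per (city, year) pair.
long = pd.melt(wide, id_vars=["city"], var_name="year", value_name="sales")

# pivot: long -> wide again, one column per year.
back = long.pivot(index="city", columns="year", values="sales")

# pivot_table: like pivot, but aggregates duplicates (here: total per city).
totals = pd.pivot_table(long, values="sales", index="city", aggfunc="sum")

# crosstab: counts how often each (city, year) combination occurs.
counts = pd.crosstab(long["city"], long["year"])

print(long)
print(back)
print(totals)
print(counts)
```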

Upgrading to Pandas 2.0

System Requirements and Compatibility

To work with Pandas 2.0, you need Python 3.8 or later (3.8, 3.9, 3.10, or 3.11) installed on your computer. It also requires a few other libraries, such as NumPy, python-dateutil, and pytz, and has optional dependencies such as Matplotlib and SciPy.

Steps to Upgrade from Previous Versions

If you are installing it for the first time, you can use Anaconda/Miniconda.

Download Anaconda from here, and complete the installation process.

You will have all the key libraries installed.

Alternatively, you can install Python with Conda alone and then use it to install packages for data analytics and scientific computing. Find the Conda installer here.

After installation, use the following commands.

- conda create -n name_of_env python (creates an environment)

- conda activate name_of_env (activates the environment)

- conda install pandas (main step to install pandas)

- conda install pandas=required_version (installs a specific version)

You can also use PyPI to install Pandas. Open the Command Prompt window and use the following command:

pip install pandas OR pip3 install pandas

If you have any previous version of Pandas, you can upgrade it to Pandas 2.0 using the pip command with the upgrade flag.

Use pip3 install --upgrade pandas OR python -m pip install --upgrade pandas
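To confirm which version is active after installing or upgrading, a quick check from Python might look like this (a small sketch; the message text is illustrative):

```python
import pandas as pd

print(pd.__version__)

# The major version is the part before the first dot.
major = int(pd.__version__.split(".")[0])
if major < 2:
    print("This environment still has a pre-2.0 pandas installed.")
```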

Common Issues and Solutions

Sometimes, on running the pip install --upgrade pandas command, you may get an error message: “pip is not recognized as an internal or external command, operable program or batch file.”

- You can try using: pip3 install --upgrade pandas on Windows as an administrator.

- You can also use: conda update pandas on the Anaconda Prompt as administrator.

- You can also run !pip install -U pandas in a Jupyter Notebook.

Pandas 2.0 Use Cases

Exploratory Data Analysis

Pandas is one of the most widely used Python libraries for EDA, which involves exploring and summarizing data to study its structure and patterns. Pandas helps prepare real-life raw data, which comes with a lot of “noise,” and makes it suitable for use. Below is a list of some standard Pandas functions used in EDA.

- pd.read_csv(): reads a CSV file and loads data into a dataframe.

- df.head(): returns the first five rows (default) of the dataframe. You can change the number of rows by specifying it in the parentheses.

- df.info() and df.describe(): basic exploratory commands; info() summarizes the rows, columns, and data types, while describe() gives summary statistics.

- df.isnull().sum(): counts the number of missing values in each column.

- df.sample(): generates a random sample (either by row or column).
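The functions above can be tried on a small in-memory CSV. This is a sketch with invented data; in practice pd.read_csv() would usually be given a file path.

```python
import io

import pandas as pd

csv_text = """name,age,city
Ana,34,Lisbon
Ben,,Oslo
Cho,29,
Dev,41,Oslo
"""

df = pd.read_csv(io.StringIO(csv_text))

print(df.head(2))      # first two rows
df.info()              # column names, non-null counts, dtypes
print(df.describe())   # summary statistics for numeric columns

missing = df.isnull().sum()  # missing values per column
print(missing)

print(df.sample(n=2, random_state=0))  # two random rows, reproducible
```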

For example, Netflix, a global movie streaming giant, uses the Pandas library for its recommendation engine. Before giving recommendations, data scientists at Netflix must carry out data pre-processing and exploratory analysis to understand user preferences, and Pandas 2.0 is an excellent upgrade for this process.

Data Cleaning and Wrangling

It takes a lot of time for data scientists to clean datasets and wrangle them into a usable format. By some estimates, more than 70% of their time is spent on cleaning data. Python’s Pandas and NumPy are the most prominent and efficient libraries that enable them to handle messy data, correct inconsistencies in formats, and remove nonsensical outliers.

Some of the steps that might be used in cleaning data are listed below.

Dropping Columns

Use the following commands to drop specific columns.

df.drop(['column1', 'column2', …], axis=1, inplace=True)

df.drop(columns=list_of_columns, inplace=True)

Changing the Index of a DataFrame

A Pandas Index extends the functionality of NumPy arrays by allowing more versatile labeling and slicing. Setting a column with unique values as the index makes label-based lookups straightforward.

Use the following command to set the index:

df = df.set_index('Name_of_column')

Reshaping Data

You can reshape the data, i.e., switch rows into columns, with Pandas. Use the following command:

df.pivot(index='row_name', columns='column_name', values='Values')
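Putting the three cleaning steps together, here is a short sketch on a made-up sales table (all column names are illustrative):

```python
import pandas as pd

df = pd.DataFrame(
    {
        "store": ["north", "north", "south", "south"],
        "month": ["jan", "feb", "jan", "feb"],
        "sales": [100, 120, 90, 95],
        "notes": ["", "", "", ""],
        "internal_id": [1, 2, 3, 4],
    }
)

# Dropping columns: pass the labels as a list.
df.drop(["notes", "internal_id"], axis=1, inplace=True)

# Changing the index: use the store name as the row label.
by_store = df.set_index("store")

# Reshaping: one row per store, one column per month.
wide = df.pivot(index="store", columns="month", values="sales")

print(by_store)
print(wide)
```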

Many real-world companies, like Airbnb, Uber, etc., utilize Pandas for cleaning and manipulating data. For example, Airbnb uses customer and host data to categorize the most demanded amenities and most booked hosts. On the other hand, Uber uses the library to wrangle GPS data, payments, and customer feedback to enhance the overall customer experience.

Time Series Analysis

Time series analysis is a statistical method used to analyze data that is collected over time. The data in this analysis consists of a series of observations made over time and can be used to model trends, seasonal variations, and other patterns. Pandas is often used to work with time series data because it contains various tools for in-depth analysis.

Time series analysis with Pandas may include the following steps.

- Loading time-stamped data using the pd.read_csv() command.

- Converting the date column with the pd.to_datetime() function and setting it as the index, which gives the dataframe a pd.DatetimeIndex.

- Plotting the new data frame using the .plot() method. You can specify the figure size, the x and y-axis, and the style.
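The steps above can be sketched as follows, using a tiny invented dataset. The plotting call is left as a comment because it needs the optional Matplotlib package.

```python
import io

import pandas as pd

csv_text = """date,value
2023-01-01,10
2023-01-02,12
2023-01-03,11
2023-01-04,15
"""

# Step 1: load the time-stamped data.
df = pd.read_csv(io.StringIO(csv_text))

# Step 2: parse the dates and use them as the index (a DatetimeIndex).
df["date"] = pd.to_datetime(df["date"])
df = df.set_index("date")

# With a DatetimeIndex, date strings can be used for slicing.
window = df.loc["2023-01-02":"2023-01-03"]

# A two-day rolling mean smooths out short-term variation.
rolling_mean = df["value"].rolling(window=2).mean()
print(window)
print(rolling_mean)

# Step 3 (requires Matplotlib):
# df["value"].plot(figsize=(8, 3), style="o-")
```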

Tesla, a global leader in autonomous vehicles, uses Pandas to analyze time-series data to improve the functionality of its electric vehicles. The company draws insights from vehicle performance data, charging patterns, and battery health recorded over time.

Machine Learning Applications

As the library helps in data-related operations, it addresses numerous shortcomings data scientists face in working with scattered and raw scientific data. This scientific data can be prepared, reshaped, and stored in a tabular format using Pandas dataframes. As machine learning models need vast data to train on, Pandas primarily helps in the following ways.

- Data loading and preprocessing using functions like read_csv(), dropna(), fillna(), drop_duplicates(), etc.

- Exploratory Data Analysis and Visualization using functions like head(), tail(), sample(), plot(), etc.

- Feature Engineering with functions like replace(), get_dummies(), value_counts(), etc.
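A minimal sketch of these preparation steps on an invented customer table (the columns and the median-fill strategy are illustrative choices, not a recommendation for every dataset):

```python
import pandas as pd

raw = pd.DataFrame(
    {
        "age": [25, None, 40, 40],
        "city": ["Oslo", "Rome", "Rome", "Rome"],
        "bought": [1, 0, 1, 1],
    }
)

# Preprocessing: remove exact duplicate rows, then fill the missing age.
clean = raw.drop_duplicates()
clean = clean.assign(age=clean["age"].fillna(clean["age"].median()))

# A quick look at how often each city occurs.
print(raw["city"].value_counts())

# Feature engineering: one-hot encode the categorical column.
features = pd.get_dummies(clean, columns=["city"])
print(features)
```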

Data Visualization and Reporting

Pandas is extensively used for data visualization and reporting.

- It helps with data summarization with functions like describe(), value_counts(), groupby(), etc. These functions provide a statistical summary of the input data.

- It helps in data filtering based on specific criteria, helping data scientists to identify patterns. You can use loc[], iloc[], filter(), etc., for the same.

- It offers many plotting options by integrating with Matplotlib and Seaborn. You can create a line, bar, scatter plot, pie chart, etc.
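The summarization and filtering tools mentioned above might be used like this (a sketch on made-up order data):

```python
import pandas as pd

orders = pd.DataFrame(
    {
        "region": ["east", "west", "east", "west", "east"],
        "amount": [120, 80, 200, 50, 90],
    }
)

# Summarization: total amount per region.
per_region = orders.groupby("region")["amount"].sum()

# Filtering with loc[]: rows matching a condition, selected columns.
large = orders.loc[orders["amount"] > 100, ["region", "amount"]]

# Filtering with iloc[]: rows by position.
first_two = orders.iloc[:2]

# filter(): keep columns by name.
amount_only = orders.filter(items=["amount"])

print(orders.describe())
print(per_region)
print(large)
```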

Goldman Sachs uses Pandas to visualize data related to financial markets and economic indicators. They track booking volumes, revenue, and other seasonal trends.

Best Practices for Using Pandas 2.0

Efficient Data Manipulation and Cleaning Techniques

Well-prepared data gives more accurate results. This is why it is vital to ensure that you take all data manipulation and cleaning techniques seriously. You may ensure that:

- Unnecessary columns are not loaded in the dataframe. Use the drop() function.

- Data is indexed. Use the .set_index('column') function.

- Clean specific columns. For example, remove extra dates, convert string “nan” values to NumPy’s “NaN,” etc.

- Handle missing data by using functions like fillna(), dropna(), interpolate(), etc.

- Avoid chaining operations together. Instead, break down the operations into multiple steps.
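For instance, converting string “nan” values into real missing values and then filling them could look like the sketch below. The sensor readings are invented, and linear interpolation is just one of several reasonable fill strategies.

```python
import pandas as pd

df = pd.DataFrame({"reading": ["1.0", "nan", "3.0", "4.0"]})

# Step 1: turn the text column into numbers; the string "nan"
# becomes a real NaN (errors="coerce" also maps unparseable text to NaN).
df["reading"] = pd.to_numeric(df["reading"], errors="coerce")
n_missing = int(df["reading"].isna().sum())

# Step 2: fill the gap by linear interpolation between neighbours.
df["reading"] = df["reading"].interpolate()

print(n_missing)
print(df)
```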

Appropriate Use of Data Types and File Formats

To get the most accurate results, use an appropriate data type for each column in your dataframe. For instance, if you are working with numerical data, use integer or float. Use datetime for time-series or time-stamped data and the categorical dtype for data with a limited set of distinct values.
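A short sketch of assigning suitable types, using an invented sign-up table:

```python
import pandas as pd

df = pd.DataFrame(
    {
        "signup": ["2023-01-05", "2023-02-11", "2023-02-11"],
        "plan": ["free", "pro", "free"],
        "visits": [3, 14, 7],
    }
)

# Dates stored as text become proper datetimes.
df["signup"] = pd.to_datetime(df["signup"])

# A column with few distinct values fits the categorical dtype.
df["plan"] = df["plan"].astype("category")

print(df.dtypes)
print(df["signup"].dt.month)  # datetime accessors now work
```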

Additionally, Pandas can read and load data from numerous file formats, including CSV, JSON, HTML, Excel (XLS/XLSX), SQL, Pickle, XML, etc.

Effective Memory Management Strategies

Following some best practices for efficient memory usage will help you manage tabular data, analyze, and process it in a better way. Some of these strategies are:

Make Dataframe Modifications “Inplace”

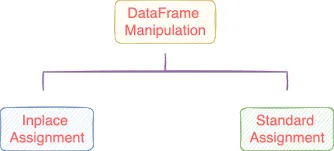

Once you load data in a dataframe, there are two primary ways to modify it: inplace manipulation and standard manipulation. Inplace modifications change the original dataframe rather than returning a new object. On the other hand, standard modification returns a brand-new copy, which can take up more memory space. Therefore, in memory-constrained tasks, inplace modifications may be preferred, although some inplace operations still copy data internally, so it is worth measuring the actual memory use.

When Using a CSV File, Read Relevant Columns

If your dataset contains hundreds of columns and not all of them are relevant to your application, read only the ones that interest you. Intuitively, reading all columns would take up a lot more memory space. Pass an argument called usecols to the read_csv() function to read only the relevant columns. The final syntax should look something like:

required_data = pd.read_csv("name_of_dataset", usecols=["name_of_column"])

Manipulate Data Types

By default, Pandas assigns the data type that takes up the most memory space to columns. For instance, a numerical data type with integer values comes with four possible sub-categories: int8, int16, int32, and int64, taking 8, 16, 32, and 64 bits, respectively. Pandas assigns int64 by default. To save memory space, use the astype() method to convert a column to a smaller type that still fits its values.
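Reading only the relevant columns and downcasting integers can be combined, as in this sketch. The CSV content is invented, and int8 is only safe here because the ages fit in the range -128 to 127.

```python
import io

import pandas as pd

csv_text = "id,age,comment\n1,34,hello\n2,29,world\n3,41,again\n"

# Read only the columns we need; "comment" is never loaded.
ages = pd.read_csv(io.StringIO(csv_text), usecols=["id", "age"])
before = int(ages.memory_usage(index=False).sum())

# Downcast: these ages fit comfortably in 8 bits.
ages["age"] = ages["age"].astype("int8")
after = int(ages.memory_usage(index=False).sum())

print(ages.dtypes)
print(before, after)  # bytes used by the columns before and after
```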

Good Coding Practices and Documentation

Good coding practices make a lot of difference in how your application is evaluated. They show that you clearly understand how to use the methods in a neat and tidy manner. Some good practices include:

- Use meaningful variable names that clearly describe and convey the purpose. For example, while computing the arithmetic mean of values of a column, use the variable name “mean” or “mean_value” rather than some random x or y.

- Use markup languages like Markdown and reStructuredText (plain text formatting syntax), docstrings (documentation strings for methods/classes), etc., for neater documentation.

- Correct broken code immediately. If you delay fixing a bug that you have caught early on, it could worsen problems later.

Comparison with Other Data Analytics Libraries

While Pandas is a very popular Python library for data analytics, there are many other libraries, like NumPy, SciPy, etc., that are often used alongside it or as an alternative. Let’s explore some of these libraries and draw a contrast between them.

Pandas vs NumPy

NumPy is a library for scientific computing, and it is used for mathematical operations and numerical computations. On the other hand, Pandas is a library for data manipulation and analysis. It is designed for working with tabular data, such as data stored in spreadsheets or databases.

| Factor | Pandas | NumPy |
| --- | --- | --- |
| Data Types | Supports a variety of data types, like numerical, categorical, datetime, etc. | Primarily for numerical data, like integers, floats, and complex numbers. |
| Data Structures | Provides dataframes and series for heterogeneous data. | Provides n-dimensional NumPy arrays. |
| Indexing | Provides both positional and label-based indexing. | Provides only positional indexing. |
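The indexing difference in the table can be seen in a few lines (a sketch with arbitrary values and labels):

```python
import numpy as np
import pandas as pd

values = np.array([10, 20, 30])
labelled = pd.Series(values, index=["a", "b", "c"])

by_position_numpy = values[1]          # NumPy: position only
by_label_pandas = labelled["b"]        # Pandas: by label
by_position_pandas = labelled.iloc[1]  # Pandas: by position

print(by_position_numpy, by_label_pandas, by_position_pandas)
```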

Pandas vs SciPy

Both Python libraries are open-source data science tools widely used for data analysis and scientific computing. While they share some similarities, they are designed for different purposes and have distinct features. Pandas covers broader use cases than SciPy, which caters to mathematical optimization and engineering areas.

| Factor | Pandas | SciPy |
| --- | --- | --- |
| Primary utility | Data cleaning, transformation, and filtering. | Optimization, linear algebra, integration, signal processing, and interpolation. |
| Data | Datetime, categorical, numerical, etc. | Numerical and statistical data. |

Pandas vs Apache Spark

As you know, Pandas is an open-source Python library for data manipulation and analysis; it is extensively used for filtering, aggregating, pivoting, and grouping structured data. On the other hand, Apache Spark is a multi-language (Python, Java, Scala) engine for data engineering, data science, and machine learning tasks. It is used for extensive big data processing for batch and streaming data with machine learning, graph processing, and SQL modules.

While Pandas is an excellent tool for modestly sized datasets that can fit into memory, Apache Spark is designed for big data processing. It can handle large datasets distributed across multiple nodes. Depending on the size and complexity of the data, either library or a combination of both can be used to efficiently process and analyze the data.

Conclusion

Pandas has been one of the most popular Python libraries since its inception in 2008. Wes McKinney designed it to be a Python-based data manipulation and analysis staple. With numerous functions that clean, reshape, aggregate, and pivot data, Pandas has gained an active community of users and contributors. After several years of development, the much-anticipated Pandas 2.0 has arrived. The release has several essential yet subtle features focusing on performance enhancements, bug fixes, and code improvements.

If you work with data and are familiar with Python programming, Pandas is the most convenient and powerful tool for data analysis. Even if you seek professional opportunities in the data domain, prior experience with Pandas will help you big time. The library is often used by developers, data scientists, data analysts, software engineers, and researchers. To learn more about how Pandas or Pandas 2.0 is used, head to Analytics Vidhya (AV). AV is an excellent platform for all knowledge and career guidance for machine learning, data science, Python programming, and artificial intelligence.

Frequently Asked Questions

A. Yes. Pandas 2.0 was made generally available on April 3, 2023.

A. Pandas is a very efficient library for small-sized data. Its performance is not a point of concern for data up to 1GB. With larger datasets, the performance may vary.

A. You can use the pip install pandas command. However, the developer team planned to drop supporting updates for Python 2 starting January 1, 2019. All new feature releases (>0.24) will be for Python 3 only.

A. Pandas 2.0 can be faster than older versions because it can use Apache Arrow as a backend.