Introduction

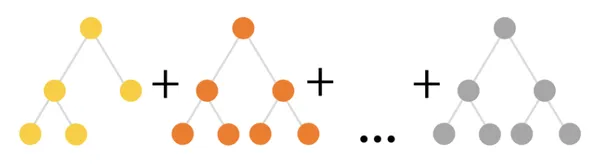

If you want to learn data science and machine learning, the boosting family of algorithms is worth studying. It includes AdaBoost, Gradient Boosting, XGBoost, and many more. One member of this family is CatBoost, short for Categorical Boosting. CatBoost is an open-source machine learning library developed by Yandex, and it can be used from both Python and R. It works particularly well with datasets that contain categorical variables. Like other boosting algorithms, CatBoost builds multiple decision trees in the background, known as an ensemble of trees, and combines them to predict a target: a class label for classification or a numeric value for regression. It is based on gradient boosting.
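The idea of an ensemble of trees built by gradient boosting can be illustrated with a minimal from-scratch sketch. It assumes a regression task with squared loss and uses one-split trees (stumps) on a single feature as the weak learners; the helper names `fit_stump` and `gradient_boost` are hypothetical. This is a generic gradient boosting illustration, not how CatBoost is implemented internally.

```python
import numpy as np

def fit_stump(x, r):
    """Find the single threshold on 1-D feature x that best fits residuals r (squared error)."""
    best = None
    for t in np.unique(x)[:-1]:  # the largest value would leave the right side empty
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda z: np.where(z <= t, left_value, right_value)

def gradient_boost(x, y, n_trees=20, learning_rate=0.3):
    """Gradient boosting with squared loss: each new stump fits the current residuals."""
    base = y.mean()
    pred = np.full_like(y, base, dtype=float)
    stumps = []
    for _ in range(n_trees):
        residuals = y - pred  # negative gradient of 0.5 * (y - pred) ** 2
        stump = fit_stump(x, residuals)
        pred = pred + learning_rate * stump(x)  # shrink each tree's contribution
        stumps.append(stump)
    return lambda z: base + learning_rate * sum(s(z) for s in stumps)

# Toy data: a step-shaped target with a little noise
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 200)
y = np.where(x < 5, 1.0, 3.0) + rng.normal(0, 0.1, size=x.size)

model = gradient_boost(x, y)
mse_base = np.mean((y - y.mean()) ** 2)
mse_model = np.mean((y - model(x)) ** 2)
print(f"baseline MSE: {mse_base:.3f}, boosted MSE: {mse_model:.3f}")
```

Each round fits a stump to the residuals left by the trees before it and adds a scaled-down copy of it to the running prediction; the scaling factor plays the same role as CatBoost's learning_rate parameter.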


Learning Objectives

- Understand the concept of boosted algorithms and their significance in data science and machine learning.

- Explore the CatBoost algorithm as one of the boosted family members, its origin, and its role in handling categorical variables.

- Comprehend the key features of CatBoost, including its handling of categorical variables, gradient boosting, ordered boosting, and regularization techniques.

- Gain insights into the advantages of CatBoost, such as its robust handling of categorical variables and excellent predictive performance.

- Learn to implement CatBoost in Python for regression and classification tasks, exploring model parameters and making predictions on test data.

This article was published as a part of the Data Science Blogathon.


Important Features of CatBoost

- Handling Categorical Variables: CatBoost excels at handling datasets that contain categorical features. It automatically transforms categorical variables into numerical representations using methods such as target statistics, one-hot encoding, or a combination of the two. This removes the need for manual preprocessing of categorical features, which saves time and effort.

- Gradient Boosting: CatBoost uses gradient boosting, an ensemble technique that combines many weak learners (decision trees) into an effective predictive model. Trees are added one at a time, and each new tree is trained to correct the errors of the trees before it by minimizing a differentiable loss function. This iterative approach progressively improves the model's predictive power.

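- Ordered Boosting: CatBoost introduces a technique called “Ordered Boosting” to reduce overfitting caused by target leakage. Standard gradient boosting computes each training example's residual with a model that was trained on that same example, which biases the gradient estimates (the CatBoost authors call this prediction shift). Ordered Boosting instead draws random permutations of the training data and, for each example, relies on a model built only from the examples that precede it in the permutation. The same permutation-based idea is used when encoding categorical features, which is why the technique matters most for datasets with categorical variables.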

- Regularization: CatBoost uses regularization techniques to reduce overfitting and improve generalization. It applies L2 regularization to leaf values (controlled by the l2_leaf_reg parameter), which adds a penalty term to the loss function to discourage excessively large leaf values. In addition, it encodes categorical data with “ordered target statistics”, computing each row's encoding only from rows that precede it in a random permutation, so a row's own label never leaks into its encoding.
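The permutation-based encoding of categorical features mentioned above (CatBoost's ordered target statistics) can be sketched in a few lines. This is a simplified, single-permutation version of the idea, assuming a binary target and a smoothing prior; CatBoost's real implementation differs in its details (for example, it uses several permutations). The function name `ordered_target_stats` and the smoothing weight `a` are illustrative.

```python
import numpy as np

def ordered_target_stats(categories, targets, prior, a=1.0, seed=0):
    """Encode each row using only the rows that precede it in a random permutation.

    encoded_i = (sum of targets of earlier rows with the same category + a * prior)
                / (number of earlier rows with the same category + a)
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(categories))
    sums, counts = {}, {}
    encoded = np.empty(len(categories), dtype=float)
    for i in order:
        c = categories[i]
        encoded[i] = (sums.get(c, 0.0) + a * prior) / (counts.get(c, 0) + a)
        # Update the statistics only after encoding row i, so its own label never leaks in
        sums[c] = sums.get(c, 0.0) + targets[i]
        counts[c] = counts.get(c, 0) + 1
    return encoded

cats = ["red", "blue", "red", "green", "blue", "red"]
ys = np.array([1, 0, 1, 0, 1, 0])
enc = ordered_target_stats(cats, ys, prior=ys.mean())
print(np.round(enc, 3))
```

A category's first occurrence in the permutation always receives just the prior (here 0.5), which is exactly what keeps a row's own label out of its encoding.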

Advantages of CatBoost

- Robust handling of categorical variables: CatBoost's automatic handling makes preprocessing convenient and effective. It removes the need for manual encoding and reduces the risk of information loss associated with conventional encoding procedures.

- Strong Predictive Performance: Thanks to its gradient boosting framework and Ordered Boosting, CatBoost often produces accurate predictions. On many tabular datasets it is competitive with, or better than, other popular algorithms, and it can capture complex relationships in the data.

Use Cases

CatBoost has been a top performer in several Kaggle competitions involving tabular data, and it has been applied successfully to a variety of regression and classification tasks. Here are a few examples:

- Cloudflare uses CatBoost to identify bots targeting its users’ websites.

- Careem, a Dubai-based ride-hailing service, uses CatBoost to predict where its customers will travel next.

Implementation

Since CatBoost is an open-source library, you can install it with pip if it is not already installed:

```python
# install the catboost library (the leading "!" runs a shell command from a Jupyter notebook)
!pip install catboost
```

You can train a CatBoost model in both Python and R, but this implementation uses only Python.

Once the CatBoost package is installed, import it along with the other libraries we need.

```python
# import libraries
import os
import warnings

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
import catboost as cb
from sklearn.model_selection import train_test_split
from sklearn.metrics import confusion_matrix, accuracy_score

# silence library warnings to keep the notebook output short
warnings.filterwarnings('ignore')
```

Here we load the dataset from big_mart_sales.csv and perform some basic data sanity checks.

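```python
# load the dataset (adjust the folder to wherever the CSV file is stored)
os.chdir(r'E:\Dataset')  # raw string so the backslash is not treated as an escape
dt = pd.read_csv('big_mart_sales.csv')

# basic sanity checks
print(dt.head())      # first five rows
print(dt.describe())  # summary statistics for numeric columns
dt.info()             # column types and non-null counts (prints directly)
print(dt.shape)       # (rows, columns)
```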

The dataset contains more than 1,000 records and 35 columns, 8 of which are categorical. We will not convert those columns to a numeric format ourselves, because CatBoost handles that internally; this is one of its main conveniences. You can set as many model parameters as you like, but for demonstration purposes this example sets only “iterations”.
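Counting and locating the categorical columns is a quick check before training. The sketch below uses a small hypothetical DataFrame (the column names and values are made up) and treats every non-numeric column as categorical; CatBoost's `cat_features` argument accepts either column indices or, for a pandas DataFrame, column names.

```python
import pandas as pd

# Hypothetical toy frame standing in for the real dataset
df = pd.DataFrame({
    "age": [34, 41, 29],
    "income": [5200.0, 6100.5, 3900.0],
    "city": ["Pune", "Delhi", "Pune"],
    "plan": ["basic", "pro", "basic"],
})

# Every non-numeric column is treated as categorical
cat_cols = df.select_dtypes(exclude="number").columns.tolist()
# The same columns expressed as positional indices
cat_idx = [int(df.columns.get_loc(c)) for c in cat_cols]

print(cat_cols)  # ['city', 'plan']
print(cat_idx)   # [2, 3]
print(len(cat_cols), "categorical columns out of", df.shape[1])
```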

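```python
# split features and target
X = dt.drop('Attrition', axis=1)
y = dt['Attrition']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=14)
print(X_train.shape)
print(X_test.shape)

# names of the non-numeric (categorical) columns; CatBoost encodes these itself
# (np.float no longer exists in recent NumPy, and "!= float" would also mark integer columns as categorical)
cat_var = X_train.select_dtypes(exclude='number').columns.tolist()

# only the number of boosting iterations is set; all other parameters keep their defaults
model = cb.CatBoostClassifier(iterations=10)
# plot=True draws an interactive training chart in Jupyter notebooks
model.fit(X_train, y_train, cat_features=cat_var, plot=True)
```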

CatBoost has many model parameters. Below are some of the most important ones you can set when building a CatBoost model.

Parameters

- iterations: The number of boosting iterations, that is, the number of trees to build. Higher values can improve performance but lengthen training and may eventually overfit. It is an integer in the range [1, ∞).

- learning_rate: The step size that scales each new tree's contribution. A lower value makes the model converge more slowly but can improve generalization. It is a float in the range (0, 1].

- depth: The depth of the individual decision trees in the ensemble. Deeper trees can capture more complex interactions but are more likely to overfit. It is an integer; on CPU, CatBoost supports depths of up to 16.

- loss_function: The loss function optimized during training. Different problem types use different loss functions, such as “Logloss” for binary classification, “MultiClass” for multiclass classification, and “RMSE” for regression. It is a string value.

- l2_leaf_reg: The coefficient of the L2 regularization applied to leaf values. Higher values penalize large leaf values more strongly, which helps reduce overfitting. It is a float in the range [0, ∞).

- border_count: The number of splits for numerical features. Although higher numbers offer a more accurate split, they may also cause overfitting. 128 is the suggested value for larger datasets. It is an integer value ranging from 1 to 255 [1, 255].

- random_strength: The amount of randomness used when scoring candidate splits. Larger values add more randomness, which can help prevent overfitting. It is a float in the range [0, ∞).

- bagging_temperature: Controls the intensity of Bayesian bootstrap sampling of the training objects (it applies when bootstrap_type is “Bayesian”). A value of 0 gives every object the same weight, so no randomness is added, while higher values make the sampling more aggressive. It is a float in the range [0, ∞).

Making predictions on the trained model

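```python
# predict on the test set
y_pred = model.predict(X_test)

# scikit-learn metrics take (y_true, y_pred); the order matters for the confusion matrix
print(accuracy_score(y_test, y_pred))
print(confusion_matrix(y_test, y_pred))
```

By default, predict() assigns the most probable class. To use a different decision threshold, call predict_proba() to get class probabilities and apply your own cut-off. Here we achieved an accuracy score of more than 85%, which is a good result considering that we did not convert any categorical variables into numbers ourselves. This shows how well CatBoost handles categorical data out of the box.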
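Applying a custom threshold to predicted probabilities can be sketched without CatBoost itself. The helper `predict_with_threshold` is hypothetical, and the probabilities are made-up values; in the workflow above, `proba` would come from `model.predict_proba(X_test)`, whose columns follow the order of the model's classes (check `model.classes_` to see which column is the positive class).

```python
import numpy as np

def predict_with_threshold(proba, threshold=0.5):
    """Turn an (n_samples, 2) probability array, as returned by predict_proba()
    for a binary classifier, into 0/1 labels using a custom cut-off on column 1."""
    return (proba[:, 1] >= threshold).astype(int)

# Hypothetical probabilities for four rows: columns are P(class 0), P(class 1)
proba = np.array([[0.9, 0.1], [0.6, 0.4], [0.35, 0.65], [0.75, 0.25]])
print(predict_with_threshold(proba))       # [0 0 1 0]
print(predict_with_threshold(proba, 0.3))  # [0 1 1 0]
```

Lowering the threshold flags more rows as positive, trading precision for recall, which can be useful when the positive class is rare.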

Conclusion

CatBoost is a well-known and widely used machine learning library, and it has attracted a lot of interest because it can handle categorical features by itself. In this article, you learned:

- How to implement CatBoost in practice.

- The important features of the CatBoost algorithm.

- Use cases where CatBoost has performed well.

- The main model parameters to set when training a CatBoost model.

Frequently Asked Questions

A. CatBoost is a supervised machine learning algorithm. It can be used for both regression and classification problems.

A. CatBoost is an open-source gradient-boosting library, so it is built on the boosting technique. It is particularly good at handling categorical data.

A. Pool is CatBoost's internal data format. If you pass a NumPy array or a pandas DataFrame to CatBoost, it silently converts the data to a Pool first. If you need to apply many models to the same dataset, creating the Pool once and reusing it can improve performance substantially (up to around 10x), because the conversion step is skipped each time.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.