Introduction

In this article, we will create a chatbot for your Google Docs with OpenAI and LangChain. Why do this in the first place? Copying and pasting your Google Docs content into OpenAI quickly gets tedious, and OpenAI models have a token limit that caps how much text you can send in a single request. So if you want to do this at scale or programmatically, you need a library to help you out, and that is where LangChain comes into the picture. By connecting LangChain with Google Drive and OpenAI, you can summarize your documents and ask questions about them, which can have a real business impact. These documents could be your product documents, your research documents, or the internal knowledge base your company uses.

Learning Objectives

- Learn how to fetch the content of your Google Docs using LangChain.

- Learn how to integrate your Google Docs content with an OpenAI LLM.

- Learn how to summarize and ask questions about your documents' content.

- Learn how to create a chatbot that answers questions based on your documents.

This article was published as a part of the Data Science Blogathon.


Load Your Documents

Before we get started, we need to set up our documents in Google Drive. The key piece here is GoogleDriveLoader, a document loader that LangChain provides. You initialize this class with a list of document IDs and the path to your credentials file, then call load() to fetch the documents.

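```python
import os

# In newer LangChain releases this loader lives in langchain_community.document_loaders
from langchain.document_loaders import GoogleDriveLoader

# Replace the placeholders with your document IDs and the path to your OAuth credentials file
loader = GoogleDriveLoader(document_ids=["YOUR_DOCUMENT_ID"],
                           credentials_path="PATH/TO/credentials.json")

docs = loader.load()
```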

You can find a document's ID in its link: it is the part between the forward slashes after /d/.

For example, if your document link is https://docs.google.com/document/d/1zqC3_bYM8Jw4NgF, then your document ID is “1zqC3_bYM8Jw4NgF”.

Pass a list of these document IDs to the document_ids parameter. Conveniently, you can also pass the ID of a Google Drive folder that contains your documents, using the folder_id parameter instead. If your folder link is https://drive.google.com/drive/u/0/folders/OuKkeghlPiGgWZdM, then the folder ID is “OuKkeghlPiGgWZdM”.
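If you have many links, copying IDs by hand gets error-prone. Below is a minimal sketch of a hypothetical helper, extract_drive_id, that pulls the ID out of a document or folder URL with regular expressions. It assumes the two URL shapes described above (/d/<ID> for documents and /folders/<ID> for folders); it is not part of LangChain.

```python
import re
from typing import Optional

# Hypothetical helper: the two URL shapes assumed here are
#   https://docs.google.com/document/d/<ID>/...      (documents)
#   https://drive.google.com/drive/u/0/folders/<ID>  (folders)
_ID_PATTERNS = (
    re.compile(r"/d/([A-Za-z0-9_-]+)"),        # document links
    re.compile(r"/folders/([A-Za-z0-9_-]+)"),  # Drive folder links
)


def extract_drive_id(url: str) -> Optional[str]:
    """Return the document or folder ID embedded in a Google URL, or None."""
    for pattern in _ID_PATTERNS:
        match = pattern.search(url)
        if match:
            return match.group(1)
    return None


print(extract_drive_id("https://docs.google.com/document/d/1zqC3_bYM8Jw4NgF/edit"))
# → 1zqC3_bYM8Jw4NgF
print(extract_drive_id("https://drive.google.com/drive/u/0/folders/OuKkeghlPiGgWZdM"))
# → OuKkeghlPiGgWZdM
```

The returned string can go into the document_ids list, or, for a folder, into folder_id.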

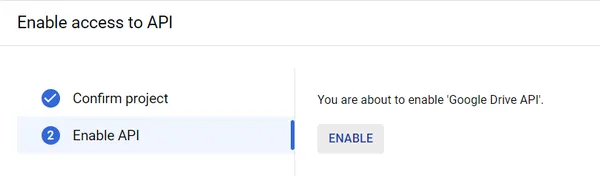

Authorize Google Drive Credentials

Step 1: Enable the Google Drive API using this link: https://console.cloud.google.com/flows/enableapi?apiid=drive.googleapis.com. Make sure you are logged in to the same Google account whose Drive stores your documents.

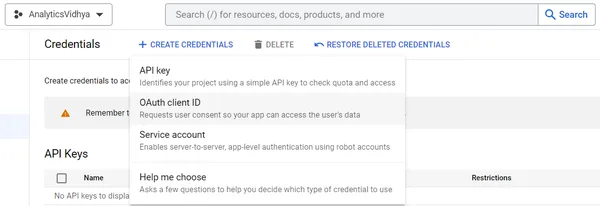

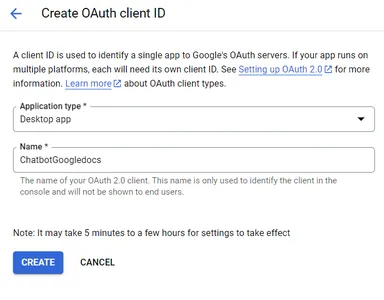

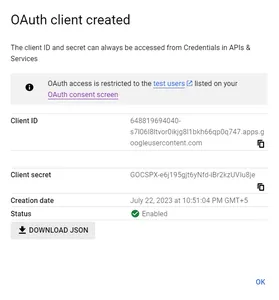

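Step 2: In the Google Cloud console, open the Credentials page (APIs & Services > Credentials), click “Create credentials”, and select “OAuth client ID”. Choose “Desktop app” as the application type. If prompted, configure the OAuth consent screen first.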

Step 3: After creating the OAuth client, download the client secrets file by clicking “DOWNLOAD JSON”. If anything is unclear, follow Google's own guide to creating credentials.

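Step 4: Install or upgrade the Google API Python client libraries with the following pip command:

```bash
pip install --upgrade google-api-python-client google-auth-httplib2 google-auth-oauthlib
```

Then pass the path of the downloaded JSON file to GoogleDriveLoader through its credentials_path parameter. The first time you run the loader, a browser window asks you to authorize access, and the resulting token is saved so later runs can skip this step.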
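A common mistake is downloading a "Web application" client instead of a "Desktop app" client. The sketch below is a hypothetical sanity check, check_client_secrets, that opens the downloaded JSON before you hand it to GoogleDriveLoader. It relies on the assumption that a Desktop app client file has a top-level "installed" key (Web application clients use "web" instead).

```python
import json
from pathlib import Path


def check_client_secrets(path: str) -> str:
    """Sanity-check an OAuth client file downloaded from the Google Cloud console.

    Assumption: a "Desktop app" client file has a top-level "installed" key,
    while a "Web application" client file uses "web" instead.
    Returns the client_id if the file looks like a desktop client.
    """
    data = json.loads(Path(path).read_text())
    if "installed" not in data:
        found = ", ".join(data) or "nothing"
        raise ValueError(f"{path} is not a Desktop app client file (top-level keys: {found})")
    client = data["installed"]
    for key in ("client_id", "client_secret"):
        if key not in client:
            raise ValueError(f"{path} is missing '{key}'")
    return client["client_id"]
```

For example, check_client_secrets("PATH/TO/credentials.json") raises a ValueError with a readable message if the wrong client type was downloaded.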

Summarizing Your Documents

Make sure you have an OpenAI API key available. If you don't have one yet, follow these steps:

1. Go to https://openai.com/ and create an account.

2. Log in to your account and select ‘API’ on your dashboard.

3. Click your profile icon, then select ‘View API Keys’.

4. Select ‘Create new secret key’, then copy the key and save it somewhere safe; it is shown only once.
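The code in the next sections reads the key with os.environ['OPENAI_API_KEY'], which fails with a bare KeyError if the variable is not set. As a minimal sketch, a hypothetical helper like get_openai_api_key below keeps the key out of your source code and gives a clearer error message. The export command in the message assumes a macOS or Linux shell.

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the OpenAI key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Assumes a bash/zsh shell; on Windows the command to set a variable differs
        raise RuntimeError(
            f"{env_var} is not set. In a shell, run: export {env_var}='sk-...'"
        )
    return key
```

It can then be used as OpenAI(temperature=0, openai_api_key=get_openai_api_key()).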

Next, we load the OpenAI LLM and use it to summarize the loaded docs. In the code below, LangChain's load_summarize_chain() function builds a summarization chain, stored in the variable chain, that takes input documents and produces a concise summary using the map_reduce approach. The code reads your API key from the OPENAI_API_KEY environment variable, so set that variable before running it.

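```python
import os

from langchain.llms import OpenAI
from langchain.chains.summarize import load_summarize_chain

# temperature=0 reduces randomness in the model's output
llm = OpenAI(temperature=0, openai_api_key=os.environ['OPENAI_API_KEY'])

# map_reduce summarizes each document separately, then combines the summaries
chain = load_summarize_chain(llm, chain_type="map_reduce", verbose=False)

summary = chain.run(docs)
print(summary)
```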

Running this code prints a summary of your documents. To see what LangChain is doing under the hood, set verbose to True; LangChain then prints the prompts it builds. You will see that it automatically wraps your document content in a summarization prompt. With map_reduce, each document is first summarized on its own, and the individual summaries are then sent to OpenAI in a final prompt that produces the overall summary.
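To make the map_reduce idea concrete, here is a minimal sketch in plain Python. It is not LangChain's implementation: the prompts, map_reduce_summarize, and the fake_llm stand-in are all hypothetical, and a real run would call OpenAI instead. LangChain's actual chain also handles details this sketch omits, such as intermediate summaries that are themselves too long.

```python
from typing import Callable, List

# Illustrative prompts; LangChain's built-in prompts are worded differently
MAP_PROMPT = "Write a concise summary of the following:\n\n{text}\n\nCONCISE SUMMARY:"
REDUCE_PROMPT = "Combine these summaries into one concise summary:\n\n{text}\n\nCONCISE SUMMARY:"


def map_reduce_summarize(texts: List[str], llm: Callable[[str], str]) -> str:
    """Summarize each text separately (map), then summarize the summaries (reduce)."""
    # Map step: one LLM call per document, so each call sees only one document
    partial_summaries = [llm(MAP_PROMPT.format(text=t)) for t in texts]
    # Reduce step: a single call over the concatenated partial summaries
    return llm(REDUCE_PROMPT.format(text="\n\n".join(partial_summaries)))


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: records each prompt and returns a placeholder."""
    calls.append(prompt)
    return f"summary {len(calls)}"


result = map_reduce_summarize(["doc A text", "doc B text", "doc C text"], fake_llm)
print(result)      # → summary 4
print(len(calls))  # → 4 (three map calls plus one reduce call)
```

The number of model calls grows with the number of documents, which is the price map_reduce pays for never putting all the text into one prompt.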

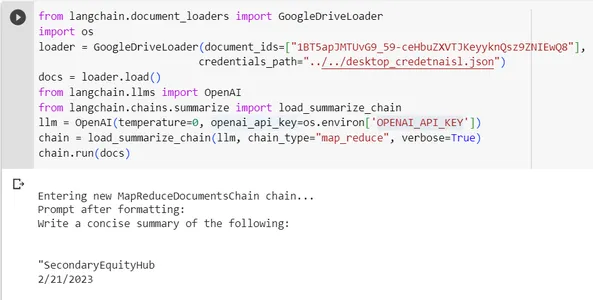

Below is a use case in which I stored a document about a product called SecondaryEquityHub in Google Drive and summarized it with the load_summarize_chain() function and the map_reduce chain type. I set verbose=True to see how LangChain works internally.

```python
import os

from langchain.document_loaders import GoogleDriveLoader
from langchain.llms import OpenAI
from langchain.chains.summarize import load_summarize_chain

loader = GoogleDriveLoader(document_ids=["ceHbuZXVTJKe1BT5apJMTUvG9_59-yyknQsz9ZNIEwQ8"],
                           credentials_path="../../desktop_credentials.json")
docs = loader.load()

llm = OpenAI(temperature=0, openai_api_key=os.environ['OPENAI_API_KEY'])

# verbose=True prints the prompts LangChain sends to the model
chain = load_summarize_chain(llm, chain_type="map_reduce", verbose=True)

chain.run(docs)
```

Output:

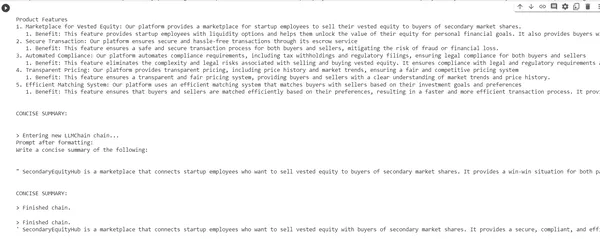

We can see that LangChain inserted its own prompt asking the model to summarize the given document.

The output contains a concise summary of the document, including the product features it describes, generated by the OpenAI LLM through LangChain.

More Use Cases

1. Research: Instead of reading an entire research paper word by word, researchers can use the summarization functionality to get a quick overview of the paper.

2. Education: Educational institutions can generate curated summaries of textbooks, academic books, and papers.

3. Business Intelligence: Data analysts often have to go through large sets of documents to extract insights. This functionality can greatly reduce that effort.

4. Legal Case Analysis: Legal professionals can use this functionality to pull the key arguments out of large collections of similar past case documents more quickly.


Asking Questions Related to Your Documents

Suppose we want to ask questions about the content of a document. For that, we load a different chain with LangChain's load_qa_chain() function and initialize it with a chain_type parameter. Here we use chain_type="stuff", the most straightforward chain type: it concatenates the content of all the documents and passes it to the LLM in a single prompt.
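Because "stuff" puts every document into one prompt, it only works while that prompt fits in the model's token limit mentioned in the introduction. The sketch below is illustrative only: the prompt text, build_stuff_prompt, and roughly_fits are hypothetical, the 4096-token budget is a placeholder for your model's real context window, and the 4-characters-per-token ratio is a rough rule of thumb for English text, not an exact count.

```python
from typing import List

# Illustrative prompt; LangChain's built-in "stuff" QA prompt is worded differently
STUFF_PROMPT = (
    "Use the following pieces of context to answer the question.\n\n"
    "{context}\n\nQuestion: {question}\nAnswer:"
)


def build_stuff_prompt(texts: List[str], question: str) -> str:
    """'Stuff' every document into a single prompt, as the stuff chain type does."""
    return STUFF_PROMPT.format(context="\n\n".join(texts), question=question)


def roughly_fits(prompt: str, max_tokens: int = 4096) -> bool:
    """Very rough check, assuming about 4 characters per English token."""
    return len(prompt) / 4 <= max_tokens


prompt = build_stuff_prompt(["Doc one.", "Doc two."], "Who is the founder?")
print(roughly_fits(prompt))  # → True
```

When the check fails for your documents, one of the other chain types below is usually a better fit than "stuff".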

Other chain types:

- map_reduce: The model first looks at each document individually and records its insights; at the end, it combines these insights and looks at the combined result to produce the final response.

- refine: The model goes through the documents one at a time, refining its answer with the new information it finds in each document.

- map_rerank: The model looks at each document individually, produces an answer, and assigns it a score. Finally, it returns the answer with the highest score.
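The refine and map_rerank strategies above can be sketched in plain Python as follows. This is a simplified illustration, not LangChain's code: refine_answer, map_rerank_answer, and the two stand-in "models" are hypothetical. In particular, llm_scored is assumed to return an (answer, score) pair directly, whereas LangChain asks the model to write a score into its reply and then parses it.

```python
from typing import Callable, List, Tuple


def refine_answer(texts: List[str], question: str, llm: Callable[[str], str]) -> str:
    """refine: answer from the first document, then revise the answer with each later one."""
    answer = llm(f"Context: {texts[0]}\nQuestion: {question}\nAnswer:")
    for text in texts[1:]:
        answer = llm(
            f"Existing answer: {answer}\nNew context: {text}\n"
            f"Refine the existing answer to '{question}' only if the new context helps."
        )
    return answer


def map_rerank_answer(texts: List[str], question: str,
                      llm_scored: Callable[[str], Tuple[str, int]]) -> str:
    """map_rerank: answer from each document separately, then keep the top-scoring answer."""
    scored = [llm_scored(f"Context: {t}\nQuestion: {question}") for t in texts]
    best_answer, _score = max(scored, key=lambda pair: pair[1])
    return best_answer


# Hypothetical stand-ins for a real model, for illustration only
seen: List[str] = []


def recording_llm(prompt: str) -> str:
    # Records each prompt and returns a versioned placeholder answer
    seen.append(prompt)
    return f"answer v{len(seen)}"


def fake_scored_llm(prompt: str) -> Tuple[str, int]:
    # Uses the context line as the "answer" and is confident only about launch dates
    context = prompt.splitlines()[0][len("Context: "):]
    return context, (100 if "launched" in context else 10)


sample_texts = ["Pricing starts at $10 per month.", "The product launched in 2021."]
print(refine_answer(sample_texts, "When did the product launch?", recording_llm))
# → answer v2
print(map_rerank_answer(sample_texts, "When did the product launch?", fake_scored_llm))
# → The product launched in 2021.
```

Note the trade-off: refine makes its calls one after another, while map_rerank's per-document calls are independent of each other.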

Next, we run our chain by passing the input documents and query.

```python
from langchain.chains.question_answering import load_qa_chain

# Reuses the llm and docs objects created in the previous sections
query = "Who is the founder of Analytics Vidhya?"

chain = load_qa_chain(llm, chain_type="stuff")

chain.run(input_documents=docs, question=query)
```

When you run this code, LangChain automatically combines a prompt template with your document content before sending it to the OpenAI LLM. In other words, LangChain handles the prompt engineering for you by supplying ready-made prompts for extracting answers from documents. To see the prompts it uses internally, set verbose=True, and the full prompt appears in the output.

```python
from langchain.chains.question_answering import load_qa_chain

query = "Who is the founder of Analytics Vidhya?"

# verbose=True prints the full prompt sent to the model
chain = load_qa_chain(llm, chain_type="stuff", verbose=True)

chain.run(input_documents=docs, question=query)
```

Build Your Chatbot

Now we need to turn this model into a question-answering chatbot. To do that, the chatbot must meet three requirements:

1. It should remember the chat history so it understands the context of the ongoing conversation.

2. The chat history should be updated after each question the user asks.

3. It should keep running until the user wants to exit the conversation.

```python
import os

from langchain.chains.question_answering import load_qa_chain
from langchain.document_loaders import GoogleDriveLoader
from langchain.llms import OpenAI


def load_langchain_qa():
    """Create the LangChain question-answering chain backed by OpenAI."""
    llm = OpenAI(temperature=0, openai_api_key=os.environ['OPENAI_API_KEY'])
    # Set verbose=True to print the prompts sent to the model
    return load_qa_chain(llm, chain_type="stuff", verbose=False)


def chatbot():
    """Handle user input and generate responses until the user types 'exit'."""
    print("Chatbot: Hi! I'm your friendly chatbot. Ask me anything or type 'exit' to end the conversation.")

    # Load the Google Docs once, before the conversation starts
    loader = GoogleDriveLoader(document_ids=["YOUR_DOCUMENT_ID"],
                               credentials_path="PATH/TO/credentials.json")
    docs = loader.load()

    chain = load_langchain_qa()

    # Past turns, stored as "You: ..." and "Chatbot: ..." lines
    chat_history = []

    while True:
        user_input = input("You: ")
        if user_input.strip().lower() == "exit":
            print("Chatbot: Goodbye! Have a great day.")
            break

        # Send the conversation so far with the new question so follow-ups keep their context
        history_text = "\n".join(chat_history)
        question = f"{history_text}\nYou: {user_input}" if chat_history else user_input

        # The documents are the context; chain.run() returns the answer as a string
        answer = chain.run(input_documents=docs, question=question).strip()
        if not answer:
            answer = "I couldn't find an answer to your question."

        # Update the chat history after every turn
        chat_history.append("You: " + user_input)
        chat_history.append("Chatbot: " + answer)

        print("Chatbot:", answer)


if __name__ == "__main__":
    chatbot()
```

The script loads the Google Docs and the OpenAI LLM once, keeps a list of the conversation, and updates that list after every turn. Each new question is sent together with the previous turns so the model can make sense of follow-up questions, and the while loop keeps running until the user types “exit”.
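Because the chat history grows with every turn, a long conversation can eventually push the prompt past the model's token limit. One simple mitigation is to keep only the most recent turns. Below is a minimal sketch of a hypothetical ChatHistory class built on collections.deque with a fixed maxlen, so the oldest lines are dropped automatically; the choice of max_turns is an assumption you would tune for your model.

```python
from collections import deque
from typing import Deque


class ChatHistory:
    """Hypothetical helper: keep only the most recent turns of a conversation."""

    def __init__(self, max_turns: int = 5):
        # Each turn is two lines (user + bot), so keep 2 * max_turns lines;
        # deque(maxlen=...) silently discards the oldest lines when full
        self._lines: Deque[str] = deque(maxlen=2 * max_turns)

    def add_turn(self, user: str, bot: str) -> None:
        self._lines.append(f"You: {user}")
        self._lines.append(f"Chatbot: {bot}")

    def as_text(self) -> str:
        return "\n".join(self._lines)


history = ChatHistory(max_turns=2)
for i in range(1, 4):
    history.add_turn(f"question {i}", f"answer {i}")
print(history.as_text())
# → You: question 2
#   Chatbot: answer 2
#   You: question 3
#   Chatbot: answer 3
```

In the chatbot loop, an object like this can stand in for the plain chat_history list, with as_text() supplying the recent conversation.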

Conclusion

In this article, we have seen how to create a chatbot that answers questions about the contents of your Google Docs. Integrating LangChain, OpenAI, and Google Drive is useful in many fields, whether medical, research, industrial, or engineering. Reading and analyzing entire documents by hand to get insights costs a lot of human time and effort; instead, we can use this technology to automate describing, summarizing, analyzing, and extracting insights from our data files.

Key Takeaways

- Google Docs can be fetched into Python using LangChain's GoogleDriveLoader class together with Google Drive API credentials.

- By integrating an OpenAI LLM with LangChain, we can summarize our documents and ask questions about them.

- We can get insights from multiple documents by choosing an appropriate chain type: stuff, map_reduce, refine, or map_rerank.

Frequently Asked Questions

A. To build an intelligent chatbot, you need appropriate data, a way to give an LLM such as ChatGPT access to that data, and conversation memory that stores the chat history so the bot understands the context.

A. One solution is to use LangChain's GoogleDriveLoader to fetch a Google Doc, initialize the OpenAI LLM with your API key, and then pass the loaded document content to the LLM.

A. First, enable the Google Drive API and create credentials for it. Then pass your file's document ID to LangChain's GoogleDriveLoader, and pass the loaded content to the OpenAI model.

A. ChatGPT cannot access your documents directly. You can either copy and paste the content into ChatGPT, or fetch the documents' content with LangChain and pass it to an OpenAI model that you initialize with your secret API key.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.