spaCy is a free, open-source library for Natural Language Processing (NLP), written in Python and Cython. Developers use it to build information extraction and natural language understanding systems. It is designed for production use and offers a concise, user-friendly API.

If you work with a lot of text, you’ll eventually want to know more about it. What, for example, is it about? What do the words mean in context? Who is doing what to whom? Which companies and products are mentioned? Which texts are similar to one another?

spaCy is intended for production use and helps you build applications that process and “understand” large volumes of text. You can use it to build information extraction or natural language understanding systems, or to pre-process text for deep learning.

Learning Objectives

- Discover the fundamentals of spaCy, such as tokenization, part-of-speech tagging, and named entity recognition.

- Understand spaCy’s text processing architecture, which is efficient and quick, making it appropriate for large-scale NLP jobs.

- Explore spaCy’s NLP pipelines and learn how to build custom pipelines for specific tasks.

- Explore the advanced capabilities of spaCy, including rule-based matching, syntactic parsing, and entity linking.

- Learn about the many pre-trained language models available in spaCy and how to utilize them for various NLP applications.

- Learn named entity recognition (NER) strategies for identifying and categorizing entities in text using spaCy.

This article was published as a part of the Data Science Blogathon.


Statistical Models

Some spaCy features work on their own, while others require statistical models to be loaded. These models enable spaCy to predict linguistic annotations, such as whether a word is a verb or a noun. spaCy offers statistical models for a variety of languages, and you can install them as individual Python packages. A model usually includes the following components:

- Binary weights for the part-of-speech tagger, dependency parser, and named entity recognizer, which are used to predict those annotations in context.

- Lexical entries in the vocabulary, that is, words and their context-independent attributes, such as shape or spelling.

- Data files, such as lemmatization rules and lookup tables.

- Word vectors, which are multi-dimensional meaning representations of words that let you determine how similar they are to each other.

- Configuration options, such as the language and processing pipeline settings, which put spaCy in the correct state when the model is loaded.

To use a model, first download it and then load it with spacy.load('model_name'), as seen below:

!python -m spacy download en_core_web_lg

!python -m spacy download en_core_web_sm

import spacy

nlp = spacy.load('en_core_web_sm')
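Loading a model raises an error when its package has not been installed. The following is a minimal sketch of one way to guard against that, assuming spaCy v3. The helper name load_or_blank is hypothetical (it is not part of spaCy), and falling back to a blank English pipeline, which contains only a tokenizer, is just one possible choice.

```python
import spacy
from spacy.util import is_package


def load_or_blank(model_name: str, lang: str = "en"):
    """Load an installed model package, or fall back to a blank pipeline.

    Hypothetical helper for illustration; it is not part of spaCy.
    """
    if is_package(model_name):
        return spacy.load(model_name)
    # A blank pipeline only contains the tokenizer: no tagger, parser or NER.
    return spacy.blank(lang)


nlp = load_or_blank("en_core_web_sm")
print(nlp.lang, nlp.pipe_names)
```

If the model is installed, pipe_names lists its trained components; otherwise the list is empty and only tokenization is available.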

Linguistic Annotations

spaCy offers several linguistic annotations to help you understand the grammatical structure of a document. These include word types, such as parts of speech, and how the words relate to one another. For example, when analyzing text, it makes a big difference whether a noun is the subject or the object of a sentence, or whether “Google” is used as a verb or refers to a specific website or company.

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp("Company Y is planning to acquire stake in X company for $23 billion")

for token in doc:

    print(token.text, token.pos_, token.dep_)

Even after a Doc has been processed (for example, split into individual words and annotated), it retains all of the information in the original text, including whitespace characters. You can always retrieve a token’s offset within the original string, or reconstruct the original by joining the tokens and their trailing whitespace. This way, you never lose information when processing text with spaCy.
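As a small sketch of this non-destructive behaviour, the snippet below uses a blank English pipeline (tokenizer only, so no model download is needed; this is an assumption made for brevity) and rebuilds the input from the tokens.

```python
import spacy

nlp = spacy.blank("en")  # tokenizer only; enough to illustrate offsets
text = "Company Y is planning to acquire stake in X company for $23 billion"
doc = nlp(text)

for token in doc:
    # token.idx is the character offset of the token in the original string,
    # token.whitespace_ is the whitespace (if any) that follows the token.
    print(token.idx, repr(token.text), repr(token.whitespace_))

# Joining every token with its trailing whitespace gives back the input.
rebuilt = "".join(token.text_with_ws for token in doc)
print(rebuilt == text)
```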

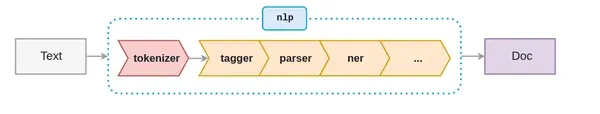

spaCy’s Processing Pipeline

When working with spaCy, the first step is to pass a text string to an nlp object. This object is a pipeline of processing components that the input text passes through.

As depicted in the figure above, the NLP pipeline consists of components such as a tokenizer, tagger, parser, and ner. The input text must pass through all of these components before we can work with the result.

Let me show you how to create an nlp object:

import spacy

nlp = spacy.load('en_core_web_sm')

# Process a text with the nlp object to create a Doc

doc = nlp("He went to play cricket with friends in the stadium")

Use the following code to determine the active pipeline components:

nlp.pipe_names

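If you don’t need every component, you can disable some of them. The following call disables the tagger and the parser; any other components, such as ner, stay active. (In spaCy v3, nlp.select_pipes(disable=[...]) is the preferred way to do this.)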

nlp.disable_pipes('tagger', 'parser')

Check the active pipeline component once more:

nlp.pipe_names
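The sketch below illustrates the same idea on a tiny pipeline, assuming spaCy v3. It starts from a blank English pipeline, adds the built-in rule-based sentencizer component, disables it temporarily with select_pipes, and then processes a batch of texts with nlp.pipe. The example texts are made up for illustration.

```python
import spacy

nlp = spacy.blank("en")      # starts with a tokenizer and no components
nlp.add_pipe("sentencizer")  # built-in rule-based sentence splitter
print(nlp.pipe_names)

# Used as a context manager, select_pipes disables components temporarily.
with nlp.select_pipes(disable=["sentencizer"]):
    names_inside = list(nlp.pipe_names)
names_after = list(nlp.pipe_names)  # restored when the block exits
print(names_inside, names_after)

# nlp.pipe processes texts as a stream, which suits larger collections.
texts = [
    "He went to play cricket. His friends joined him.",
    "The stadium was full.",
]
sentence_counts = [len(list(doc.sents)) for doc in nlp.pipe(texts)]
print(sentence_counts)
```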

Tokenization

spaCy begins processing by tokenizing the text, which means segmenting it into words, punctuation marks, and so on. This is done using language-specific rules.

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp("Reliance is looking at buying U.K. based analytics startup for $7 billion")

for token in doc:

    print(token.text)

First, the raw text is split on whitespace characters, similar to text.split(' '). The tokenizer then processes the text from left to right. It performs two checks on each substring:

- Does the substring match a tokenizer exception rule? “Don’t,” for example, contains no whitespace but should be split into two tokens, “do” and “n’t,” while “U.K.” should always remain a single token.

- Can a prefix, suffix, or infix be split off? For example, punctuation such as commas, periods, hyphens, or quotation marks.

If a match is found, the rule is applied and the tokenizer continues its loop, starting with the newly split substrings. This lets spaCy split complex, nested tokens such as combinations of abbreviations and multiple punctuation marks.
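The two checks above can be observed with the default English tokenizer. The sketch below uses a blank English pipeline (an assumption made so that no trained model is required) and also registers one extra exception rule; the “gimme” special case is purely illustrative.

```python
import spacy
from spacy.symbols import ORTH

nlp = spacy.blank("en")  # default English tokenizer rules, no trained model

# "Don't" matches an exception rule, "U.K." stays whole, "!" is split off.
tokens = [token.text for token in nlp("Don't leave the U.K. yet!")]
print(tokens)

# Register an additional exception rule (illustrative special case).
nlp.tokenizer.add_special_case("gimme", [{ORTH: "gim"}, {ORTH: "me"}])
custom = [token.text for token in nlp("gimme that")]
print(custom)
```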

Part-Of-Speech (POS) Tagging

A part of speech (POS) is a grammatical category that describes how a word is used in a sentence. English grammar traditionally distinguishes eight parts of speech:

- Noun

- Pronoun

- Adjective

- Verb

- Adverb

- Preposition

- Conjunction

- Interjection

Part-of-speech tagging automatically assigns a POS tag, that is, a syntactic category such as noun or verb, to each token in a sentence depending on how it is used. POS tags are useful for various downstream NLP tasks, such as feature engineering, language understanding, and information extraction.

POS tagging is a piece of cake in spaCy. POS tags are available as attributes on the Token object: token.pos_ holds the coarse-grained part of speech and token.tag_ the fine-grained tag:

import spacy

nlp = spacy.load('en_core_web_sm')

# Process the text to create a Doc object

doc = nlp("Reliance is looking at buying U.K. based analytics startup for $7 billion")

# Iterate over the tokens

for token in doc:

    # Print the token, its fine-grained tag, its coarse-grained POS, and an explanation of the tag

    print(token, token.tag_, token.pos_, spacy.explain(token.tag_))
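spacy.explain can also be called on its own to look up what a tag or label means, without loading a model. A brief sketch follows; the labels in the list are arbitrary examples.

```python
import spacy

# Arbitrary example labels: two fine-grained tags, one coarse-grained
# POS tag, and one dependency label.
labels = ["NNP", "VBZ", "ADJ", "nsubj"]
descriptions = {label: spacy.explain(label) for label in labels}

for label, description in descriptions.items():
    print(label, "->", description)
```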

The easiest way to visualize a Doc is with displaCy, spaCy’s built-in visualizer. displacy.serve starts a simple web server so that you can view the result in your browser, while displacy.render, used here with jupyter=True, displays it inline in a notebook. As its first argument, displaCy takes a single Doc object or a list of Doc objects, so you can create them however you like, using any model or modifications you wish. This is how our sample sentence and its dependencies look:

import spacy

from spacy import displacy

doc = nlp("board member meet with senior manager")

displacy.render(doc, style="dep", jupyter=True)
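Outside a notebook, displacy.render can return the markup as a string instead. The sketch below assumes spaCy v3 and, to avoid depending on a trained parser, builds a Doc by hand; the words, heads, and dependency labels are supplied manually for illustration and are not model predictions.

```python
import spacy
from spacy import displacy
from spacy.tokens import Doc

nlp = spacy.blank("en")

# Hand-annotated example: heads are absolute token indices,
# and the root token points at itself.
doc = Doc(
    nlp.vocab,
    words=["Board", "members", "meet", "managers"],
    pos=["NOUN", "NOUN", "VERB", "NOUN"],
    heads=[1, 2, 2, 2],
    deps=["compound", "nsubj", "ROOT", "dobj"],
)

# With jupyter=False the SVG markup is returned rather than displayed.
svg = displacy.render(doc, style="dep", jupyter=False)
print(svg[:60])
```

The returned string can be written to an .svg file and opened in a browser.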

Entity Detection

Entity detection, also known as entity recognition, is a more advanced form of language processing that identifies important elements in a text, such as places, people, organizations, and languages. This is particularly useful for quickly extracting information from text, since it helps you pick out key topics or important sections right away.

Let’s try entity detection on the following passage about the Amazon rainforest. We’ll use .label_ to retrieve the label of each recognized entity in the text and then use spaCy’s displaCy visualizer to look at these entities more visually.

import spacy

from spacy import displacy

nlp = spacy.load("en_core_web_sm")

doc = nlp("""The Amazon rainforest, alternatively,

the Amazon Jungle, also known in English as Amazonia,

is a moist broadleaf tropical rainforest in the Amazon

biome that covers most of the Amazon basin of South America.

This basin encompasses 7,000,000 km2 (2,700,000 sq mi), of

which 5,500,000 km2 (2,100,000 sq mi) are covered by the rainforest.

This region includes territory belonging to nine nations.""")

entities = [(i, i.label_, i.label) for i in doc.ents]

entities

Using displaCy, we can also view our input text with each detected entity highlighted in colour and labelled. Here, we pass style="ent" to tell displaCy that we want to view entities.

displacy.render(doc, style="ent", jupyter=True)
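The entity attributes can also be explored without a trained model. The sketch below swaps the statistical recognizer for spaCy’s rule-based entity_ruler component (assuming spaCy v3); the two patterns and the LOC label are hand-written assumptions for illustration, not what en_core_web_sm would necessarily predict.

```python
import spacy

nlp = spacy.blank("en")
ruler = nlp.add_pipe("entity_ruler")  # rule-based, not the statistical NER

# Hand-written phrase patterns (illustrative assumptions).
ruler.add_patterns([
    {"label": "LOC", "pattern": "Amazon basin"},
    {"label": "LOC", "pattern": "South America"},
])

doc = nlp("The rainforest covers most of the Amazon basin of South America.")

# Each entity is a Span with a string label and character offsets.
entities = [(ent.text, ent.label_, ent.start_char, ent.end_char) for ent in doc.ents]
for entity in entities:
    print(entity)
```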

Similarity

To assess similarity, we compare word vectors, or “word embeddings,” which are multi-dimensional semantic representations of words. Word vectors are often generated with an algorithm such as word2vec.

spaCy’s larger models also include dense, real-valued vectors that capture distributional similarity information.

import spacy

nlp = spacy.load("en_core_web_lg")

tokens = nlp("dog cat banana afskfsd")

for token in tokens:

    print(token.text, token.has_vector, token.vector_norm, token.is_oov)

import spacy

nlp = spacy.load("en_core_web_lg") # make sure to use larger model!

tokens = nlp("dog cat banana")

for token1 in tokens:

    for token2 in tokens:

        print(token1.text, token2.text, token1.similarity(token2))

In this case, the model’s predictions are fairly accurate. A dog is quite similar to a cat, whereas a banana is not very similar to either. Identical tokens are, of course, maximally similar to each other (although the score is not always exactly 1.0 because of vector math and floating-point imprecision).
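By default, spaCy’s similarity score is the cosine similarity between the two vectors. The sketch below shows that computation in plain Python; the three-dimensional vectors are made-up toy values chosen for illustration, not real spaCy embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors (made up): "dog" and "cat" point in similar directions.
vectors = {
    "dog": [0.9, 0.8, 0.1],
    "cat": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

for word1, vec1 in vectors.items():
    for word2, vec2 in vectors.items():
        print(word1, word2, round(cosine_similarity(vec1, vec2), 2))
```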

Conclusion

spaCy is a well-known open-source natural language processing (NLP) library that enables efficient and robust text processing. Thanks to its scalability, multilingual support, and ready-to-use features, spaCy has gained widespread acceptance in both academic and industrial applications. Its easy integration with deep learning frameworks, flexible pipelines, and active community make it a good choice for a wide range of NLP tasks.

- It has text processing capabilities that are both rapid and scalable.

- Multiple languages are supported, and pre-trained models are available.

- Ready-to-use features are available for standard NLP tasks.

- Domain-specific models can be customised and trained.

- Integration with deep learning frameworks is seamless.

- Used extensively in real-world NLP applications.

In summary, spaCy is an efficient, adaptable, and user-friendly NLP library offering a wide range of features and pre-trained models for text processing in various languages. Its speed, scalability, and customization options make it a popular choice for both research and production-level NLP applications.


Frequently Asked Questions

A. Yes, spaCy supports several languages and offers pre-trained models for various languages, making it suitable for multilingual NLP tasks.

A. spaCy’s key features include fast processing speed, efficient memory usage, ease of use, support for multiple languages, and built-in deep learning capabilities.

A. To tokenize text using spaCy, load the appropriate language model and call the nlp object on the text. The resulting document contains the tokens, and you can read attributes such as .text and .lemma_ from each of them.

A. spaCy allows you to build custom pipelines and train models on domain-specific data for tasks like text classification and named entity recognition.

A. spaCy provides pre-trained language models that were trained on large text corpora. These models can perform various NLP tasks without requiring additional training.

A. To extract named entities, use spaCy’s named entity recognition (NER) functionality. Access the detected entities using the ents attribute of the processed document.
