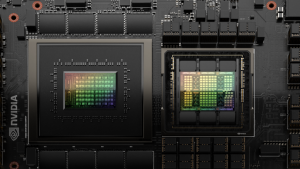

In an era where technology continuously pushes boundaries, Nvidia has once again left its mark. The company has launched the GH200 Grace Hopper Superchip, an advanced AI chip built to accelerate generative AI applications. This latest innovation promises enhanced performance, memory, and capabilities to propel AI to new heights.

Unveiling the GH200 Grace Hopper: A New Era of AI

Nvidia, a renowned chip manufacturer, has introduced its GH200 Grace Hopper platform, a next-generation AI chip designed for accelerated computing. The platform is built to handle the most demanding generative AI workloads, from large language models to recommender systems and vector databases.

A Glimpse into Grace Hopper Superchips

At the heart of the GH200 Grace Hopper platform lies the new Grace Hopper Superchip. Nvidia bills it as the world’s first processor to use HBM3e (High Bandwidth Memory 3e), and it will be offered in a range of configurations to suit different needs. Nvidia’s founder and CEO, Jensen Huang, highlights the platform’s memory technology, its ability to aggregate performance by connecting GPUs, and a server design that can be deployed across an entire data center.

NVIDIA NVLink: Paving the Path for Collaborative Power

The Grace Hopper Superchip can connect to additional Superchips using Nvidia NVLink technology. This connectivity lets multiple chips work together to deploy very large generative AI models. The high-speed NVLink also gives the GPU full access to CPU memory, for a combined 1.2TB of fast memory in dual-chip configurations.

Amplified Performance: A Leap in Memory Bandwidth

The introduction of HBM3e memory is a pivotal moment in AI advancement. HBM3e offers 50% more memory bandwidth than its predecessor, HBM3, and delivers 10TB/sec of combined bandwidth. Together with the platform's larger memory capacity, this lets the GH200 Grace Hopper platform run models 3.5 times larger than its predecessor could handle, with a corresponding boost in performance.
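
To put the bandwidth figure in perspective, a back-of-envelope calculation can estimate how long it takes to read a block of data from memory once. The sketch below assumes the full 10TB/sec quoted above is sustained (real workloads typically achieve less) and uses decimal units. The helper name `seconds_per_pass` and the 300GB working set are hypothetical, chosen only for illustration.

```python
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes

# Combined HBM3e bandwidth quoted in the article, in bytes per second.
COMBINED_BANDWIDTH = 10 * TB


def seconds_per_pass(num_bytes: float, bandwidth: float = COMBINED_BANDWIDTH) -> float:
    """Return the time to read num_bytes once at a sustained bandwidth.

    Assumes the peak bandwidth is fully sustained, so the result is a
    lower bound rather than a measured figure.
    """
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bandwidth


if __name__ == "__main__":
    working_set = 300 * GB  # hypothetical working set, not an Nvidia figure
    print(f"{seconds_per_pass(working_set) * 1000:.1f} ms per full pass")
```

Under these assumptions, one full pass over 300GB takes about 30 milliseconds.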

Empowering Businesses: The Road Ahead

Leading system manufacturers are gearing up to release platforms based on the GH200 Grace Hopper in the second quarter of 2024. Nvidia’s unwavering commitment to empowering data centers and enhancing AI development is evident through this revolutionary product. As the AI landscape evolves, Nvidia’s hardware innovations continue to reshape the boundaries of possibility.

Revolutionizing AI Training and Inference

The GH200 Grace Hopper platform’s potential extends to the training and fine-tuning of large language models. With the ability to handle models with hundreds of billions of parameters, and the prospect of soon exceeding a trillion parameters, Nvidia’s hardware innovation simplifies AI development. This advancement means AI models can be trained and inferenced using compact networks, eliminating the need for extensive supercomputing resources.
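
A rough way to see why memory capacity matters at these model sizes is to estimate the footprint of the weights alone. The sketch below is a simplification under stated assumptions: 2 bytes per parameter (16-bit weights), decimal units, and the 1.2TB of fast memory quoted earlier for dual-chip configurations. It ignores activations, optimizer state, and caches, all of which add to the real requirement. The function names are hypothetical.

```python
# Fast memory quoted for the dual-chip configuration: 1.2TB, in bytes.
FAST_MEMORY_BYTES = 1_200_000_000_000


def weight_footprint_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Return the memory needed to store the model weights alone.

    The default of 2 bytes per parameter assumes 16-bit weights.
    """
    if num_params < 0 or bytes_per_param <= 0:
        raise ValueError("num_params must be >= 0 and bytes_per_param > 0")
    return num_params * bytes_per_param


def weights_fit(num_params: int, bytes_per_param: int = 2,
                capacity: int = FAST_MEMORY_BYTES) -> bool:
    """Return True if the weights alone fit within the given capacity."""
    return weight_footprint_bytes(num_params, bytes_per_param) <= capacity


if __name__ == "__main__":
    examples = [("175 billion", 175 * 10**9), ("1 trillion", 10**12)]
    for label, n in examples:
        size_gb = weight_footprint_bytes(n) / 10**9
        print(f"{label} parameters: {size_gb:.0f} GB, fits: {weights_fit(n)}")
```

Under these assumptions, a 175-billion-parameter model needs about 350GB for its weights and fits comfortably, while a trillion-parameter model needs about 2TB at 16-bit precision and would have to be spread across more chips or stored at lower precision.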

A Visionary Future for AI

Nvidia’s vision for AI extends beyond hardware. As AI becomes more integral to our lives, Nvidia is introducing a range of AI architectures for diverse needs. Collaborations with tech giants such as Microsoft, AWS, and Google Cloud showcase Nvidia’s role as an AI hardware provider of choice. Nvidia’s commitment to AI infrastructure, efficiency, and performance is also evident in its Spectrum-X Ethernet platform, which accelerates data processing and AI workloads in the cloud.

Our Say

By launching the GH200 Grace Hopper platform, Nvidia has once again proven its prowess in driving the AI revolution. Nvidia empowers AI developers and businesses to push boundaries and explore new horizons by providing unparalleled memory, performance, and connectivity. As Nvidia’s innovations continue to shape the AI landscape, we can anticipate an era of unprecedented AI achievements and discoveries.