Text generation has advanced significantly in recent years, thanks to pre-trained language models like GPT-2 (Generative Pre-trained Transformer 2). These models can generate human-like text from a given prompt. However, balancing creativity and coherence in the generated text remains challenging. In this article, we delve into text generation using GPT-2, exploring its principles, practical implementation, and the tuning of generation parameters to control the output. We’ll provide code examples for text generation with GPT-2 and discuss real-world applications, showing how this technology can be used effectively.

Learning Objectives

- The learners should be able to explain the foundational concepts of GPT-2, including its architecture, pre-training process, and autoregressive text generation.

- The learners should be proficient in fine-tuning GPT-2 for specific text generation tasks and controlling its output by adjusting parameters such as temperature, max_length, and top-k sampling.

- The learners should be able to identify and describe real-world applications of GPT-2 in various fields, such as creative writing, chatbots, virtual assistants, and data augmentation in natural language processing.

This article was published as a part of the Data Science Blogathon.


Understanding GPT-2

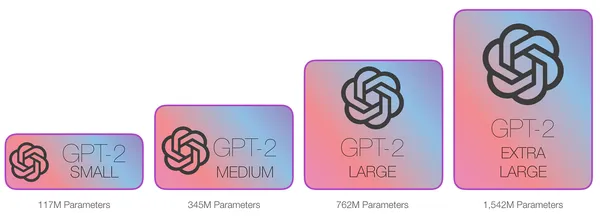

GPT-2, short for Generative Pre-trained Transformer 2, combines large-scale pre-training on a vast corpus of internet text with transfer learning to handle natural language understanding and text generation. This section examines these two ideas and how they enable GPT-2 to perform well across a range of language-related tasks.

Pre-training and Transfer Learning

One of GPT-2’s key innovations is pre-training on a massive corpus of internet text. This pre-training equips the model with general linguistic knowledge, allowing it to understand grammar, syntax, and semantics across various topics. This model can then be fine-tuned for specific tasks.

Research Reference: “Improving Language Understanding by Generative Pre-Training” by Radford et al. (2018)

Pre-training on Massive Text Corpora

- The Corpus of Internet Text

GPT-2’s journey begins with pre-training on a massive and diverse corpus of Internet text. This corpus comprises vast text data from the World Wide Web, encompassing various subjects, languages, and writing styles. This data’s sheer scale and diversity provide GPT-2 with a treasure trove of linguistic patterns, structures, and nuances.

- Equipping GPT-2 with Linguistic Knowledge

During the pre-training phase, GPT-2 learns to discern and internalize the underlying principles of language. It becomes proficient in recognizing grammatical rules, syntactic structures, and semantic relationships. By processing an extensive range of textual content, the model gains a deep understanding of the intricacies of human language.

- Contextual Learning

GPT-2’s pre-training involves contextual learning, examining words and phrases in the context of the surrounding text. This contextual understanding is a hallmark of its ability to generate contextually relevant and coherent text. It can infer meaning from the interplay of words within a sentence or document.

From Transformer Architecture to GPT-2

GPT-2 is built upon the Transformer architecture, which reshaped many natural language processing tasks. This architecture relies on self-attention mechanisms, which let the model weigh the importance of each word in a sequence relative to the others. The Transformer’s success laid the foundation for GPT-2.

Research Reference: “Attention Is All You Need” by Vaswani et al. (2017)
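To make the self-attention idea concrete, here is a minimal sketch in plain Python. It is not GPT-2’s actual implementation: it assumes a single attention head, made-up two-dimensional token vectors, and no learned projection matrices (a real Transformer derives queries, keys, and values from learned weights). It does apply the causal restriction GPT-2 uses, so each position attends only to itself and earlier positions.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current position against itself and every earlier position.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (illustrative numbers only), reused as Q, K, and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attn_out, attn_weights = causal_self_attention(x, x, x)
for i, w in enumerate(attn_weights):
    print(i, [round(p, 3) for p in w])
```

The first position can only attend to itself, so its weight list is `[1.0]`; later positions spread their weight over everything seen so far.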

How Does GPT-2 Work?

At its core, GPT-2 is an autoregressive model. It predicts the next token (a word or word piece) in a sequence based on the preceding tokens, appends that token to the sequence, and repeats. This process continues until the desired length is reached or the model emits its end-of-text token. At each step, GPT-2 uses a softmax function to turn its output scores into a probability distribution over the vocabulary.
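The loop described above can be sketched without loading any model. The example below is a toy under stated assumptions: `VOCAB`, `TOY_LOGITS`, and `toy_generate` are hypothetical names, the scores are invented, and the stand-in "model" looks only at the last token, whereas GPT-2 conditions on the whole preceding sequence. It picks the most probable token at each step (greedy decoding) to keep the result deterministic.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

VOCAB = ["once", "upon", "a", "time", "<eos>"]

# Hypothetical stand-in for the model: next-token logits keyed by the last token.
TOY_LOGITS = {
    "once": [0.0, 3.0, 0.5, 0.1, 0.0],
    "upon": [0.0, 0.0, 3.0, 0.5, 0.1],
    "a":    [0.1, 0.0, 0.0, 3.0, 0.5],
    "time": [0.0, 0.1, 0.0, 0.0, 3.0],
}

def toy_generate(prompt_tokens, max_length=10):
    """Append one token at a time until max_length or the end token."""
    tokens = list(prompt_tokens)
    while len(tokens) < max_length and tokens[-1] != "<eos>":
        probs = softmax(TOY_LOGITS[tokens[-1]])
        next_token = VOCAB[probs.index(max(probs))]  # greedy: take the most likely token
        tokens.append(next_token)
    return tokens

print(toy_generate(["once"]))
# ['once', 'upon', 'a', 'time', '<eos>']
```

Replacing the greedy choice with random sampling from `probs` gives the more varied behavior that temperature and top-k sampling control.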

Code Implementation

Setting Up the Environment

Before diving into GPT-2 text generation, it’s essential to set up your Python environment and install the required libraries:

Note: If ‘transformers’ is not already installed, use: !pip install transformers

import torch

from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Loading pre-trained GPT-2 model and tokenizer

model_name = "gpt2" # Model size can be switched accordingly (e.g., "gpt2-medium")

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

model = GPT2LMHeadModel.from_pretrained(model_name)

# Set the model to evaluation mode

model.eval()

Generating Text with GPT-2

Now, let’s define a function to generate text based on a given prompt:

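def generate_text(prompt, max_length=100, temperature=0.8, top_k=50):
    # Convert the prompt into a tensor of token IDs
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    # Sample a continuation; max_length counts the prompt tokens as well
    output = model.generate(
        input_ids,
        max_length=max_length,
        temperature=temperature,
        top_k=top_k,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated pad token
        do_sample=True
    )
    # Convert the generated token IDs back into text
    generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
    return generated_text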

Applications and Use Cases

Creative Writing

GPT-2 has found applications in creative writing. Authors and content creators use it to generate ideas, plotlines, and even entire stories. The generated text can serve as inspiration or a starting point for further refinement.

Chatbots and Virtual Assistants

Chatbots and virtual assistants benefit from GPT-2’s natural language generation capabilities. They can provide more engaging and contextually relevant responses to user queries, enhancing the user experience.

Data Augmentation

GPT-2 can be used for data augmentation in data science and natural language processing tasks. Generating additional text data helps improve the performance of machine learning models, especially when training data is limited.

Fine-Tuning for Control

While GPT-2 generates impressive text, tuning its generation parameters is essential to control the output. Here are key parameters to consider:

- Max Length: This parameter caps the total length of the output in tokens. In the generate call above, the count includes the prompt. Setting it appropriately prevents excessively long responses.

- Temperature: Temperature controls the randomness of the generated text. Higher values (e.g., 1.0) make the output more random, while lower values (e.g., 0.7) concentrate probability on the most likely tokens and make it more focused.

- Top-k Sampling: Top-k sampling restricts each step to the k most probable tokens, which removes unlikely choices and makes the text more coherent.
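The effect of temperature and top-k can be seen on a small set of scores. The sketch below is an illustration, not the transformers library’s implementation: `sample_next` is a hypothetical helper, and the four logits are made-up values for a four-token vocabulary. It keeps the k highest-scoring tokens, divides their scores by the temperature, and samples from the resulting softmax distribution.

```python
import math
import random

def sample_next(logits, temperature=0.8, top_k=50, rng=random):
    """Sample a token index after top-k filtering and temperature scaling."""
    # Keep only the indices of the k highest logits.
    k = min(top_k, len(logits))
    kept = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Divide by temperature: values below 1 sharpen the distribution, above 1 flatten it.
    scaled = [logits[i] / temperature for i in kept]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Draw one index according to the filtered, rescaled probabilities.
    choice = rng.choices(kept, weights=probs, k=1)[0]
    return choice, dict(zip(kept, probs))

logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
_, sharp = sample_next(logits, temperature=0.5, top_k=2)
_, flat = sample_next(logits, temperature=2.0, top_k=2)
print(sharp)  # token 0 gets about 0.88 of the probability
print(flat)   # token 0 gets about 0.62 of the probability
```

With `top_k=2`, tokens 2 and 3 can never be chosen. Lowering the temperature from 2.0 to 0.5 shifts more probability onto the top-scoring token, which is why low temperatures read as more focused.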

Adjusting Parameters for Control

To generate more controlled text, experiment with different parameter settings. For example, the call below keeps the default temperature and top-k values and caps the output at 40 tokens:

# Example prompt

prompt = "Once upon a time"

generated_text = generate_text(prompt, max_length=40)

# Print the generated text

print(generated_text)

Output (sampling is random, so each run produces different text): Once upon a time, the city had been transformed into a fortress, complete with its secret vault containing some of the most important secrets in the world. It was this vault that the Emperor ordered his

Note: Adjust the maximum length based on the application.

Conclusion

In this article, you learned how text generation with GPT-2 works and how this language model can be applied to a variety of tasks. We’ve delved into its underlying principles, provided code examples, and discussed real-world use cases.

Key Takeaways

- GPT-2 is a pre-trained autoregressive language model that generates text based on given prompts.

- Tuning generation parameters like max length, temperature, and top-k sampling allows control over the generated text.

- Applications of GPT-2 range from creative writing to chatbots and data augmentation.

Frequently Asked Questions

A. GPT-2 is a larger and more powerful model than GPT-1, capable of generating more coherent and contextually relevant text.

A. Fine-tune GPT-2 on domain-specific data to make it more contextually aware and useful for specific applications.

A. Ethical considerations include ensuring that generated content is not misleading, offensive, or harmful. Reviewing and curating the generated text to align with ethical guidelines is crucial.

A. Yes, there are various language models, including GPT-3, BERT, and XLNet, each with strengths and use cases.

A. Evaluation metrics such as BLEU score, ROUGE score, and human evaluation can assess the quality and relevance of generated text for specific tasks.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.