Introduction

We live in an age where large language models (LLMs) are on the rise. One of the first things that comes to mind nowadays when we hear "LLM" is OpenAI’s ChatGPT. But did you know that ChatGPT is not exactly an LLM, but an application that runs on LLMs such as GPT-3.5 and GPT-4? We can develop AI applications very quickly by prompting an LLM. There is a limitation, though: an application may need to prompt an LLM many times, which means writing glue code again and again. LangChain makes it easy to overcome this limitation.

This article is about LangChain and its applications. I assume you have a fair understanding of ChatGPT as an application. For more details about LLMs and the basic principles of Generative AI, you can refer to my previous article on prompt engineering in generative AI.

Learning Objectives

- Getting to know the basics of the LangChain framework.

- Knowing why LangChain makes LLM application development faster.

- Comprehending the essential components of LangChain.

- Understanding how to apply LangChain in Prompt Engineering.

This article was published as a part of the Data Science Blogathon.


What is LangChain?

LangChain, created by Harrison Chase, is an open-source framework for developing applications powered by language models. It is available as two packages, one for Python and one for JavaScript (TypeScript), and focuses on composition and modularity.

Why Use LangChain?

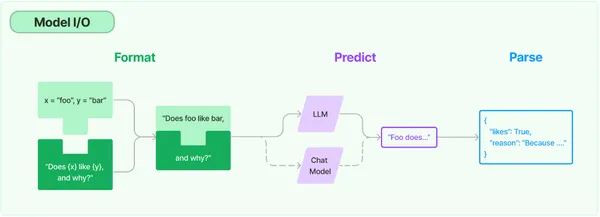

When we use ChatGPT, the application calls OpenAI’s models directly through OpenAI’s API. With LangChain, such API calls are built from components such as prompts, models, and output parsers. LangChain simplifies the difficult task of working and building with AI models. It does this in two ways:

- Integration: Brings external data, such as files, API data, and other applications, to LLMs.

- Agency: Lets LLMs interact with their environment by making decisions.

Through components, customized chains, speed, and community, LangChain helps avoid friction points while building complex LLM-based applications.

Components of LangChain

There are 3 main components of LangChain.

- Language models: LangChain provides a common interface for calling language models and integrates the following types of models:

i) LLMs: The model takes a text string as input and returns a text string.

ii) Chat models: The model takes a list of chat messages as input and returns a chat message. These models are backed by a language model.

- Prompts: Help build templates and enable dynamic selection and management of model inputs. A prompt is a set of instructions a user passes to guide the model in producing consistent language-based output, such as answering questions, completing sentences, or writing summaries.

- Output Parsers: Extract information from model outputs, making it possible to get more structured information than plain text.
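To make the division of labour concrete, here is a minimal plain-Python sketch of how the three components fit together. It does not use LangChain: `fake_llm` is a hypothetical stand-in for a real model (string in, string out), the template is an ordinary format string, and `parse_csv` is a toy output parser. The point is only the shape of the pipeline: template, then model, then parser.

```python
from typing import Callable

# Hypothetical stand-in for an LLM: takes a prompt string, returns a string.
# A real model would be called over the network; this one returns a canned answer.
def fake_llm(prompt: str) -> str:
    return "idli, dosa, sambar"

# Prompt component: a reusable template with a placeholder.
TEMPLATE = "List three dishes from {cuisine} cuisine, separated by commas."

# Output parser component: turns the model's raw text into a Python list.
def parse_csv(text: str) -> list[str]:
    return [item.strip() for item in text.split(",") if item.strip()]

def run_pipeline(llm: Callable[[str], str], cuisine: str) -> list[str]:
    prompt = TEMPLATE.format(cuisine=cuisine)  # 1. build the prompt
    raw = llm(prompt)                          # 2. call the model
    return parse_csv(raw)                      # 3. parse the output

print(run_pipeline(fake_llm, "South Indian"))
```

Swapping `fake_llm` for a real model call would leave the template and parser untouched, which is the modularity LangChain is built around.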

Practical Application of LangChain

Let us start working with an LLM with the help of LangChain.

# Replace with your own OpenAI API key

openai_api_key='sk-MyAPIKey'

Now, we will work with the nuts and bolts of LLMs to understand the fundamental principles of LangChain.
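Hard-coding the key is fine for a quick demo, but a common practice is to read it from an environment variable so it never ends up in a notebook or repository. A minimal sketch, assuming the key is stored in `OPENAI_API_KEY` (the variable the OpenAI client libraries look for by default); `load_api_key` is a hypothetical helper, not part of LangChain:

```python
import os

def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, failing loudly if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key

# Usage (assumes the variable was exported in the shell beforehand):
# openai_api_key = load_api_key()
```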

We will start with chat messages. Each message has one of three types: system, human, or AI. Their roles are:

- System – Helpful background context that guides AI.

- Human – Message representing the user.

- AI – Messages representing the AI’s responses.
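These three message types correspond to the roles in OpenAI’s chat format, where system, human, and AI messages map to the `system`, `user`, and `assistant` roles. The sketch below uses plain dictionaries (no LangChain) to show the shape of such a conversation; `ROLE_MAP`, `to_openai_format`, and the tuple representation are hypothetical illustrations, not LangChain’s internal code.

```python
# Map the article's role names to the role strings used by OpenAI's chat format.
ROLE_MAP = {"system": "system", "human": "user", "ai": "assistant"}

def to_openai_format(conversation: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Convert (role, text) pairs into OpenAI-style message dictionaries."""
    return [{"role": ROLE_MAP[role], "content": text} for role, text in conversation]

conversation = [
    ("system", "You are a nice AI bot that helps a user figure out what to eat."),
    ("human", "I like Bengali food, what should I eat?"),
]
print(to_openai_format(conversation))
```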

#Importing necessary packages

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage, AIMessage

chat = ChatOpenAI(temperature=.5, openai_api_key=openai_api_key)

# temperature controls output randomness (0 = least random, higher = more random)

We have imported ChatOpenAI, HumanMessage, SystemMessage, and AIMessage. Temperature is a parameter that controls the degree of randomness of the output. The OpenAI API accepts values from 0 to 2: the higher the temperature, the more random the output, while a temperature of 0 gives the least random output. We have set it to 0.5.
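A common way to picture what temperature does: the model’s scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities. Low temperatures sharpen the distribution toward the top choice; high ones flatten it. The sketch below illustrates this with made-up logits. It is the textbook formulation, not OpenAI’s actual sampling code, and it requires a temperature greater than 0 (at exactly 0, implementations typically just pick the most likely token).

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by temperature (> 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]   # made-up scores for three candidate tokens
for t in (0.2, 0.5, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top token’s probability shrinking as the temperature rises, which is why higher settings produce more varied text.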

# Creating a Chat Model

chat(

[

SystemMessage(content="You are a nice AI bot that helps a user figure out

what to eat in one short sentence"),

HumanMessage(content="I like Bengali food, what should I eat?")

]

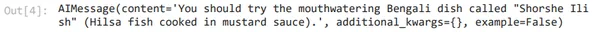

)In the above lines of code, we have created a chat model. Then, we typed two messages: one is a system message that will figure out what to eat in one short sentence, and the other is a human message asking what Bengali food the user should eat. The AI message is:

We can also pass chat history, including earlier responses from the AI.

# Passing chat history

chat(

[

SystemMessage(content="You are a nice AI bot that helps a user figure out

where to travel in one short sentence"),

HumanMessage(content="I like the spirtual places, where should I go?"),

AIMessage(content="You should go to Madurai, Rameswaram"),

HumanMessage(content="What are the places I should visit there?")

]

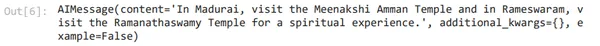

)In the above case, we are saying that the AI bot suggests places to travel in one short sentence. The user is saying that he likes to visit spiritual places. This sends a message to the AI that the user intends to visit Madurai and Rameswaram. Then, the user asked what the places to visit there were.

It is noteworthy that the final question never names the destination. Instead, the model referred to the chat history to work out where the user is going and responded accordingly.
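This works because the chat model itself keeps no memory between calls: it only "knows" about Madurai and Rameswaram because the earlier AI message is sent again along with the new question. A minimal sketch of that bookkeeping, using a hypothetical `ChatSession` class and a fake model function instead of a real API call:

```python
from typing import Callable

Message = dict[str, str]

class ChatSession:
    """Keeps the running conversation and resends all of it on every turn."""

    def __init__(self, system_prompt: str, model: Callable[[list[Message]], str]):
        self.model = model
        self.history: list[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.model(self.history)          # the model sees the full history
        self.history.append({"role": "assistant", "content": reply})
        return reply

# Hypothetical fake model: reports how many messages it was given.
def fake_model(messages: list[Message]) -> str:
    return f"I can see {len(messages)} messages so far."

session = ChatSession("You help a user figure out where to travel.", fake_model)
print(session.ask("I like spiritual places, where should I go?"))
print(session.ask("What are the places I should visit there?"))
```

The second call receives four messages, including the first answer, which is exactly what passing `AIMessage` objects by hand achieves in the example above.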

How Do the Components of LangChain Work?

Let’s see how the three components of LangChain, discussed earlier, make an LLM work.

The Language Model

The first component is a language model. The OpenAI API offers a diverse set of models with different capabilities, and these models can be customized for specific applications.

# Importing OpenAI and creating a model

from langchain.llms import OpenAI

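llm = OpenAI(model_name="text-ada-001", openai_api_key=openai_api_key)

The model has been changed from the default to text-ada-001, the fastest and cheapest model in the GPT-3 series. (OpenAI has since retired text-ada-001, as well as text-davinci-003 used later in this article; gpt-3.5-turbo-instruct is the suggested replacement for completion-style calls.) Now, we are going to pass a simple string to the language model.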

# Passing regular string into the language model

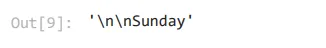

llm("What day comes after Saturday?")

Thus, we obtained the desired output.

The next component is the extension of the language model, i.e., a chat model. A chat model takes a series of messages and returns a message output.

from langchain.chat_models import ChatOpenAI

from langchain.schema import HumanMessage, SystemMessage, AIMessage

chat = ChatOpenAI(temperature=1, openai_api_key=openai_api_key)

We have set the temperature to 1 to make the output more random.

# Passing series of messages to the model

chat(

[

SystemMessage(content="You are an unhelpful AI bot that makes a joke at

whatever the user says"),

HumanMessage(content="I would like to eat South Indian food, what are some

good South Indian food I can try?")

]

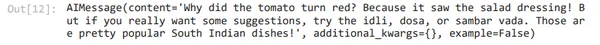

)Here, the system is passing on the message that the AI bot is an unhelpful one and makes a joke at whatever the users say. The user is asking for some good South Indian food suggestions. Let us see the output.

We can see that it throws in some jokes at the beginning, but it still suggests some good South Indian dishes.

The Prompt

The second component is the prompt. It acts as the input to the model and is rarely hard-coded. A prompt is usually built from multiple components, and a prompt template is responsible for constructing this input. LangChain makes working with prompts easier.

# Instructional Prompt

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)

prompt = """

Today is Monday, tomorrow is Wednesday.

What is wrong with that statement?

"""

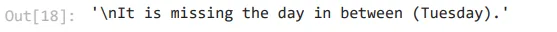

llm(prompt)The above prompts are of instructional type. Let us see the output

So, it correctly picked up the error.

Prompt templates are like predefined recipes for generating prompts for LLMs. A template can include instructions, few-shot examples, and the specific context and questions for a given task.
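As a rough illustration of such a recipe, the sketch below assembles an instruction, a few worked examples, and the user’s input into one prompt string with ordinary Python string formatting. `build_few_shot_prompt` and the example data are hypothetical; LangChain provides its own classes for this (such as `FewShotPromptTemplate`), whose API is not reproduced here.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], question: str) -> str:
    """Combine an instruction, (input, output) examples and a new input into one prompt."""
    example_block = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{instruction}\n\n{example_block}\n\nInput: {question}\nOutput:"

examples = [
    ("happy", "sad"),
    ("tall", "short"),
]
prompt = build_few_shot_prompt("Give the antonym of every input word.", examples, "fast")
print(prompt)
```

Ending the prompt with a dangling `Output:` nudges the model to continue the pattern set by the examples.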

from langchain.llms import OpenAI

from langchain import PromptTemplate

llm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)

# Notice "location" below, that is a placeholder for another value later

template = """

I really want to travel to {location}. What should I do there?

Respond in one short sentence

"""

prompt = PromptTemplate(

input_variables=["location"],

template=template,

)

final_prompt = prompt.format(location='Kanyakumari')

print (f"Final Prompt: {final_prompt}")

print ("-----------")

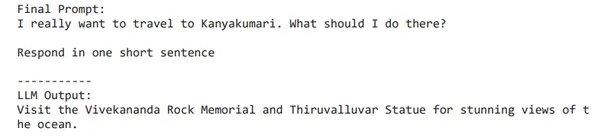

print (f"LLM Output: {llm(final_prompt)}")So, we have imported packages at the outset. The model we have used here is text-DaVinci-003, which can do any language task with better quality, longer output, and consistent instructions compared to Curie, Babbage, or Ada. So, now we have created a template. The input variable is location, and the value is Kanyakumari.
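Conceptually, `prompt.format(location='Kanyakumari')` substitutes the value into the `{location}` placeholder, much like Python’s own `str.format` (LangChain’s default template format uses the same curly-brace syntax). The plain-Python sketch below mimics that behaviour and adds a check that the declared input variables match the placeholders, using the standard library’s `string.Formatter`. `SimplePromptTemplate` is a hypothetical class, not LangChain’s implementation.

```python
from string import Formatter

class SimplePromptTemplate:
    """Hypothetical, minimal stand-in for a prompt template with {placeholders}."""

    def __init__(self, template: str, input_variables: list[str]):
        # Collect the field names that appear inside {...} in the template.
        found = {field for _, field, _, _ in Formatter().parse(template) if field}
        if found != set(input_variables):
            raise ValueError(f"template expects {sorted(found)}, got {sorted(input_variables)}")
        self.template = template
        self.input_variables = input_variables

    def format(self, **kwargs: str) -> str:
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing values for {sorted(missing)}")
        return self.template.format(**kwargs)

template = "I really want to travel to {location}. What should I do there?"
prompt = SimplePromptTemplate(template, input_variables=["location"])
print(prompt.format(location="Kanyakumari"))
```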

The Output Parser

The third component is the output parser, which formats the output of a model. A parser extracts the model’s text output into a desired format.

from langchain.output_parsers import StructuredOutputParser, ResponseSchema

from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate

from langchain.llms import OpenAIllm = OpenAI(model_name="text-davinci-003", openai_api_key=openai_api_key)# How you would like your response structured. This is basically a fancy prompt template

response_schemas = [

ResponseSchema(name="bad_string", description="This a poorly formatted user input string"),

ResponseSchema(name="good_string", description="This is your response, a reformatted response")

]

# How you would like to parse your output

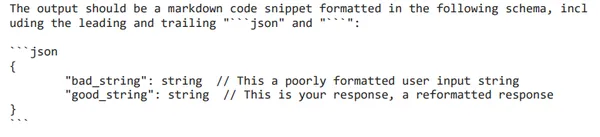

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)# See the prompt template you created for formatting

format_instructions = output_parser.get_format_instructions()

print (format_instructions)

template = """

You will be given a poorly formatted string from a user.

Reformat it and make sure all the words are spelled correctly

{format_instructions}

% USER INPUT:

{user_input}

YOUR RESPONSE:

"""

prompt = PromptTemplate(

input_variables=["user_input"],

partial_variables={"format_instructions": format_instructions},

template=template

)

promptValue = prompt.format(user_input="welcom to Gugrat!")

print(promptValue)

llm_output = llm(promptValue)

llm_output

output_parser.parse(llm_output)

The language model only returns a string, so if we need a JSON object, we have to parse that string. The response schemas above define two fields, bad_string and good_string. We then created a prompt template that injects the parser’s format instructions through partial_variables, and finally passed the model’s raw output to output_parser.parse() to turn it into a Python dictionary.
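To see what the parsing step amounts to, here is a simplified plain-Python sketch. The format instructions ask the model to wrap its answer in a fenced `json` block, so the parser locates that block, decodes it with `json.loads`, and checks that the expected keys are present. This is an illustrative stand-in under that assumption, not LangChain’s actual `StructuredOutputParser` code, and the sample model output is made up.

```python
import json
import re

FENCE = "`" * 3  # three backticks, built here so this example can itself sit inside a code fence

def parse_structured_output(text: str, required_keys: list[str]) -> dict:
    """Extract the JSON object from a fenced json block (or bare text) and validate keys."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)\s*" + re.escape(FENCE)
    match = re.search(pattern, text, re.DOTALL)
    payload = match.group(1) if match else text.strip()
    data = json.loads(payload)
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"model output is missing keys: {missing}")
    return data

# Made-up example of what the model might return for "welcom to Gugrat!"
sample = (
    FENCE + "json\n"
    + '{"bad_string": "welcom to Gugrat!", "good_string": "Welcome to Gujarat!"}'
    + "\n" + FENCE
)
print(parse_structured_output(sample, ["bad_string", "good_string"]))
```

Validating the keys up front turns a malformed model reply into a clear error instead of a `KeyError` somewhere further down the application.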

Conclusion

In this article, we have briefly examined the key components of LangChain and their applications. At the outset, we understood what LangChain is and how it simplifies the difficult task of working and building with AI models. We have also understood the key components of LangChain, viz. language models (the base that produces the desired output), prompts (a set of instructions passed on by a user to guide the model to produce a consistent output), and output parsers (which make it possible to get more structured information than just text as output). Understanding these components gives us a strong foundation for building customized applications.

Key Takeaways

- LLMs have the capacity to revolutionize AI. They open up a plethora of opportunities for information seekers, since almost any question can be asked in plain language.

- While basic ChatGPT prompt engineering works well for many purposes, LangChain makes LLM application development much faster.

- LangChain’s integrations with various AI platforms help make better use of LLMs.

Frequently Asked Questions

Q1. What are the two packages of LangChain?

Ans. LangChain is available as a Python package and a JavaScript (TypeScript) package.

Q2. What is temperature in an LLM?

Ans. Temperature is a parameter that controls the degree of randomness of the output. The OpenAI API accepts values from 0 to 2, with 0 giving the least random output.

Q3. Which is the fastest model in the GPT-3 series?

Ans. text-ada-001 is the fastest model in the GPT-3 series.

Q4. What is an output parser?

Ans. An output parser extracts a model’s text output into a desired format.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.