Introduction

In September 2023, Mistral AI released Mistral-7b, an open-weight model under the Apache 2.0 license. It took the AI sphere by storm and topped the Open LLM leaderboard, outperforming bigger models like Llama 2 13b on all the benchmarks reported at its release. At the time of writing, the models topping the leaderboard are derived from the Mistral base model, which shows that Mistral-7b is a capable model with a lot of potential. However, for many tasks, a base model is not what we want. Models usually need to be trained on custom datasets to perform better at targeted tasks like coding, role-play, chat, etc. Even a 7B-parameter model is expensive to fine-tune, because full fine-tuning requires a significant amount of GPU memory. But thanks to recent advancements in model quantization and LoRA, we can now fine-tune and run inference on small LLMs for free.

Learning Objectives

- Learn about LLM fine-tuning.

- Understand the basics of LoRA and QLoRA.

- Explore tools and techniques for fine-tuning.

- Implement supervised fine-tuning (SFT) of Mistral-7b on Colab using Unsloth and Hugging Face’s trl library.

This article was published as a part of the Data Science Blogathon.

Fine-tuning LLMs

Fine-tuning is an effective way to teach a model task-specific behavior. It is the process of training a pre-trained model further on custom datasets so that it performs better on targeted tasks. During fine-tuning, the parameters of the base model are updated through transfer learning to reflect the newly learned knowledge.

Full fine-tuning updates all the model parameters, which can be very expensive and out of reach for a large share of developers. This is where LoRA and QLoRA come into the picture. So, let’s understand LoRA and QLoRA.

LoRA

LoRA (Low-Rank Adaptation) is an efficient method for fine-tuning language models with fewer computing resources by reducing the number of trainable parameters. It builds on low-rank approximation: representing a large matrix as closely as possible by the product of two much smaller matrices.

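Note: The rank of a matrix is the number of linearly independent rows (equivalently, columns). Low-rank approximation is analogous to dimensionality reduction, and SVD (Singular Value Decomposition) and PCA (Principal Component Analysis) are classic techniques for it. LoRA does not decompose the original weight matrices with these methods; it learns the low-rank factors of the weight update directly during training.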
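To make the idea of rank concrete, here is a minimal sketch in plain Python. The vectors `u` and `v` are made-up values chosen only for illustration. It builds a rank-1 matrix from two small vectors and compares how many numbers each representation has to store.

```python
# Two small vectors: 3 + 4 = 7 stored numbers in total.
u = [1.0, 2.0, 3.0]
v = [4.0, 5.0, 6.0, 7.0]

# Their outer product is a full 3x4 matrix with 12 entries.
M = [[ui * vj for vj in v] for ui in u]

# Every row is a scalar multiple of the first row, so only one row is independent (rank 1).
ratios = [[M[i][j] / M[0][j] for j in range(len(v))] for i in range(len(u))]
rows_are_multiples = all(len(set(row)) == 1 for row in ratios)

full_storage = len(u) * len(v)      # numbers needed for the full matrix
factored_storage = len(u) + len(v)  # numbers needed for the two factors
print(rows_are_multiples, full_storage, factored_storage)  # True 12 7
```

The gap between the two storage counts grows quickly with matrix size, which is what makes low-rank representations attractive for large weight matrices.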

In contrast to full fine-tuning, where all the weights are updated, LoRA keeps the original weight matrix frozen and trains only a pair of small low-rank matrices whose product approximates the weight update; these are called update matrices. Because far fewer values are trained, this is a significant improvement in memory use and efficiency over full fine-tuning.

With LoRA, the original parameters stay frozen and only the low-rank matrices (adapters) are updated. This reduces the overall GPU requirements for fine-tuning. At prediction time, the adapters act as add-ons whose output is combined with that of the original weights.
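The following is a minimal sketch of that idea in plain Python, not the implementation used by any particular library. The names `matvec` and `lora_forward` and the toy sizes are illustrative assumptions. The zero initialisation of `B` and the `alpha / r` scaling follow the convention described in the LoRA paper.

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(x, W, A, B, alpha, r):
    """Frozen path W x plus the scaled adapter path B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

d, r = 4, 2
W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # frozen weights (identity for simplicity)
A = [[0.1] * d for _ in range(r)]  # r x d, trainable
B = [[0.0] * r for _ in range(d)]  # d x r, trainable, starts at zero

x = [1.0, 2.0, 3.0, 4.0]
h0 = lora_forward(x, W, A, B, alpha=16, r=r)  # B is zero, so this equals W x

B[0][0] = 0.5  # stand-in for a training update to the adapter
h1 = lora_forward(x, W, A, B, alpha=16, r=r)  # only the adapter changed; W is untouched

# Parameter count for one 4096 x 4096 layer with rank 16.
full_params = 4096 * 4096
lora_params = 16 * (4096 + 4096)
print(h0, full_params, lora_params, full_params // lora_params)
```

Because `B` starts at zero, the adapted model initially behaves exactly like the base model, and for this layer size the adapter trains 128 times fewer values than the full matrix.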

QLoRA

LoRA was a great step up over full fine-tuning, yet fine-tuning models on consumer-grade machines was still expensive. This is where QLoRA shines.

QLoRA stands for Quantized LoRA. Quantization casts high-bit numbers to low-bit numbers to reduce the memory footprint. Original models usually store their weights in higher-precision formats (float16 or float32) to retain more information, but working with them requires large computing resources.
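As an illustration of what quantization does, here is a minimal sketch of simple absmax quantization to signed 4-bit integers. This is a deliberately simplified scheme, not the NF4 data type that QLoRA uses, and the function names and weight values are made up for the example.

```python
def quantize_absmax(weights, bits=4):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit
    scale = max(abs(w) for w in weights) / qmax
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    """Recover approximate floats from the integers and the scale."""
    return [qi * scale for qi in q]

weights = [0.7, -0.42, 0.1, -0.7]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)

print(q)         # [7, -4, 1, -7]
print(restored)  # close to the original values, but not identical
```

Each weight now needs 4 bits instead of 16 or 32, at the cost of a rounding error of at most half the scale per weight.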

To make the process efficient, QLoRA introduced three concepts: 4-bit NormalFloat (NF4), a data type suited to normally distributed weights; Double Quantization, which reduces the average memory footprint by quantizing the quantization constants themselves; and Paged Optimizers, which reduce memory spikes.
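A rough back-of-the-envelope calculation shows why these choices matter. The sketch below assumes a round figure of 7 billion parameters and uses the block sizes reported in the QLoRA paper (64 weights per block, 256 constants per second-level block); neither number comes from this article.

```python
params = 7_000_000_000  # assumed round figure for a "7B" model

def weights_gib(bits_per_param):
    """Memory for the weights alone, in GiB."""
    return params * bits_per_param / 8 / 2**30

fp16_gib = weights_gib(16)
nf4_gib = weights_gib(4)

# One 32-bit quantization constant per block of 64 weights.
overhead_single = 32 / 64

# Double quantization: 8-bit constants, plus one 32-bit constant per 256 of them.
overhead_double = 8 / 64 + 32 / (64 * 256)

print(f"16-bit weights: {fp16_gib:.1f} GiB, 4-bit weights: {nf4_gib:.1f} GiB")
print(f"constant overhead: {overhead_single:.3f} -> {overhead_double:.3f} bits per parameter")
```

These figures cover the weights only. Activations, adapter gradients, and optimizer state come on top, which is where paged optimizers help.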

Once the quantization is complete, LoRA is applied on top: the quantized base weights stay frozen, and only the low-rank update matrices are fine-tuned. Quantizing the model and then applying LoRA makes it feasible to fine-tune LLMs on consumer hardware.

Fine-tuning with Unsloth

Unsloth is an open-source platform for efficient fine-tuning of popular open-source LLMs like Llama-2, Mistral, and their derivatives. Unsloth implements optimized Triton kernels, manual autograd, and other optimizations to speed up training. It is reportedly almost twice as fast as the standard Hugging Face and Flash Attention implementations.

We will use Unsloth to fine-tune a Mistral-7b model on the Alpaca dataset over Colab’s free Tesla T4 GPU.

First, open a Colab notebook with a GPU runtime and install the following libraries.

import torch

!pip install "unsloth[colab] @ git+https://github.com/unslothai/unsloth.git"
!pip install "git+https://github.com/huggingface/transformers.git"

Now, we download the 4-bit Mistral 7b model to our runtime through Unsloth’s FastLanguageModel class.

from unsloth import FastLanguageModel
import torch

max_seq_length = 2048
dtype = None  # None for auto detection. Float16 for Tesla T4, V100; Bfloat16 for Ampere+
load_in_4bit = True  # Use 4-bit quantization to reduce memory usage. Can be False.

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/mistral-7b-bnb-4bit",  # "unsloth/mistral-7b" for 16-bit loading
    max_seq_length = max_seq_length,
    dtype = dtype,
    load_in_4bit = load_in_4bit,
    # token = "hf_...",  # use one if using gated models like meta-llama/Llama-2-7b-hf
)

To load the 16-bit model instead, set load_in_4bit to False and use the "unsloth/mistral-7b" checkpoint.

Now, add LoRA adapters, so that we only have to train a small fraction of the parameters (roughly 1-10%, or less at low ranks).
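As a rough sanity check on that fraction, the sketch below counts the adapter parameters for a rank of 16 on the seven projection layers targeted in this walkthrough. The layer dimensions are assumptions taken from the published Mistral-7B configuration (they are not stated in this article), and the total parameter count is approximate.

```python
# Assumed Mistral-7B dimensions (from the published model config, not from this article).
hidden_size = 4096
intermediate_size = 14336
num_layers = 32
kv_size = 1024  # 8 key/value heads x 128 dims (grouped-query attention)

r = 16  # LoRA rank

# (input features, output features) of each targeted linear layer.
target_shapes = {
    "q_proj": (hidden_size, hidden_size),
    "k_proj": (hidden_size, kv_size),
    "v_proj": (hidden_size, kv_size),
    "o_proj": (hidden_size, hidden_size),
    "gate_proj": (hidden_size, intermediate_size),
    "up_proj": (hidden_size, intermediate_size),
    "down_proj": (intermediate_size, hidden_size),
}

# Each adapter adds A (r x in) and B (out x r): r * (in + out) parameters.
per_layer = sum(r * (n_in + n_out) for n_in, n_out in target_shapes.values())
trainable = per_layer * num_layers

approx_total = 7.24e9  # approximate size of the base model
share = 100 * trainable / approx_total
print(f"{trainable:,} trainable parameters, about {share:.2f}% of the base model")
```

Under these assumptions the adapters come to roughly 42 million parameters, which is below one percent of the base model; higher ranks move the share up proportionally. The adapter configuration for this walkthrough follows.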

model = FastLanguageModel.get_peft_model(
    model,
    r = 16,  # Choose any number > 0! Suggested 8, 16, 32, 64, 128
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 16,
    lora_dropout = 0,  # Currently only supports dropout = 0
    bias = "none",  # Currently only supports bias = "none"
    use_gradient_checkpointing = True,
    random_state = 3407,
    max_seq_length = max_seq_length,
)

Data Preparation

We will use the Yahma version of the Alpaca 52k dataset, a cleaned version of the original Alpaca dataset from Stanford. Each record holds an instruction, an optional input that provides further context, and the expected output.
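To illustrate the record layout, here is a small sketch with two made-up records (they are not taken from the actual dataset) and a shortened, hypothetical template. It also shows that a batched mapping function receives a dictionary of columns rather than a list of rows.

```python
# Hypothetical records in the instruction / input / output layout.
records = [
    {"instruction": "Add the two numbers.", "input": "2, 3", "output": "5"},
    {"instruction": "Name a primary color.", "input": "", "output": "Red"},
]

# With batched=True, a mapping function sees one list per column.
batch = {key: [rec[key] for rec in records] for key in records[0]}

# Shortened stand-in for the full prompt template.
template = "### Instruction:\n{}\n\n### Input:\n{}\n\n### Response:\n{}"

def to_text(examples):
    """Turn a batch of columns into a single 'text' column."""
    rows = zip(examples["instruction"], examples["input"], examples["output"])
    return {"text": [template.format(*row) for row in rows]}

formatted = to_text(batch)
print(formatted["text"][0])
```

Records with an empty input simply leave the input section blank, as in the second example.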

Now, prepare the dataset for fine-tuning by loading it with the Hugging Face datasets library and formatting each record with a prompt template.

alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS_TOKEN = tokenizer.eos_token  # marks the end of each training example

def formatting_prompts_func(examples):
    instructions = examples["instruction"]
    inputs = examples["input"]
    outputs = examples["output"]
    texts = []
    for instruction, input_text, output in zip(instructions, inputs, outputs):
        # Without the EOS token, the model never learns where a response should stop.
        text = alpaca_prompt.format(instruction, input_text, output) + EOS_TOKEN
        texts.append(text)
    return {"text": texts}

from datasets import load_dataset

dataset = load_dataset("yahma/alpaca-cleaned", split = "train")
dataset = dataset.map(formatting_prompts_func, batched = True,)

Training the Model

So far, we have loaded a 4-bit quantized Mistral-7b model, created a LoRA configuration, and prepared our data for training. The next thing is to train the model. There are multiple ways to accomplish model training, such as SFT and DPO. Let’s briefly go through these concepts.

SFT

SFT stands for Supervised Fine-Tuning. As the name suggests, SFT uses a labeled dataset like the Alpaca dataset we just prepared, in which each example pairs an instruction with the expected answer. A model fine-tuned on it learns the patterns and nuances of the expected answers associated with the instructions.
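Under the hood, this kind of training typically minimises a next-token cross-entropy loss over the expected text. The sketch below illustrates that objective on a toy vocabulary of three tokens; the function names and logits are made up, and real trainers compute the same quantity on batched tensors.

```python
import math

def token_cross_entropy(logits, target_id):
    """Negative log-probability of the target token under softmax(logits)."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[target_id]

def sft_loss(per_step_logits, target_ids):
    """Average next-token loss over the positions of one sequence."""
    losses = [token_cross_entropy(l, t) for l, t in zip(per_step_logits, target_ids)]
    return sum(losses) / len(losses)

# Two positions to predict; the expected tokens are ids 2 and 0.
uninformed = sft_loss([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], [2, 0])
confident = sft_loss([[0.0, 0.0, 5.0], [5.0, 0.0, 0.0]], [2, 0])
print(uninformed, confident)
```

A model that spreads its probability evenly pays a loss of ln(3) per token here, while one that puts most of its probability on the expected tokens pays far less, and that gap is what drives the weight updates.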

DPO

DPO stands for Direct Preference Optimization. Each record in a DPO dataset consists of an instruction, an accepted answer, and a rejected answer.

DPO approaches the task as a classification problem. To accomplish this, it employs two models: the model being trained (the policy model) and a frozen reference model. During fine-tuning, the goal is to make the policy model assign higher probabilities to accepted responses than the reference model does. Conversely, we also want the policy model to assign lower probabilities to rejected answers than the reference model does.

We can efficiently align model behavior with our preference by rewarding the model for preferred responses and penalizing it for rejected responses.
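The following sketch illustrates this objective for a single preference pair. The record contents, the log-probability values, and the `beta` value of 0.1 are assumptions chosen for illustration; the loss follows the formula from the DPO paper.

```python
import math

# Illustrative preference record: one prompt, one accepted and one rejected answer.
record = {
    "prompt": "Explain what a GPU is in one sentence.",
    "chosen": "A GPU is a processor built for highly parallel computation.",
    "rejected": "I don't know.",
}

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss from sequence log-probabilities under the policy and reference models."""
    chosen_margin = policy_chosen - ref_chosen        # how much more the policy likes the accepted answer
    rejected_margin = policy_rejected - ref_rejected  # how much more the policy likes the rejected answer
    z = beta * (chosen_margin - rejected_margin)
    return math.log1p(math.exp(-z))  # equals -log(sigmoid(z))

# Policy identical to the reference: no preference learned yet.
start = dpo_loss(-10.0, -10.0, -10.0, -10.0)

# Policy has shifted probability toward the accepted answer and away from the rejected one.
improved = dpo_loss(-8.0, -12.0, -10.0, -10.0)
print(start, improved)
```

The loss starts at ln(2) when the policy and reference agree and falls as the policy favors the accepted answer more strongly than the reference does.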

Now, back to our model training. We will use the Supervised Fine Tuning (SFT) method to train the LoRA adapters on the Alpaca dataset. To accomplish this, we will use the SFTTrainer from the TRL library. For DPO, there is a DPOTrainer class.

from trl import SFTTrainer
from transformers import TrainingArguments

trainer = SFTTrainer(
    model = model,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = max_seq_length,
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        max_steps = 60,
        learning_rate = 2e-4,
        fp16 = not torch.cuda.is_bf16_supported(),
        bf16 = torch.cuda.is_bf16_supported(),
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        output_dir = "outputs",
    ),
)

Now, start the training.

trainer_stats = trainer.train()

This will take a while. Once the training is finished, we can use the fine-tuned model for inference.
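To put the training arguments into perspective, the sketch below works out the effective batch size and a simplified linear warmup and decay schedule from the values used above. It assumes a single GPU and is an approximation of how such a schedule behaves, not the library's exact code.

```python
per_device_train_batch_size = 2
gradient_accumulation_steps = 4
max_steps = 60
warmup_steps = 5
peak_lr = 2e-4

# Examples contributing to each optimizer step (single GPU assumed).
effective_batch = per_device_train_batch_size * gradient_accumulation_steps
examples_seen = effective_batch * max_steps

def linear_lr(step):
    """Linear warmup to peak_lr, then linear decay to zero at max_steps."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr * max(0.0, (max_steps - step) / (max_steps - warmup_steps))

print(effective_batch, examples_seen)
print([linear_lr(s) for s in (0, 5, 60)])
```

With these settings the run sees 480 examples, a small slice of the roughly 52k in the dataset, so it is best read as a quick demonstration. Raising max_steps or training for full epochs would expose the model to more of the data.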

Inference

Now, run the model with formatted inputs.

inputs = tokenizer(
    [
        alpaca_prompt.format(
            "Continue the Fibonacci sequence.",  # instruction
            "1, 1, 2, 3, 5, 8",  # input
            "",  # output - leave this blank for generation!
        )
    ] * 1, return_tensors = "pt").to("cuda")

outputs = model.generate(**inputs, max_new_tokens = 128, use_cache = True)
tokenizer.batch_decode(outputs)

This returns a list containing one string, which holds the full prompt (instruction and input) followed by the model’s response.

We can save the LoRA adapters to the local directory with the following code.

model.save_pretrained("mistral_lora_model")

If you wish to push the model to the Hugging Face Hub, create a Hugging Face account and run the code below.

model.push_to_hub("your_name/mistral_lora_model")

We can also load the saved LoRA adapters and use them for inference.

from peft import PeftModel

# In a fresh session, load the base model first (as shown earlier) and pass it here as `model`.
model = PeftModel.from_pretrained(model, "mistral_lora_model")

Define a function to format and print the model output.

from typing import List

def get_response(query: str, input_text: str = "") -> List[str]:
    # Build the prompt with an empty response slot and generate a completion.
    inputs = tokenizer(
        [
            alpaca_prompt.format(
                query,  # instruction
                input_text,  # input
                "",  # output
            )
        ] * 1, return_tensors = "pt").to("cuda")

    outputs = model.generate(**inputs, max_new_tokens = 1024, use_cache = True)
    return tokenizer.batch_decode(outputs)

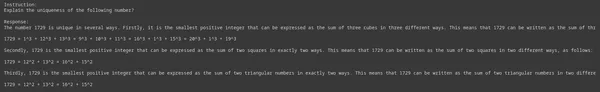

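query = "State 3 unique aspects of the following number?"
input_text = "1729"
resp = get_response(query, input_text)

def format_msg(message):
    # Splitting on "### " yields: preamble, Instruction, Input, Response.
    split_msg = message.split("### ")
    # Keep the instruction and the response sections.
    final_str = split_msg[1] + split_msg[3]
    return final_str

print(format_msg(resp[0]))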
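If only the model's answer is needed, a slightly more defensive variant is sketched below. The helper name `extract_response` and the sample string are hypothetical, and the special tokens `<s>` and `</s>` are assumed to be the ones the tokenizer adds when decoding.

```python
def extract_response(decoded, marker="### Response:"):
    """Return the text after the response marker, without special tokens."""
    _, found, tail = decoded.partition(marker)
    if not found:
        # Marker missing: fall back to the whole string.
        return decoded.strip()
    for token in ("<s>", "</s>"):
        tail = tail.replace(token, "")
    return tail.strip()

# Made-up decoded output in the prompt layout used in this article.
sample = (
    "<s> Below is an instruction that describes a task.\n\n"
    "### Instruction:\nSay hi.\n\n### Input:\n\n\n### Response:\nHi there!</s>"
)
print(extract_response(sample))  # Hi there!
```

Unlike indexing into the split sections, this does not raise an error when a section is missing.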

You can play with the prompts and see how the model performs.

Conclusion

LoRA and QLoRA have made fine-tuning LLMs on consumer hardware a reality without sacrificing much of the original performance of the models, a real step toward democratizing Large Language Models. With the help of tools like Unsloth, transformers, and trl, it is possible to fine-tune LLMs on custom datasets using consumer GPUs. This article showed how to fine-tune an LLM on Colab’s T4 GPU.

Key Takeaways

- Fine-tuning is an effective way to make a model follow specific instructions. It lets models learn patterns from relatively small datasets.

- Full fine-tuning updates every weight, but its training cost can be prohibitive for custom use cases.

- LoRA simplifies this by only needing us to train low-rank update matrices instead of full-weight matrices.

- While LoRA is a step up, QLoRA makes fine-tuning even more cost-effective by quantizing the model before applying LoRA.

- Unsloth is an open-source platform that provides tools to speed up fine-tuning LLMs.

Frequently Asked Questions

A. Mistral-7b is an open-weight Large Language Model from Mistral AI, released under the Apache 2.0 license, with excellent potential for fine-tuning on custom datasets.

A. It is possible to fine-tune smaller LLMs for free on Colab over the Tesla T4 GPU with QLoRA.

A. Fine-tuning can greatly enhance an LLM’s capability to perform downstream tasks, like role play, code generation, etc.

A. LoRA is a fine-tuning method in which only small low-rank update matrices are trained while the original weights stay frozen, which reduces the overall memory footprint. In QLoRA, the model is additionally quantized before LoRA is applied, which makes fine-tuning even less GPU-intensive.

A. Fine-tuning has many advantages but can also skew the model behavior by introducing biases. The fine-tuning data must be thoroughly examined before training the model on it.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
