“AI Agentic workflow will drive massive progress this year,” commented Andrew Ng, highlighting the significant advancements anticipated in AI. With the growing popularity of large language models, Autonomous Agents are becoming a major topic of discussion. In this article, we will explore Autonomous Agents, cover the components needed to build an agentic workflow, and walk through a practical example: a content creation agent built with Groq and crewAI.

Learning Objectives

- Understand how Autonomous Agents work through a simple example of how humans execute a given task.

- Understand the limitations and research areas of autonomous agents.

- Explore the core components required to build an AI agent pipeline.

- Build a content creator agent using crewAI, an Agentic open-source framework.

- Integrate an open-source large language model within the agentic framework with the help of LangChain and Groq.

What is Agentic Workflow?

Agentic workflow is a new way of using AI, specifically large language models (LLMs), to tackle complex tasks. It differs from the traditional approach, where you give an LLM a single prompt and get a single response.

Here’s the gist of agentic workflows:

- Multiple AI agents: Instead of a single LLM, agentic workflows use several AI agents, each with specific roles and functions. Think of them as a team working together.

- Iterative process: The task is broken down into smaller, more manageable steps. The AI agents tackle each step, and you can provide feedback along the way. This lets the agents learn and improve as they go.

- Collaboration: The agents can work together, sharing information and completing subtasks to achieve the final goal.

Understanding Autonomous Agents

Imagine a group of engineers gathering to plan the development of a new software app. Each engineer brings expertise and insights, discussing various features, functionalities, and potential challenges. They brainstorm, analyze, and strategize together, aiming to create a comprehensive plan that meets the project’s goals.

Attempts to replicate this kind of collaboration with large language models eventually gave rise to Autonomous Agents.

Autonomous agents are advanced AI systems with reasoning and planning capabilities that resemble human intelligence. They are essentially LLMs with a “brain” that can self-reason and plan how to decompose a task. A prime example of such an agent is “Devin AI,” which has sparked numerous discussions about its potential to replace human software engineers.

Although this replacement might be premature due to the complexity and iterative nature of software development, the ongoing research in this field aims to address key areas like self-reasoning and memory utilization.

Key Areas of Research on Improvement for Agents

- Self-Reasoning: Enhancing the ability of LLMs to reduce hallucinations and provide accurate responses.

- Memory Utilization: Storing past responses and experiences to avoid repeating mistakes and improve task execution over time.

- Prompt Techniques for Agents: Currently, most frameworks use ReAct (Reasoning and Acting) prompting to execute agentic workflows. Alternatives include CoT-SC (Chain-of-Thought with Self-Consistency), self-reflection, and LATS (Language Agent Tree Search). This is an ongoing research area in which prompting techniques are benchmarked on open-source datasets.
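To make the self-consistency idea a little more concrete, here is a minimal sketch: ask the same question several times and keep the most common final answer. The `sample_answer` callable is a hypothetical stand-in for an LLM call with a non-zero temperature. It is stubbed with canned answers here so that the example runs offline, and it is not tied to any particular framework.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def self_consistent_answer(sample_answer: Callable[[str], str],
                           question: str,
                           n_samples: int = 5) -> str:
    """Ask the same question n_samples times and return the majority answer."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    # most_common(1) returns a one-element list: [(answer, count)].
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stub for a sampled LLM: it cycles through canned final
# answers, two of which are wrong.
_canned = cycle(["42", "41", "42", "42", "40"])


def fake_llm(question: str) -> str:
    return next(_canned)


best = self_consistent_answer(fake_llm, "What is 6 * 7?")
print(best)
```

The majority vote filters out the occasional wrong reasoning path, at the cost of several LLM calls per question.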

Before exploring the components required for building an agentic workflow, let’s first understand how humans execute a simple task.

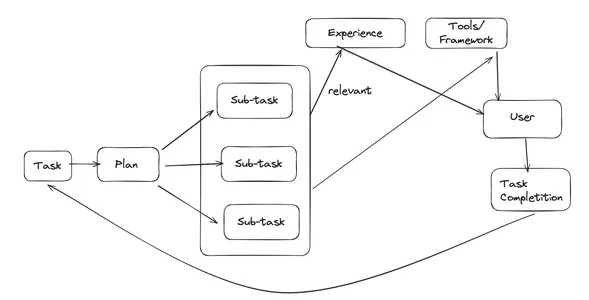

Simple Task Execution Workflow

How do we, as humans, approach a problem statement? We follow a structured process to ensure efficient and successful execution. Take, for example, building a customer service chatbot. We don’t dive straight into coding. Instead, we plan by breaking the task down into smaller, manageable sub-tasks such as fetching data, cleaning data, building the model, and so on.

For each sub-task, we draw on relevant experience and our knowledge of the right tools and frameworks. Each sub-task requires careful planning and execution, and we apply what we learned earlier so that we don’t repeat mistakes.

This method involves multiple iterations until the task is completed successfully. Agents’ workflow operates similarly. Let’s break it down step by step to see how it mirrors our approach.
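The plan-then-iterate process described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_with_retries` and `fake_execute` are hypothetical names, and the executor is a stub in which one sub-task fails on its first attempt.

```python
from typing import Callable, Dict, List


def run_with_retries(subtasks: List[str],
                     execute: Callable[[str, int], bool],
                     max_attempts: int = 3) -> Dict[str, int]:
    """Run sub-tasks in order, retrying each one until it succeeds.

    Returns how many attempts each sub-task needed and raises
    RuntimeError if a sub-task still fails after max_attempts.
    """
    attempts_used: Dict[str, int] = {}
    for subtask in subtasks:
        for attempt in range(1, max_attempts + 1):
            if execute(subtask, attempt):
                attempts_used[subtask] = attempt
                break
        else:
            # The inner loop ended without a successful attempt.
            raise RuntimeError(f"{subtask!r} failed after {max_attempts} attempts")
    return attempts_used


# Hypothetical executor: "clean data" only succeeds on the second attempt.
def fake_execute(subtask: str, attempt: int) -> bool:
    return not (subtask == "clean data" and attempt == 1)


plan = ["fetch data", "clean data", "build model"]
attempts = run_with_retries(plan, fake_execute)
print(attempts)
```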

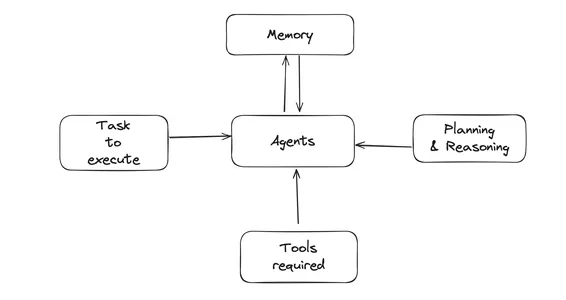

AI Agents Component Workflow

At the heart of the workflow are the Agents. Users provide a detailed description of the task. Once the task is outlined, the Agent uses its planning and reasoning components to break the task down further. This involves prompting a large language model with a technique such as ReAct. In this prompt engineering approach, each step has three parts: Thought, Action, and Observation. The Thought reasons about what needs to be done, the Action calls one of the supported tools, and the Observation is the tool’s result, which gives the LLM the additional context it needs for the next step.

- Task Description: The task is described and passed to the agent.

- Planning and Reasoning: The agent uses various prompting techniques (e.g., ReAct prompting, Chain of Thought, Self-Consistency, Self-Reflection, Language Agent Tree Search) to plan and reason.

- Tools required: The agent uses tools like web APIs or GitHub APIs to complete subtasks.

- Memory: Storing responses, i.e., Task+Each subtask result in memory for future reference.
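The four components above can be tied together in a small Thought, Action, Observation loop. The sketch below is not how crewAI or any other framework implements ReAct. The `react_loop` function, the scripted policy that stands in for the LLM, and the canned `lookup` tool are all hypothetical, chosen so that the example runs without an API key.

```python
from typing import Callable, Dict, List, Tuple

# A step is either ("act", tool_name, tool_input) or ("finish", answer, "").
Step = Tuple[str, str, str]


def react_loop(task: str,
               policy: Callable[[str, List[str]], Tuple[str, Step]],
               tools: Dict[str, Callable[[str], str]],
               max_iter: int = 5) -> Tuple[str, List[str]]:
    """Repeat Thought -> Action -> Observation until the policy finishes."""
    memory: List[str] = []  # running trace that is fed back to the policy
    for _ in range(max_iter):
        thought, (kind, name, arg) = policy(task, memory)
        memory.append(f"Thought: {thought}")
        if kind == "finish":
            return name, memory  # for a "finish" step, name holds the answer
        memory.append(f"Action: {name}[{arg}]")
        observation = tools[name](arg)
        memory.append(f"Observation: {observation}")
    return "No answer within max_iter", memory


# Hypothetical scripted policy standing in for the LLM.
def scripted_policy(task: str, memory: List[str]) -> Tuple[str, Step]:
    if not any(line.startswith("Observation:") for line in memory):
        return "I should look this up.", ("act", "lookup", "capital of France")
    return "The observation answers the task.", ("finish", "Paris", "")


# Hypothetical tool: a canned lookup instead of a real web search.
facts = {"capital of France": "Paris"}
tools = {"lookup": lambda query: facts.get(query, "unknown")}

answer, trace = react_loop("What is the capital of France?", scripted_policy, tools)
print(answer)
print("\n".join(trace))
```

The `memory` list plays the role of the Memory component: each thought, action, and observation is stored and handed back to the policy on the next iteration.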

It’s time to get our hands dirty with some code.

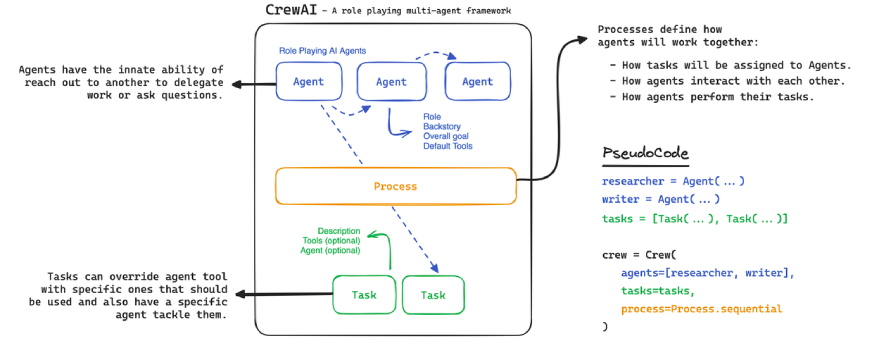

Content Creator Agent using Groq and CrewAI

Let us look into the steps to build an Agentic Workflow using CrewAI and the Groq model.

Step 1: Installation

- crewai: Open Source Agents framework.

- ‘crewai[tools]’: Supported tools integration to get contextual data as Actions.

- langchain_groq: Much of the crewAI backend depends on LangChain, so we use langchain_groq directly to run inference with the LLM.

pip install crewai

pip install 'crewai[tools]'

pip install langchain_groq

Step 2: Set up the API keys

To connect the search tool and the large language model, read your API keys with the getpass module, which keeps them from being echoed to the screen, and store them as environment variables. The SERPER_API_KEY can be obtained from serper.dev, and the GROQ_API_KEY from console.groq.com.
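One optional refinement, sketched here under assumptions: check the environment first, so that the prompt only appears when a key is missing. `require_key` is a hypothetical helper, not part of crewAI or LangChain, and `DEMO_API_KEY` is a placeholder name used so that the example never prompts.

```python
import os
from getpass import getpass


def require_key(name: str) -> str:
    """Return the key from the environment, prompting only if it is missing."""
    value = os.environ.get(name)
    if not value:
        # getpass hides the typed characters instead of echoing them.
        value = getpass(f"Enter {name}: ")
        os.environ[name] = value
    return value


# Demo with a placeholder variable that is already set, so no prompt appears.
os.environ["DEMO_API_KEY"] = "not-a-real-key"
demo_key = require_key("DEMO_API_KEY")
print("key loaded:", bool(demo_key))
```

In a notebook you would call `require_key("SERPER_API_KEY")` and `require_key("GROQ_API_KEY")` instead of the demo variable.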

import os

from getpass import getpass

from crewai import Agent,Task,Crew,Process

from crewai_tools import SerperDevTool

from langchain_groq import ChatGroq

SERPER_API_KEY = getpass("Your serper api key")

os.environ['SERPER_API_KEY'] = SERPER_API_KEY

GROQ_API_KEY = getpass("Your Groq api key")

os.environ['GROQ_API_KEY'] = GROQ_API_KEY

Step 3: Integrate an Open Source Model with Groq

Groq is a hardware and software platform building the LPU AI Inference Engine, known for being one of the fastest LLM inference engines in the world. With Groq, users can efficiently perform inference on open-source LLMs such as Gemma, Mistral, and Llama with low latency and high throughput. To integrate Groq into crewAI, you can seamlessly import it via Langchain.

llm = ChatGroq(

model="groq/llama-3.1-8b-instant",

temperature=0,

max_tokens=None,

timeout=None,

max_retries=2,

# other params...

)

Step 4: Search Tool

SerperDevTool is a search API that browses the internet to return metadata, relevant query result URLs, and brief snippets as descriptions. This information aids agents in executing tasks more effectively by providing them with contextual data from web searches.

search_tool = SerperDevTool()

Step 5: Agent

Agents are the core component of the entire code implementation in crewAI. An agent is responsible for performing tasks, making decisions, and communicating with other agents to complete decomposed tasks.

To achieve better results, it is essential to appropriately prompt the agent’s attributes. Each agent definition in crewAI includes role, goal, backstory, tools, and LLM. These attributes give agents their identity:

- Role: Determines the kind of tasks the agent is best suited for.

- Goal: Defines the objective that improves the agent’s decision-making process.

- Backstory: Provides context to the agent on its capabilities based on its learning.

One of the major advantages of crewAI is its multi-agent functionality, which allows one agent to delegate work to another agent. To enable delegation, set the allow_delegation parameter to True.

Agents iterate until they arrive at a final answer. You can cap the number of iterations with the max_iter parameter, for example at 10 or a value close to it; the code below uses 5.

Note:

- By default, crewAI uses OpenAI as the LLM. To change this, define Groq as the LLM and pass it to each agent through the llm parameter.

- {subject_area}: This is an input variable, meaning the value declared inside the curly brackets needs to be provided by the user.
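The placeholder behaves much like Python’s own string formatting. The sketch below is not crewAI’s internal code. It only uses `str.format` to illustrate how a user-supplied value replaces `{subject_area}`, and the `placeholders` helper is a hypothetical convenience for listing which inputs a template expects.

```python
from string import Formatter
from typing import List


def placeholders(template: str) -> List[str]:
    """List the named fields that appear in curly brackets in a template."""
    return [field for _, field, _, _ in Formatter().parse(template) if field]


goal_template = "Pioneer revolutionary advancements in {subject_area}"
inputs = {"subject_area": "Indian Elections 2024"}

needed = placeholders(goal_template)
missing = [name for name in needed if name not in inputs]
if missing:
    raise KeyError(f"Missing inputs: {missing}")

goal = goal_template.format(**inputs)
print(needed)
print(goal)
```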

Python Code Implementation

researcher = Agent(

role = "Researcher",

goal='Pioneer revolutionary advancements in {subject_area}',

backstory=(

# Adjacent string literals are joined as-is, so each piece ends with a space.

"As a visionary researcher, your insatiable curiosity drives you "

"to delve deep into emerging fields. With a passion for innovation "

"and a dedication to scientific discovery, you seek to "

"develop technologies and solutions that could transform the future."),

llm = llm,

max_iter = 5,

tools = [search_tool],

allow_delegation=True,

verbose=True

)

writer = Agent(

role = "Writer",

goal='Craft engaging and insightful narratives about {subject_area}',

verbose=True,

backstory=(

# Trailing spaces keep the joined sentences from running together.

"You are a skilled storyteller with a talent for demystifying "

"complex innovations. Your writing illuminates the significance "

"of new technological discoveries, connecting them with everyday "

"lives and broader societal impacts."

),

tools = [search_tool],

llm = llm,

max_iter = 5,

allow_delegation=False

)

Step 6: Task

Agents can only execute tasks when provided by the user. Tasks are specific requirements completed by agents, which provide all necessary details for execution. For the Agent to decompose and plan the subtask, the user needs to define a clear description and expected outcome. Each task requires linking with the responsible agents and the necessary tools.

Further, crewAI can execute tasks asynchronously through the async_execution parameter. Since our tasks run in sequential order, we set it to False.
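To see why execution order matters here, consider a small asyncio sketch. It is not crewAI code and says nothing about how crewAI schedules tasks internally. `run_task` and its delays are hypothetical stand-ins for a slow research step and a faster writing step.

```python
import asyncio
from typing import List


async def run_task(name: str, delay: float, log: List[str]) -> None:
    """Pretend to work for `delay` seconds, then record completion."""
    await asyncio.sleep(delay)
    log.append(name)


async def sequential(log: List[str]) -> None:
    # Each task starts only after the previous one has finished.
    await run_task("research", 0.05, log)
    await run_task("write", 0.01, log)


async def concurrent(log: List[str]) -> None:
    # Both tasks start together, so the faster one finishes first.
    await asyncio.gather(
        run_task("research", 0.05, log),
        run_task("write", 0.01, log),
    )


seq_log: List[str] = []
con_log: List[str] = []
asyncio.run(sequential(seq_log))
asyncio.run(concurrent(con_log))
print("sequential:", seq_log)
print("concurrent:", con_log)
```

When the writing step depends on the research result, the sequential order is the one we want, which is why asynchronous execution is switched off in this walkthrough.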

research_task = Task(

description = (

"Explore and identify the major development within {subject_area}. "

"Detailed SEO report of the development in a comprehensive narrative."

),

expected_output='A report, structured into three detailed paragraphs',

tools = [search_tool],

agent = researcher

)

write_task = Task(

description=(

"Craft an engaging article on recent advancements within {subject_area}. "

"The article should be clear, captivating, and optimistic, tailored for a broad audience."

),

expected_output='A blog article on recent advancements in {subject_area} in markdown.',

tools = [search_tool],

agent = writer,

async_execution = False,

output_file = "blog.md"

)

Step 7: Run and Execute the Agent

To execute a multi-agent setup in crewAI, you need to define the Crew. A Crew is a collection of Agents working together to accomplish a set of tasks. Each Crew establishes the strategy for task execution, agent cooperation, and the overall workflow. In our case, the workflow is sequential: the tasks run in the order listed, and the researcher’s output feeds the writer’s task.

Finally, run the Crew by calling the kickoff function with the user input.
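Conceptually, a sequential process is a pipeline in which each step receives the previous step’s output as context. The plain-Python sketch below illustrates only that idea. `run_sequential`, `research`, and `write` are hypothetical stand-ins and do not call crewAI or an LLM.

```python
from typing import Callable, Dict, List, Tuple

# Each step takes (inputs, context) and returns its output as a string.
StepFn = Callable[[Dict[str, str], str], str]


def run_sequential(steps: List[Tuple[str, StepFn]], inputs: Dict[str, str]) -> str:
    """Run steps in order; each step receives the previous step's output."""
    context = ""
    for name, step in steps:
        context = step(inputs, context)
        print(f"[{name}] {context}")
    return context


# Hypothetical stand-in for the researcher: it ignores the empty context.
def research(inputs: Dict[str, str], context: str) -> str:
    return f"notes on {inputs['subject_area']}"


# Hypothetical stand-in for the writer: it builds on the research output.
def write(inputs: Dict[str, str], context: str) -> str:
    return f"article based on {context}"


final = run_sequential(
    [("research", research), ("write", write)],
    {"subject_area": "solar power"},
)
```

The return value of the last step is the pipeline’s result, in the same way that the Crew’s result in this walkthrough comes from the final task.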

crew = Crew(

agents = [researcher,writer],

tasks = [research_task,write_task],

process = Process.sequential,

max_rpm = 3,

cache=True

)

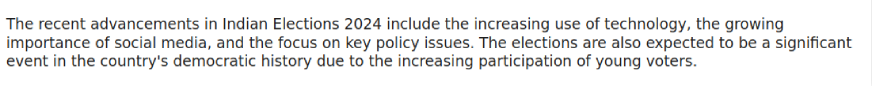

result = crew.kickoff(inputs={'subject_area':"Indian Elections 2024"})

print(result)

Output:

Conclusion

The field of Agents remains research-focused, with development gaining momentum through the release of multiple open-source agent frameworks. This article is mainly a beginner’s guide for those interested in building agents without relying on closed-source large language models. Furthermore, this article summarizes the importance and necessity of prompt engineering to maximize the potential of both large language models and agents.

We hope you liked the article and came away with a good understanding of agentic workflows.

Key Takeaways

- Understanding the necessity of an Agentic workflow in today’s landscape of large language models.

- One can easily understand the Agentic workflow by comparing it with a simple human task execution workflow.

- While models like GPT-4 or Gemini are prominent, they’re not the sole options for building agents. Open Source models, supported by the Groq API, enable the creation of faster inference agents.

- CrewAI provides a wide range of multi-agent functionality and workflows that help with efficient task decomposition.

- Agents Prompt Engineering stands out as a crucial factor for enhancing task decomposition and planning.

Frequently Asked Questions

A. crewAI integrates with LangChain, a robust framework that links large language models (LLMs) to custom data sources. With over 50 LLM integrations, LangChain is one of the largest tools for integrating LLMs. Therefore, any LangChain-supported LLM can be used as a custom LLM with crewAI agents.

A. crewAI is an open-source agents framework that supports multi-agent functionalities. Similar to crewAI, there are various powerful open-source agent frameworks available, such as AutoGen, OpenAGI, SuperAGI, AgentLite, and more.

A. Yes, crewAI is open source and free to use. One can easily build an agentic workflow in just around 15 lines of code.