Introduction

Evaluating models and medical tests matters in both data science and medicine. The two fields use different metrics, however, which causes confusion: data scientists typically report precision and recall, while medical professionals report specificity and sensitivity. Some of these metrics are the same quantity under different names, and others measure different things. Understanding where they overlap and where they differ is necessary to evaluate models accurately and to communicate effectively between data scientists and medical professionals.

Overview

- The blog contrasts data science metrics (precision, recall) with medical metrics (specificity, sensitivity) for model evaluation.

- Precision measures the accuracy of positive predictions, while recall (sensitivity) assesses the detection of all actual positives.

- Specificity measures how many actual negatives are correctly identified, which is crucial for recognizing true negatives in medical tests.

- Practical examples illustrate the implications of different metric combinations in medical screenings and disease detection.

- Balancing precision and recall using the F1 score is recommended for comprehensive model performance evaluation.

Data Science Metrics

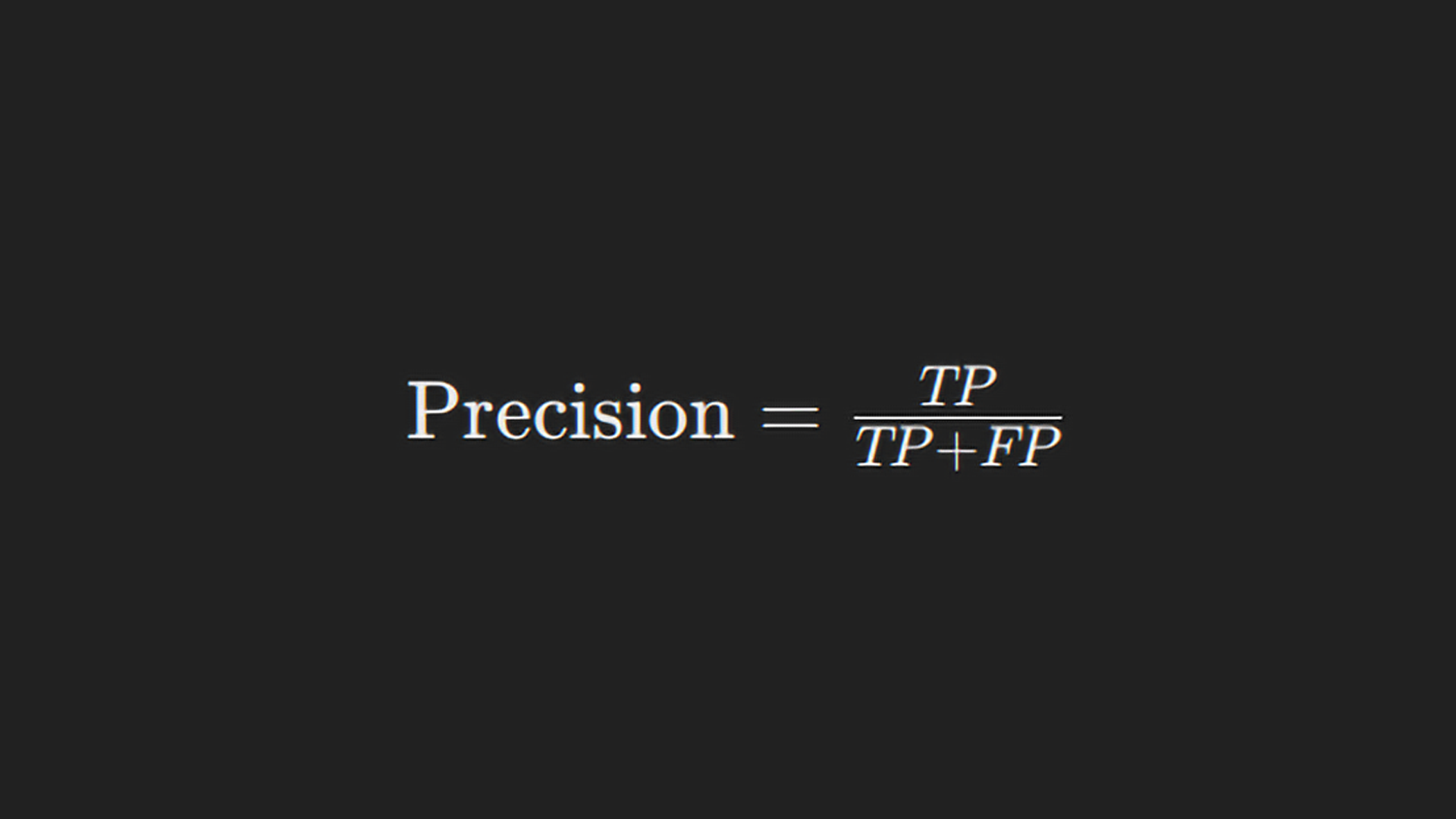

Precision is the fraction of true positives among all examples that a model predicts as positive. It answers the question: “Out of all the examples predicted as positive, how many are actually positive?”

In an HIV/AIDS test, for example, precision measures how many of the cases classified as positive actually have the condition.

Likewise, in a spam detection system, precision is the proportion of emails marked as spam that really are spam.
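As a minimal sketch of the definition above, precision can be computed from two counts: true positives and false positives. The function name and the spam-filter numbers are hypothetical and chosen only for illustration; returning 0.0 when nothing is predicted positive is a convention assumed here, since the ratio is undefined in that case.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are actually positive."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # Undefined when the model predicts no positives; 0.0 is an assumed convention.
        return 0.0
    return tp / predicted_positive


# Hypothetical spam filter: 50 emails flagged as spam, 40 of them really are spam.
spam_precision = precision(tp=40, fp=10)
print(f"precision = {spam_precision:.2f}")
```

With these made-up counts, 40 of the 50 flagged emails are spam, so precision is 0.80.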

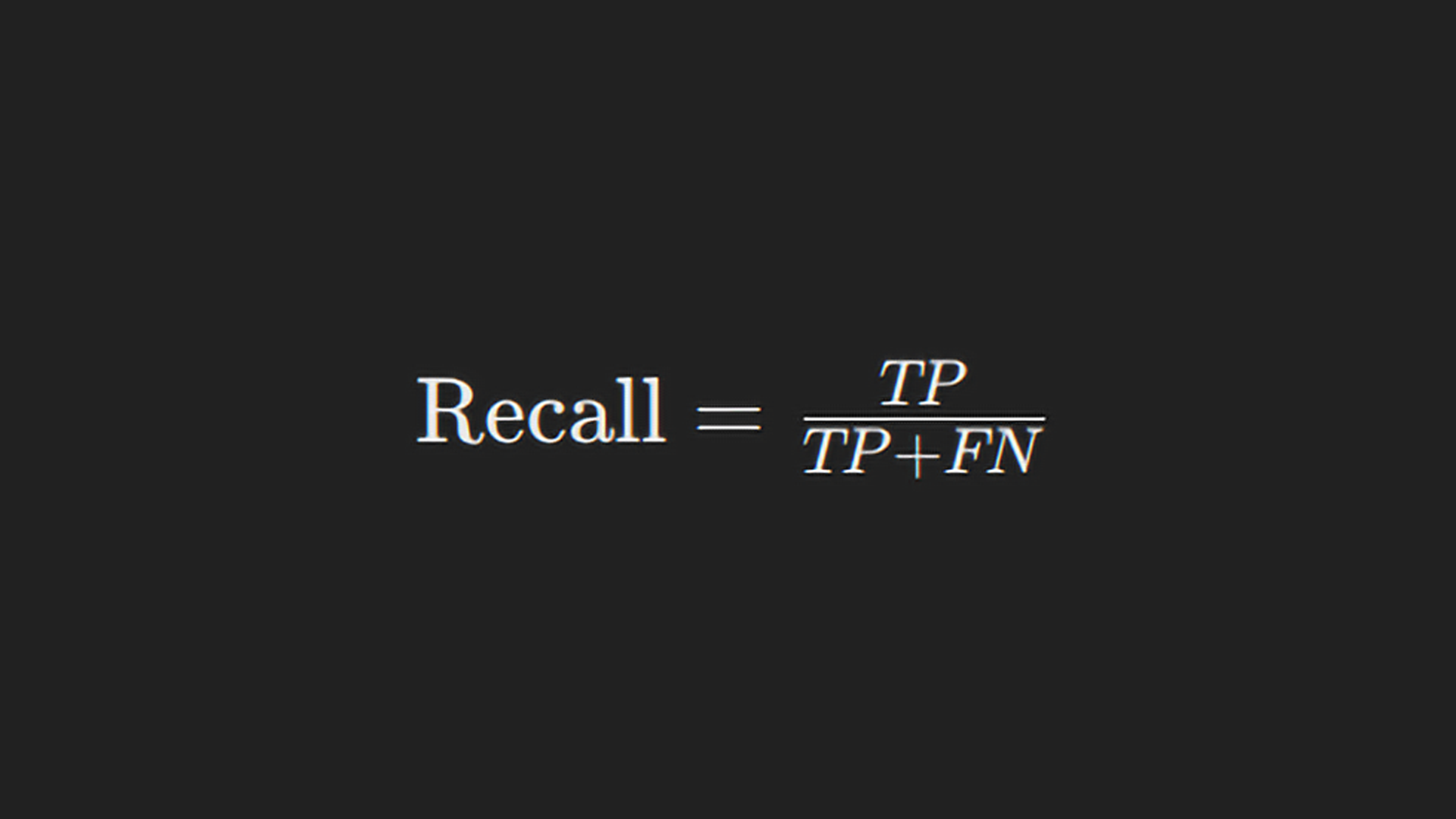

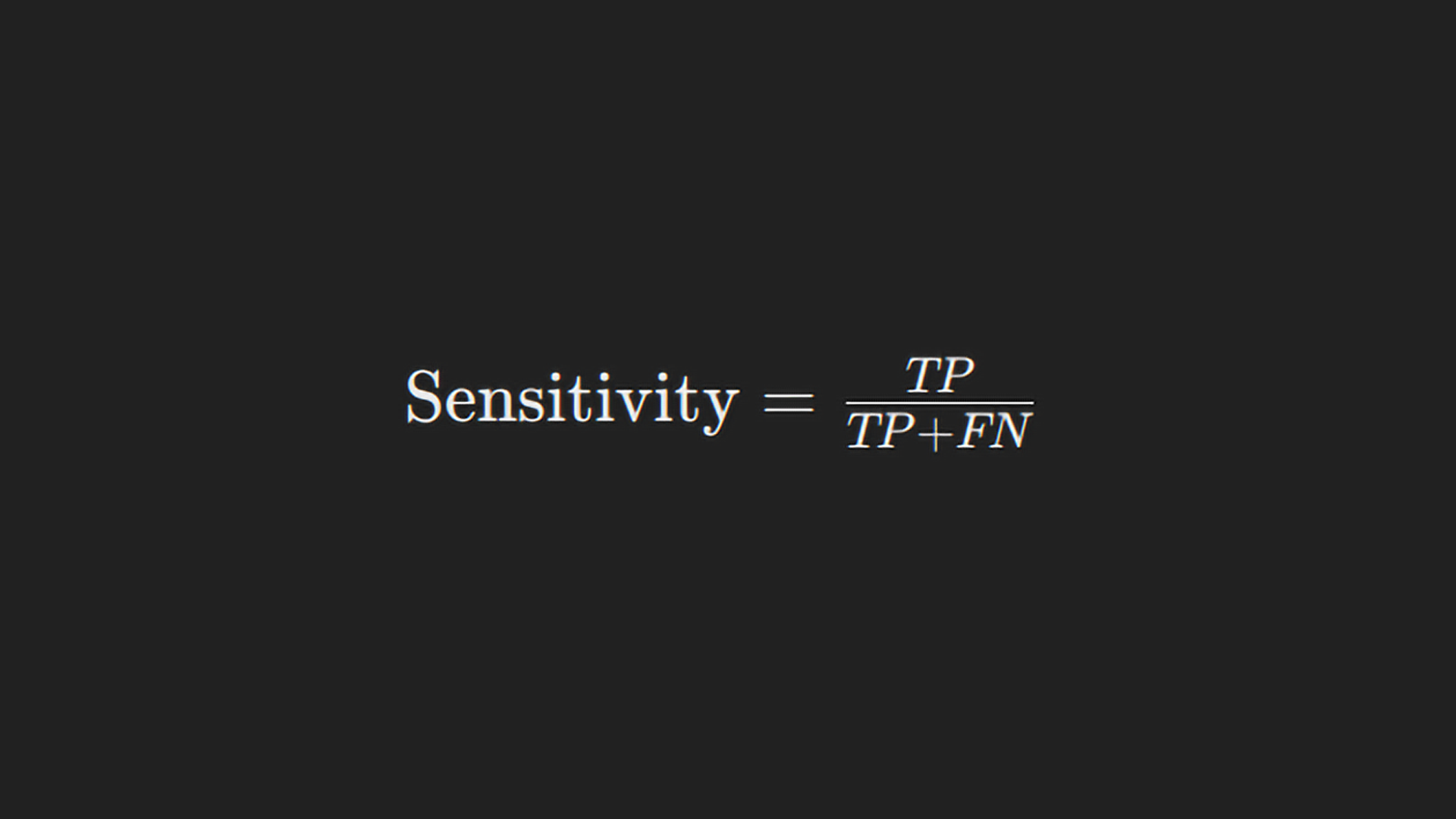

Recall, known as sensitivity in medicine, is the fraction of actual positive cases that the model correctly identifies: true positives divided by the sum of true positives and false negatives. It answers the question: “Of all actual positive cases, how many were correctly predicted as positive?”

Recall refers to the model’s ability to find all relevant instances. For example, in the case of a medical test for a disease, recall tells us how many actual positive cases (patients with the disease) were correctly identified by the test.
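The same idea can be sketched directly from labels and predictions. The helper below and the eight-patient dataset are hypothetical; labels use 1 for "has the disease" and 0 for "healthy", which is an assumption of this example.

```python
def recall(y_true, y_pred):
    """Fraction of actual positives (label 1) that were predicted positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    actual_positive = tp + fn
    # Undefined when there are no actual positives; 0.0 is an assumed convention.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical test results: four patients have the disease, four do not.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

test_recall = recall(y_true, y_pred)
print(f"recall = {test_recall:.2f}")
```

Here the test catches three of the four diseased patients, so recall is 0.75. The one false positive among the healthy patients does not affect recall at all.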

Medical Metrics

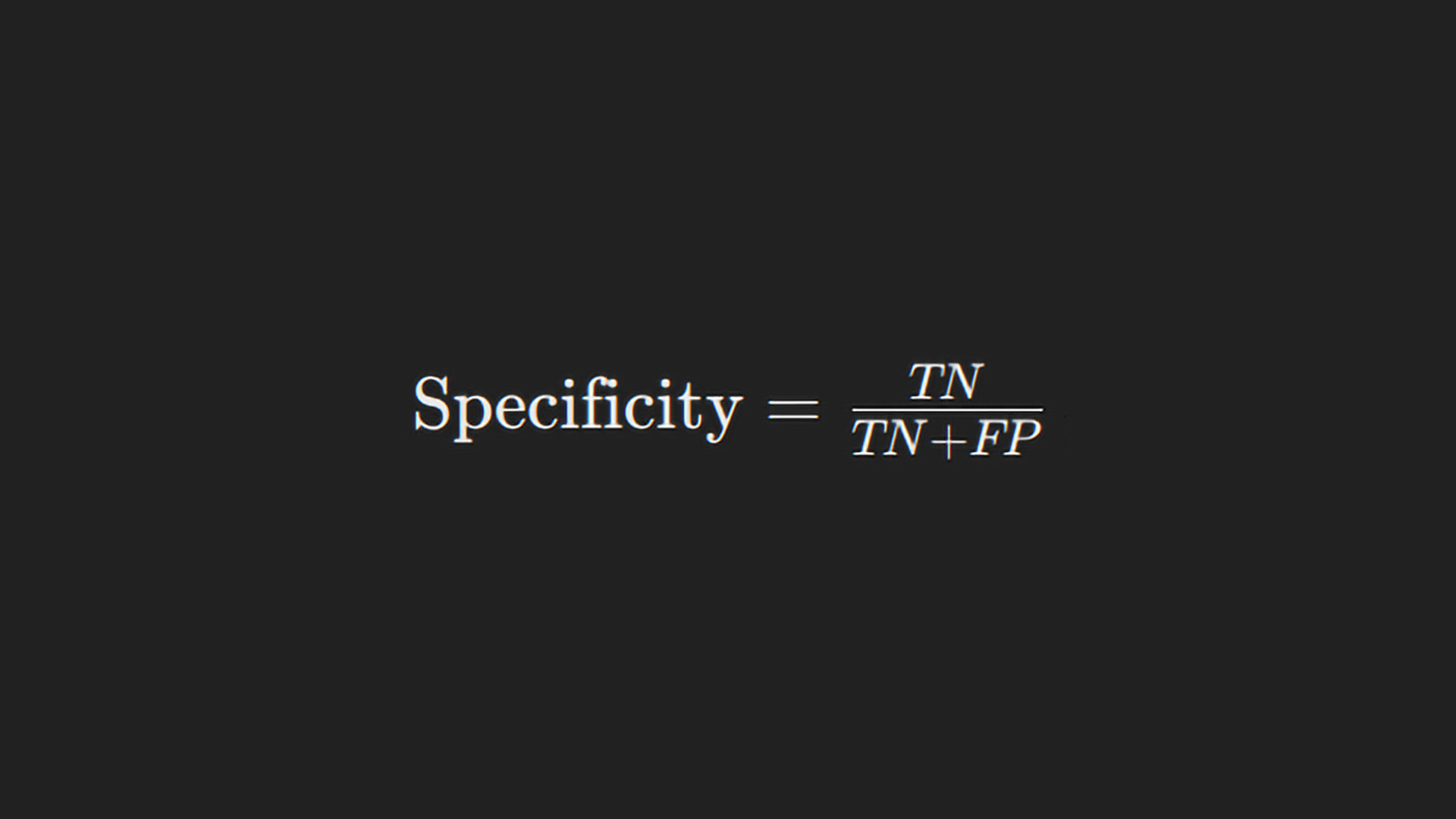

Specificity is the fraction of actual negatives that are correctly identified as negative: true negatives divided by the sum of true negatives and false positives. It answers the question: “Of all the people who do not have the condition, how many were correctly identified as negative?”

Specificity measures how well a test recognizes negatives. In medical screening, it shows what proportion of healthy individuals, who do not have the disease, are correctly identified as negative.

Sensitivity (recall in data science) measures the proportion of actual positive cases that are correctly identified as positive. It answers the same question as recall.
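A short sketch can make the two medical metrics concrete. The function name and the screening counts below are hypothetical; the zero-denominator handling is an assumed convention rather than part of either definition.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    # Sensitivity looks only at people who have the condition.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    # Specificity looks only at people who do not have the condition.
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return sensitivity, specificity


# Hypothetical screening: 100 diseased patients (90 detected),
# 900 healthy patients (855 correctly cleared).
sens, spec = sensitivity_specificity(tp=90, fn=10, tn=855, fp=45)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Note that each metric is computed within one group only: sensitivity never looks at the healthy patients, and specificity never looks at the diseased ones.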

Comparing Metrics

Precision Compared to Specificity

Precision and specificity cover different elements of model performance. Precision focuses on the positive predictions, asking how many of the predicted positives are actually positive. Specificity focuses on the actual negatives, asking how many of them the model correctly identifies as negative.

For example, in a medical test for a rare disease, high precision means that most patients flagged as positive actually have the disease, while high specificity means that most healthy patients are correctly classified as not having it. The two can diverge: when the disease is rare, even a small false positive rate among the many healthy patients can produce more false positives than true positives, so a highly specific test may still have low precision.
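To see how precision and specificity can pull apart for a rare disease, here is a small sketch with invented numbers: a population of 10,000 with 1% prevalence, and a test that detects 90% of diseased patients and clears 95% of healthy ones. All counts are assumptions made for illustration.

```python
# Hypothetical population: 10,000 people, 1% have the disease.
diseased = 100
healthy = 9_900

# Assumed test behaviour: 90 of 100 diseased detected, 9,405 of 9,900 healthy cleared.
tp, fn = 90, 10
tn, fp = 9_405, 495

specificity = tn / (tn + fp)   # share of healthy people correctly cleared
precision = tp / (tp + fp)     # share of positive results that are real

print(f"specificity = {specificity:.3f}")
print(f"precision   = {precision:.3f}")
```

Under these assumptions specificity is 0.95, yet precision is only about 0.15, because the 495 false positives drawn from the large healthy group far outnumber the 90 true positives.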

Recall vs Sensitivity

Recall and sensitivity are two names for the same metric. Both measure the proportion of actual positives that the model identifies, for example the share of patients with a disease that a test detects.

Practical Examples

To illustrate the differences and importance of these metrics, consider the following examples:

Example 1: Low Precision, High Recall, High Specificity

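In this scenario, a negative prediction is trustworthy: the model identifies nearly all positive cases (high recall), so few are hidden among the predicted negatives, and it correctly clears most actual negatives (high specificity). A positive prediction is less reliable (low precision), which typically happens when positives are rare and false positives outnumber true positives.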

This type of classifier might be used in initial medical screenings where it is crucial not to miss any positive cases, even if it means having more false positives.

Example 2: High Precision, High Recall, Low Specificity

Here, the classifier predicts everything as positive. It identifies all actual positives (high recall), and if most cases in the data are actually positive, most of its predictions are correct (high precision). However, it fails to identify any negatives (low specificity).

This scenario might occur where missing a positive case is highly undesirable, such as in critical disease detection, but where the cost of false positives is relatively low.

Example 3: High Precision, Low Recall, High Specificity

This classifier is reliable when it predicts a positive case (high precision), but it misses many actual positives (low recall). It correctly identifies most negatives (high specificity).

Such a classifier could be used when confidence in positive predictions is crucial, such as diagnosing a condition requiring highly invasive or risky treatment.
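The three scenarios above can be reproduced with made-up confusion-matrix counts. The `Confusion` class and every number below are hypothetical, chosen only so that each example lands in the "high" or "low" range its heading describes.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int
    fp: int
    fn: int
    tn: int

    def precision(self) -> float:
        total = self.tp + self.fp
        return self.tp / total if total else 0.0

    def recall(self) -> float:
        total = self.tp + self.fn
        return self.tp / total if total else 0.0

    def specificity(self) -> float:
        total = self.tn + self.fp
        return self.tn / total if total else 0.0


# Invented counts, one confusion matrix per example.
examples = {
    # Rare positives, liberal flagging: no case missed, many false alarms.
    "Example 1": Confusion(tp=10, fp=40, fn=0, tn=950),
    # Everything predicted positive on data that is mostly positive.
    "Example 2": Confusion(tp=90, fp=10, fn=0, tn=0),
    # Flags only clear-cut cases: few false alarms, many missed positives.
    "Example 3": Confusion(tp=20, fp=1, fn=80, tn=899),
}

for name, cm in examples.items():
    print(
        f"{name}: precision={cm.precision():.2f}, "
        f"recall={cm.recall():.2f}, specificity={cm.specificity():.2f}"
    )
```

Example 2 shows why precision alone can mislead: the all-positive classifier looks precise only because positives dominate this particular dataset, while its specificity is zero.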

Choosing the Right Metric

The correct metric depends on the particular application and the relative costs of false positives and false negatives:

- Precision matters most when false positives are costly. For instance, in email spam detection, it is better to let some spam messages reach the inbox than to classify important emails as spam.

- Recall (sensitivity) matters most when false negatives are costly. For example, in medical diagnostics, missing a case of disease can be devastating, so it is better to accept some false positives that further tests can rule out.

- Specificity matters most when the cost of false positives is unacceptable. For instance, in drug testing, false positives should be avoided so that innocent people are not punished.
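One way to make the "relative costs" idea concrete is to weight each error type and compare classifiers by total cost. This is only an illustrative sketch: the two classifiers, their error counts, and the cost weights are all invented, and real costs are rarely this easy to quantify.

```python
def total_cost(fp: int, fn: int, cost_fp: float, cost_fn: float) -> float:
    """Weighted sum of false positives and false negatives."""
    return fp * cost_fp + fn * cost_fn


# Two hypothetical classifiers evaluated on the same data.
classifiers = {
    "liberal": {"fp": 40, "fn": 1},   # flags readily: high recall, lower precision
    "strict": {"fp": 2, "fn": 15},    # flags sparingly: high precision, lower recall
}

# Assumed cost weights per setting (arbitrary units).
settings = {
    "disease screening": {"cost_fp": 1, "cost_fn": 50},  # missed cases are far worse
    "spam filtering": {"cost_fp": 20, "cost_fn": 1},     # lost real email is far worse
}

best = {}
for setting, costs in settings.items():
    scored = {
        name: total_cost(**errors, **costs) for name, errors in classifiers.items()
    }
    best[setting] = min(scored, key=scored.get)
    print(f"{setting}: {scored} -> choose {best[setting]}")
```

With these weights, the liberal classifier wins for disease screening and the strict one wins for spam filtering, even though neither classifier changed. Only the cost of each kind of error did.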

Balancing Metrics

These metrics have to be balanced against one another. The F1 score, for instance, combines precision and recall into a single number, their harmonic mean, that reflects the trade-off between the two.

The F1 score is a useful way to strike this balance, especially when classes are imbalanced and accuracy alone can be misleading.
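As a brief sketch, the F1 score is the harmonic mean of precision and recall. The function below is a hypothetical helper, and the input values are invented; returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention for the undefined case
    return 2 * precision * recall / (precision + recall)


# Hypothetical classifier with low precision but perfect recall.
p, r = 0.2, 1.0
arithmetic_mean = (p + r) / 2
f1 = f1_score(p, r)

print(f"arithmetic mean = {arithmetic_mean:.3f}")
print(f"F1 score        = {f1:.3f}")
```

The harmonic mean is pulled toward the weaker of the two values: here the arithmetic mean is 0.6 but F1 is only about 0.333, so a model cannot score well on F1 by excelling at one metric while neglecting the other.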

Conclusion

Understanding and appropriately applying precision, recall, specificity, and sensitivity is vital for developing and evaluating models in data science and medicine. Each metric provides unique insights into model performance, and choosing the right one depends on the specific context and the consequences of errors. By bridging the gap between these fields, we can improve communication and collaboration, ultimately enhancing the effectiveness of predictive models in medical applications.

In summary, while precision and recall are often emphasized in data science and specificity and sensitivity in medicine, recognizing their relationships and differences allows for more nuanced and accurate model evaluations. This understanding can significantly impact the development of better diagnostic tools and predictive models, leading to improved patient outcomes and more efficient medical practices.

Frequently Asked Questions

A. Precision measures the accuracy of positive predictions, while recall (sensitivity) assesses the ability to identify all actual positive cases.

A. Specificity measures the proportion of actual negatives that a test correctly identifies as negative, whereas sensitivity (recall) measures the proportion of actual positives that it correctly identifies as positive.

A. Data scientists focus on precision and recall to assess model performance, while medical professionals use specificity and sensitivity to evaluate diagnostic tests, reflecting their different priorities in error management.

A. High specificity is crucial when it is important to accurately identify true negatives, such as in medical screenings where false positives can lead to unnecessary anxiety and additional testing.

A. The F1 score is a metric that balances precision and recall, providing an overall measure of a model’s accuracy, especially useful when dealing with imbalanced classes.