Introduction

Building and optimizing Retrieval-Augmented Generation (RAG) pipelines has been a rewarding experience. Combining retrieval mechanisms with language models to create contextually aware responses is fascinating. Over the past few months, I’ve fine-tuned my RAG pipeline and learned that effective evaluation and continuous improvement are crucial.

Evaluation ensures the RAG pipeline retrieves relevant documents, generates coherent responses, and meets end-user needs. Without it, the system might fail in real-world applications.

TRULens helps developers evaluate their RAG pipelines by providing feedback and performance metrics, measuring response relevance and groundedness, tracking performance over time, and identifying areas for improvement.

In this blog, I will guide you through setting up and evaluating an RAG pipeline using TRULens, providing insights and tools to enhance your RAG system.

Let’s dive in!

Learning Objectives

- Learn about Retrieval-Augmented Generation (RAG) systems and how they combine retrieval-based and generative models to understand natural language and generate responses.

- Gain insights into using LlamaIndex, a framework for building RAG systems, including steps for data preparation, index creation, and query engine setup.

- Explore the TruLens evaluation framework and understand how it provides feedback on RAG system performance based on metrics like groundedness, context relevance, and answer relevance.

- Learn how to interpret feedback metrics generated by TruLens, such as groundedness, context relevance, and answer relevance, to identify areas for improvement in RAG systems.

- Understand the iterative improvement process for RAG systems, including using insights from TruLens feedback to make incremental changes and continuously enhance system performance.

- Familiarize yourself with the TruLens dashboard interface and learn how to navigate it to visualize feedback metrics, analyze results, and make data-driven decisions for system enhancement.

- Explore emerging trends and future directions in RAG technology and evaluation methods, including advancements in retrieval algorithms, generative models, and integration with other technologies.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Introduction

- What is RAG?

- How RAG Differs from Traditional LLMs?

- Setting Up a Simple RAG System

- Building a Simple Llama Index Application

- Evaluating Your RAG System with TruLens

- Analyzing and Interpreting Feedback

- Exploring Results in the TruLens Dashboard

- Future Directions in RAG and Evaluation

- Conclusion

- Frequently Asked Questions

What is RAG?

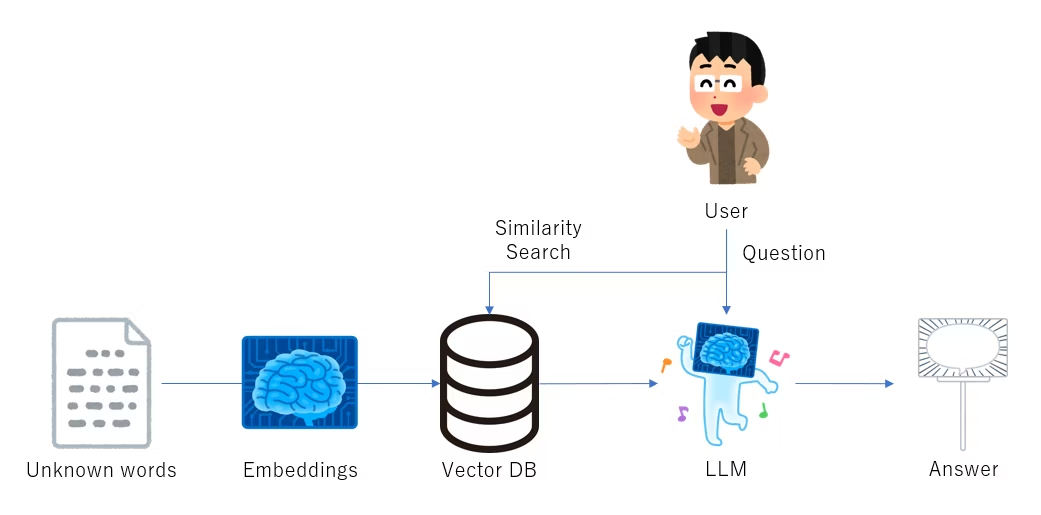

Retrieval-Augmented Generation (RAG) is an innovative approach in natural language processing that combines two primary components: a retrieval mechanism and a generative model. The retrieval component searches a large database of documents to find relevant information, which the generative model then uses to produce a coherent and contextually appropriate response.

In this diagram, the retrieval component processes a query to fetch relevant documents. These documents then inform the generative model to produce a response.

How RAG Differs from Traditional LLMs?

Traditional language models (LLMs) generate responses based solely on their training data and the input query. While they can be remarkably effective, they often struggle with providing up-to-date or specific information not present in their training data. On the other hand, RAG systems augment their generative capabilities with real-time retrieval of information, ensuring responses are fluent, factually grounded, and relevant.

The Importance of RAG

RAG systems are particularly useful in scenarios where up-to-date and specific information is crucial. Some notable applications include:

- Customer Support: Providing accurate and timely responses to customer queries by retrieving relevant information from a knowledge base.

- Healthcare: Assisting medical professionals with quick access to the latest research and clinical guidelines.

- Education: Offering detailed explanations and additional resources to students based on their queries.

Advantages Over Standard Generation Methods

- RAG systems can generate more accurate and contextually appropriate responses by retrieving relevant documents.

- These systems can access the latest information, making them ideal for dynamic fields where knowledge constantly evolves.

- The retrieval mechanism ensures the generated responses are closely aligned with the user’s query, enhancing the overall user experience.

Setting Up a Simple RAG System

To demonstrate how Retrieval-Augmented Generation (RAG) works, we’ll build a simple RAG system using LlamaIndex, a powerful tool that leverages OpenAI’s models for both retrieval and generation. We’ll also set up the necessary environment for evaluating our RAG system with TruLens. Let’s dive in!

Installing Dependencies

We need to install several dependencies before we can build our RAG system. These include LlamaIndex, TruLens for evaluation, and OpenAI’s library for accessing their language models.

Step-by-Step Guide to Installing Required Packages:

- LlamaIndex: This library helps create an index from a collection of documents, which can be used for retrieval.

- TruLens: A tool to evaluate and get feedback on our LLM responses.

- OpenAI: For generating responses using OpenAI’s models.

You can install these packages using pip:

# Install the required packages

!pip install trulens_eval llama_index openaiThis command will download and install all necessary libraries. Make sure you run this in your Python environment.

Adding API Keys

You need to set up API keys to use OpenAI for embeddings, generation, and evaluation. These keys will authenticate your requests to the respective services.

Step-by-Step Guide to Setting Up API Keys:

- Obtain Your API Keys: https://platform.openai.com/docs/quickstart/step-2-setup-your-api-key

- Set Up API Keys in Your Environment:

Here’s how you can set the API keys in your Python environment:

import os

# Set your OpenAI API key

os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

Replace “your-openai-api-key” with your actual API keys. Ensure you keep these keys secure and do not expose them in public repositories.

Security Tips for Managing API Keys:

- Unit control does not include store keys in environment variables or configuration files.

- Use tools like dotenv to manage environment variables securely.

- Rotate your keys periodically to mitigate the risk of unauthorized access.

With the dependencies installed and API keys configured, we’re ready to build our simple LlamaIndex application.

Building a Simple Llama Index Application

In this section, we will create a basic LlamaIndex application using the text of Paul Graham’s essay, “What I Worked On.” This example will help you understand how to build and query a RAG system. We’ll then use this setup as a basis for our evaluation with TruLens.

Downloading and Preparing Data

We’ll use Paul Graham’s essay “What I Worked On” for this example. This text provides a rich dataset for demonstrating how our RAG system retrieves and generates responses.

Instructions for Downloading Example Data:

Download the Essay

We can download the essay using the following command:

!wget https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples\

/data/paul_graham/paul_graham_essay.txt -P data/

This command uses wget to fetch the essay and saves it into a directory named data. Ensure you have permission to create directories and download files to your system.

Verify the Download

After downloading, verify that the file is correctly saved in the data directory. The directory structure should look something like this:

data/

└── paul_graham_essay.txt

Creating the LLM Application

With our data in place, we can now create a LlamaIndex application. This involves loading the data, creating an index, and setting up a query engine.

Detailed Guide on Setting Up LlamaIndex with the Downloaded Data:

Load the Data

First, we need to load the text data from the downloaded file. LlamaIndex provides a simple way to do this using the SimpleDirectoryReader:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

# Load documents from the data directory

documents = SimpleDirectoryReader("data").load_data()This will read all text files in the data directory and prepare them for indexing.

Create the Index

Next, we create an index from the loaded documents. The index will enable efficient retrieval of relevant documents based on a query:

index = VectorStoreIndex.from_documents(documents)Set Up the Query Engine

With the index created, we can set up a query engine to handle our queries and retrieve relevant information:

query_engine = index.as_query_engine()Send Your First Request

Now, we can send a query to our newly created RAG system and see the results:

response = query_engine.query("What did the author do growing up?")

print(response)

When you run this code, the system retrieves relevant parts of the essay and uses them to generate a coherent answer to the query. This combination of retrieval and generation is the essence of RAG.

Example Query and Response

Let’s illustrate this with a query about Paul Graham’s early life:

response = query_engine.query("What did the author do growing up?")

print(response)

You might get a response like:

As a child, Paul Graham was interested in many things, including computers and

programming. He often engaged in creative activities and was passionate about learning

and experimenting.

This response is generated based on relevant excerpts retrieved from the essay, demonstrating the power of RAG in providing contextually appropriate and accurate information.

Evaluating Your RAG System with TruLens

Now that our simple LlamaIndex application is set up and running, it’s time to evaluate its performance. Evaluation is crucial to understanding how well our system performs and identifying improvement areas. TruLens offers a comprehensive framework for evaluating RAG systems, focusing on key metrics like groundedness, context relevance, and answer relevance.

Introduction to TruLens

TruLens is a powerful evaluation tool designed to provide detailed feedback on the performance of language models, particularly in RAG systems.

It helps in assessing the quality of responses by considering several dimensions:

- Groundedness: Ensures that the responses are based on actual retrieved documents.

- Context Relevance: Measures how relevant the response is to the given context or query.

- Answer Relevance: Evaluate the response’s overall relevance to the question.

Using TruLens, developers can get actionable insights into their RAG systems and make iterative improvements.

Why Is Evaluation Crucial for RAG Systems?

Evaluation helps in:

- Identifying Weaknesses: Spotting areas where the system might generate inaccurate or irrelevant responses.

- Ensuring Accuracy: Ensuring the responses are grounded in the retrieved documents, enhancing trustworthiness.

- Improving User Experience: Ensuring the responses are contextually and semantically relevant to the user’s queries.

Here, we are going to use RAG Triad for evaluation:

Initializing Feedback Functions

To evaluate our LlamaIndex application, we need to set up feedback functions in TruLens. These functions will help us measure our system’s responses’ groundedness, context relevance, and answer relevance.

Detailed Explanation of Feedback Functions in TruLens:

- Groundedness Feedback Function: The groundedness feedback function checks if the responses are based on the retrieved documents. Here’s how you set it up:

from trulens_eval import Tru

from trulens_eval.feedback.provider import OpenAI

from trulens_eval import Feedback

import numpy as np

tru = Tru()

# Initialize provider class

provider = OpenAI()

# Select context to be used in feedback. The location of context is app specific.

from trulens_eval.app import App

context = App.select_context(query_engine)

# Define a groundedness feedback function

f_groundedness = (

Feedback(provider.groundedness_measure_with_cot_reasons)

.on(context.collect()) # Collect context chunks into a list

.on_output()

)

- Answer Relevance Feedback Function:

This function measures the relevance of the answer to the overall question:

# Question/answer relevance between overall question and answer.

f_answer_relevance = (

Feedback(provider.relevance)

.on_input_output()

)

- Context Relevance Feedback Function:

This function evaluates the relevance of each context chunk to the question:

# Question/statement relevance between question and each context chunk.

f_context_relevance = (

Feedback(provider.context_relevance_with_cot_reasons)

.on_input()

.on(context)

.aggregate(np.mean)

)

These feedback functions will help us assess different aspects of our RAG system’s responses, ensuring a comprehensive evaluation.

Instrumenting the Application for Logging

We need to instrument our application to capture and log the interactions between our RAG system and TruLens. This involves integrating TruLens into our LlamaIndex setup so that we can log queries and feedback.

Step-by-Step Guide on Integrating TruLens for Logging and Evaluation:

- Set Up TruLlama Recorder:

The TruLlama recorder will capture and log the interactions for evaluation:

from trulens_eval import TruLlama

# Initialize the recorder

tru_query_engine_recorder = TruLlama(query_engine,

app_id='LlamaIndex_App1',

feedbacks=[f_groundedness, f_answer_relevance, f_context_relevance])

- Logging Queries:

You can log queries using the recorder as a context manager to ensure that each query and its corresponding feedback are recorded:

# Use the recorder as a context manager to log queries

with tru_query_engine_recorder as recording:

response = query_engine.query("What did the author do growing up?")

print(response)

- Retrieving and Displaying Feedback:

After logging the interactions, retrieve and display the feedback:

# The record of the app invocation can be retrieved from the `recording`:

rec = recording.get() # Use .get if only one record

# Display the record

display(rec)

# Run the dashboard to visualize feedback

tru.run_dashboard()

Through initializing feedback functions and instrumenting your application with TruLens, you can comprehensively evaluate your RAG system. This setup not only helps in assessing the current performance but also provides actionable insights for continuous improvement.

Analyzing and Interpreting Feedback

With your RAG system set up and TruLens integrated, it’s time to analyze the feedback generated by your application’s responses. This section will guide you through retrieving feedback records, understanding the metrics used, and utilizing this feedback to enhance your RAG system’s performance.

Retrieving and Displaying Feedback

After running your RAG system with TruLens, you must retrieve the recorded feedback for analysis. This step involves accessing the feedback data and displaying it meaningfully.

Instructions for Retrieving Feedback Records:

- Retrieve Feedback Record:

When you run queries with TruLens logging enabled, feedback records are generated. Retrieve these records as follows:

# Retrieve the record from the recording context

rec = recording.get() # Use .get if only one record

# Display the feedback record

display(rec)

- Accessing Feedback Results:

Feedback results can be accessed using the wait_for_feedback_results method, which ensures all feedback functions have completed their evaluation:

# The results of the feedback functions can be rertireved from

# `Record.feedback_results` or using the `wait_for_feedback_result` method. The

# results if retrieved directly are `Future` instances (see

# `concurrent.futures`). You can use `as_completed` to wait until they have

# finished evaluating or use the utility method:

for feedback, feedback_result in rec.wait_for_feedback_results().items():

print(feedback.name, feedback_result.result)

records, feedback = tru.get_records_and_feedback(app_ids=["LlamaIndex_App1"])

records.head()

#tru.get_leaderboard(app_ids=["LlamaIndex_App1"])

- Viewing Feedback in Dashboard:

To get a more interactive view, you can use the TruLens dashboard:

tru.run_dashboard()

The dashboard provides a visual representation of the feedback metrics, making it easier to analyze and interpret the results.

Understanding Feedback Metrics

TruLens provides three primary metrics for evaluating RAG systems: groundedness, context relevance, and answer relevance. Understanding these metrics is crucial for interpreting the feedback and making informed improvements.

Detailed Explanation of Each Metric:

- Groundedness: Groundedness measures whether the generated response is based on the retrieved documents. A high groundedness score indicates that the retrieved information supports the response well.

# Example of groundedness feedback

f_groundedness = Feedback(provider.groundedness_measure_with_cot_reasons)Interpretation:

- High Score: The response is well-grounded in the retrieved documents.

- Low Score: The response may include unsupported or hallucinated information.

- Context Relevance:

Context relevance evaluates how relevant each chunk of context (retrieved documents) is to the query. This ensures that the retrieved information is pertinent to the user’s question.

# Example of context relevance feedback

f_context_relevance = Feedback(provider.context_relevance_with_cot_reasons)Interpretation:

- High Score: Retrieved context is highly relevant to the query.

- Low Score: Retrieved context may be off-topic or irrelevant.

- Answer Relevance:

Answer relevance assesses the overall relevance of the response to the query. This metric ensures that the response directly addresses the user’s question.

# Example of answer relevance feedback

f_answer_relevance = Feedback(provider.relevance)Interpretation:

- High Score: Response is directly relevant and answers the query well.

- Low Score: Response may be tangential or incomplete.

Example of Feedback Results:

Here’s a hypothetical output for feedback metrics:

Groundedness: 0.85

Context Relevance: 0.90

Answer Relevance: 0.88These scores indicate a well-performing RAG system with well-grounded responses that are contextually relevant and directly answer the queries.

Using Feedback to Improve Your RAG System

Feedback is only valuable if it leads to actionable improvements. This section provides practical tips on leveraging feedback to enhance your RAG system’s performance.

Practical Tips on Leveraging Feedback:

- Identify Weak Areas: Use the feedback metrics to pinpoint where your system is underperforming. For instance, if groundedness scores are low, focus on improving the retrieval mechanism to fetch more relevant documents.

- Iterative Development: Based on feedback, make incremental changes and reevaluate the system. This iterative approach gradually enhances overall performance.

# Example of iterative improvement

for feedback, result in feedback_results.items():

if result.result < threshold:

print(f"Improving based on low {feedback.name} score")

# Implement changes- Enhance Retrieval Strategies:

Improve your document retrieval strategies by:

- Fine-tuning search algorithms.

- Expanding the document corpus with more relevant data.

- Filtering out irrelevant documents more effectively.

- Refine Generative Model:

Adjust the generative model parameters or consider fine-tuning the model with additional data to improve the quality and relevance of generated responses.

By thoroughly analyzing and interpreting feedback from TruLens, you can make informed decisions to enhance your RAG system’s performance. This ongoing evaluation and improvement process is key to developing a robust and reliable RAG application.

Exploring Results in the TruLens Dashboard

The TruLens dashboard is a powerful tool for visualizing and interacting with the feedback data generated during the evaluation of your RAG system. In this section, we’ll guide you through using the dashboard to gain deeper insights into your system’s performance and make data-driven decisions for further improvements.

Setting Up the Dashboard

To get started with the TruLens dashboard, you must ensure it’s properly set up and running. This dashboard provides a user-friendly interface to explore feedback metrics and records.

Steps to Launch and Use the Dashboard:

- Start the Dashboard:

Run the following command to launch the dashboard:

tru.run_dashboard()

This command will start the dashboard server and provide you with a URL to access it in your web browser.

- Access the Dashboard:

Open the provided URL in your web browser. You should see the TruLens dashboard interface, which allows you to explore various feedback metrics and records.

Navigating the Dashboard

The TruLens dashboard is designed to offer a comprehensive view of your RAG system’s performance. Here’s how to navigate and make the most of its features:

Key Components of the Dashboard:

- Overview Page:

The overview page summarizes your RAG system’s performance, including key metrics such as groundedness, context relevance, and answer relevance.

- Records Page:

This page lists all the recorded interactions between the user queries and the system responses. Each record includes detailed feedback metrics and the corresponding responses.

- Feedback Details:

Clicking on a specific record brings up detailed feedback, showing how each feedback function evaluated the response. This helps in understanding the strengths and weaknesses of individual responses.

- Leaderboard:

The leaderboard aggregates performance metrics across different queries and responses, allowing you to compare and benchmark your system’s performance over time.

Interpreting Dashboard Insights

The dashboard provides a wealth of data that can be used to gain insights into your RAG system’s performance. Here’s how to interpret and act on these insights:

Analyzing Key Metrics:

- Groundedness:

High groundedness scores indicate that responses are well-supported by the retrieved documents. If you notice low scores, investigate the retrieval process to ensure relevant documents are being fetched.

- Context Relevance:

High context relevance scores suggest that the retrieved documents are pertinent to the query. Low scores may indicate that your retrieval algorithm needs fine-tuning or that your document corpus requires better curation.

- Answer Relevance:

This metric shows how well the response answers the query. Consistently low scores might indicate the need to refine the generative model or adjust its parameters.

Example of Using Dashboard Insights:

Imagine you notice that responses to certain queries consistently have low context relevance scores. You could:

- Review the Retrieval Algorithm: Ensure the algorithm effectively identifies relevant documents for these queries.

- Expand the Document Corpus: Add more documents relevant to the types of queries that are underperforming.

- Fine-Tune the Model: The model can be adjusted to better handle specific query types, possibly by training on additional data that includes more examples of these queries.

Iterative Improvement Process:

Using the insights from the dashboard, implement changes to your RAG system, then re-evaluate to see if the changes lead to improvements. This iterative process is crucial for continually enhancing your system’s performance.

Case Study: Practical Application of Dashboard Insights

To illustrate the practical use of the TruLens dashboard, let’s consider a hypothetical case study:

Scenario:

Your RAG system is deployed in a customer support chatbot for a tech company. Customer queries about software installation procedures often result in low context relevance and answer relevance scores.

Steps Taken:

- Analyze Feedback:

- Use the dashboard to identify specific queries with low scores.

- Review the retrieved documents and responses for these queries.

- Improve Document Corpus:

- Add more detailed installation guides and FAQs to the document corpus.

- Ensure documents are tagged and categorized appropriately.

- Refine Retrieval Algorithm:

- Adjust the retrieval algorithm to prioritize documents with installation keywords.

- Implement filtering to remove less relevant documents.

- Re-Evaluate:

- Run the updated system and compare new feedback metrics using the dashboard.

- Look for improvements in context relevance and answer relevance scores.

Outcome:

After implementing these changes, you will see a significant improvement in the scores for installation-related queries, leading to more accurate and helpful customer responses.

Future Directions in RAG and Evaluation

Here are the future directions in RAG and Evaluation:

Advancements in RAG Systems:

- Improved Retrieval Algorithms: Develop more sophisticated retrieval algorithms to understand better and prioritize document relevance.

- Enhanced Generative Models: Explore advancements in generative models to produce even more coherent and contextually accurate responses.

Innovations in Evaluation Metrics:

- Dynamic Groundedness Measures: Create more dynamic and context-sensitive groundedness measures to evaluate the evolving nature of responses better.

- User-Centric Metrics: Develop metrics that better capture the user’s perspective and satisfaction with the responses.

Integration with Other Technologies:

- Multimodal RAG Systems: Incorporate multimedia (images, videos) into RAG systems for richer and more informative responses.

- Cross-Domain Applications: Expand the application of RAG systems to various domains, such as healthcare, education, and customer service, to address diverse information needs.

Conclusion

In this comprehensive guide, we’ve walked through the entire process of building, evaluating, and iteratively improving a Retrieval-Augmented Generation (RAG) system using LlamaIndex and TruLens. By leveraging these tools, you can develop a highly effective system that retrieves relevant information and generates coherent responses, all while ensuring that the generated outputs are grounded, contextually relevant, and directly answer user queries.

The combination of LlamaIndex and TruLens provides a robust framework for building and evaluating RAG systems. By following the outlined steps and best practices, you can develop a system that meets and exceeds user expectations in delivering accurate and relevant information. The future of RAG systems is promising, with ongoing advancements and innovations paving the way for even more powerful and versatile applications.

I encourage you to build your own RAG system today using LlamaIndex and TruLens. Experiment with different datasets, refine your retrieval and generative models and use the powerful evaluation tools provided by TruLens to improve your system continuously. Share your experiences and insights with the community to contribute to the evolving landscape of RAG technology.

Elevate your RAG system with our ‘Building Effective RAG with LlamaIndex and TruLens‘ course – master the art of retrieval, generation, and evaluation for accurate, relevant responses!

Key Takeaways

- Retrieval-Augmented Generation (RAG) pipelines combine retrieval mechanisms with powerful language models to create contextually aware and relevant responses.

- Evaluating a RAG pipeline is crucial to ensure its effectiveness and identify areas for improvement.

- TRULens is a tool that provides detailed feedback and performance metrics for RAG pipelines, helping developers measure relevance and groundedness and track improvements.

- LlamaIndex is a framework that facilitates the building of RAG systems, including data preparation, index creation, and query engine setup.

- The TruLens evaluation framework offers insights into the performance of RAG systems based on metrics like groundedness, context relevance, and answer relevance.

- Interpreting feedback metrics is essential for identifying weaknesses and improving the system’s accuracy and user experience.

- The iterative improvement process involves using feedback to make incremental changes, enhancing the system’s performance over time.

Best Practices for Ongoing Improvement

- Continuous Monitoring: Regularly monitor your RAG system’s performance using the TruLens dashboard to catch any regressions or new issues that may arise.

- Expand and Update Data Sources: Keep your document corpus updated with new and relevant information to ensure the retrieval process has access to the latest and most accurate data.

- Fine-Tune Models: Continuously fine-tune your generative model based on user interactions and feedback to improve response quality and relevance.

- User Feedback Integration: Incorporate direct user feedback into your evaluation loop. Users can provide insights that automated metrics might miss, helping you refine the system further.

- Diverse Testing: Test your system with various queries to ensure robustness across different topics and questions.

The media shown in this article are not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

A. RAG combines the strengths of retrieval-based and generative models to enhance natural language understanding and response generation. It retrieves relevant information from a document corpus and uses it to generate coherent responses to user queries.

A. TruLens provides comprehensive evaluation metrics such as groundedness, context relevance, and answer relevance to assess the performance of RAG systems. It offers actionable insights and facilitates iterative improvements.

A. Yes, LlamaIndex can be used with any document corpus. It provides a flexible framework for indexing and retrieving information from diverse datasets, making it suitable for a wide range of applications.

A. Integrating TruLens enables developers to gain deep insights into their RAG system’s performance. It helps identify strengths and weaknesses, facilitates data-driven decision-making, and supports continuous improvement efforts.

A. To get started, familiarize yourself with LlamaIndex and TruLens documentation. Collect and prepare your dataset, build your RAG system using LlamaIndex, and integrate TruLens for evaluation. Iterate on your system based on feedback and insights gathered from TruLens.