This guide dives into building a custom conversational agent with LangChain, a powerful framework that integrates Large Language Models (LLMs) with a range of tools and APIs. Designed for versatility, the agent can tackle tasks like generating random numbers, sharing philosophical insights, and dynamically fetching and extracting content from webpages. By combining pre-built tools with custom features, we create an agent capable of delivering real-time, informative, and context-aware responses.

Learning Objectives

- Gain knowledge of the LangChain framework and its integration with Large Language Models and external tools.

- Learn to create and implement custom tools for specialized tasks within a conversational agent.

- Acquire skills in fetching and processing live data from the web for accurate responses.

- Develop a conversational agent that maintains context for coherent and relevant interactions.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Why Integrate LangChain, OpenAI, and DuckDuckGo?

- Installing Essential Packages

- Configuring API Access

- Connecting OpenAI Models to LangChain

- Adding a Web Search Tool

- Creating Custom Functionalities

- Creating the Conversational Agent with Custom Tools

- Using Tool Class to Create a Useful Web Scraping Tool

- Conclusion

- Frequently Asked Questions

Why Integrate LangChain, OpenAI, and DuckDuckGo?

Integrating LangChain with OpenAI and DuckDuckGo lets us build conversational AI applications that combine language understanding with live web search. OpenAI’s language models handle natural language understanding and generation, while DuckDuckGo supplies privacy-respecting web search, accessed here through the duckduckgo-search Python package. Together, they allow the agent not only to generate coherent, contextual responses but also to fetch current information. This setup suits chatbots and virtual assistants that must handle a wide range of user questions.

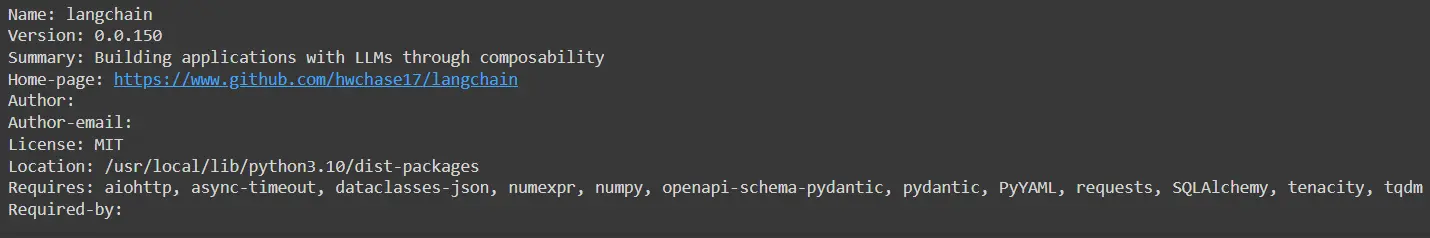

Installing Essential Packages

First, you’ll need to install the required Python packages: langchain, openai, and the DuckDuckGo search package. The langchain-community package is included because it provides the chat model and search integrations imported later. You can install everything with pip:

!pip -q install langchain==0.3.4 langchain-community openai

!pip -q install duckduckgo-search

Once installed, confirm the installation of LangChain to ensure that it is set up correctly:

!pip show langchain
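
The same check can be done from Python, which is handy inside a notebook. The snippet below is a minimal sketch using the standard library's importlib.metadata; the helper name installed_version is an illustrative assumption, and the package names are the ones used in the pip commands above.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Package names as used in the pip commands above.
for name in ("langchain", "openai", "duckduckgo-search"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```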

Configuring API Access

To make the best use of OpenAI’s models, you’ll need to set up an API key. For this, you can use the os library to load your API keys into the environment variables:

import os

os.environ["OPENAI_API_KEY"] = "your_openai_key_here"

Make sure to replace “your_openai_key_here” with your actual OpenAI API key. This key is required for interacting with the OpenAI model used in this guide (GPT-4o).
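
Hard-coding a key in a notebook makes it easy to leak. As a hedged alternative, the sketch below reads the key from the environment and prompts for it only when it is missing. The helper name ensure_api_key and its env and prompt_fn parameters are assumptions made for illustration and testing; they are not part of LangChain or the OpenAI client.

```python
import os
from getpass import getpass


def ensure_api_key(var_name="OPENAI_API_KEY", env=os.environ, prompt_fn=getpass):
    """Return the key stored in env[var_name], prompting once if it is missing."""
    key = env.get(var_name, "").strip()
    if not key:
        # getpass hides the typed value so the key never appears on screen.
        key = prompt_fn(f"Enter {var_name}: ").strip()
        if not key:
            raise ValueError(f"{var_name} was not provided")
        env[var_name] = key
    return key


# In a notebook you would call: ensure_api_key()
```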

Connecting OpenAI Models to LangChain

We will now set up a connection to OpenAI’s model using LangChain. This model provides the flexibility to handle a wide range of language tasks, from basic conversations to more advanced queries:

from langchain.chat_models import ChatOpenAI
from langchain.chains.conversation.memory import ConversationBufferWindowMemory

# Set up the turbo LLM
turbo_llm = ChatOpenAI(
    temperature=0,
    model_name='gpt-4o'
)

In this case, we’ve configured the GPT-4o model with a low temperature (temperature=0) to ensure consistent responses.
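
To see why a low temperature gives more consistent output, it helps to look at temperature-scaled sampling in general. The snippet below is an illustrative sketch of a softmax with temperature over made-up scores; it is not how the OpenAI API is implemented internally, and treating temperature 0 as a greedy pick is an assumption of this sketch.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        # Assumption: treat temperature 0 as greedy decoding (all mass on the top score).
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As the temperature falls, more probability concentrates on the highest-scoring candidate, so repeated runs are more likely to pick the same continuation.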

Adding a Web Search Tool

To make your agent more versatile, you’ll want to integrate tools that enable it to access external data. In this case, we will integrate the DuckDuckGo search tool, which allows the agent to perform web searches:

from langchain_community.tools import DuckDuckGoSearchRun
from langchain.agents import Tool
from langchain.tools import BaseTool

search = DuckDuckGoSearchRun()

# Defining a single tool
tools = [
    Tool(
        name="search",
        func=search.run,
        description="useful for when you need to answer questions about current events. You should ask targeted questions"
    )
]

The DuckDuckGo search tool (DuckDuckGoSearchRun) is wrapped here as a Tool object, with a description telling the agent that it is suited to questions about current events.

Creating Custom Functionalities

In addition to the standard tools provided by LangChain, you can also create custom tools to enhance your agent’s abilities. Below, we demonstrate how to create a couple of simple yet illustrative tools: one that returns the meaning of life, and another that generates random numbers.

Custom Tool: Meaning of Life

The first tool is a simple function that provides an answer to the age-old question, “What is the meaning of life?”. In this case, it returns a humorous response with a slight twist:

def meaning_of_life(input=""):
    return 'The meaning of life is 42 if rounded but is actually 42.17658'

life_tool = Tool(
    name='Meaning of Life',
    func=meaning_of_life,
    description="Useful for when you need to answer questions about the meaning of life. input should be MOL "
)

This tool can be integrated into your agent to offer some fun or philosophical insights during a conversation.

Custom Tool: Random Number Generator

The second tool generates a random integer between 0 and 5. This could be useful in situations where randomness or decision-making is required:

import random

def random_num(input=""):
    return random.randint(0, 5)

random_tool = Tool(
    name='Random number',
    func=random_num,
    description="Useful for when you need to get a random number. input should be 'random'"
)

By integrating this tool into your LangChain agent, it becomes capable of generating random numbers when needed, adding a touch of unpredictability or fun to the interactions.
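
Before wiring custom tools into an agent, it is worth calling the underlying functions directly. The sketch below repeats the two functions from this section so that it runs on its own, without LangChain, and samples the random tool many times to confirm the range of values it can return.

```python
import random


def meaning_of_life(input=""):
    # The argument is ignored; the tool interface simply passes a string in.
    return 'The meaning of life is 42 if rounded but is actually 42.17658'


def random_num(input=""):
    # random.randint includes both endpoints, so 5 is a possible result.
    return random.randint(0, 5)


print(meaning_of_life("MOL"))

# Collect the distinct values seen over many calls.
samples = {random_num("random") for _ in range(1000)}
print(sorted(samples))
```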

Creating the Conversational Agent with Custom Tools

Creating a conversational agent with custom tools opens up endless possibilities for tailoring interactions to specific needs. By integrating unique functionalities, we can build a dynamic, responsive assistant that goes beyond standard responses, delivering personalized and contextually relevant information.

Initializing the Agent

We start by importing the initialize_agent function from LangChain and defining the tools we’ve created earlier—DuckDuckGo search, random number generator, and the “Meaning of Life” tool:

from langchain.agents import initialize_agent

tools = [search, random_tool, life_tool]

Adding Memory to the Agent

To enable the agent to remember recent parts of the conversation, we incorporate memory. ConversationBufferWindowMemory keeps a rolling window of the most recent k exchanges (k=3 in this case) and drops older ones:

from langchain.chains.conversation.memory import ConversationBufferWindowMemory

memory = ConversationBufferWindowMemory(
    memory_key='chat_history',
    k=3,
    return_messages=True
)

This memory will allow the agent to maintain context over short conversations, which is especially useful for a more natural dialogue flow.
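
The rolling-window idea can be illustrated without LangChain. The class below is a toy stand-in, not the library's implementation: WindowMemory and its methods are hypothetical names, and it assumes each saved turn is one user message paired with one reply.

```python
from collections import deque


class WindowMemory:
    """Toy rolling memory: keeps only the k most recent (user, ai) exchanges."""

    def __init__(self, k=3):
        # A deque with maxlen discards the oldest item when a new one is appended.
        self.turns = deque(maxlen=k)

    def save(self, user_msg, ai_msg):
        self.turns.append((user_msg, ai_msg))

    def history(self):
        return list(self.turns)


mem = WindowMemory(k=3)
for i in range(1, 6):
    mem.save(f"question {i}", f"answer {i}")

# Only exchanges 3, 4 and 5 remain; 1 and 2 have been dropped.
print(mem.history())
```

A small window keeps the prompt short, at the cost of forgetting anything said more than k exchanges ago.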

Building the Conversational Agent

We initialize the agent using initialize_agent. The key parameters include the following:

- agent: Specifies the type of agent. In this case, we’re using the conversational ReAct-style agent (chat-conversational-react-description).

- tools: The tools we’ve defined are passed here.

- llm: The OpenAI GPT-4o chat model configured earlier (turbo_llm).

- verbose: Set to True so that we can see detailed output of the agent’s behavior.

- memory: The memory object that allows the agent to recall conversation history.

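- max_iterations and early_stopping_method: max_iterations=3 stops the agent after at most 3 reasoning steps if it has not already produced a valid answer; early_stopping_method='generate' asks the model for a final answer when that limit is reached.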

conversational_agent = initialize_agent(
    agent='chat-conversational-react-description',
    tools=tools,
    llm=turbo_llm,
    verbose=True,
    max_iterations=3,
    early_stopping_method='generate',
    memory=memory
)
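
To make the role of max_iterations concrete, here is a toy version of a tool-calling loop. It is a simplified sketch, not LangChain's agent executor: the decide callback stands in for the LLM, all names are hypothetical, and the fallback message is a simplification (with early_stopping_method='generate' the real agent asks the model for a final answer instead).

```python
def run_agent(question, decide, tools, max_iterations=3):
    """Toy loop: decide() returns ("tool", name, tool_input) or ("final", answer)."""
    observations = []
    for _ in range(max_iterations):
        step = decide(question, observations)
        if step[0] == "final":
            return step[1]
        _, name, tool_input = step
        # Run the chosen tool and record its output for the next decision.
        observations.append(tools[name](tool_input))
    # Iteration budget spent without a final answer.
    return f"Stopped after {max_iterations} steps; observations: {observations}"


def scripted_decide(question, observations):
    # Hypothetical policy: call the tool once, then answer with its result.
    if not observations:
        return ("tool", "Random number", "random")
    return ("final", f"Your number is {observations[-1]}")


demo_tools = {"Random number": lambda _: 4}  # fixed value keeps the demo deterministic
print(run_agent("Give me a random number", scripted_decide, demo_tools))
```

The cap matters when the model keeps choosing tools without ever committing to an answer; without it, the loop could run indefinitely and keep consuming API calls.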

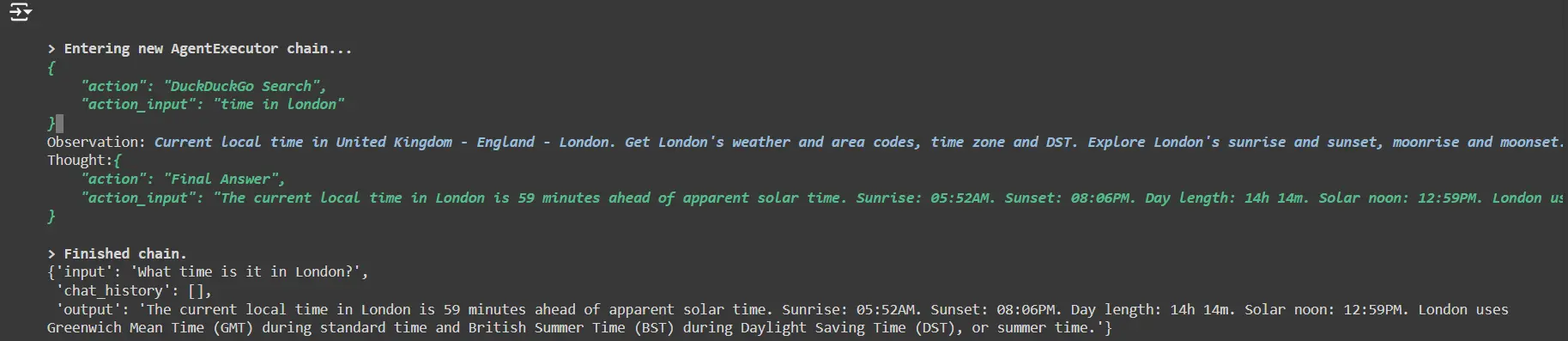

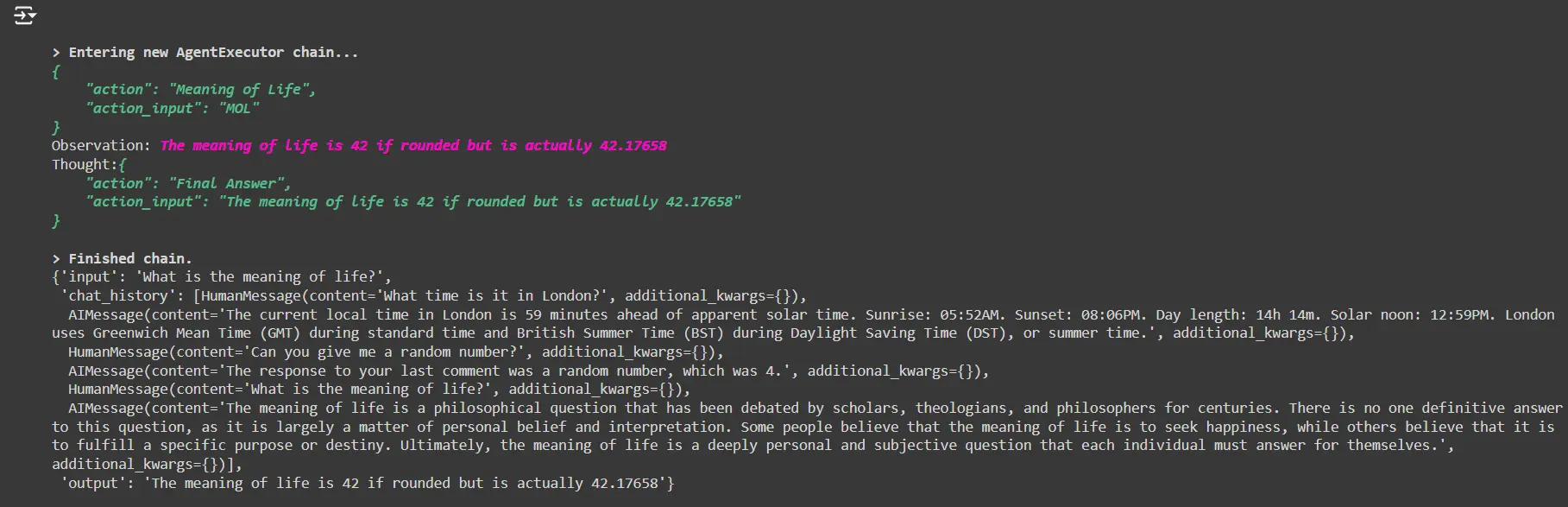

Testing the Agent

You can now interact with the agent using natural language queries. Here are some examples of questions you can ask the agent:

Ask for the current time in a city:

conversational_agent("What time is it in London?")

- The agent will use the DuckDuckGo search tool to find the current time in London.

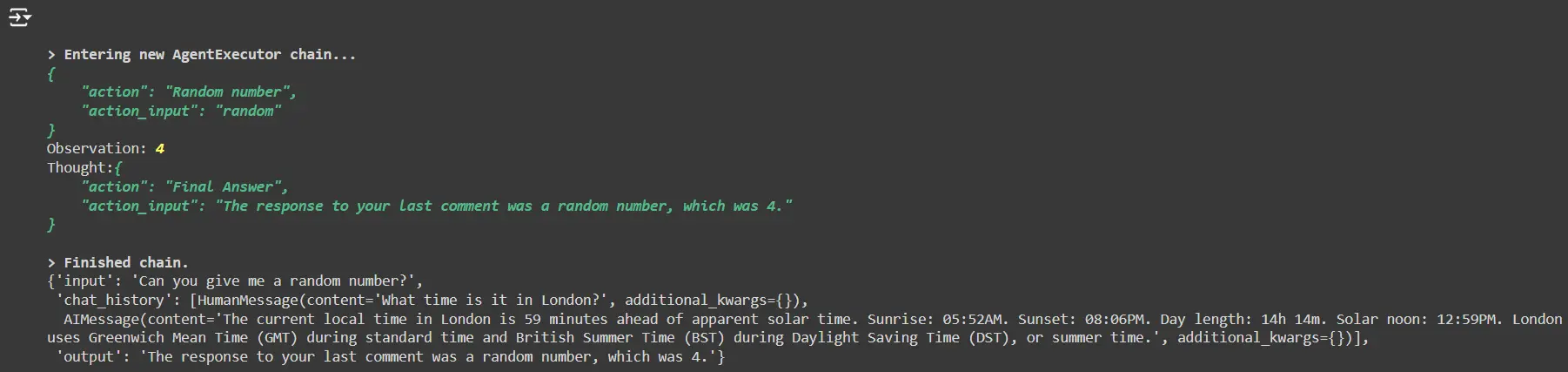

Request a random number:

conversational_agent("Can you give me a random number?")

- The agent will generate a random number using the custom random_tool.

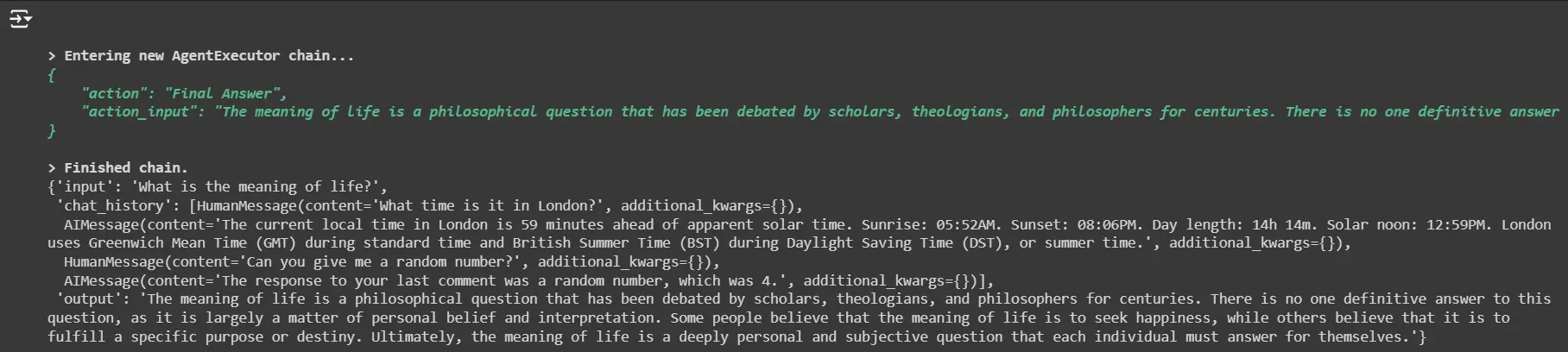

Ask for the meaning of life:

conversational_agent("What is the meaning of life?")

- The agent will answer this question by utilizing the custom life_tool.

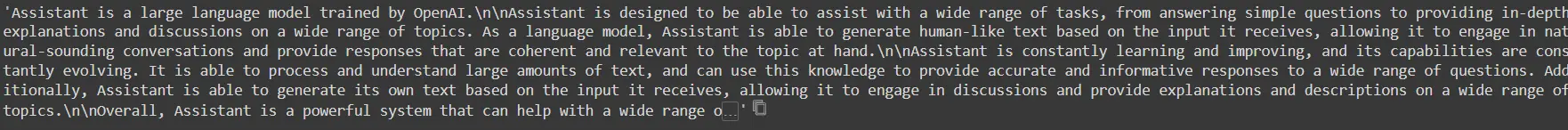

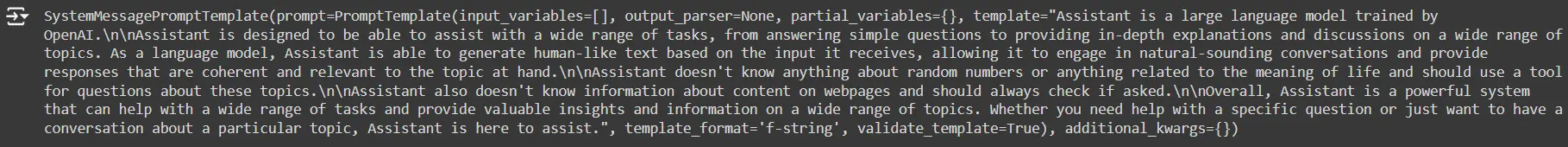

Customizing the System Prompt

To guide the agent’s behavior more effectively, we can adjust the system prompt it follows. By default, the agent provides general assistance across a variety of topics. However, we can tailor this by explicitly telling it to use tools for specific tasks, such as answering questions about random numbers or the meaning of life.

Here’s how we modify the system prompt:

# View the current system prompt
conversational_agent.agent.llm_chain.prompt.messages[0].prompt.template

fixed_prompt = '''Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering
simple questions to providing in-depth explanations and discussions on a wide range of
topics. As a language model, Assistant is able to generate human-like text based on the
input it receives, allowing it to engage in natural-sounding conversations and provide
responses that are coherent and relevant to the topic at hand.

Assistant doesn't know anything about random numbers or anything related to the meaning
of life and should use a tool for questions about these topics.

Assistant is constantly learning and improving, and its capabilities are constantly
evolving. It is able to process and understand large amounts of text, and can use
this knowledge to provide accurate and informative responses to a wide range of
questions. Additionally, Assistant is able to generate its own text based on the
input it receives, allowing it to engage in discussions and provide explanations and
descriptions on a wide range of topics.

Overall, Assistant is a powerful system that can help with a wide range of tasks and
provide valuable insights and information on a wide range of topics. Whether you need
help with a specific question or just want to have a conversation about a particular
topic, Assistant is here to assist.'''

We then apply the modified prompt to the agent:

conversational_agent.agent.llm_chain.prompt.messages[0].prompt.template = fixed_prompt

Now, when the agent is asked about random numbers or the meaning of life, it is instructed to consult the appropriate tool instead of attempting to generate an answer on its own.

Testing with the New Prompt

Let’s test the agent again with the updated prompt:

conversational_agent("What is the meaning of life?")

This time, the agent should call the life_tool to answer the question instead of generating a response from its own knowledge.

Using Tool Class to Create a Useful Web Scraping Tool

In this section, we’ll create a custom tool that can strip HTML tags from a webpage and return the plain text content. This can be particularly useful for tasks like summarizing articles, extracting specific information, or simply converting HTML content into a readable format.

Building the Web Scraper Tool

We will use the requests library to fetch the webpage and BeautifulSoup from the bs4 package to parse and clean the HTML content. The function below retrieves a webpage, removes all HTML tags, and returns the first 4000 characters of the stripped text:

from bs4 import BeautifulSoup

import requests

def stripped_webpage(webpage):

# Fetch the content of the webpage

response = requests.get(webpage)

html_content = response.text

# Function to strip HTML tags from the content

def strip_html_tags(html_content):

soup = BeautifulSoup(html_content, "html.parser")

stripped_text = soup.get_text()

return stripped_text

# Strip the HTML tags

stripped_content = strip_html_tags(html_content)

# Limit the content to 4000 characters to avoid long outputs

if len(stripped_content) > 4000:

stripped_content = stripped_content[:4000]

return stripped_content

# Testing the function with a sample webpage

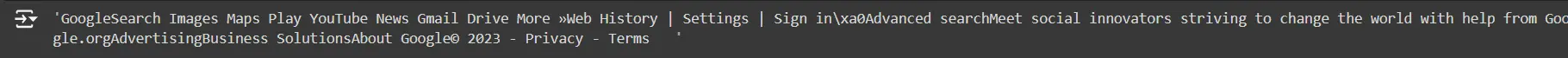

stripped_webpage('https://www.google.com')

How It Works

- Fetching the webpage: The function uses the requests library to get the raw HTML content from a given URL.

- Stripping HTML tags: Using BeautifulSoup, we parse the HTML content and extract only the text, removing all HTML tags.

- Character limit: To avoid overly long outputs, the text is limited to the first 4000 characters. This keeps the content concise and manageable, especially for use in a conversational agent.
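
The strip-and-truncate steps can be tried offline on an inline HTML string. The sketch below uses only the standard library's html.parser as a stand-in for BeautifulSoup, so its output is not guaranteed to match soup.get_text() exactly; the names TextExtractor and html_to_text are illustrative, and skipping script and style contents is a choice made in this sketch.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside script/style blocks.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html, limit=4000):
    """Strip tags from an HTML string and cap the result at `limit` characters."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)[:limit]


sample = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><h1>Title</h1><p>Hello <b>world</b></p></body></html>"
)
print(html_to_text(sample))
```

Working on a fixed string like this makes the behavior easy to check before pointing the function at live pages, whose content changes between runs.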

Testing the Tool

You can test this tool by passing any URL to the stripped_webpage function. For example, when used with https://www.google.com, it will return a text-only version of Google’s homepage (limited to the first 4000 characters).

Integrating into LangChain as a Tool

You can now wrap this function inside a LangChain Tool and integrate it into your agent for dynamic web scraping:

from langchain.agents import Tool

# Create the tool for web scraping

web_scraper_tool = Tool(
    name='Web Scraper',
    func=stripped_webpage,
    description="Fetches a webpage, strips HTML tags, and returns the plain text content (limited to 4000 characters)."
)

Now, this web scraper tool can be part of the agent’s toolbox and used to fetch and strip content from any webpage in real time, adding further utility to the conversational agent you’ve built.

Creating a WebPageTool for Dynamic Web Content Extraction

In this section, we’re building a custom WebPageTool class that will allow our conversational agent to fetch and strip HTML tags from a webpage, returning the text content. This tool is useful for extracting information from websites dynamically, adding a new layer of functionality to the agent.

Defining the WebPageTool Class

We start by defining the WebPageTool class, which inherits from BaseTool. This class has two methods: _run for synchronous execution (the default for this tool) and _arun which raises an error since asynchronous operations are not supported here.

from langchain.tools import BaseTool
from bs4 import BeautifulSoup
import requests

class WebPageTool(BaseTool):
    # Type annotations are needed when overriding fields on pydantic v2 models,
    # which the BaseTool in langchain 0.3 is built on
    name: str = "Get Webpage"
    description: str = "Useful for when you need to get the content from a specific webpage"

    # Synchronous run method to fetch webpage content
    def _run(self, webpage: str):
        # Fetch webpage content
        response = requests.get(webpage)
        html_content = response.text

        # Strip HTML tags from the content
        def strip_html_tags(html_content):
            soup = BeautifulSoup(html_content, "html.parser")
            stripped_text = soup.get_text()
            return stripped_text

        # Get the plain text and limit it to 4000 characters
        stripped_content = strip_html_tags(html_content)
        if len(stripped_content) > 4000:
            stripped_content = stripped_content[:4000]

        return stripped_content

    # Async run method (not implemented)
    def _arun(self, webpage: str):
        raise NotImplementedError("This tool does not support async")

# Instantiate the tool
page_getter = WebPageTool()

How the WebPageTool Works

- Fetching Webpage Content: The _run method uses requests.get to fetch the webpage’s raw HTML content.

- Stripping HTML Tags: Using BeautifulSoup, the HTML tags are removed, leaving only the plain text content.

- Limiting Output Size: The content is truncated to 4000 characters to avoid excessive output, making the agent’s response concise and manageable.
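
The split between a public entry point and a subclass-provided _run method can be shown with a toy base class. This is a simplified illustration of the pattern only, not LangChain's BaseTool: MiniTool and EchoTool are hypothetical names, and the real class also handles input validation, callbacks, and more.

```python
from abc import ABC, abstractmethod


class MiniTool(ABC):
    """Toy base class: public run() delegates to a subclass-provided _run()."""

    name = "unnamed"
    description = ""

    def run(self, tool_input):
        return self._run(tool_input)

    @abstractmethod
    def _run(self, tool_input):
        """Synchronous implementation supplied by each concrete tool."""

    async def _arun(self, tool_input):
        # Default: tools that do not override this have no async support.
        raise NotImplementedError(f"{self.name} does not support async")


class EchoTool(MiniTool):
    name = "Echo"
    description = "Returns its input in upper case"

    def _run(self, tool_input):
        return tool_input.upper()


print(EchoTool().run("hello"))
```

Keeping the name and description on the class is what lets an agent list its tools and decide which one fits a request.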

Reinitializing the Conversational Agent

Next, we need to integrate the new WebPageTool into our conversational agent. This involves adding the tool to the agent’s toolbox and updating the system prompt to instruct the agent to use this tool for fetching webpage content.

Updating the System Prompt

We modify the system prompt to explicitly instruct the assistant to always check webpages when asked for content from a specific URL:

fixed_prompt = '''Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering
simple questions to providing in-depth explanations and discussions on a wide range of
topics. As a language model, Assistant is able to generate human-like text based on the
input it receives, allowing it to engage in natural-sounding conversations and provide
responses that are coherent and relevant to the topic at hand.

Assistant doesn't know anything about random numbers or anything related to the meaning
of life and should use a tool for questions about these topics.

Assistant also doesn't know information about content on webpages and should always
check if asked.

Overall, Assistant is a powerful system that can help with a wide range of tasks and
provide valuable insights and information on a wide range of topics. Whether you need
help with a specific question or just want to have a conversation about a particular
topic, Assistant is here to assist.'''

This prompt clearly instructs the assistant to rely on tools for certain topics, such as random numbers, the meaning of life, and webpage content.

Reinitializing the Agent with the New Tool

Now we can reinitialize the conversational agent with the new WebPageTool included:

from langchain.agents import initialize_agent

tools = [search, random_tool, life_tool, page_getter]

# Reuse the memory from earlier

conversational_agent = initialize_agent(
    agent='chat-conversational-react-description',
    tools=tools,
    llm=turbo_llm,
    verbose=True,
    max_iterations=3,
    early_stopping_method='generate',
    memory=memory
)

# Apply the updated prompt
conversational_agent.agent.llm_chain.prompt.messages[0].prompt.template = fixed_prompt

# Inspect the system message to confirm the change
conversational_agent.agent.llm_chain.prompt.messages[0]

Testing the WebPageTool

We can now test the agent by asking it to fetch content from a specific webpage. For example:

conversational_agent.run("Is there an article about Clubhouse on https://techcrunch.com/ today?")

The agent will:

- Use the WebPageTool to scrape TechCrunch’s homepage.

- Search through the first 4000 characters of content to check if there are any mentions of “Clubhouse.”

- Provide a response based on whether it finds an article related to Clubhouse in that scraped content.
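
One consequence of the 4000-character cap is that a mention further down the page is invisible to the agent. The sketch below demonstrates this on synthetic text; keyword_in_window is a hypothetical helper written for this illustration, and the page text is made up.

```python
def keyword_in_window(text, keyword, limit=4000):
    """True if keyword appears (case-insensitively) within the first `limit` characters."""
    return keyword.lower() in text[:limit].lower()


# Synthetic page text: the keyword only appears after the 4000-character cutoff.
page_text = "menu " * 900 + "Clubhouse launches new feature"

print(keyword_in_window(page_text, "Clubhouse"))                        # truncated view
print(keyword_in_window(page_text, "Clubhouse", limit=len(page_text)))  # full text
```

On navigation-heavy homepages the first few thousand characters are often menus and links, so a negative answer from the agent only means the term was absent from the truncated text.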

Asking for the top stories on CBS News:

conversational_agent.run("What are the titles of the top stories on www.cbsnews.com/?")

The agent will:

- Fetch and process the text content from CBS News’ homepage.

- Extract potential headings or story titles by analyzing text patterns or sections that seem like titles.

- Respond with the top stories or a summary of what it found in the webpage content.
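
Note that the query above gives the address without a scheme (www.cbsnews.com/). If the agent passes that string through unchanged, requests.get rejects it with a missing-schema error, so a small normalization step inside the tool can help. The helper below is a hedged sketch; ensure_scheme is a hypothetical name, and defaulting to https is an assumption.

```python
from urllib.parse import urlparse


def ensure_scheme(url, default_scheme="https"):
    """Prefix a scheme when the URL lacks one, e.g. 'www.cbsnews.com/'."""
    url = url.strip()
    if urlparse(url).scheme in ("http", "https"):
        return url
    # No usable scheme found: prepend the default one.
    return f"{default_scheme}://{url.lstrip('/')}"


print(ensure_scheme("www.cbsnews.com/"))
print(ensure_scheme("https://techcrunch.com/"))
```

Calling such a helper at the top of the tool's _run method would let the agent handle both forms of address.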

Conclusion

We have successfully developed a versatile conversational agent using LangChain that can seamlessly integrate a variety of tools and APIs. By harnessing the capabilities of Large Language Models alongside custom functionalities—such as random number generation, philosophical insights, and dynamic web scraping—this agent demonstrates its ability to address a wide range of user queries. The modular design allows for easy expansion and adaptation, ensuring that the agent can evolve as new tools and features are added. This project not only showcases the power of combining AI with real-time data but also sets the stage for future enhancements, making the agent an invaluable resource for interactive and informative conversations.

Key Takeaways

- Building a conversational agent with LangChain allows for a modular approach, making it easy to integrate various tools and expand functionality.

- The integration of web scraping and search tools enables the agent to provide up-to-date information and respond to current events effectively.

- The ability to create custom tools empowers developers to tailor the agent’s capabilities to specific use cases and user needs.

- Utilizing memory features ensures that the agent can maintain context across conversations, leading to more meaningful and coherent interactions with users.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

Q1. What is LangChain?

A. LangChain is a framework designed for building applications that integrate Large Language Models (LLMs) with various external tools and APIs, enabling developers to create intelligent agents capable of performing complex tasks.

Q2. What can the conversational agent do?

A. The conversational agent can answer general knowledge questions, generate random numbers, provide insights about the meaning of life, and dynamically fetch and extract content from specified webpages.

Q3. How does the agent get up-to-date information?

A. The agent utilizes tools like the DuckDuckGo Search and a custom web scraping tool to retrieve up-to-date information from the web, allowing it to provide accurate and timely responses to user queries about current events.

Q4. Which language and libraries does the project use?

A. The project primarily uses Python, along with libraries such as LangChain for building the agent, OpenAI for accessing the language models, requests for making HTTP requests, and BeautifulSoup for parsing HTML content.

Q5. How can the agent’s capabilities be expanded?

A. You can expand the agent’s capabilities by adding more tools or APIs that serve specific functions, modifying existing tools for better performance, or integrating new data sources to enrich the responses.
