In the age of information overload, it's easy to get lost in the sheer amount of content available online. YouTube offers billions of videos, and the internet is filled with articles, blogs, and academic papers. With so much data, it's often difficult to extract useful insights without spending hours reading and watching. That's where an AI-powered web summarizer comes in.

In this article, we'll build a Streamlit app that uses LangChain and a large language model to produce detailed summaries of YouTube videos and websites. The app calls Meta's Llama 3.2 model through Groq's API and uses LangChain's summarization chains, so readers can grasp the key points of long content without reading or watching all of it.

Learning Outcomes

- Understand the challenges of information overload and the benefits of AI-powered summarization.

- Learn how to build a Streamlit app that summarizes content from YouTube and websites.

- Explore the role of LangChain and Llama 3.2 in generating detailed content summaries.

- Discover how to integrate tools like yt-dlp and UnstructuredURLLoader for multimedia content processing.

- Build a web summarizer with Streamlit and LangChain that produces concise summaries from YouTube video and website URLs.

This article was published as a part of the Data Science Blogathon.


Purpose and Benefits of the Summarizer App

From YouTube videos to web publications and in-depth research articles, a vast repository of information is only a click away. However, most of us don't have the time to watch long videos or read long-form articles in full, and many visitors decide within a few seconds whether a page is worth reading at all. This is the problem a summarizer sets out to solve.

Enter AI-powered summarization: a technique that allows AI models to digest large amounts of content and provide concise, human-readable summaries. This can be particularly useful for busy professionals, students, or anyone who wants to quickly get the gist of a piece of content without spending hours on it.

Components of the Summarization App

Before diving into the code, let’s break down the key elements that make this application work:

- LangChain: This powerful framework simplifies the process of interacting with large language models (LLMs). It provides a standardized way to manage prompts, chain together different language model operations, and access a variety of LLMs.

- Streamlit: This open-source Python library lets us quickly build interactive web applications. Its simplicity makes it a good fit for the frontend of our summarizer.

- yt-dlp: When summarizing YouTube videos, the yt-dlp library (imported in Python as yt_dlp) extracts metadata such as the title and description without downloading the video. It is an actively maintained fork of youtube-dl that supports a wide range of sites and formats, which makes it a convenient way to get video details to feed into the LLM for summarization.

- UnstructuredURLLoader: This LangChain utility helps us load and process content from websites. It handles the complexities of fetching web pages and extracting their textual information.
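To make "extracting their textual information" concrete, here is a deliberately simplified sketch that uses only Python's standard library: it parses HTML and keeps the visible text while skipping script and style blocks. It is an illustrative stand-in, not how UnstructuredURLLoader or the underlying unstructured library actually work (they also fetch the page and partition documents into typed elements such as titles and narrative text). The names _VisibleTextParser and extract_visible_text are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class _VisibleTextParser(HTMLParser):
    """Collects text nodes, ignoring anything inside <script> or <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_visible_text(html: str) -> str:
    """Return the page's visible text, one text node per line (hypothetical helper)."""
    parser = _VisibleTextParser()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


page = "<html><body><h1>Title</h1><p>Hello <b>world</b></p><script>x=1</script></body></html>"
print(extract_visible_text(page))
```

A real loader has to deal with much messier pages (navigation menus, cookie banners, PDFs), which is why the app delegates this step to a dedicated library.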

Building the App: Step-by-Step Guide

In this section, we'll walk through each stage of building the AI summarization app: setting up the environment, designing the user interface, implementing the summarization logic, and testing the app on sample inputs.

Note: Get the requirements.txt file and the full code on GitHub here.

Importing Libraries and Loading Environment Variables

This step imports the libraries the app needs (Streamlit, LangChain, yt-dlp, and a few helpers) and loads environment variables, so that secrets such as the Groq API key stay out of the source code.

```python
import os

import validators
import streamlit as st
from langchain.prompts import PromptTemplate
from langchain_groq import ChatGroq
from langchain.chains.summarize import load_summarize_chain
from langchain_community.document_loaders import UnstructuredURLLoader
from yt_dlp import YoutubeDL
from dotenv import load_dotenv
from langchain.schema import Document

# Read variables from a local .env file into the process environment
load_dotenv()

groq_api_key = os.getenv("GROQ_API_KEY")
```

This section imports the libraries and loads the API key from a .env file, which keeps sensitive information such as API keys out of the source code.

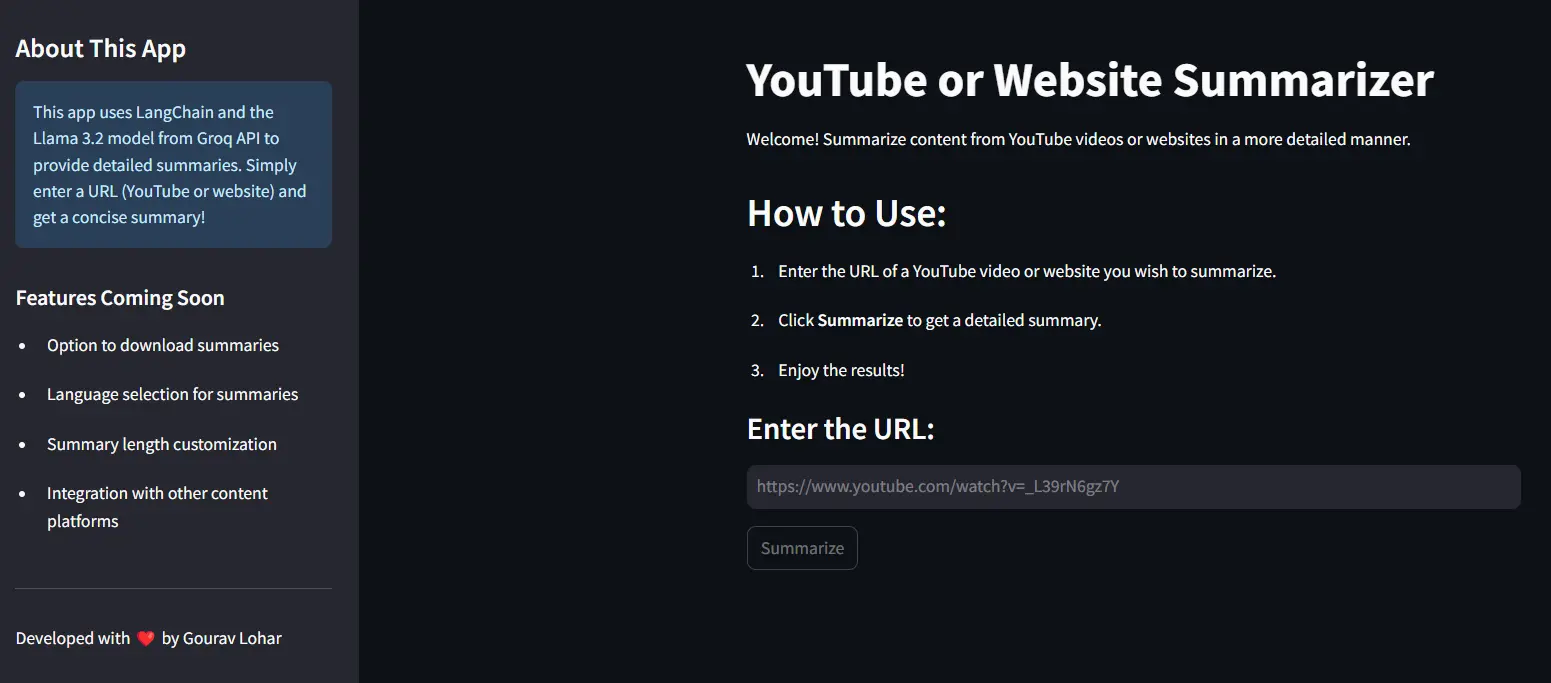
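If GROQ_API_KEY is missing, os.getenv silently returns None, and the problem only surfaces later when the first API call fails. A small guard makes it obvious at startup. This is a minimal sketch: require_env is a hypothetical helper, not part of python-dotenv or LangChain.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value, or raise a clear error if it is unset or blank."""
    value = os.getenv(name)
    if value is None or not value.strip():
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file or export it in your shell."
        )
    return value


# Usage, after load_dotenv() has populated os.environ:
# groq_api_key = require_env("GROQ_API_KEY")
```

In the Streamlit app, you could instead catch the RuntimeError and show it with st.error(...) followed by st.stop(), so the page explains what is missing rather than crashing.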

Designing the Frontend with Streamlit

In this step, we’ll create an interactive and user-friendly interface for the app using Streamlit. This includes adding input forms, buttons, and displaying outputs, allowing users to seamlessly interact with the backend functionalities.

```python
# Page configuration, title, and welcome text
st.set_page_config(page_title="LangChain Enhanced Summarizer", page_icon="🌟")
st.title("YouTube or Website Summarizer")
st.write("Welcome! Summarize content from YouTube videos or websites in a more detailed manner.")

# Sidebar panel describing the app
st.sidebar.title("About This App")
st.sidebar.info(
    "This app uses LangChain and the Llama 3.2 model from Groq API to provide detailed summaries. "
    "Simply enter a URL (YouTube or website) and get a concise summary!"
)

# Short usage instructions
st.header("How to Use:")
st.write("1. Enter the URL of a YouTube video or website you wish to summarize.")
st.write("2. Click **Summarize** to get a detailed summary.")
st.write("3. Enjoy the results!")
```

These lines set the page configuration, title, and welcome text for the main UI, add an "About This App" panel to the sidebar, and list short usage instructions.

Text Input for URL and Model Loading

Here, we add a text input field where users enter the URL to summarize. We also create the Groq chat model client and the prompt template that the summarization step will use.

```python
st.subheader("Enter the URL:")
generic_url = st.text_input("URL", label_visibility="collapsed", placeholder="https://example.com")
```

Users enter the URL (YouTube or website) they want summarized in this text input field.

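```python
# Groq-hosted Llama 3.2 chat model. Preview model IDs can be retired over time;
# if this one is rejected, substitute a current ID from Groq's model list.
llm = ChatGroq(model="llama-3.2-11b-text-preview", groq_api_key=groq_api_key)

# {text} is filled in by the summarization chain with the loaded content
prompt_template = """
Provide a detailed summary of the following content in 300 words:
Content: {text}
"""
prompt = PromptTemplate(template=prompt_template, input_variables=["text"])
```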

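The prompt template asks the model for a detailed summary of about 300 words; LLMs treat such length targets as approximate rather than exact. The summarization chain inserts the loaded content into the {text} placeholder, so the template guides every summary the app produces.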
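With chain_type="stuff", the summarize chain combines all loaded documents into the prompt's single {text} variable and makes one LLM call. The standard-library sketch below imitates that flow so you can see what the model receives. Doc, stuff_prompt, summarize_stuff, and fake_llm are hypothetical stand-ins rather than LangChain classes, and joining documents with a blank line is an assumption about the chain's default separator.

```python
from dataclasses import dataclass
from typing import Callable, List

PROMPT_TEMPLATE = """
Provide a detailed summary of the following content in 300 words:
Content: {text}
"""


@dataclass
class Doc:
    """Hypothetical stand-in for LangChain's Document: just the text payload."""
    page_content: str


def stuff_prompt(docs: List[Doc], template: str = PROMPT_TEMPLATE) -> str:
    """Concatenate every document and place the result in the {text} slot."""
    combined = "\n\n".join(doc.page_content for doc in docs)
    return template.format(text=combined)


def summarize_stuff(docs: List[Doc], llm: Callable[[str], str]) -> str:
    """One prompt, one LLM call: the essence of the "stuff" strategy."""
    return llm(stuff_prompt(docs))


def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call; reports how many words it received
    return f"[summary of a {len(prompt.split())}-word prompt]"


docs = [Doc("Video title"), Doc("Video description text.")]
print(stuff_prompt(docs))
print(summarize_stuff(docs, fake_llm))
```

Because everything lands in one prompt, a very long page can exceed the model's context window; that is the case where splitting the text into chunks first becomes necessary.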

Defining Function to Load YouTube Content

In this step, we define a function that takes a YouTube URL, fetches the video's metadata, and returns it as text for the LLM to summarize.

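```python
def load_youtube_content(url):
    """Return a YouTube video's title and description as one string."""
    ydl_opts = {'format': 'bestaudio/best', 'quiet': True}
    with YoutubeDL(ydl_opts) as ydl:
        # download=False fetches metadata only; no media file is saved
        info = ydl.extract_info(url, download=False)
    # `or` also covers fields that are present but empty or None
    title = info.get("title") or "Video"
    description = info.get("description") or "No description available."
    return f"{title}\n\n{description}"
```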

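This function uses yt_dlp to extract the video's information without downloading the video itself. It returns the title and description, which the LLM then summarizes. Note that the spoken content (audio or transcript) is not used, so the summary can only be as detailed as the video's description.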
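yt-dlp's extract_info(url, download=False) returns a plain Python dictionary, so the step that turns it into text can be checked offline. Keep in mind that dict.get(key, default) falls back only when the key is absent, not when it is present with a None value, so an "or" fallback is the safer pattern. The sketch below uses a made-up dictionary in place of real yt-dlp output, and format_video_info is a hypothetical helper.

```python
from typing import Any, Dict


def format_video_info(info: Dict[str, Any]) -> str:
    """Build the text handed to the LLM from a yt-dlp style info dict."""
    # `or` also covers keys that are present but set to None or ""
    title = info.get("title") or "Video"
    description = info.get("description") or "No description available."
    return f"{title}\n\n{description}"


# Made-up sample shaped like a small subset of yt-dlp's info dict
sample = {"title": "Sample talk", "description": None}
print(format_video_info(sample))
```

Keeping this formatting separate from the network call also makes it easy to unit-test without hitting YouTube.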

Handling the Summarization Logic

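```python
if st.button("Summarize"):
    if not generic_url.strip():
        st.error("Please provide a URL to proceed.")
    elif not validators.url(generic_url):
        st.error("Please enter a valid URL (YouTube or website).")
    else:
        try:
            with st.spinner("Processing..."):
                # Load content from the URL (youtu.be is YouTube's short-link domain)
                if "youtube.com" in generic_url or "youtu.be" in generic_url:
                    # Load YouTube metadata as a string and wrap it in a Document
                    text_content = load_youtube_content(generic_url)
                    docs = [Document(page_content=text_content)]
                else:
                    # Fetch the web page and extract its text.
                    # ssl_verify=False skips certificate checks; enable verification if you can.
                    loader = UnstructuredURLLoader(
                        urls=[generic_url],
                        ssl_verify=False,
                        headers={"User-Agent": "Mozilla/5.0"},
                    )
                    docs = loader.load()

                # Summarize using LangChain; "stuff" puts all docs into one prompt.
                # invoke() replaces the deprecated chain.run() in recent LangChain versions.
                chain = load_summarize_chain(llm, chain_type="stuff", prompt=prompt)
                output_summary = chain.invoke({"input_documents": docs})["output_text"]

                st.subheader("Detailed Summary:")
                st.success(output_summary)
        except Exception as e:
            # st.error accepts a plain message string
            st.error(f"Exception occurred: {e}")
```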

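After validating the input, the app branches on the type of URL:

- If it's a YouTube link, load_youtube_content extracts the title and description, wraps them in a Document, and stores it in docs.

- If it's a website, UnstructuredURLLoader fetches the page and returns its text as docs.

Running the Summarization Chain: The LangChain summarization chain with chain_type="stuff" places all of the loaded content into the prompt template's {text} slot and sends it to the LLM in a single call to generate the summary. Any error along the way is caught and shown in the app.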
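Deciding between the YouTube and website branches with a substring test on the raw URL is fragile: it can fire on a URL that merely mentions YouTube in its query string, and every short-link domain has to be listed by hand. Below is a sketch of a stricter check that compares the parsed host name instead, using only the standard library. classify_url and YOUTUBE_HOSTS are hypothetical names, and the host list is an assumption you may need to extend.

```python
from typing import Optional
from urllib.parse import urlparse

# Hosts routed to the YouTube branch. This set is an assumption; extend it if needed.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com", "youtu.be"}


def classify_url(url: str) -> Optional[str]:
    """Return "youtube", "website", or None for input that is not an http(s) URL with a host."""
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return None
    # urlparse lowercases the scheme and .hostname, so these checks are case-insensitive
    if parsed.hostname in YOUTUBE_HOSTS:
        return "youtube"
    return "website"


for candidate in ["https://youtu.be/abc123", "https://example.com/?ref=youtube.com", "not a url"]:
    print(candidate, "->", classify_url(candidate))
```

In the app, you could call classify_url(generic_url) after the validators.url check and branch on its result instead of the substring test.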

Streamlit Footer Code

To round off the app, we add a section at the bottom of the sidebar that lists planned features, followed by a divider and a credit line.

```python
# Sidebar "footer": planned features and a credit line
st.sidebar.header("Features Coming Soon")
st.sidebar.write("- Option to download summaries")
st.sidebar.write("- Language selection for summaries")
st.sidebar.write("- Summary length customization")
st.sidebar.write("- Integration with other content platforms")
st.sidebar.markdown("---")
st.sidebar.write("Developed with ❤️ by Gourav Lohar")
```

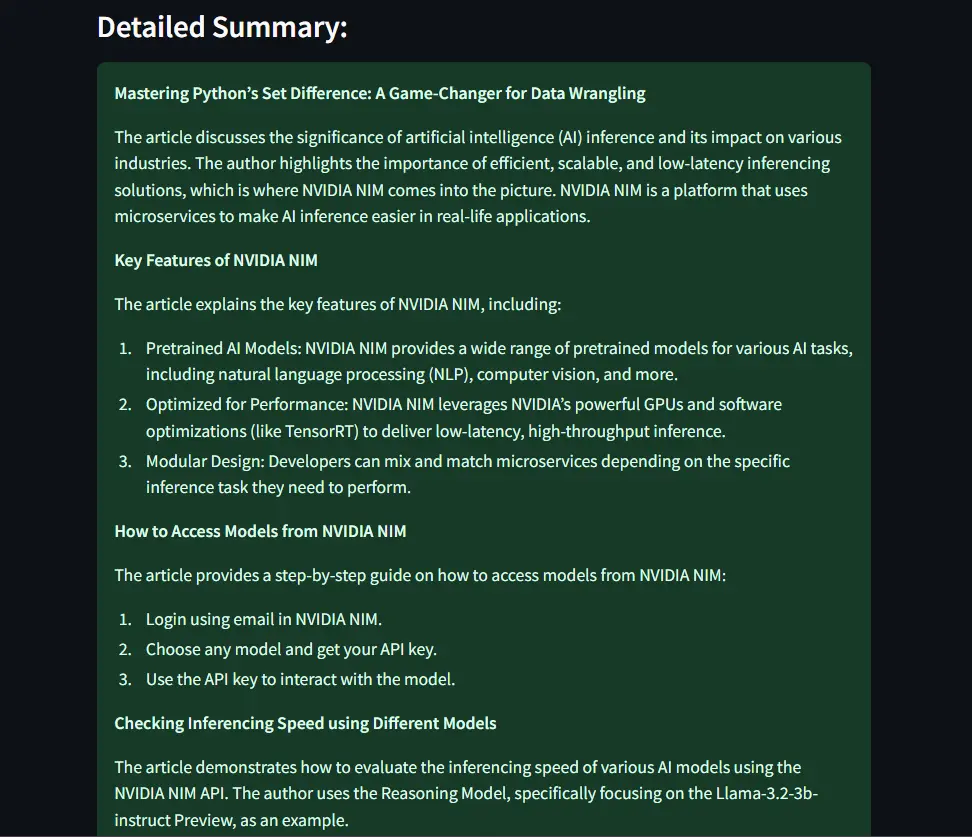

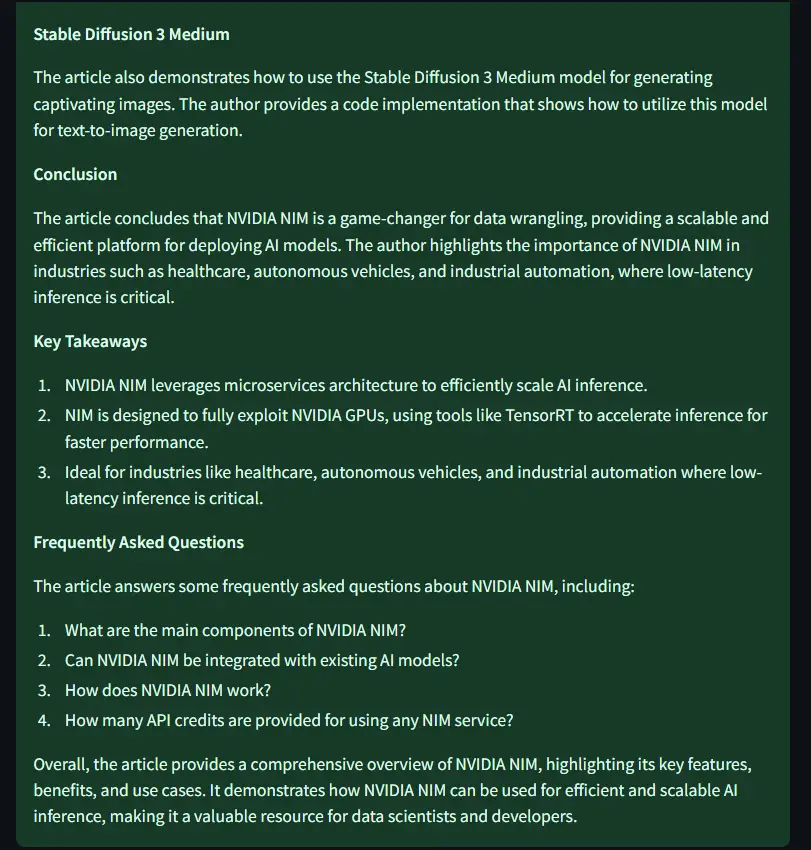

Output

Input: https://www.analyticsvidhya.com/blog/2024/10/nvidia-nim/

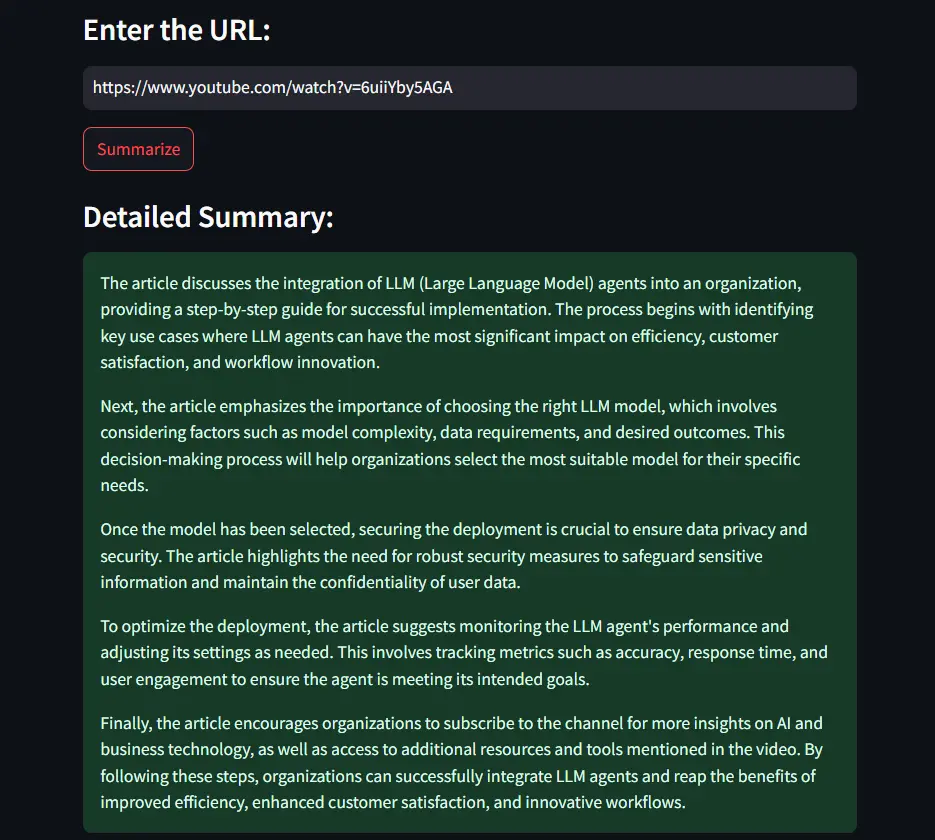

YouTube Video Summarizer

Input Video:

Conclusion

By leveraging LangChain’s framework, we streamlined the interaction with the powerful Llama 3.2 language model, enabling the generation of high-quality summaries. Streamlit facilitated the development of an intuitive and user-friendly web application, making the summarization tool accessible and engaging.

Overall, this article offers a practical approach and useful insights into building a comprehensive summarization tool. By combining capable language models with efficient frameworks and user-friendly interfaces, we can make information easier to consume and knowledge easier to acquire in today's content-rich world.

Key Takeaways

- LangChain makes development easier by offering a consistent approach to interact with language models, manage prompts, and chain processes.

- The Llama 3.2 model, served through Groq's API, handles understanding and condensing information well, producing readable, concise summaries.

- Integrating tools like yt-dlp and UnstructuredURLLoader allows the application to handle content from various sources like YouTube and web articles easily.

- The web summarizer uses LangChain and Streamlit to provide quick and accurate summaries from YouTube videos and websites.

- By leveraging the Llama 3.2 model, the web summarizer efficiently condenses complex content into easy-to-understand summaries.

Frequently Asked Questions

Q1. What is LangChain, and why is it used in this app?

A. LangChain is a framework that simplifies interacting with large language models. It helps manage prompts, chain operations, and access various LLMs, making it easier to build applications like this summarizer.

Q2. Why use Llama 3.2 for summarization?

A. Llama 3.2 produces fluent text and is good at understanding and condensing information, which makes it well suited to summarization tasks. Its model weights are also openly available.

Q3. Can the app summarize any video or article?

A. It handles a wide range of content, but limitations exist. Extremely long videos or articles might require additional features, such as audio transcription or text splitting, for optimal summaries.
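For content that is too long for one prompt, the usual approach is to split the text into chunks, summarize each chunk, then summarize the partial summaries (the "map-reduce" pattern; LangChain provides text splitters and a chain_type="map_reduce" option for load_summarize_chain). Below is a standard-library sketch of the idea, assuming a character budget stands in for a token limit. split_text, map_reduce_summarize, and the llm callable are hypothetical, and a real app would use a token-aware splitter.

```python
from typing import Callable, List


def split_text(text: str, max_chars: int = 2000, overlap: int = 200) -> List[str]:
    """Split text into chunks of at most max_chars characters; neighbours share `overlap` chars."""
    if max_chars <= overlap:
        raise ValueError("max_chars must be larger than overlap")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        start = end - overlap  # step back so context spans chunk boundaries
    return chunks


def map_reduce_summarize(text: str, llm: Callable[[str], str], max_chars: int = 2000) -> str:
    """Summarize each chunk (map), then merge the partial summaries (reduce)."""
    chunks = split_text(text, max_chars=max_chars)
    if len(chunks) <= 1:
        # Short input: a single call is enough
        return llm(f"Summarize the following content:\n{text}")
    partials = [llm(f"Summarize this part of a longer document:\n{chunk}") for chunk in chunks]
    return llm("Combine these partial summaries into one detailed summary:\n" + "\n\n".join(partials))


def echo_llm(prompt: str) -> str:
    # Stand-in for a real model: returns the first line of the prompt
    return prompt.splitlines()[0]


print(len(split_text("x" * 5000)))
print(map_reduce_summarize("x" * 5000, echo_llm))
```

For very long inputs, the combined partial summaries can themselves exceed the limit; a fuller implementation would repeat the reduce step until the text fits.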

Q4. Are summaries limited to English?

A. Currently, yes. However, future enhancements could include language selection for broader applicability.

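Q5. How can I run this app myself?

A. Run the provided code in a Python environment with the necessary libraries installed; as a Streamlit app, it is launched with streamlit run followed by the script's file name. Check GitHub for the full code and requirements.txt.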

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.