Unlocking the power of domain-specific Large Language Models like Microsoft Phi-4 requires the ability to fine-tune these models for specialized tasks. Fine-tuning Phi-4 on custom datasets helps tailor the model to perform optimally in specific domains, such as customer support, medical advice, or technical documentation. By leveraging LoRA (Low-Rank Adaptation) adapters, this process becomes more efficient, allowing for faster training and reduced resource consumption. This guide will walk you through the essential steps to fine-tune Phi-4 using LoRA adapters, integrate the model with Hugging Face for easy sharing, and apply the latest techniques to get the most out of your custom LLM.

Learning Objectives

- Learn how to fine-tune Microsoft Phi-4 using LoRA adapters for domain-specific tasks.

- Understand the setup process and configuration for loading Phi-4 efficiently with 4-bit quantization.

- Gain proficiency in preparing datasets and transforming them for fine-tuning with Hugging Face and unsloth.

- Master training techniques using Hugging Face’s SFTTrainer to optimize model performance.

- Explore how to monitor GPU usage and save/upload fine-tuned models to Hugging Face for deployment.

This article was published as a part of the Data Science Blogathon.

Prerequisites

Before diving into fine-tuning Phi-4, ensure you have the necessary tools and environment configured. This includes installing Python 3.8+, PyTorch with CUDA support for GPU acceleration, and the unsloth library, along with Hugging Face Transformers and Datasets for seamless dataset handling and model integration. Having these prerequisites in place will ensure a smooth and efficient fine-tuning process.

Ensure you have the following installed:

- Python 3.8+

- PyTorch (with CUDA support for GPU acceleration)

- unsloth

- Hugging Face Transformers and Datasets

Install the required libraries with:

pip install unsloth

pip install --force-reinstall --no-cache-dir --no-deps git+https://github.com/unslothai/unsloth.git

Fine-Tuning Phi-4: A Step-by-Step Guide

This section covers all the essential steps involved in fine-tuning Microsoft Phi-4, from setting up the environment to pushing the fine-tuned model to Hugging Face. It includes configuring the model, preparing the dataset, training, monitoring GPU usage, generating responses, and saving/uploading the model.

Step 1: Setting Up the Model

We begin by importing the dependencies and loading the model:

Load the Model with LoRA Adapters

LoRA adapters enable parameter-efficient fine-tuning: the pretrained weights stay frozen, and only small low-rank matrices added to selected layers are trained.

Importing Dependencies

from unsloth import FastLanguageModel

import torch

- FastLanguageModel: A utility class from the unsloth library for working with language models, including loading and fine-tuning.

- torch: PyTorch library for deep learning operations, providing GPU acceleration.

Configuration Settings

max_seq_length = 2048

load_in_4bit = True

- max_seq_length: Specifies the maximum input sequence length in tokens. Larger values allow longer conversations but require more GPU memory.

- load_in_4bit: Loads the model with 4-bit quantization, which substantially reduces the memory needed to hold the weights.
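To get a feel for why 4-bit loading matters, the following back-of-the-envelope sketch estimates the memory needed for the weights alone. It assumes Phi-4 has roughly 14 billion parameters, and it ignores quantization metadata, activations, and optimizer state, so real usage will be higher. The function name is illustrative and not part of unsloth.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    total_bytes = n_params * bits_per_param / 8
    return total_bytes / (1024 ** 3)


# Assumption: Phi-4 has roughly 14 billion parameters.
N_PARAMS = 14e9

for bits in (16, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gib(N_PARAMS, bits):.1f} GiB")
```

Under these assumptions, 16-bit weights need about four times the memory of 4-bit weights, which is often the difference between fitting on a single GPU or not.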

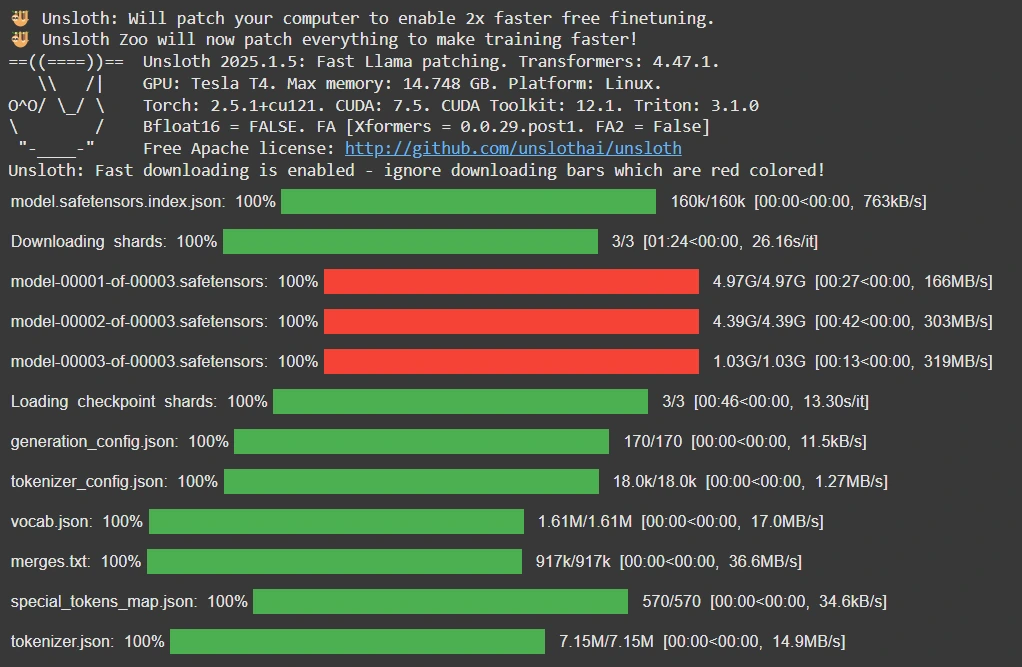

Loading the Phi-4 Model

model, tokenizer = FastLanguageModel.from_pretrained(

model_name="unsloth/Phi-4",

max_seq_length=max_seq_length,

load_in_4bit=load_in_4bit,

)

- model_name: Refers to the pre-trained Phi-4 model hosted by unsloth.

- from_pretrained: Downloads and initializes the model and tokenizer with the specified configurations.

Applying LoRA Adapters

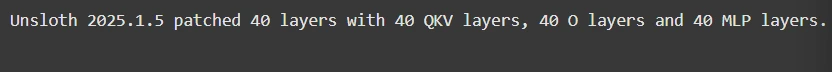

model = FastLanguageModel.get_peft_model(

model,

r=16,

target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],

lora_alpha=16,

lora_dropout=0,

bias="none",

use_gradient_checkpointing="unsloth",

random_state=3407,

)

- get_peft_model: A method to integrate LoRA adapters into the model for parameter-efficient fine-tuning.

- r=16: Sets the rank of the LoRA layers, controlling the dimensionality of the additional trainable parameters.

- target_modules: Specifies the model layers where LoRA adapters will be applied. These layers correspond to key components of the model’s transformer architecture.

- lora_alpha: A scaling factor for the LoRA update. In standard LoRA the update is multiplied by lora_alpha / r, so with both set to 16 the scale is 1.

- lora_dropout: Dropout probability for regularization; set to 0 for no dropout.

- bias="none": Indicates that no bias terms are trained alongside the adapters.

- use_gradient_checkpointing: Activates gradient checkpointing to reduce memory usage during backpropagation.

- random_state=3407: Ensures reproducibility by fixing the random seed.
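To make the effect of r concrete, here is a small sketch that counts the parameters LoRA adds to a single linear layer and computes the update scale. The layer dimensions are hypothetical and chosen only for illustration; they are not Phi-4's actual sizes, and the helper names are not part of unsloth.

```python
def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in linear layer.

    LoRA learns two matrices, A (r x d_in) and B (d_out x r), while the
    original weight matrix stays frozen.
    """
    return r * d_in + d_out * r


def lora_scale(lora_alpha: float, r: int) -> float:
    """Factor applied to the low-rank update in standard LoRA."""
    return lora_alpha / r


# Hypothetical layer size, for illustration only.
d_in, d_out, r = 4096, 4096, 16
full = d_in * d_out
added = lora_param_count(d_in, d_out, r)

print(f"frozen weights: {full:,}")
print(f"LoRA weights:   {added:,} ({added / full:.2%} of the layer)")
print(f"update scale:   {lora_scale(16, r)}")
```

Doubling r doubles the number of trainable parameters per adapted layer, so r is the main knob for trading capacity against memory.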

Step 2: Preparing the Dataset

We use the FineTome-100k dataset in ShareGPT format. The unsloth library provides utilities to convert this format into Hugging Face’s generic format for multi-turn conversations.

Load the Dataset

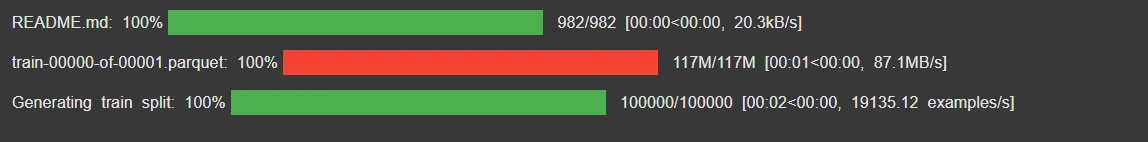

from datasets import load_dataset

from unsloth.chat_templates import standardize_sharegpt, get_chat_template

dataset = load_dataset("mlabonne/FineTome-100k", split="train")

Hugging Face's datasets library loads the mlabonne/FineTome-100k dataset; the split="train" argument ensures that only the training split is loaded.

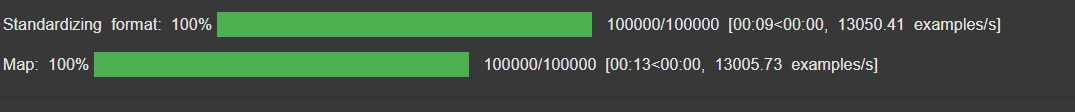

Standardize the Dataset

dataset = standardize_sharegpt(dataset)

The standardize_sharegpt function from the unsloth.chat_templates module converts the ShareGPT-style records into Hugging Face's generic role/content format for multi-turn conversations, which is the format the chat template expects.
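The conversion can be pictured with a toy example. This is only a sketch of the idea, not unsloth's implementation: it assumes ShareGPT records use "from" and "value" keys with speakers named "system", "human", and "gpt", and the helper name to_role_content is made up for this illustration.

```python
# Assumed mapping from ShareGPT speaker names to chat roles.
ROLE_MAP = {"system": "system", "human": "user", "gpt": "assistant"}


def to_role_content(conversation):
    """Convert one ShareGPT-style conversation to role/content messages."""
    return [
        {"role": ROLE_MAP[turn["from"]], "content": turn["value"]}
        for turn in conversation
    ]


sharegpt_example = [
    {"from": "human", "value": "What is 2 + 2?"},
    {"from": "gpt", "value": "2 + 2 equals 4."},
]

print(to_role_content(sharegpt_example))
```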

Apply Phi-4 Chat Template

tokenizer = get_chat_template(tokenizer, chat_template="phi-4")

The get_chat_template function customizes the tokenizer to use the "phi-4" chat template. This ensures the prompts and conversations align with Phi-4's format.

Format Prompts for Training

def formatting_prompts_func(examples):

    # Render every conversation in the batch as a single formatted string.

    texts = [

        tokenizer.apply_chat_template(convo, tokenize=False, add_generation_prompt=False)

        for convo in examples["conversations"]

    ]

    return {"text": texts}

The formatting_prompts_func processes each example in the dataset:

- The examples["conversations"] field contains conversation data.

- Each conversation (convo) is passed through tokenizer.apply_chat_template.

- tokenize=False ensures the output is not tokenized yet.

- add_generation_prompt=False avoids appending generation-specific tokens to the prompts at this stage.

- The formatted text is stored under the "text" field.
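The steps above can be mimicked without a tokenizer. The sketch below uses a toy render_chat function as a stand-in for tokenizer.apply_chat_template. The user and assistant markers are the ones used later in this guide; the `<|im_end|>` end-of-turn marker is an assumption, and the real Phi-4 template may differ in its details.

```python
def render_chat(messages, add_generation_prompt=False):
    """Toy stand-in for tokenizer.apply_chat_template(..., tokenize=False)."""
    text = "".join(
        f"<|im_start|>{m['role']}<|im_sep|>{m['content']}<|im_end|>"
        for m in messages
    )
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        text += "<|im_start|>assistant<|im_sep|>"
    return text


def format_batch(examples):
    """Mirror of formatting_prompts_func: takes a batch as a dict of lists."""
    return {"text": [render_chat(convo) for convo in examples["conversations"]]}


batch = {
    "conversations": [
        [
            {"role": "user", "content": "Hi"},
            {"role": "assistant", "content": "Hello!"},
        ]
    ]
}

print(format_batch(batch)["text"][0])
```

For training, the full conversation (including the assistant's answer) is rendered, so add_generation_prompt stays False; it is only switched on at inference time.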

Map Function to Dataset

dataset = dataset.map(formatting_prompts_func, batched=True)

The map function applies formatting_prompts_func to the entire dataset in batches. This efficiently preprocesses the dataset to prepare it for fine-tuning.

We look at how the conversations are structured for item 5:

dataset[5]["conversations"]

Step 3: Fine-Tuning the Model

Fine-tuning the Model involves training Phi-4 with Hugging Face’s SFTTrainer, optimizing the process with custom settings and efficient data handling.

Training with SFTTrainer

We use Hugging Face’s SFTTrainer to train the model. Below is a minimal setup for efficient training:

from trl import SFTTrainer

from transformers import TrainingArguments, DataCollatorForSeq2Seq

from unsloth import is_bfloat16_supported

- SFTTrainer: A specialized trainer for supervised fine-tuning of language models.

- TrainingArguments: Defines training hyperparameters such as batch size, learning rate, and number of steps.

- DataCollatorForSeq2Seq: Pads the input IDs and labels in each batch to a common length.

- is_bfloat16_supported: Checks whether the GPU supports bfloat16, a 16-bit floating-point format used for mixed-precision training.

trainer = SFTTrainer(

model=model,

tokenizer=tokenizer,

train_dataset=dataset,

dataset_text_field="text",

max_seq_length=max_seq_length,

data_collator=DataCollatorForSeq2Seq(tokenizer=tokenizer),

dataset_num_proc=2,

args=TrainingArguments(

per_device_train_batch_size=2,

gradient_accumulation_steps=4,

warmup_steps=5,

max_steps=30,

learning_rate=2e-4,

fp16=not is_bfloat16_supported(),

bf16=is_bfloat16_supported(),

logging_steps=1,

optim="adamw_8bit",

weight_decay=0.01,

output_dir="outputs",

report_to="none",

),

)

Trainer Initialization:

- model and tokenizer: The language model and its tokenizer are passed in, typically pre-configured.

- train_dataset: The dataset used for training, formatted into chat-template text earlier; tokenization happens inside the trainer.

- dataset_text_field: Specifies the field in the dataset containing the text.

- max_seq_length: The maximum sequence length for tokenized inputs.

- data_collator: Ensures input data is properly batched and padded.

- dataset_num_proc: Parallelizes dataset processing for efficiency.

Training Arguments:

- per_device_train_batch_size: Batch size for each device during training (set to 2 here).

- gradient_accumulation_steps: Simulates a larger batch size by accumulating gradients over multiple steps.

- warmup_steps: Steps for learning rate warmup, helping stabilize training.

- max_steps: Total number of training steps (30 here, indicating a short training run).

- learning_rate: Learning rate for the optimizer.

- fp16 and bf16: Enable mixed precision (FP16 or BF16) based on hardware support for faster and memory-efficient training.

- logging_steps: Frequency of logging during training.

- optim: Optimizer choice; adamw_8bit reduces memory usage.

- weight_decay: Regularization parameter to prevent overfitting.

- output_dir: This directory saves the model checkpoints and logs.

- report_to: Disables reporting to external tracking tools (e.g., WandB).
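The interaction between batch size, gradient accumulation, and max_steps is easy to work out by hand. The sketch below does the arithmetic for the values used above, assuming a single GPU; the helper names are illustrative only.

```python
def effective_batch_size(per_device, grad_accum_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device * grad_accum_steps * num_devices


def examples_seen(max_steps, per_device, grad_accum_steps, num_devices=1):
    """Total examples processed over the whole run."""
    return max_steps * effective_batch_size(per_device, grad_accum_steps, num_devices)


# Values from the TrainingArguments above, on an assumed single GPU.
batch = effective_batch_size(per_device=2, grad_accum_steps=4)
seen = examples_seen(max_steps=30, per_device=2, grad_accum_steps=4)

print(f"effective batch size: {batch}")
print(f"examples seen in 30 steps: {seen}")
```

With these settings the run touches only a few hundred conversations, which is why it suits a quick smoke test rather than a full fine-tune; raise max_steps or switch to epoch-based training for a real run.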

Purpose:

This setup efficiently fine-tunes a large model on a custom dataset, focusing on:

- Memory optimization (e.g., mixed precision, 8-bit optimizers).

- Efficient training configurations with a small batch size and gradient accumulation.

- Short, lightweight training for quick experimentation or domain adaptation.

We can also use Unsloth’s train_on_completions method to only train on the assistant outputs and ignore the loss on the user’s inputs.

from unsloth.chat_templates import train_on_responses_only

trainer = train_on_responses_only(

trainer,

instruction_part="<|im_start|>user<|im_sep|>",

response_part="<|im_start|>assistant<|im_sep|>",

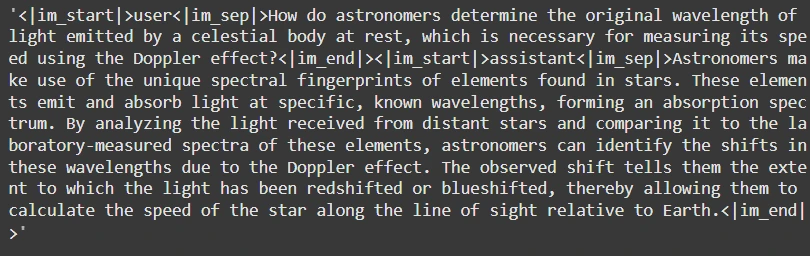

)Let’s verify masking is actually done:

tokenizer.decode(trainer.train_dataset[5]["input_ids"])

space = tokenizer(" ", add_special_tokens = False).input_ids[0]

tokenizer.decode([space if x == -100 else x for x in trainer.train_dataset[5]["labels"]])

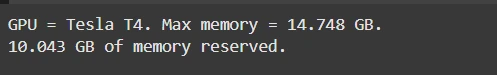
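Conceptually, masking replaces the label of every token outside the assistant's turns with -100, the value PyTorch's cross-entropy loss ignores by default. The following is a simplified sketch of that idea, not Unsloth's implementation: the token IDs are made up, and the per-token role list stands in for the marker matching the real utility performs.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default


def mask_non_assistant(token_ids, roles):
    """Return labels in which only assistant tokens contribute to the loss."""
    return [
        tok if role == "assistant" else IGNORE_INDEX
        for tok, role in zip(token_ids, roles)
    ]


# Made-up token IDs: three user tokens followed by two assistant tokens.
token_ids = [101, 102, 103, 201, 202]
roles = ["user", "user", "user", "assistant", "assistant"]

labels = mask_non_assistant(token_ids, roles)
print(labels)
```

The input IDs are left untouched, so the model still sees the user's prompt as context; only the loss computation skips it.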

Step 4: Monitoring GPU Usage

Record GPU memory usage before training so that it can be compared with the peak afterwards:

import torch

gpu_stats = torch.cuda.get_device_properties(0)

start_gpu_memory = round(torch.cuda.max_memory_reserved() / 1024 / 1024 / 1024, 3)

max_memory = round(gpu_stats.total_memory / 1024 / 1024 / 1024, 3)

print(f"GPU = {gpu_stats.name}. Max memory = {max_memory} GB.")

print(f"{start_gpu_memory} GB of memory reserved.")

Then start the training run:

trainer_stats = trainer.train()
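Once training has finished, the same counters can be read again to see how much memory the run itself consumed. The sketch below keeps the arithmetic in a plain function so it runs without a GPU; the byte counts are made up, and the function name memory_report is illustrative.

```python
GIB = 1024 ** 3


def memory_report(start_reserved, peak_reserved, total):
    """Summarize GPU memory figures given in bytes."""
    used_for_training = peak_reserved - start_reserved
    return {
        "peak_gb": round(peak_reserved / GIB, 3),
        "training_gb": round(used_for_training / GIB, 3),
        "peak_pct": round(100 * peak_reserved / total, 1),
        "training_pct": round(100 * used_for_training / total, 1),
    }


# Made-up byte counts for a 16 GiB card. On a real GPU, read the reserved
# values from torch.cuda.max_memory_reserved() before and after training,
# and the total from torch.cuda.get_device_properties(0).total_memory.
report = memory_report(start_reserved=8 * GIB, peak_reserved=12 * GIB, total=16 * GIB)
print(report)
```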

Step 5: Inference

Generate responses using the fine-tuned model:

Defining the Input Messages:

from unsloth.chat_templates import get_chat_template

tokenizer = get_chat_template(

tokenizer,

chat_template = "phi-4",

)

FastLanguageModel.for_inference(model) # Enable native 2x faster inference

messages = [

{"role": "user", "content": "Continue the Fibonacci sequence: 1, 1, 2, 3, 5, 8,"},

]

The input is structured as a list of message dictionaries. Each dictionary specifies the role (e.g., "user") and the content (e.g., the user's query).

This approach supports multi-turn conversations, aligning with the model's chat-based functionality.

Preprocessing Inputs with the Tokenizer

inputs = tokenizer.apply_chat_template(

messages,

tokenize=True,

add_generation_prompt=True,

return_tensors="pt",

).to("cuda")

- apply_chat_template: Prepares the input for the Phi-4 model using the tokenizer and ensures compatibility with the chat format.

Parameters:

- tokenize=True: Converts text into token IDs.

- add_generation_prompt=True: Appends the opening of an assistant turn so that the model starts writing a response.

- return_tensors="pt": Returns the token IDs as a PyTorch tensor.

- .to("cuda"): Moves the data to the GPU for accelerated computation.

Generating Text:

outputs = model.generate(

input_ids=inputs, max_new_tokens=64, use_cache=True, temperature=1.5, min_p=0.1

)

Parameters:

- input_ids=inputs: The tokenized input.

- max_new_tokens=64: Limits the length of the generated output to 64 tokens.

- use_cache=True: Speeds up generation by using cached activations.

- temperature=1.5: Controls randomness in output (higher values = more creative, less deterministic).

- min_p=0.1: Discards tokens whose probability is below 10% of the most likely token's probability, which keeps high-temperature sampling coherent.
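The interplay between temperature and min_p is easier to see on a tiny distribution. The following is a minimal pure-Python sketch of the two ideas, not the code path that model.generate actually runs; the probabilities and logits are invented for the example.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn logits into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_filter(probs, min_p):
    """Zero out tokens below min_p times the top probability, then renormalize."""
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    total = sum(kept)
    return [p / total for p in kept]


# Invented next-token probabilities for four candidate tokens.
probs = [0.5, 0.3, 0.15, 0.05]
filtered = min_p_filter(probs, min_p=0.2)  # threshold = 0.2 * 0.5 = 0.1
print(filtered)

# A higher temperature lowers the top token's share of the probability mass.
print(softmax_with_temperature([2.0, 1.0, 0.0], temperature=1.0)[0])
print(softmax_with_temperature([2.0, 1.0, 0.0], temperature=1.5)[0])
```

Because the threshold scales with the top token's probability, min_p prunes aggressively when the model is confident and leniently when it is not, which is why it pairs well with a high temperature such as 1.5.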

Decoding and Displaying the Output:

print(tokenizer.batch_decode(outputs))

- Decodes the generated token IDs back into human-readable text using the tokenizer.

- We use batch_decode because the outputs might contain multiple sequences.

To stream tokens to the console as they are generated, pass a TextStreamer to generate:

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer, skip_prompt = True)

_ = model.generate(

input_ids = inputs, streamer = text_streamer, max_new_tokens = 128,

use_cache = True, temperature = 1.5, min_p = 0.1

)

Step 6: Saving and Uploading the Model

Save Locally or Push to Hugging Face:

model.save_pretrained("lora_model")

tokenizer.save_pretrained("lora_model")

To upload to Hugging Face:

model.push_to_hub_merged("hf/model", tokenizer, save_method="lora", token="<your_hf_token>")

This code pushes the model and its tokenizer to the Hugging Face Hub. With save_method="lora", only the LoRA adapter weights are uploaded rather than the full merged model, which keeps the upload small. You need a valid Hugging Face authentication token (<your_hf_token>) with write access for the upload to succeed.
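Rather than pasting the token into the script, one common pattern is to read it from an environment variable. The sketch below assumes the token is stored in a variable named HF_TOKEN; the helper get_hf_token is hypothetical and not part of unsloth or Hugging Face.

```python
import os


def get_hf_token(env_var="HF_TOKEN"):
    """Read the Hugging Face token from the environment, failing loudly if unset."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable before pushing.")
    return token


# Usage with the upload call from this step (requires the trained model):
# model.push_to_hub_merged("hf/model", tokenizer, save_method="lora", token=get_hf_token())
```

This keeps the secret out of notebooks and version control, and the explicit error makes a missing token obvious before the upload starts.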

Conclusion

Fine-tuning Microsoft Phi-4 locally and pushing it to Hugging Face allows developers to create highly specialized models efficiently. With tools like Unsloth, LoRA Adapters, and Hugging Face, the process becomes accessible and scalable. Try it out with your dataset today!

Key Takeaways

- Fine-tuning Microsoft Phi-4 with LoRA adapters optimizes domain-specific performance while saving computational resources.

- The Unsloth library simplifies the process of integrating LoRA adapters and working with Hugging Face datasets.

- Efficient dataset transformation and tokenization are critical for preparing data for fine-tuning Phi-4 on custom tasks.

- Training with Hugging Face’s SFTTrainer and advanced settings allows for fast, memory-efficient fine-tuning.

- Uploading fine-tuned models to Hugging Face enables easy sharing and deployment for specialized applications.

Frequently Asked Questions

Q1. What is Microsoft Phi-4, and why fine-tune it?

A. Microsoft Phi-4 is a large language model (LLM) optimized for language understanding and generation tasks. Fine-tuning it on a custom dataset enables domain-specific performance, tailoring the model to specialized applications such as customer service, technical documentation, or niche industries.

Q2. What are LoRA adapters, and why use them?

A. LoRA (Low-Rank Adaptation) adapters allow efficient fine-tuning by freezing the pretrained weights and training only small low-rank matrices added to selected layers. This reduces computational requirements and memory usage, making it well suited to large models like Phi-4.

Q3. What do I need to fine-tune Phi-4?

A. Key requirements include Python 3.8+, PyTorch with CUDA support, the unsloth library for streamlined workflows, and Hugging Face Transformers and Datasets for dataset handling and training.

Q4. How should I prepare the dataset?

A. Use a dataset like FineTome-100k in ShareGPT format. Convert and standardize the dataset using unsloth utilities to ensure compatibility with Hugging Face's multi-turn conversation format.

Q5. How do I save and share the fine-tuned model?

A. Save your fine-tuned model and tokenizer locally, then use the .push_to_hub_merged() method from unsloth to upload the model and tokenizer to Hugging Face with your authentication token.