Imagine having an AI assistant who doesn’t just respond to your queries but thinks through problems systematically, learns from past experiences, and plans multiple steps before taking action. Language Agent Tree Search (LATS) is an advanced AI framework that combines the systematic reasoning of ReAct prompting with the strategic planning capabilities of Monte Carlo Tree Search.

LATS operates by maintaining a comprehensive decision tree, exploring multiple possible solutions simultaneously, and learning from each interaction to make increasingly better decisions. With vertical AI agents as the focus, this article discusses how LATS works and implements a LATS agent using LlamaIndex and SambaNova.AI.

Learning Objectives

- Understand the workflow of the ReAct (Reasoning + Acting) prompting framework and its thought-action-observation cycle.

- Explore how the LATS Agent builds on and advances the ReAct workflow.

- Learn to implement the Language Agent Tree Search (LATS) framework based on Monte Carlo Tree Search with language model capabilities.

- Explore the trade-offs between computational resources and outcome optimization in LATS implementation to understand when it is beneficial to use and when it is not.

- Implement a recommendation engine using the LATS Agent from LlamaIndex, with SambaNova as the LLM provider.

This article was published as a part of the Data Science Blogathon.

What is a ReAct Agent?

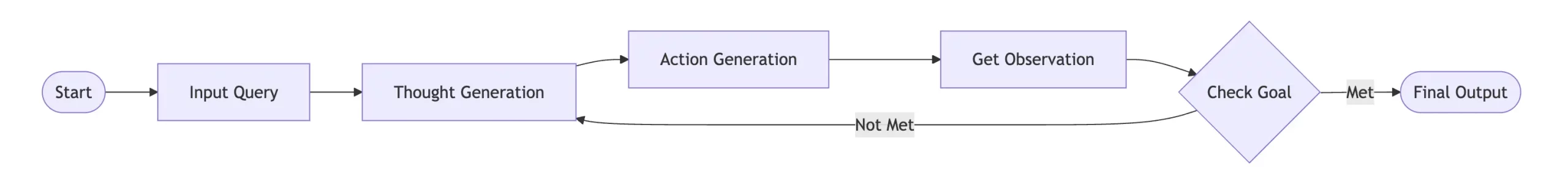

ReAct (Reasoning + Acting) is a prompting framework that enables language models to solve tasks through a thought, action, and observation cycle. Think of it like having an assistant who thinks out loud, takes action, and learns from what they observe. The agent follows a pattern:

- Thought: Reasons about the current situation

- Action: Decides what to do based on that reasoning

- Observation: Gets feedback from the environment

- Repeat: Use this feedback to inform the next thought

When implemented, it enables language models to break down problems into manageable components, make decisions based on available information, and adjust their approach based on feedback. For instance, when solving a multi-step math problem, the model might first think about which mathematical concepts apply, then take action by applying a specific formula, observe whether the result makes logical sense, and adjust its approach if needed. This structured cycle of reasoning and action closely mirrors human problem-solving and tends to produce more reliable responses.
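The thought-action-observation cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `scripted_policy` is a hypothetical stand-in for the language model, and `multiply` is a made-up tool; neither comes from LlamaIndex.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for an LLM: given the observations so far,
# it returns (thought, action name, action argument).
def scripted_policy(history: List[str]) -> Tuple[str, str, str]:
    if not history:
        return ("I need the product of 6 and 7.", "multiply", "6,7")
    return ("I have the result, so I can answer.", "finish", history[-1])

# Made-up tool used only for this sketch.
def multiply(arg: str) -> str:
    a, b = (int(x) for x in arg.split(","))
    return str(a * b)

TOOLS: Dict[str, Callable[[str], str]] = {"multiply": multiply}

def react_loop(policy, tools, max_steps: int = 5) -> str:
    history: List[str] = []  # observations collected so far
    for _ in range(max_steps):
        thought, action, arg = policy(history)  # Thought + Action
        if action == "finish":
            return arg
        observation = tools[action](arg)        # Observation
        history.append(observation)             # Repeat: feeds the next thought
    return "no answer within step budget"

answer = react_loop(scripted_policy, TOOLS)
print(answer)
```

In a real agent the policy would be an LLM call whose prompt includes the full history of thoughts, actions, and observations.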

Previous Read: Implementation of ReAct Agent using LlamaIndex and Gemini

What is a Language Agent Tree Search Agent?

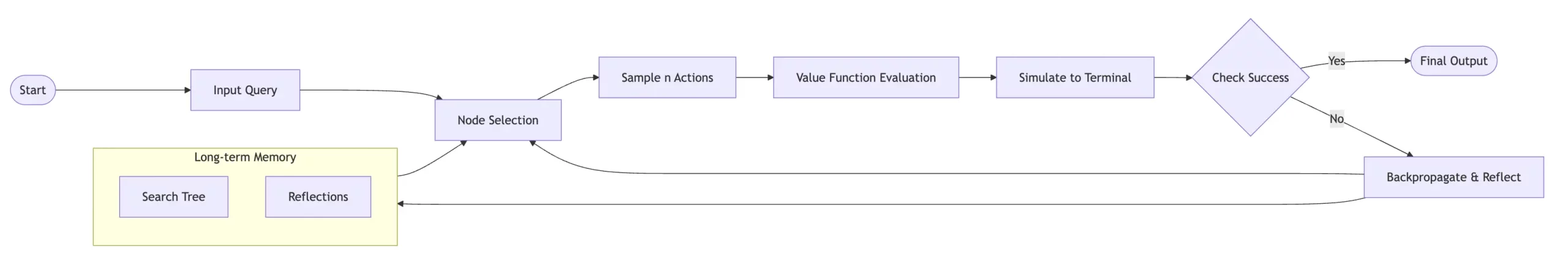

Language Agent Tree Search (LATS) is an advanced agentic framework that combines Monte Carlo Tree Search with language model capabilities to create a more sophisticated decision-making system for reasoning and planning.

It operates through a continuous cycle of exploration, evaluation, and learning, starting with an input query that initiates a structured search process. The system maintains a comprehensive long-term memory containing both a search tree of previous explorations and reflections from past attempts, which helps guide future decision-making.

At its operational core, LATS follows a systematic workflow where it first selects nodes based on promising paths and then samples multiple possible actions at each decision point. Each potential action undergoes a value function evaluation to assess its merit, followed by a simulation to a terminal state to determine its effectiveness.
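To make the select, expand, evaluate, and back-propagate steps concrete, here is a toy tree search. It is a sketch under assumptions, not the LATS implementation: selection uses the UCT rule that is common in Monte Carlo Tree Search, `evaluate` returns a seeded random score where LATS would ask an LLM-based value function, and the simulation-to-terminal-state step is omitted for brevity. All class and function names are hypothetical.

```python
import math
import random
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Node:
    state: str
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    value: float = 0.0  # running mean of backed-up scores

def uct(node: Node, w: float = 1.0) -> float:
    # Exploitation (mean value) plus an exploration bonus for rarely visited nodes.
    if node.visits == 0:
        return float("inf")
    return node.value + w * math.sqrt(math.log(node.parent.visits) / node.visits)

def select(root: Node) -> Node:
    # Walk down the tree, always following the child with the highest UCT score.
    node = root
    while node.children:
        node = max(node.children, key=uct)
    return node

def expand(node: Node, num_expansions: int) -> List[Node]:
    # Sample several candidate actions; here they are just labelled children.
    node.children = [Node(f"{node.state}.{i}", parent=node) for i in range(num_expansions)]
    return node.children

def evaluate(node: Node, rng: random.Random) -> float:
    # Placeholder value function (assumption): a random score in [0, 1).
    return rng.random()

def backpropagate(node: Optional[Node], score: float) -> None:
    # Update visit counts and running means from the node up to the root.
    while node is not None:
        node.visits += 1
        node.value += (score - node.value) / node.visits
        node = node.parent

def tree_search(num_expansions: int = 2, max_rollouts: int = 3, seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node("root")
    for _ in range(max_rollouts):
        leaf = select(root)
        for child in expand(leaf, num_expansions):
            backpropagate(child, evaluate(child, rng))
    return root

root = tree_search()
print(root.visits, len(root.children))
```

Each rollout adds `num_expansions` new nodes, so the tree grows by breadth at the most promising leaf rather than uniformly.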

In the code demo, we will see how this tree expansion works and how the evaluation score is computed.

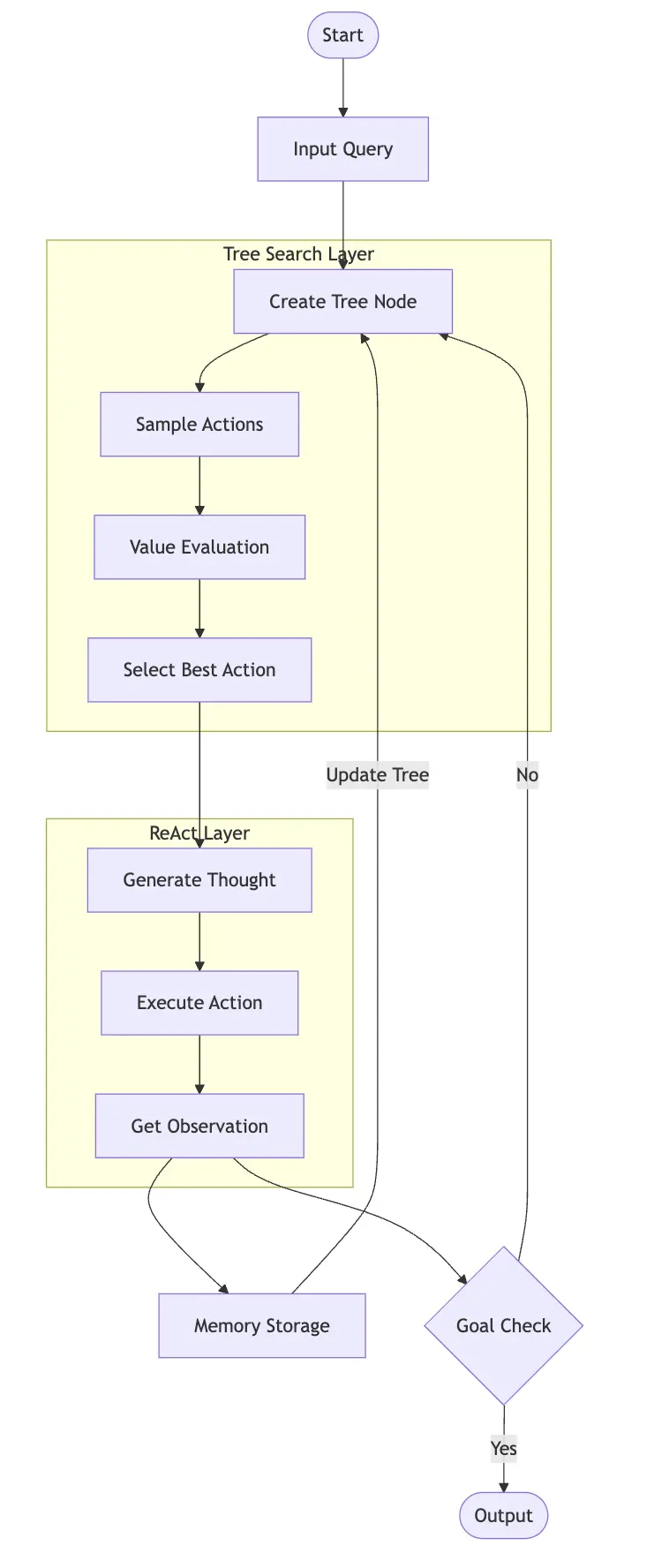

How Does LATS Use ReAct?

LATS integrates ReAct’s thought-action-observation cycle into its tree search framework. Here’s how:

At each node in the search tree, LATS uses ReAct’s:

- Thought generation to reason about the state.

- Action selection to choose what to do.

- Observation collection to get feedback.

But LATS enhances this by:

- Exploring multiple possible ReAct sequences simultaneously during tree expansion, i.e., different nodes each think and take action.

- Using past experiences to guide which paths to explore.

- Learning from successes and failures systematically.

This approach is expensive to run. Let’s understand when to use LATS and when not to.

Cost Trade-Offs: When to Use LATS?

While the paper focuses on LATS’s higher benchmark scores compared to CoT, ReAct, and other techniques, that performance comes at a higher cost. The more complex the task, the more nodes are created for reasoning and planning, which means multiple LLM calls per query, a setup that is not ideal in production environments.

This computational intensity becomes particularly challenging when dealing with real-time applications where response time is critical, as each node expansion and evaluation requires separate API calls to the language model. Furthermore, organizations need to carefully weigh the trade-off between LATS’s superior decision-making capabilities and the associated infrastructure costs, especially when scaling across multiple concurrent users or applications.
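A back-of-the-envelope estimate helps show how quickly the call count grows. The function below is a rough sketch under an explicit assumption: every rollout expands one node into `branching` children, and each child costs `calls_per_node` LLM calls (for example, one to generate the step and one to score it). Real implementations may issue more or fewer calls, and tool calls are not counted.

```python
def estimate_llm_calls(branching: int, rollouts: int, calls_per_node: int = 2) -> int:
    """Rough upper-level estimate of LLM calls for one query (assumed cost model)."""
    return branching * rollouts * calls_per_node

def estimate_latency_seconds(branching: int, rollouts: int, seconds_per_call: float) -> float:
    """Latency if every call ran sequentially (assumption: no parallelism)."""
    return estimate_llm_calls(branching, rollouts) * seconds_per_call

print(estimate_llm_calls(2, 3))    # small search
print(estimate_llm_calls(5, 10))   # wider and deeper search
print(estimate_latency_seconds(2, 3, 1.5))
```

Under this cost model a single-pass ReAct step would be one or two calls, so even a small tree multiplies the per-query cost several times over.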

Here’s when to use LATS:

- The task is complex and has multiple possible solutions (e.g., programming tasks where there are many ways to solve a problem).

- Mistakes are costly and accuracy is crucial (e.g., financial decision-making, medical diagnosis support, or education curriculum preparation).

- The task benefits from learning from past attempts (e.g., complex product searches where user preferences matter).

Here’s when not to use LATS:

- Simple, straightforward tasks that need quick responses (e.g., basic customer service inquiries or data lookups)

- Time-sensitive operations where immediate decisions are required (e.g., real-time trading systems or emergency response)

- Resource-constrained environments with limited computational power or API budget (e.g., mobile applications or edge devices)

- High-volume, repetitive tasks where simpler models can provide adequate results (e.g., content moderation or spam detection)

In these cases, the simpler ReAct framework is usually the more appropriate choice.

Think of it this way: ReAct is like making decisions one step at a time, while LATS is like planning a complex strategy game – it takes more time and resources but can lead to better outcomes in complex situations.

Build a Recommendation System with LATS Agent using LlamaIndex

If you’re looking to build a recommendation system that reasons over information from the internet, let’s break down this implementation using LATS (Language Agent Tree Search) and LlamaIndex.

Step 1: Setting Up Your Environment

First up, we need to get our tools in order. Run these pip install commands to get everything we need:

    !pip install llama-index-agent-lats
    !pip install llama-index-core llama-index-readers-file
    !pip install duckduckgo-search
    !pip install llama-index-llms-sambanovasystems

We also apply nest_asyncio to handle async operations inside a notebook:

    import nest_asyncio

    nest_asyncio.apply()

Step 2: Configuration and API Setup

Here’s where we set up our LLM, which is served by SambaNova. You’ll need to create an API key and store it in the SAMBANOVA_API_KEY environment variable.

Follow these steps to get your API key:

- Create your account at: https://cloud.sambanova.ai/

- Select APIs and choose the model you need to use.

- Click Generate New API key and use that key as the value of the environment variable below.
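If the key is already exported in your shell, you may prefer to validate it at startup instead of pasting it into the notebook. The helper below is a minimal sketch; `require_env` is a hypothetical name and is not part of LlamaIndex or the SambaNova integration.

```python
import os

def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value

# Report the outcome instead of crashing, so the cell is safe to run either way.
try:
    require_env("SAMBANOVA_API_KEY")
    print("API key found")
except RuntimeError as err:
    print(err)
```

Failing early with a named variable tends to be easier to debug than an authentication error raised deep inside the first LLM call.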

    import os

    # Replace the placeholder with the key generated above
    os.environ["SAMBANOVA_API_KEY"] = "<replace-with-your-key>"

SambaNova Cloud is positioned as a very fast inference provider, returning responses from open-source Llama models within seconds. Once you define the LLM from the LlamaIndex LLM integrations, you need to override the default LLM using Settings from LlamaIndex core. By default, LlamaIndex uses OpenAI as the LLM.

    from llama_index.core import Settings
    from llama_index.llms.sambanovasystems import SambaNovaCloud

    llm = SambaNovaCloud(
        model="Meta-Llama-3.1-70B-Instruct",
        context_window=100000,
        max_tokens=1024,
        temperature=0.7,
        top_k=1,
        top_p=0.01,
    )

    # Make this LLM the default for all LlamaIndex components
    Settings.llm = llm

Step 3: Defining the Search Tool

Now for the fun part: we’re integrating DuckDuckGo search to help our system find relevant information. This tool fetches real-world data for the given user question, returning at most 4 results.

When defining a tool for function calling in LLMs, always remember these two steps:

- Properly annotate the data type the function returns; in our case it is -> str.

- Always include a docstring for any function used in an agentic workflow or function calling. Function calling drives query routing, so the agent needs to know when to choose which tool, and the docstring is what tells it.
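To see why the annotation and docstring matter, the sketch below uses Python's standard `inspect` module to pull out the kind of metadata a tool wrapper can hand to the model. It is an illustration only: `describe_tool` and `search_stub` are hypothetical names, and this is not how LlamaIndex builds its tool metadata internally.

```python
import inspect
from typing import Callable, Dict

def search_stub(query: str) -> str:
    """Return web search results for the given query."""
    return f"results for {query}"

def describe_tool(fn: Callable) -> Dict[str, object]:
    """Collect the name, docstring, and type annotations of a function."""
    sig = inspect.signature(fn)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {n: p.annotation.__name__ for n, p in sig.parameters.items()},
        "returns": sig.return_annotation.__name__,
    }

spec = describe_tool(search_stub)
print(spec)
```

Without the docstring the description would be empty, leaving the agent with only the function name to decide when the tool applies.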

Now use FunctionTool from LlamaIndex and define your custom function.

    from duckduckgo_search import DDGS
    from llama_index.core.tools import FunctionTool

    def search(query: str) -> str:
        """
        Use this function to get results from Web Search through DuckDuckGo.

        Args:
            query: user prompt

        Returns:
            context (str): search results to the user query
        """
        req = DDGS()
        response = req.text(query, max_results=4)
        # Concatenate the snippet text of each result into one context string
        context = ""
        for result in response:
            context += result["body"]
        return context

    search_tool = FunctionTool.from_defaults(fn=search)

Step 4: LlamaIndex Agent Runner – LATS

This is the final part of the agent definition. We need to define a LATSAgentWorker from the LlamaIndex LATS agent package. Since this is a worker class, we then run it through AgentRunner, where we can directly use the chat function.

Note: The chat and other features can also be directly called from AgentWorker, but it is better to use AgentRunner, as it has been updated with most of the latest changes done in the framework.

Key hyperparameters:

- num_expansions: Number of children nodes to expand.

- max_rollouts: Maximum number of trajectories to sample.

    from llama_index.agent.lats import LATSAgentWorker
    from llama_index.core.agent import AgentRunner

    agent_worker = LATSAgentWorker(
        tools=[search_tool],
        llm=llm,
        num_expansions=2,
        verbose=True,
        max_rollouts=3,
    )

    agent = AgentRunner(agent_worker)

Step 5: Execute Agent

Finally, it is time to execute the LATS agent: just ask for the recommendation you need. During the execution, observe the verbose logs:

- The LATS agent expands the user task into num_expansions candidate branches.

- For each branch, it runs the thought process and then picks a tool as its action. In our case, there is just one tool.

- Once it runs the rollout and gets the observation, it evaluates the results it generated.

- It repeats this process, growing the tree of nodes to reach the best observation possible.

    query = "Looking for a mirrorless camera under $2000 with good low-light performance"

    response = agent.chat(query)
    print(response.response)

Output:

Here are the top 5 mirrorless cameras under $2000 with good low-light performance:

1. Nikon Zf – Features a 240M full-frame BSI ONOS sensor, full-width 4K/30 video, cropped 4K/80, and stabilization rated to SEV.

2. Sony ZfC II – A compact, full-frame mirrorless camera with unlimited 4K recording, even in low-light conditions.

3. Fujijiita N-Yu – Offers an ABC-C format, 25.9M X-frame ONOS 4 sensor, and a wide native sensitivity range of ISO 160-12800 for better performance.

4. Panasonic Lunix OHS – A 10 Megapixel camera with a four-thirds ONOS sensor, capable of unlimited 4K recording even in low light.

5. Canon EOS R6 – Equipped with a 280M full-frame ONOS sensor, 4K/60 video, stabilization rated to SEV, and improved low-light performance.

Note: The ranking may vary based on individual preferences and specific needs.

The above approach works well, but you must be prepared to handle edge cases. If the user’s query is highly complex, or the search is configured with large num_expansions or max_rollouts values, there’s a high chance the output will be something like, “I am still thinking.” This response is not acceptable to an end user. In such cases, there’s a hacky approach you can try.

Step 6: Error Handling and Hacky Approaches

The LATS agent builds a tree: each node expands into child nodes, and the agent records observations at each of them. To inspect this, check the list of tasks the agent has executed by calling agent.list_tasks(). Each task in the returned list carries an extra_state dictionary, from which you can get the root_node and navigate down to the last observation to analyze the reasoning executed by the agent.
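Navigating from the root to a leaf does not have to follow a fixed path of first children. The sketch below descends greedily by score instead. It runs on a hypothetical `StubNode` dataclass with `children`, `score`, and `observation` fields; these are assumptions for illustration and may not match the attributes of the node objects LlamaIndex stores under root_node, so check them before adapting this.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class StubNode:
    # Hypothetical stand-in for a search-tree node (not the LlamaIndex class).
    observation: Optional[str] = None
    score: float = 0.0
    children: List["StubNode"] = field(default_factory=list)

def best_leaf(root: StubNode) -> StubNode:
    """Follow the highest-scoring child at each level until a leaf is reached."""
    node = root
    while node.children:
        node = max(node.children, key=lambda child: child.score)
    return node

tree = StubNode(children=[
    StubNode(score=0.4, children=[StubNode(observation="A", score=0.3)]),
    StubNode(score=0.8, children=[
        StubNode(observation="B", score=0.9),
        StubNode(observation="C", score=0.5),
    ]),
])

print(best_leaf(tree).observation)
```

A greedy descent like this also copes with trees of uneven depth, where a hardcoded index path could raise an IndexError.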

    print(agent.list_tasks()[0])
    print(agent.list_tasks()[0].extra_state.keys())
    print(agent.list_tasks()[-1].extra_state["root_node"].children[0].children[0].current_reasoning[-1].observation)

Now, whenever you get “I am still thinking.”, use this workaround to retrieve the result.

    def process_recommendation(query: str, agent: AgentRunner) -> str:
        """Process the recommendation query with error handling."""
        try:
            response = agent.chat(query).response
            if "I am still thinking." in response:
                # Fall back to the last observation recorded in the search tree
                root = agent.list_tasks()[-1].extra_state["root_node"]
                return root.children[0].children[0].current_reasoning[-1].observation
            return response
        except Exception as e:
            return f"An error occurred while processing your request: {str(e)}"

Conclusion

Language Agent Tree Search (LATS) represents a significant advancement in AI agent architectures, combining the systematic exploration of Monte Carlo Tree Search with the reasoning capabilities of large language models. While LATS offers superior decision-making capabilities compared to simpler approaches like Chain-of-Thought (CoT) or basic ReAct agents, it comes with increased computational overhead and complexity.

Key Takeaways

- Understood the ReAct Agent, the technique used in most agentic frameworks for task execution.

- Explored Language Agent Tree Search (LATS), an advancement over the ReAct agent that uses Monte Carlo Tree Search to further improve responses for complex tasks.

- The LATS Agent is ideal only for complex, high-stakes scenarios that require accuracy and learning and where latency is not an issue.

- Implemented a LATS Agent with a custom search tool to get real-world responses for the given user task.

- Due to the complexity of LATS, error handling and potentially “hacky” approaches might be needed to extract results in certain scenarios.

Frequently Asked Questions

A. LATS enhances ReAct by exploring multiple possible sequences of thoughts, actions, and observations simultaneously within a tree structure, using past experiences to guide the search and learning from successes and failures systematically using evaluation where LLM acts as a Judge.

A. Standard language models primarily focus on generating text based on a given prompt. Agents, like ReAct, go a step further by being able to interact with their environment, take actions based on reasoning, and observe the outcomes to improve future actions.

A. When setting up a LATS agent in LlamaIndex, key hyperparameters to consider include num_expansions, which controls the breadth of the search by determining how many child nodes are explored from each point, and max_rollouts, which controls the depth of the search by limiting the number of simulated action sequences. Additionally, max_iterations is an optional parameter that limits the overall reasoning cycles of the agent, preventing it from running indefinitely and helping manage computational resources.

A. The official implementation for “Language Agent Tree Search Unifies Reasoning, Acting, and Planning in Language Models” is available on GitHub: https://github.com/lapisrocks/LanguageAgentTreeSearch

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.