You have heard the famous quote “Data is the new Oil” by British mathematician Clive Humby it is the most influential quote that describes the importance of data in the 21st century but, after the explosive development of the Large Language Model and its training what we don’t have right is the data. because the development speed and training speed of the LLM model nearly surpass the data generation speed of humans. The solution is making the data more refined and specific to the task or the Synthetic data generation. The former is the more domain expert loaded tasks but the latter is more prominent to the huge hunger of today’s problems.

The high-quality training data remains a critical bottleneck. This blog post explores a practical approach to generating synthetic data using LLama 3.2 and Ollama. It will demonstrate how we can create structured educational content programmatically.

Learning Outcomes

- Understand the importance and techniques of Local Synthetic Data Generation for enhancing machine learning model training.

- Learn how to implement Local Synthetic Data Generation to create high-quality datasets while preserving privacy and security.

- Gain practical knowledge of implementing robust error handling and retry mechanisms in data generation pipelines.

- Learn JSON validation, cleaning techniques, and their role in maintaining consistent and reliable outputs.

- Develop expertise in designing and utilizing Pydantic models for ensuring data schema integrity.

Table of contents

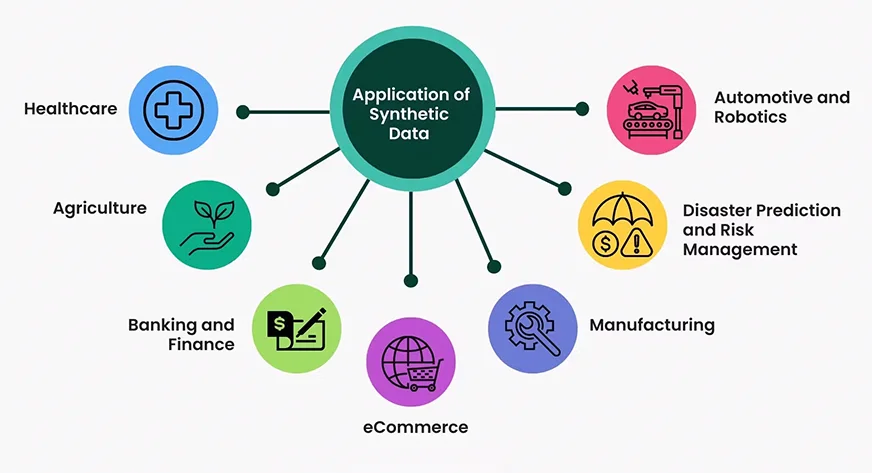

What is Synthetic Data?

Synthetic data refers to artificially generated information that mimics the characteristics of real-world data while preserving essential patterns and statistical properties. It is created using algorithms, simulations, or AI models to address privacy concerns, augment limited data, or test systems in controlled scenarios. Unlike real data, synthetic data can be tailored to specific requirements, ensuring diversity, balance, and scalability. It is widely used in fields like machine learning, healthcare, finance, and autonomous systems to train models, validate algorithms, or simulate environments. Synthetic data bridges the gap between data scarcity and real-world applications while reducing ethical and compliance risks.

Why We Need Synthetic Data Today?

The demand for synthetic data has grown exponentially due to several factors

- Data Privacy Regulations: With GDPR and similar regulations, synthetic data offers a safe alternative for development and testing

- Cost Efficiency: COllecting and annotating real data is expensive and time-consuming.

- Scalabilities: Synthetic data can be generated in Large quantities with controlled variations

- Edge Case Coverage: We can generate data for rare scenarios that might be difficult to collect naturally

- Rapid Prototyping: Quick iteration on ML models without waiting for real data collection.

- Less Biased: The data collected from the real world may be error prone and full of gender biases, racistic text, and not safe for children’s words so to make a model with this type of data, the model’s behavior is also inherently with these biases. With synthetic data, we can control these behaviors easily.

Impact on LLM and Small LM Performance

Synthetic data has shown promising results in improving both large and small language models

- Fine-tuning Efficiency: Models fine-tuned on high-quality synthetic data often show comparable performance to those trained on real data

- Domain Adaptation: Synthetic data helps bridge domain gaps in specialized applications

- Data Augmentation: Combining synthetic and real data often yields better results using either alone.

Project Structure and Environment Setup

In the following section, we’ll break down the project layout and guide you through configuring the required environment.

project/

├── main.py

├── requirements.txt

├── README.md

└── english_QA_new.jsonNow we will set up our project environment using conda. Follow below steps

Create Conda Environment

$conda create -n synthetic-data python=3.11

# activate the newly created env

$conda activate synthetic-dataInstall Libraries in conda env

pip install pydantic langchain langchain-community

pip install langchain-ollamaNow we are all set up to start the code implementation

Project Implementation

In this section, we’ll delve into the practical implementation of the project, covering each step in detail.

Importing Libraries

Before starting the project we will create a file name main.py in the project root and import all the libraries on that file:

from pydantic import BaseModel, Field, ValidationError

from langchain.prompts import PromptTemplate

from langchain_ollama import OllamaLLM

from typing import List

import json

import uuid

import re

from pathlib import Path

from time import sleepNow it is time to continue the code implementation part on the main.py file

First, we start with implementing the Data Schema.

EnglishQuestion data schema is a Pydantic model that ensures our generated data follows a consistent structure with required fields and automatic ID generation.

Code Implementation

class EnglishQuestion(BaseModel):

id: str = Field(

default_factory=lambda: str(uuid.uuid4()),

description="Unique identifier for the question",

)

category: str = Field(..., description="Question Type")

question: str = Field(..., description="The English language question")

answer: str = Field(..., description="The correct answer to the question")

thought_process: str = Field(

..., description="Explanation of the reasoning process to arrive at the answer"

)Now, that we have created the EnglishQuestion data class.

Second, we will start implementing the QuestionGenerator class. This class is the core of project implementation.

QuestionGenerator Class Structure

class QuestionGenerator:

def __init__(self, model_name: str, output_file: Path):

pass

def clean_json_string(self, text: str) -> str:

pass

def parse_response(self, result: str) -> EnglishQuestion:

pass

def generate_with_retries(self, category: str, retries: int = 3) -> EnglishQuestion:

pass

def generate_questions(

self, categories: List[str], iterations: int

) -> List[EnglishQuestion]:

pass

def save_to_json(self, question: EnglishQuestion):

pass

def load_existing_data(self) -> List[dict]:

passLet’s step by step implement the key methods

Initialization

Initialize the class with a language model, a prompt template, and an output file. With this, we will create an instance of OllamaLLM with model_name and set up a PromptTemplate for generating QA in a strict JSON format.

Code Implementation:

def __init__(self, model_name: str, output_file: Path):

self.llm = OllamaLLM(model=model_name)

self.prompt_template = PromptTemplate(

input_variables=["category"],

template="""

Generate an English language question that tests understanding and usage.

Focus on {category}.Question will be like fill in the blanks,One liner and mut not be MCQ type. write Output in this strict JSON format:

{{

"question": "<your specific question>",

"answer": "<the correct answer>",

"thought_process": "<Explain reasoning to arrive at the answer>"

}}

Do not include any text outside of the JSON object.

""",

)

self.output_file = output_file

self.output_file.touch(exist_ok=True)JSON Cleaning

Responses we will get from the LLM during the generation process will have many unnecessary extra characters which may poise the generated data, so you must pass these data through a cleaning process.

Here, we will fix the common formatting issue in JSON keys/values using regex, replacing problematic characters such as newline, and special characters.

Code implementation:

def clean_json_string(self, text: str) -> str:

"""Improved version to handle malformed or incomplete JSON."""

start = text.find("{")

end = text.rfind("}")

if start == -1 or end == -1:

raise ValueError(f"No JSON object found. Response was: {text}")

json_str = text[start : end + 1]

# Remove any special characters that might break JSON parsing

json_str = json_str.replace("\n", " ").replace("\r", " ")

json_str = re.sub(r"[^\x20-\x7E]", "", json_str)

# Fix common JSON formatting issues

json_str = re.sub(

r'(?<!\\)"([^"]*?)(?<!\\)":', r'"\1":', json_str

) # Fix key formatting

json_str = re.sub(

r':\s*"([^"]*?)(?<!\\)"(?=\s*[,}])', r': "\1"', json_str

) # Fix value formatting

return json_strResponse Parsing

The parsing method will use the above cleaning process to clean the responses from the LLM, validate the response for consistency, convert the cleaned JSON into a Python dictionary, and map the dictionary to an EnglishQuestion object.

Code Implementation:

def parse_response(self, result: str) -> EnglishQuestion:

"""Parse the LLM response and validate it against the schema."""

cleaned_json = self.clean_json_string(result)

parsed_result = json.loads(cleaned_json)

return EnglishQuestion(**parsed_result)Data Persistence

For, persistent data generation, although we can use some NoSQL Databases(MongoDB, etc) for this, here we use a simple JSON file to store the generated data.

Code Implementation:

def load_existing_data(self) -> List[dict]:

"""Load existing questions from the JSON file."""

try:

with open(self.output_file, "r") as f:

return json.load(f)

except (FileNotFoundError, json.JSONDecodeError):

return []Robust Generation

In this data generation phase, we have two most important methods:

- Generate with retry mechanism

- Question Generation method

The purpose of the retry mechanism is to force automation to generate a response in case of failure. It tries generating a question multiple times(the default is three times) and will log errors and add a delay between retries. It will also raise an exception if all attempts fail.

Code Implementation:

def generate_with_retries(self, category: str, retries: int = 3) -> EnglishQuestion:

for attempt in range(retries):

try:

result = self.prompt_template | self.llm

response = result.invoke(input={"category": category})

return self.parse_response(response)

except Exception as e:

print(

f"Attempt {attempt + 1}/{retries} failed for category '{category}': {e}"

)

sleep(2) # Small delay before retry

raise ValueError(

f"Failed to process category '{category}' after {retries} attempts."

)The Question generation method will generate multiple questions for a list of categories and save them in the storage(here JSON file). It will iterate over the categories and call generating_with_retries method for each category. And in the last, it will save each successfully generated question using save_to_json method.

def generate_questions(

self, categories: List[str], iterations: int

) -> List[EnglishQuestion]:

"""Generate multiple questions for a list of categories."""

all_questions = []

for _ in range(iterations):

for category in categories:

try:

question = self.generate_with_retries(category)

self.save_to_json(question)

all_questions.append(question)

print(f"Successfully generated question for category: {category}")

except (ValidationError, ValueError) as e:

print(f"Error processing category '{category}': {e}")

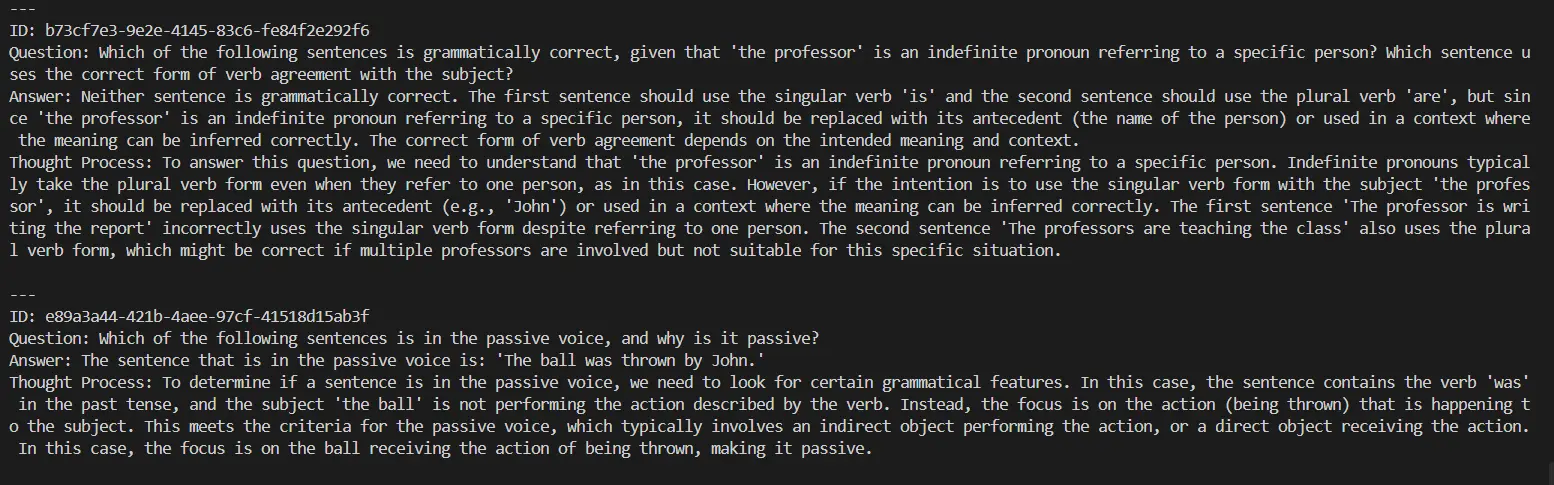

return all_questionsDisplaying the results on the terminal

To get some idea of what are the responses producing from LLM here is a simple printing function.

def display_questions(questions: List[EnglishQuestion]):

print("\nGenerated English Questions:")

for question in questions:

print("\n---")

print(f"ID: {question.id}")

print(f"Question: {question.question}")

print(f"Answer: {question.answer}")

print(f"Thought Process: {question.thought_process}")Testing the Automation

Before running your project create an english_QA_new.json file on the project root.

if __name__ == "__main__":

OUTPUT_FILE = Path("english_QA_new.json")

generator = QuestionGenerator(model_name="llama3.2", output_file=OUTPUT_FILE)

categories = [

"word usage",

"Phrasal Ver",

"vocabulary",

"idioms",

]

iterations = 2

generated_questions = generator.generate_questions(categories, iterations)

display_questions(generated_questions)

Now, Go to the terminal and type:

python main.pyOutput:

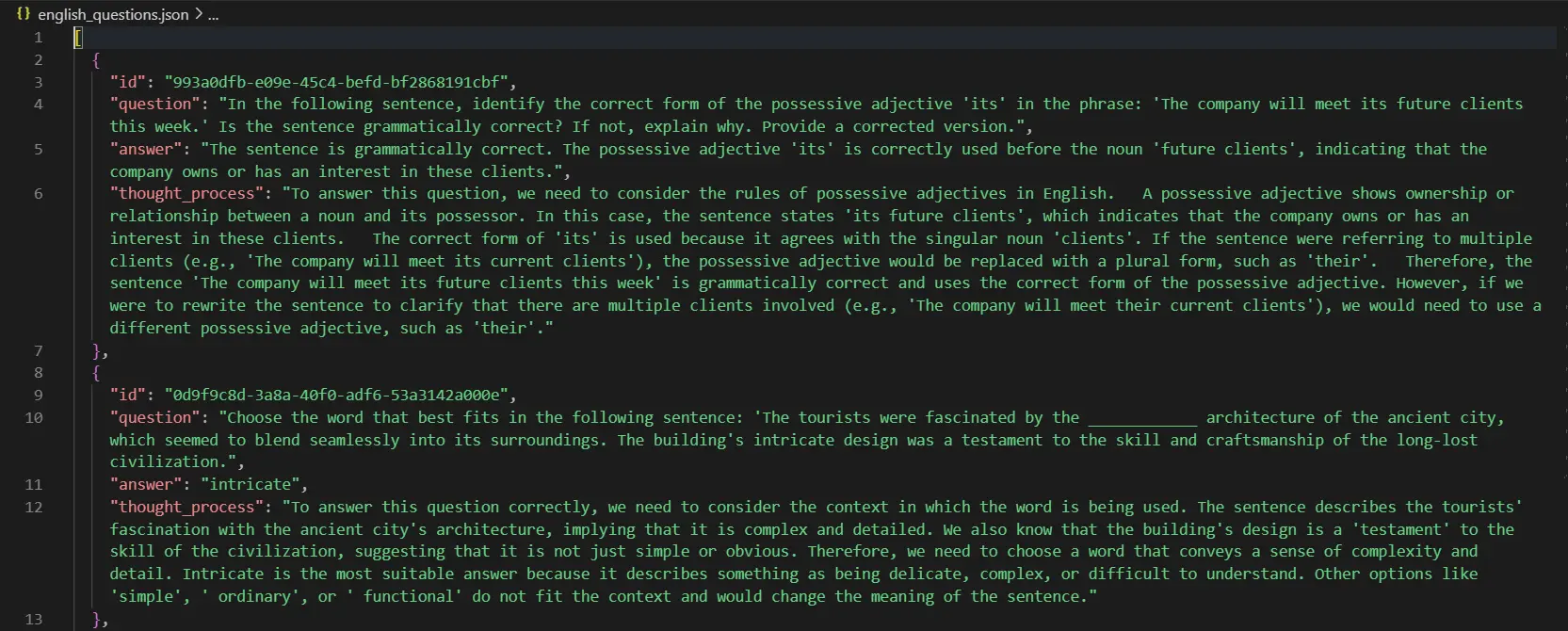

These questions will be saved in your project root. Saved Question look like:

All the code used in this project is here.

Conclusion

Synthetic data generation has emerged as a powerful solution to address the growing demand for high-quality training datasets in the era of rapid advancements in AI and LLMs. By leveraging tools like LLama 3.2 and Ollama, along with robust frameworks like Pydantic, we can create structured, scalable, and bias-free datasets tailored to specific needs. This approach not only reduces dependency on costly and time-consuming real-world data collection but also ensures privacy and ethical compliance. As we refine these methodologies, synthetic data will continue to play a pivotal role in driving innovation, improving model performance, and unlocking new possibilities in diverse fields.

Key Takeaways

- Local Synthetic Data Generation enables the creation of diverse datasets that can improve model accuracy without compromising privacy.

- Implementing Local Synthetic Data Generation can significantly enhance data security by minimizing reliance on real-world sensitive data.

- Synthetic data ensures privacy, reduces biases, and lowers data collection costs.

- Tailored datasets improve adaptability across diverse AI and LLM applications.

- Synthetic data paves the way for ethical, efficient, and innovative AI development.

Frequently Asked Questions

A. Ollama provides local deployment capabilities, reducing cost and latency while offering more control over the generation process.

A. To maintain quality, The implementation uses Pydantic validation, retry mechanisms, and JSON cleaning. Additional metrics and maintain validation can be implemented.

A. Local LLMs might have lower-quality output compared to larger models, and generation speed can be limited by local computing resources.

A. Yes, synthetic data ensures privacy by removing identifiable information and promotes ethical AI development by addressing data biases and reducing the dependency on real-world sensitive data.

A. Challenges include ensuring data realism, maintaining domain relevance, and aligning synthetic data characteristics with real-world use cases for effective model training.