I have been exploring Hugging Face’s SmolAgents to build AI agents in a few lines of code, and it has worked well for me. From building a research agent to agentic RAG, the experience has been seamless. SmolAgents provides a lightweight and efficient way to create AI agents for tasks such as research assistance and question answering. The simplicity of the framework lets developers focus on the logic and functionality of their agents without getting bogged down in complex configuration.

However, debugging multi-agent runs is challenging because their workflows are unpredictable and their logs are extensive. Most of the errors are “LLM dumb” kinds of issues that the model corrects by itself in subsequent steps. Finding effective ways to validate and inspect these runs remains a key challenge. This is where OpenTelemetry comes in handy. Let’s see how it works!

Why Is Debugging Agent Runs Difficult?

Here’s why debugging agent runs is difficult:

- Unpredictability: AI Agents are designed to be flexible and creative, which means they don’t always follow a fixed path. This makes it hard to predict exactly what they’ll do, and therefore, hard to debug when something goes wrong.

- Complexity: AI Agents often perform many steps in a single run, and each step can generate a lot of logs (messages or data about what’s happening). This can quickly overwhelm you if you’re trying to figure out what went wrong.

- Errors are often minor: Many errors in agent runs are small mistakes (like the LLM writing incorrect code or making a wrong decision) that the agent fixes on its own in the next step. These errors aren’t always critical, but they still make it harder to track what’s happening.

What Is the Importance of Logging in Agent Runs?

Logging means recording what happens during an agent run. This is important for:

- Debugging: If something goes wrong, you can look at the logs to figure out what happened.

- Monitoring: In production (when your agent is being used by real users), you need to keep an eye on how it’s performing. Logs help you do that.

- Improvement: By reviewing logs, you can identify patterns or recurring issues and improve your agent over time.
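As a baseline, here is a minimal sketch of recording agent steps by hand with Python’s standard logging module. The step records, the field names, and the logger name are hypothetical stand-ins for a real agent run; the point is only to show what manual logging looks like before any automatic instrumentation is added.

```python
import io
import json
import logging

# Send log records to an in-memory stream; a real setup would use a file or stdout.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))

logger = logging.getLogger("agent_run_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

# Hypothetical step records standing in for a real agent run.
steps = [
    {"step": 1, "action": "web_search", "error": None},
    {"step": 2, "action": "run_code", "error": "SyntaxError"},
    {"step": 3, "action": "run_code", "error": None},
]

# Log failed steps as warnings so they stand out when reviewing the run.
for record in steps:
    level = logging.WARNING if record["error"] else logging.INFO
    logger.log(level, json.dumps(record))

print(stream.getvalue())
```

Writing each step as one JSON line keeps the log easy to filter later, for example to find the steps whose error the agent fixed on the next attempt.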

What is OpenTelemetry?

OpenTelemetry is an open standard for instrumentation: it provides APIs and SDKs that record telemetry (traces, metrics, and logs) about what’s happening in your software. In this case, it’s used to record agent runs as traces.

How does it work?

- You add some instrumentation code to your agent. This code doesn’t change how the agent works; it just records what’s happening.

- When your agent runs, OpenTelemetry automatically logs all the steps, errors, and other important details.

- These logs are sent to a platform (like a dashboard or monitoring tool) where you can review them later.

Why is this helpful?

- Ease of use: You don’t have to manually add logging code everywhere. OpenTelemetry does it for you.

- Standardization: OpenTelemetry is a widely used standard, so it works with many tools and platforms.

- Clarity: The logs are structured and organized, making it easier to understand what happened during an agent run.

Logging agent runs is essential because AI agents are complex and unpredictable. Using OpenTelemetry makes it easy to automatically record and monitor what’s happening, so you can debug issues, improve performance, and ensure everything runs smoothly in production.

How to Use OpenTelemetry?

This script is setting up a Python environment with specific libraries and configuring OpenTelemetry for tracing. Here’s a step-by-step explanation:

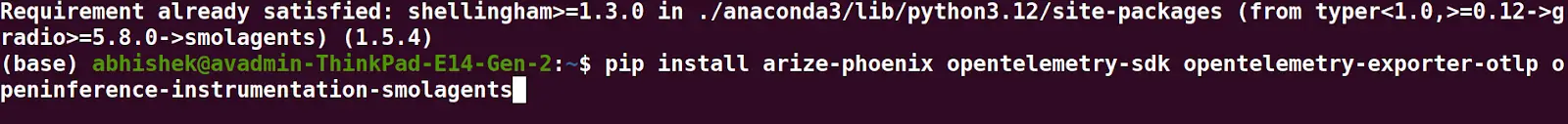

Here I have installed the dependencies, imported required modules and set up OpenTelemetry in terminal.

Install Dependencies

!pip install smolagents

!pip install arize-phoenix opentelemetry-sdk opentelemetry-exporter-otlp openinference-instrumentation-smolagents

- smolagents: Hugging Face’s library for building lightweight AI agents.

- arize-phoenix: An observability tool for monitoring and debugging machine learning applications; here it is the platform that receives and displays the traces.

- opentelemetry-sdk: The OpenTelemetry SDK for instrumenting, generating, and exporting telemetry data (traces, metrics, logs).

- opentelemetry-exporter-otlp: An exporter for sending telemetry data in the OTLP (OpenTelemetry Protocol) format.

- openinference-instrumentation-smolagents: A library that instruments smolagents to automatically generate OpenTelemetry traces.

Import Required Modules

from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from openinference.instrumentation.smolagents import SmolagentsInstrumentor
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.trace.export import ConsoleSpanExporter, SimpleSpanProcessor

- trace: The OpenTelemetry tracing API.

- TracerProvider: The central component for creating and managing traces.

- BatchSpanProcessor: Processes spans in batches for efficient exporting.

- SmolagentsInstrumentor: Automatically instruments smolagents to generate traces.

- OTLPSpanExporter: Exports traces using the OTLP protocol over HTTP.

- ConsoleSpanExporter: Exports traces to the console (for debugging).

- SimpleSpanProcessor: Processes spans one at a time (useful for debugging or low-volume tracing).

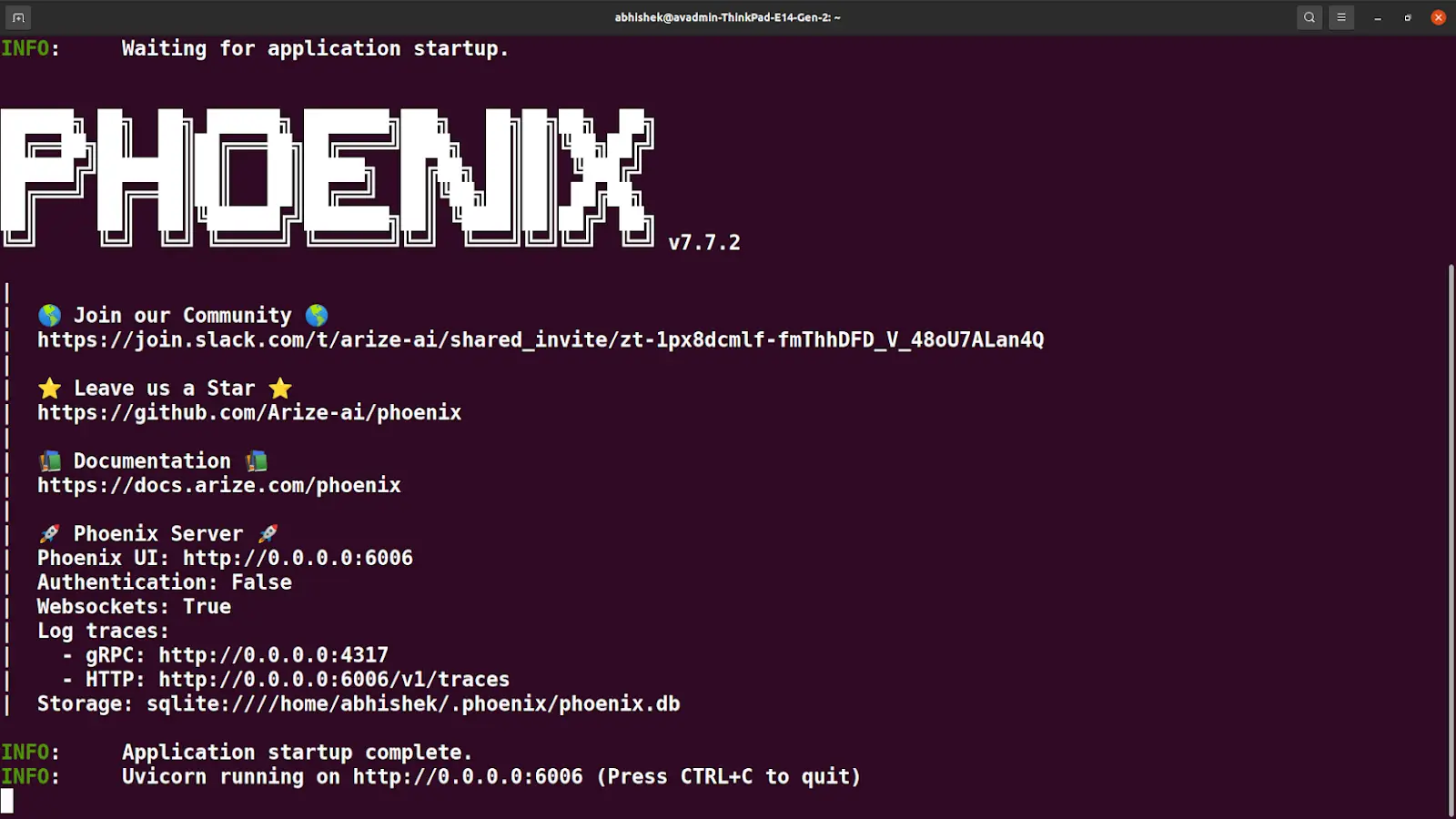

Set Up OpenTelemetry Tracing

endpoint = "http://0.0.0.0:6006/v1/traces"
trace_provider = TracerProvider()
trace_provider.add_span_processor(SimpleSpanProcessor(OTLPSpanExporter(endpoint)))

- endpoint: The URL where traces will be sent (in this case, http://0.0.0.0:6006/v1/traces).

- trace_provider: Creates a new TracerProvider instance.

- add_span_processor: Adds a span processor to the provider. Here, it uses SimpleSpanProcessor to send traces to the specified endpoint via OTLPSpanExporter.

Instrument smolagents

SmolagentsInstrumentor().instrument(tracer_provider=trace_provider)

This line instruments the smolagents library to automatically generate traces using the configured trace_provider.

In summary, the setup above:

- Installs the necessary Python libraries.

- Configures OpenTelemetry to collect traces from smolagents.

- Sends the traces to a specified endpoint (http://0.0.0.0:6006/v1/traces) using the OTLP protocol.

- If you want to debug, you can add a ConsoleSpanExporter to print traces to the terminal.

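The traces are sent to http://0.0.0.0:6006/v1/traces, so a Phoenix server must be listening on that port (for example, one started with python -m phoenix.server.main serve). Open the Phoenix UI at http://0.0.0.0:6006 to inspect your agent’s run.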

Run the Agent

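from smolagents import (
    CodeAgent,
    ToolCallingAgent,
    ManagedAgent,
    DuckDuckGoSearchTool,
    VisitWebpageTool,
    HfApiModel,
)

# Note: ManagedAgent and HfApiModel are the names in the smolagents release used here;
# later releases may rename or remove them.
model = HfApiModel()

# Worker agent that can search the web and read pages.
agent = ToolCallingAgent(
    tools=[DuckDuckGoSearchTool(), VisitWebpageTool()],
    model=model,
)

# Wrap the worker so the manager can call it by name.
managed_agent = ManagedAgent(
    agent=agent,
    name="managed_agent",
    description="This is an agent that can do web search.",
)

# Manager agent that delegates web searches to the managed agent.
manager_agent = CodeAgent(
    tools=[],
    model=model,
    managed_agents=[managed_agent],
)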

manager_agent.run(

"If the US keeps its 2024 growth rate, how many years will it take for the GDP to double?"

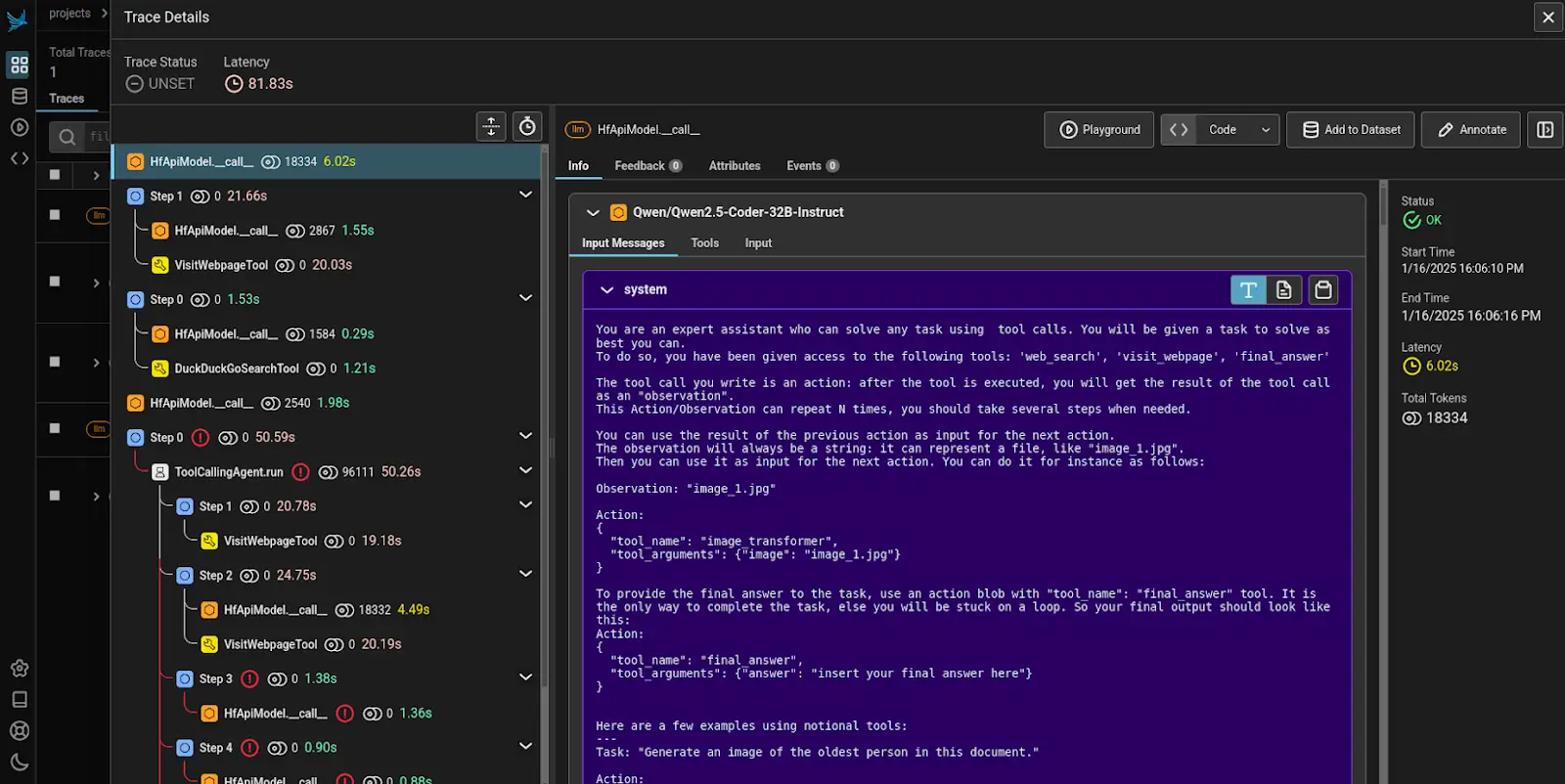

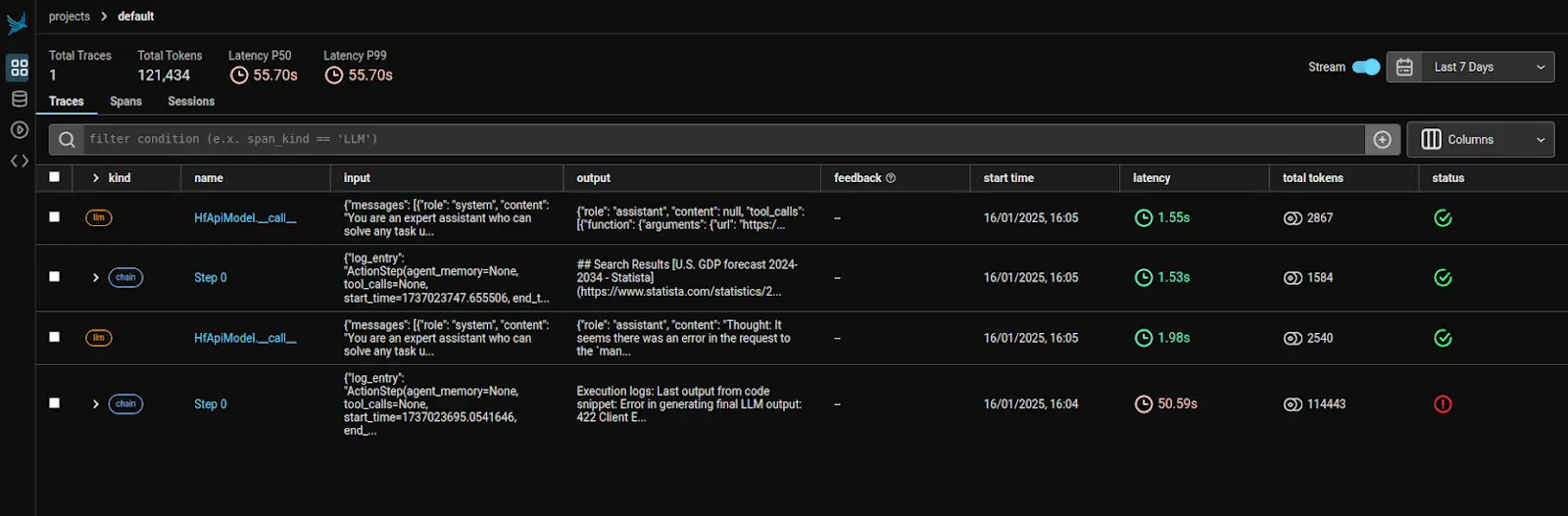

)Here’s how the logs will look:
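When you inspect a run like this, it helps to know what a correct final answer should look like. The question given to the agent reduces to a compound-growth calculation: at a constant annual growth rate r, a quantity doubles after ln(2) / ln(1 + r) years. The sketch below is a way to sanity-check the agent’s answer, not something the agent itself runs; the function name years_to_double is made up, and the 3% rate is a placeholder, not the actual 2024 US growth rate.

```python
import math


def years_to_double(annual_growth_rate: float) -> float:
    """Years for a quantity to double at a constant compound annual growth rate."""
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive for the quantity to double")
    return math.log(2) / math.log(1 + annual_growth_rate)


# 0.03 (3% per year) is a placeholder, not the actual 2024 US growth rate.
placeholder_rate = 0.03
print(f"{years_to_double(placeholder_rate):.1f} years")
```

Comparing the agent’s final answer against this formula, using the growth rate the agent reports in its trace, is a quick way to tell a reasoning error from a data-retrieval error.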

Conclusion

In conclusion, debugging AI agent runs can be complex due to their unpredictable workflows, extensive logging, and self-correcting minor errors. These challenges highlight the critical role of effective monitoring tools like OpenTelemetry, which provide the visibility and structure needed to streamline debugging, improve performance, and ensure agents operate smoothly. Try it yourself and discover how OpenTelemetry can simplify your AI agent development and debugging process, making it easier to achieve seamless, reliable operations.

Explore the Agentic AI Pioneer Program to deepen your understanding of Agentic AI and unlock its full potential. Join us on this journey to discover innovative insights and applications!