In the fast-evolving world of AI, large language models are pushing boundaries in speed, accuracy, and cost-efficiency. The recent release of Deepseek R1, an open-source model rivaling OpenAI’s o1, is a hot topic in the AI space, especially given its 27x lower cost and superior reasoning capabilities. Pair this with Qdrant’s binary quantization for efficient and quick vector searches, we can index over 1,000+ page documents. In this article, we’ll create a Bhagavad Gita AI Assistant, capable of indexing 1,000+ pages, answering complex queries in seconds using Groq, and delivering insights with domain-specific precision.

Learning Objectives

- Implement binary quantization in Qdrant for memory-efficient vector indexing.

- Understand how to build a Bhagavad Gita AI Assistant using Deepseek R1, Qdrant, and LlamaIndex for efficient text retrieval.

- Learn to optimize Bhagavad Gita AI Assistant with Groq for fast, domain-specific query responses and large-scale document indexing.

- Build a RAG pipeline using LlamaIndex and FastEmbed local embeddings to process 1,000+ pages of the Bhagavad Gita.

- Integrate Deepseek R1 from Groq’s inferencing for real-time, low-latency responses.

- Develop a Streamlit UI to showcase AI-powered insights with thinking transparency.

This article was published as a part of the Data Science Blogathon.

Table of contents

Deepseek R1 vs OpenAI o1

Deepseek R1 challenges OpenAI’s dominance with 27x lower API costs and near-par performance on reasoning benchmarks. Unlike OpenAI’s o1 closed, subscription-based model ($200/month), Deepseek R1 is free, open-source, and ideal for budget-conscious projects and experimentation.

Reasoning- ARC-AGI Benchmark: [Source: ARC-AGI Deepseek]

- Deepseek: 20.5% accuracy (public), 15.8% (semi-private).

- OpenAI: 21% accuracy (public), 18% (semi-private).

From my experience so far, Deepseek does a great job with math reasoning, coding-related use cases, and context-aware prompts. However, OpenAI retains an edge in general knowledge breadth, making it preferable for fact-diverse applications.

What is Binary Quantization in Vector Databases?

Binary quantization (BQ) is Qdrant’s indexing compression technique to optimize high-dimensional vector storage and retrieval. By converting 32-bit floating-point vectors into 1-bit binary values, it slashes memory usage by 40x and accelerates search speeds dramatically.

How It Works

- Binarization: Vectors are simplified to 0s and 1s based on a threshold (e.g., values >0 become 1).

- Efficient Indexing: Qdrant’s HNSW algorithm uses these binary vectors for rapid approximate nearest neighbor (ANN) searches.

- Oversampling: To balance speed and accuracy, BQ retrieves extra candidates (e.g., 200 for a limit of 100) and re-ranks them using original vectors.

Why It Matters

- Storage: A 1536-dimension OpenAI vector shrinks from 6KB to 0.1875 KB.

- Speed: Boolean operations on 1-bit vectors execute faster, reducing latency.

- Scalability: Ideal for large datasets (1M+ vectors) with minimal recall tradeoffs.

Avoid binary quantization for low-dimension vectors (<1024), where information loss significantly impacts accuracy. Traditional scalar quantization (e.g., uint8) may suit smaller embeddings better.

Building the Bhagavad Gita Assistant

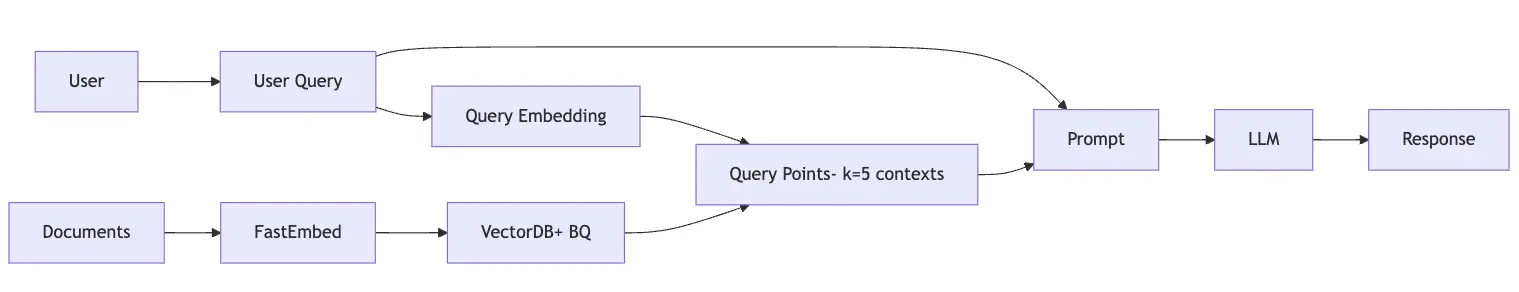

Below is the flow chart that explains on how we can build Bhagwad Gita Assistant:

Architecture Overview

- Data Ingestion: 900-page Bhagavad Gita PDF split into text chunks.

- Embedding: Qdrant FastEmbed’s text-to-vector embedding model.

- Vector DB: Qdrant with BQ stores embeddings, enabling millisecond searches.

- LLM Inference: Deepseek R1 via Groq LPUs generates context-aware responses.

- UI: Streamlit app with expandable “thinking process” visibility.

Step-by-Step Implementation

Let us now follow the steps on by one:

Step1: Installation and Initial Setup

Let’s set up the foundation of our RAG pipeline using LlamaIndex. We need to install essential packages including the core LlamaIndex library, Qdrant vector store integration, FastEmbed for embeddings, and Groq for LLM access.

Note:

- For document indexing, we will use a GPU from Colab to store the data. This is a one-time process.

- Once the data is saved, we can use the collection name to run inferences anywhere, whether on VS Code, Streamlit, or other platforms.

!pip install llama-index

!pip install llama-index-vector-stores-qdrant llama-index-embeddings-fastembed

!pip install llama-index-readers-file

!pip install llama-index-llms-groq Once the installation is done, let’s import the required modules.

import logging

import sys

import os

import qdrant_client

from qdrant_client import models

from llama_index.core import SimpleDirectoryReader

from llama_index.embeddings.fastembed import FastEmbedEmbedding

from llama_index.llms.groq import Groq # deep seek r1 implementationStep2: Document Processing and Embedding

Here, we handle the crucial task of converting raw text into vector representations. The SimpleDirectoryReader loads documents from a specified folder.

Create a folder, i.e., a data directory, and add all your documents inside it. In our case, we downloaded the Bhagavad Gita document and saved it in the data folder.

You can download the ~900-page Bhagavad Gita document here: iskconmangaluru

data = SimpleDirectoryReader("data").load_data()

texts = [doc.text for doc in data]

embeddings = []

BATCH_SIZE = 50Qdrant’s FastEmbed is a lightweight, fast Python library designed for efficient embedding generation. It supports popular text models and utilizes quantized model weights along with the ONNX Runtime for inference, ensuring high performance without heavy dependencies.

To convert the text chunks into embeddings, we will use Qdrant’s FastEmbed. We process these in batches of 50 documents to manage memory efficiently.

embed_model = FastEmbedEmbedding(model_name="thenlper/gte-large")

for page in range(0, len(texts), BATCH_SIZE):

page_content = texts[page:page + BATCH_SIZE]

response = embed_model.get_text_embedding_batch(page_content)

embeddings.extend(response)Step3: Qdrant Setup with Binary Quantization

Time to configure Qdrant client, our vector database, with optimized settings for performance. We create a collection named “bhagavad-gita” with specific vector parameters and enable binary quantization for efficient storage and retrieval.

There are three ways to use the Qdrant client:

- In-Memory Mode: Using location=”:memory:”, which creates a temporary instance that runs only once.

- Localhost: Using location=”localhost”, which requires running a Docker instance. You can follow the setup guide here: Qdrant Quickstart.

- Cloud Storage: Storing collections in the cloud. To do this, create a new cluster, provide a cluster name, and generate an API key. Copy the key and retrieve the URL from the curl command.

Note the collection name needs to be unique, after every data change this needs to be changed as well.

collection_name = "bhagavad-gita"

client = qdrant_client.QdrantClient(

#location=":memory:",

url = "QDRANT_URL", # replace QDRANT_URL with your endpoint

api_key = "QDRANT_API_KEY", # replace QDRANT_API_KEY with your API keys

prefer_grpc=True

)

We first check if a collection with the specified collection_name exists in Qdrant. If it doesn’t, only then we create a new collection configured to store 1,024-dimensional vectors and use cosine similarity for distance measurement.

We enable on-disk storage for the original vectors and apply binary quantization, which compresses the vectors to reduce memory usage and enhance search speed. The always_ram parameter ensures that the quantized vectors are kept in RAM for faster access.

if not client.collection_exists(collection_name=collection_name):

client.create_collection(

collection_name=collection_name,

vectors_config=models.VectorParams(size=1024,

distance=models.Distance.COSINE,

on_disk=True),

quantization_config=models.BinaryQuantization(

binary=models.BinaryQuantizationConfig(

always_ram=True,

),

),

)

else:

print("Collection already exists")Step4: Index the document

The indexing process uploads our processed documents and their embeddings to Qdrant in batches. Each document is stored alongside its vector representation, creating a searchable knowledge base.

The GPU will be used at this stage, and depending on the data size, this step may take a few minutes.

for idx in range(0, len(texts), BATCH_SIZE):

docs = texts[idx:idx + BATCH_SIZE]

embeds = embeddings[idx:idx + BATCH_SIZE]

client.upload_collection(collection_name=collection_name,

vectors=embeds,

payload=[{"context": context} for context in docs])

client.update_collection(collection_name= collection_name,

optimizer_config=models.OptimizersConfigDiff(indexing_threshold=20000)) Step5: RAG Pipeline with Deepseek R1

Process-1: R- Retrieve relevant document

The search function takes a user query, converts it to an embedding, and retrieves the most relevant documents from Qdrant based on cosine similarity. We demonstrate this with a sample query about the Bhagavad-gītā, showing how to access and print the retrieved context.

def search(query,k=5):

# query = user prompt

query_embedding = embed_model.get_query_embedding(query)

result = client.query_points(

collection_name = collection_name,

query=query_embedding,

limit = k

)

return result

relevant_docs = search("In Bhagavad-gītā who is the person devoted to?")

print(relevant_docs.points[4].payload['context'])Process-2: A- Augmenting prompt

For RAG it’s important to define the system’s interaction template using ChatPromptTemplate. The template creates a specialized assistant knowledgeable in Bhagavad-gita, capable of understanding multiple languages (English, Hindi, Sanskrit).

It includes structured formatting for context injection and query handling, with clear instructions for handling out-of-context questions.

from llama_index.core import ChatPromptTemplate

from llama_index.core.llms import ChatMessage, MessageRole

message_templates = [

ChatMessage(

content="""

You are an expert ancient assistant who is well versed in Bhagavad-gita.

You are Multilingual, you understand English, Hindi and Sanskrit.

Always structure your response in this format:

<think>

[Your step-by-step thinking process here]

</think>

[Your final answer here]

""",

role=MessageRole.SYSTEM),

ChatMessage(

content="""

We have provided context information below.

{context_str}

---------------------

Given this information, please answer the question: {query}

---------------------

If the question is not from the provided context, say `I don't know. Not enough information received.`

""",

role=MessageRole.USER,

),

]Process-3: G- Generating the response

The final pipeline brings everything together in a cohesive RAG system. It follows the Retrieve-Augment-Generate pattern: retrieving relevant documents, augmenting them with our specialized prompt template, and generating responses using the LLM. Here for LLM we will use Deepseek R-1 distill Llama 70 B hosted on Groq, get your keys from here: Groq Console.

os.environ['GROQ_API_KEY'] = "GROQ_API_KEY" # replace with your keys

llm = Groq(model="deepseek-r1-distill-llama-70b")

def pipeline(query):

# R - Retriver

relevant_documents = search(query)

context = [doc.payload['context'] for doc in relevant_documents.points]

context = "\n".join(context)

# A - Augment

chat_template = ChatPromptTemplate(message_templates=message_templates)

# G - Generate

response = llm.complete(

chat_template.format(

context_str=context,

query=query)

)

return response

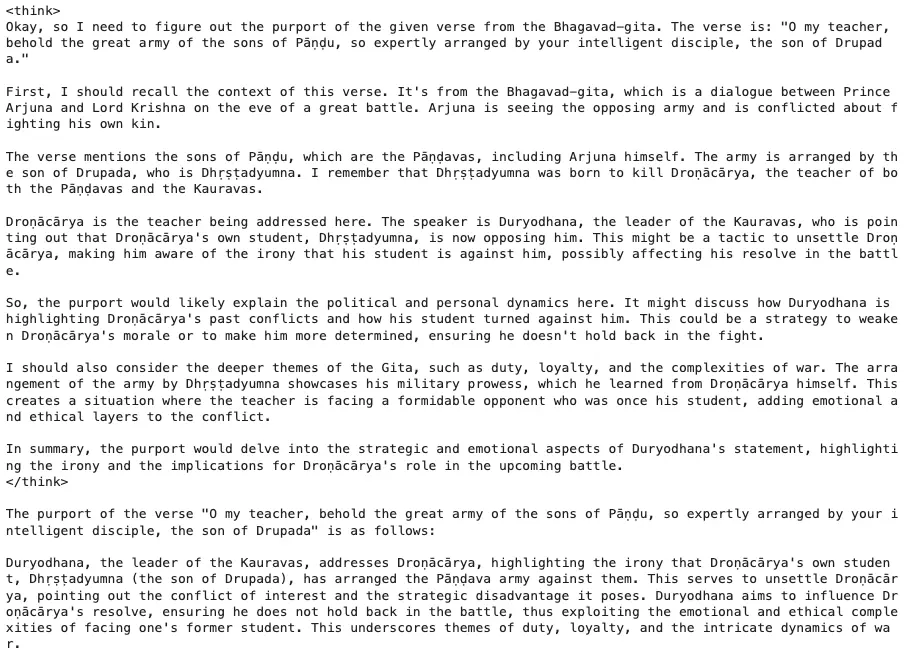

print(pipeline("""what is the PURPORT of O my teacher, behold the great army of the sons of Pāṇḍu, so

expertly arranged by your intelligent disciple, the son of Drupada."""))Output: (Syntax: <think> reasoning </think> response)

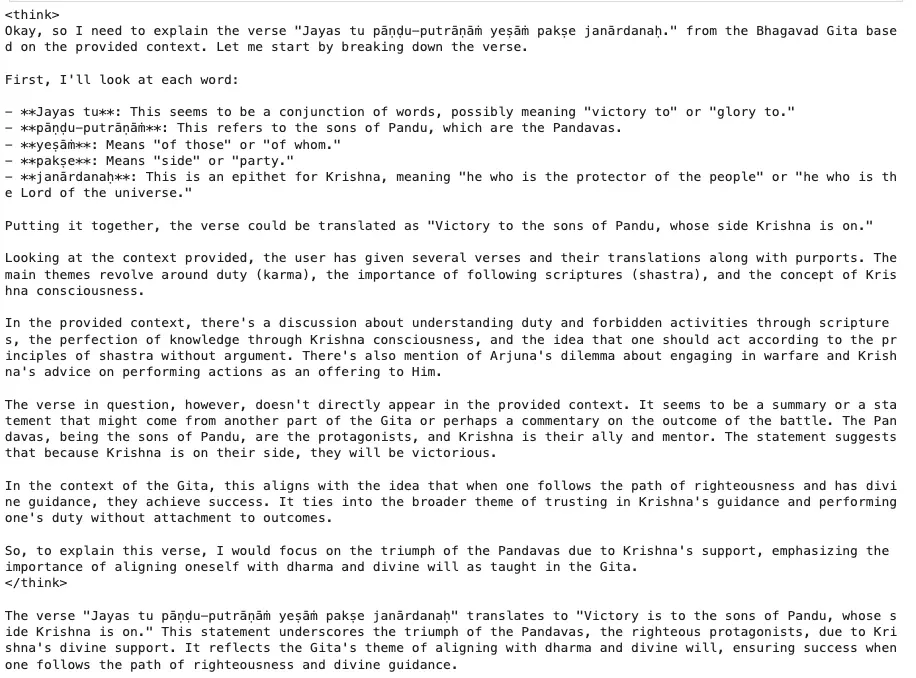

print(pipeline("""

Jayas tu pāṇḍu-putrāṇāṁ yeṣāṁ pakṣe janārdanaḥ.

explain this gita from translation

"""))

Now what if you need to use this application again? Are we supposed to undergo all the steps again?

The answer is no.

Step6: Saved Index Inference

There is not much difference in what you have already written. We will reuse the same search and pipeline function along with the collection name that we need to run the query_points.

client = qdrant_client.QdrantClient(

url= "QDRANT_URL",

api_key = "QDRANT_API_KEY",

prefer_grpc = True

)

# the search and pipeline code remain the same.

def search(query, client, embed_model, k=5):

collection_name = "bhagavad-gita"

query_embedding = embed_model.get_query_embedding(query)

result = client.query_points(

collection_name=collection_name,

query=query_embedding,

limit=k

)

return result

def pipeline(query, embed_model, llm, client):

# R - Retriever

relevant_documents = search(query, client, embed_model)

context = [doc.payload['context'] for doc in relevant_documents.points]

context = "\n".join(context)

# A - Augment

chat_template = ChatPromptTemplate(message_templates=message_templates)

# G - Generate

response = llm.complete(

chat_template.format(

context_str=context,

query=query)

)

return responseWe will use the same above two functions and message_template in the Streamlit app.py.

Step7: Streamlit UI

In Streamlit after every user question, the state is refreshed. To avoid refreshing the entire page again, we will define a few initialization steps under Streamlit cache_resource.

Remember when the user enters the question, the FastEmbed will download the model weights just once, the same goes for Groq and Qdrant instantiation.

import streamlit as st

from time import sleep

import qdrant_client

from qdrant_client import models

from llama_index.core import ChatPromptTemplate

from llama_index.core.llms import ChatMessage, MessageRole

from llama_index.embeddings.fastembed import FastEmbedEmbedding

from llama_index.llms.groq import Groq

from dotenv import load_dotenv

import os

load_dotenv()

@st.cache_resource

def initialize_models():

embed_model = FastEmbedEmbedding(model_name="thenlper/gte-large")

llm = Groq(model="deepseek-r1-distill-llama-70b")

client = qdrant_client.QdrantClient(

url=os.getenv("QDRANT_URL"),

api_key=os.getenv("QDRANT_API_KEY"),

prefer_grpc=True

)

return embed_model, llm, client

st.title("🕉️ Bhagavad Gita Assistant")

# this will run only once, and be saved inside the cache

embed_model, llm, client = initialize_models() If you noticed the response output, the format is <think> reasoning </think> response.

On the UI, I want to keep the reasoning under the Streamlit expander, to retrieve the reasoning part, let’s use string indexing to extract the reasoning and the actual response.

def extract_thinking_and_answer(response_text):

"""Extract thinking process and final answer from response"""

try:

thinking = response_text[response_text.find("<think>") + 7:response_text.find("</think>")].strip()

answer = response_text[response_text.find("</think>") + 8:].strip()

return thinking, answer

except:

return "", response_textChatbot Component

Initializes a messages history in Streamlit’s session state. A “Clear Chat” button in the sidebar allows users to reset this history.

Iterates through stored messages and displays them in a chat-like interface. For assistant responses, it separates the thinking process (shown in an expandable section) from the actual answer using the extract_thinking_and_answer function.

The remaining piece of code is a standard format to define the chatbot component in Streamlit i.e., input handling that creates an input field for user questions. When a question is submitted, it’s displayed and added to the message history. Now it processes the user’s question through the RAG pipeline while showing a loading spinner. The response is split into thinking process and answer components.

def main():

if "messages" not in st.session_state:

st.session_state.messages = []

with st.sidebar:

if st.button("Clear Chat"):

st.session_state.messages = []

st.rerun()

# Display chat messages

for message in st.session_state.messages:

with st.chat_message(message["role"]):

if message["role"] == "assistant":

thinking, answer = extract_thinking_and_answer(message["content"])

with st.expander("Show thinking process"):

st.markdown(thinking)

st.markdown(answer)

else:

st.markdown(message["content"])

# Chat input

if prompt := st.chat_input("Ask your question about the Bhagavad Gita..."):

# Display user message

st.chat_message("user").markdown(prompt)

st.session_state.messages.append({"role": "user", "content": prompt})

# Generate and display response

with st.chat_message("assistant"):

message_placeholder = st.empty()

with st.spinner("Thinking..."):

full_response = pipeline(prompt, embed_model, llm, client)

thinking, answer = extract_thinking_and_answer(full_response.text)

with st.expander("Show thinking process"):

st.markdown(thinking)

response = ""

for chunk in answer.split():

response += chunk + " "

message_placeholder.markdown(response + "▌")

sleep(0.05)

message_placeholder.markdown(answer)

# Add assistant response to history

st.session_state.messages.append({"role": "assistant", "content": full_response.text})

if __name__ == "__main__":

main()Important Links

- You can find the full code

- Alternative Bhagavad Gita PDF- Download

- Replace the “<replace-api-key>” placeholder with your keys.

Conclusion

By combining Deepseek R1’s reasoning, Qdrant’s binary quantization, and LlamaIndex’s RAG pipeline, we’ve built an AI assistant that delivers sub-2-second responses on 1,000+ pages. This project underscores how domain-specific LLMs and optimized vector databases can democratize access to ancient texts while maintaining cost efficiency. As open-source models continue to evolve, the possibilities for niche AI applications are limitless.

Key Takeaways

- Deepseek R1 rivals OpenAI o1 in reasoning at 1/27th the cost, ideal for domain-specific tasks like scripture analysis, while OpenAI suits broader knowledge needs.

- Understanding RAG Pipeline Implementation with demonstrated code examples for document processing, embedding generation, and vector storage using LlamaIndex and Qdrant.

- Efficient Vector Storage optimization through Binary Quantization in Qdrant, enabling processing of large document collections while maintaining performance and accuracy.

- Structured Prompt Engineering implementation with clear templates for handling multilingual queries (English, Hindi, Sanskrit) and managing out-of-context questions effectively.

- Interactive UI using Streamlit, to inference the application once stored in the vector database.

Frequently Asked Questions

A. Minimal impact on recall! Qdrant’s oversampling re-ranks top candidates using original vectors, maintaining accuracy while boosting speed 40x and slashing memory usage by 97%.

A. Yes! The RAG pipeline uses FastEmbed’s embeddings and Deepseek R1’s language flexibility. Custom prompts guide responses in English, Hindi, or Sanskrit. Whereas you can use the embedding model that can understand Hindi tokens, in our case the token used understand English and Hindi text.

A. Deepseek R1 offers 27x lower API costs, comparable reasoning accuracy (20.5% vs o1’s 21%), and superior coding/domain-specific performance. It’s ideal for specialized tasks like scripture analysis where cost and focused expertise matter.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.