Digital documents have long posed a dual challenge for human readers and automated systems alike: preserving rich structure while converting content into machine-processable formats. Traditional methods, whether they rely on complex ensemble pipelines or massive foundation models, often struggle to balance accuracy with computational efficiency. SmolDocling addresses this with an ultra-compact 256M-parameter vision-language model that performs end-to-end document conversion both accurately and quickly.


The Challenge of Document Conversion

For decades, converting complex layouts, ranging from business documents to academic papers, into structured representations has been a difficult task. Common issues include:

- Layout Variability: Documents present a wide array of layouts and styles.

- Opaque Formats: Formats like PDF are optimized for printing rather than semantic parsing, obscuring the underlying structure.

- Resource Demands: Traditional large-scale models or ensemble solutions require extensive computational resources and intricate tuning.

These challenges have prompted extensive research, yet a solution that is both efficient and accurate remains hard to find.

Introducing SmolDocling

SmolDocling addresses these hurdles head-on by leveraging a unified approach:

- End-to-End Conversion: Instead of piecing together multiple specialized models, SmolDocling processes entire document pages in one go.

- Compact yet Powerful: With just 256M parameters, it delivers performance comparable to models up to 27 times larger.

- Robust Multi-Modal Capabilities: Whether dealing with code listings, tables, equations, or complex charts, SmolDocling adapts seamlessly across diverse document types.

At its core, the model introduces a new markup format called DocTags: a universal representation that captures each element's content, structure, and spatial context.

Unpacking DocTags

DocTags change the way document elements are represented:

- Structured Vocabulary: Inspired by earlier work like OTSL, DocTags use XML-style tags to explicitly differentiate between text, images, tables, code, and more.

- Spatial Awareness: Each element is annotated with precise bounding box coordinates, ensuring that layout context is preserved.

- Unified Representation: Whether processing a full-page document or an isolated element (like a cropped table), the format remains consistent, boosting the model’s ability to learn and generalize.
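To make the spatial annotations concrete, here is a minimal sketch that pulls top-level elements and their bounding boxes out of a DocTags string. It assumes that each element's opening tag is immediately followed by four <loc_N> tokens (x0, y0, x1, y1) and that these values sit on a 0 to 500 normalized grid; the grid size, the sample string, and the parse_elements helper are all assumptions for illustration. For real use, the docling_core classes used in the code examples later in this article do this parsing for you.

```python
import re
from dataclasses import dataclass

# Assumption: <loc_N> values live on a 0..500 normalized page grid.
GRID = 500

# An element tag immediately followed by four location tokens, then its body
# up to the matching closing tag. Only top-level elements are returned;
# nested elements stay inside their parent's body.
ELEMENT_RE = re.compile(
    r"<(?P<tag>[a-z_0-9]+)>"
    r"<loc_(?P<x0>\d+)><loc_(?P<y0>\d+)><loc_(?P<x1>\d+)><loc_(?P<y1>\d+)>"
    r"(?P<body>.*?)</(?P=tag)>",
    re.DOTALL,
)


@dataclass
class Element:
    tag: str
    bbox: tuple  # (x0, y0, x1, y1) in page pixels
    text: str


def parse_elements(doctags: str, page_width: int, page_height: int) -> list:
    """Return Element objects with bounding boxes scaled to pixel units."""
    sx, sy = page_width / GRID, page_height / GRID
    elements = []
    for m in ELEMENT_RE.finditer(doctags):
        bbox = (int(m["x0"]) * sx, int(m["y0"]) * sy,
                int(m["x1"]) * sx, int(m["y1"]) * sy)
        text = re.sub(r"<[^>]+>", "", m["body"]).strip()  # drop inner tags
        elements.append(Element(m["tag"], bbox, text))
    return elements


# Hypothetical DocTags snippet, for illustration only.
sample = (
    "<doctag>"
    "<section_header_level_1><loc_50><loc_40><loc_450><loc_60>"
    "Introduction</section_header_level_1>"
    "<text><loc_50><loc_70><loc_450><loc_120>"
    "SmolDocling converts pages.</text>"
    "</doctag>"
)
for el in parse_elements(sample, page_width=1000, page_height=1000):
    print(el.tag, el.bbox, el.text)
```

Using a backreference for the closing tag keeps the pattern short while still pairing each element with its own end tag.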

Tags that frequently appear in SmolDocling output include:

- <picture> – Represents an image or visual content in the document.

- <flow_chart> – A classification tag placed inside <picture> that identifies the image as a flow chart.

- <caption> – Provides a description or annotation for an image or diagram.

- <otsl> – Wraps a table encoded with the OTSL (Optimized Table Structure Language) vocabulary.

- <loc_XX> – A location token; four of them in sequence give an element's bounding box on the page.

- <ched> – Marks a column header cell within a table.

- <fcel> – Marks a full (non-empty) table cell.

- <nl> – Marks a new line; inside an <otsl> table it closes the current row.

- <section_header_level_1> – Marks a major section heading in the document.

- <text> – Defines general text content within the document.

- <unordered_list> – Represents a bulleted or unordered list.

- <list_item> – Specifies an individual item within a list.

- <code> – Contains programming or script-related content, formatted for readability.

This explicit, structured format minimizes the ambiguity that arises when converting directly to formats such as HTML or Markdown.
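As an illustration of how the table tags fit together, here is a minimal decoder that turns an <otsl> block into rows of cell strings. It is a simplified sketch: it treats <ched>, <rhed>, <fcel>, and <ecel> as cell openers and <nl> as the row terminator, and it ignores merged-cell tokens and location tokens, so spanning cells are not reconstructed. <rhed> (row header) and <ecel> (empty cell) are assumed OTSL/DocTags tokens not listed above, and the sample table string is hypothetical.

```python
import re

# Tokens that open a new cell in this simplified decoder.
CELL_TAGS = {"ched", "rhed", "fcel", "ecel"}


def decode_otsl(doctags: str) -> list:
    """Decode the first <otsl>...</otsl> block into a list of rows.

    Merged-cell tokens (e.g. lcel/ucel/xcel) and <loc_N> tokens are skipped,
    so spanning cells are not reconstructed.
    """
    match = re.search(r"<otsl>(.*?)</otsl>", doctags, re.DOTALL)
    if match is None:
        return []
    rows, row = [], []
    # Each token is either a tag name (opening or closing) or a run of text.
    for tag, text in re.findall(r"</?([a-z_0-9]+)>|([^<]+)", match.group(1)):
        if tag in CELL_TAGS:
            row.append("")           # open a new, possibly empty, cell
        elif tag == "nl":
            rows.append(row)         # <nl> closes the current row
            row = []
        elif text and row:
            row[-1] += text.strip()  # text belongs to the most recent cell
    if row:
        rows.append(row)
    return rows


# Hypothetical table in DocTags form.
sample = (
    "<otsl><loc_50><loc_100><loc_450><loc_180>"
    "<ched>Method<ched>Model Size<nl>"
    "<fcel>GOT<fcel>580M<nl>"
    "<fcel>SmolDocling<ecel><nl></otsl>"
)
for r in decode_otsl(sample):
    print(r)
```

Because every cell is opened by a tag and every row is closed by <nl>, the decoder only needs to track the current row; empty cells fall out naturally as empty strings.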

Deep Dive: Dataset Training and Model Architecture

Dataset Training

A key pillar of SmolDocling’s success is its rich, diverse training data:

- Pre-training Data:

  - DocLayNet-PT: A 1.4M-page dataset extracted from unique PDF documents sourced from CommonCrawl, Wikipedia, and business documents. It is enriched with weak annotations covering layout elements, table structures, language, topics, and figure classifications.

  - Docmatix: Adapted with a weak annotation strategy similar to that of DocLayNet-PT, this dataset adds multi-task document conversion examples.

- Task-Specific Data:

  - Layout & Structure: High-quality annotated pages from DocLayNet v2, WordScape, and synthetically generated pages from SynthDocNet ensure robust layout and table structure learning.

  - Charts, Code, and Equations: Custom-generated datasets provide extensive visual diversity. For instance, over 2.5M charts are generated using three different visualization libraries, while 9.3M rendered code snippets and 5.5M formulas provide detailed coverage of technical document elements.

- Instruction Tuning: To reinforce the recognition of different page elements and to introduce document-related features and no-code pipelines, the authors combined rule-based techniques with the Granite-3.1-2b-instruct LLM. For each sampled DocLayNet-PT page, one instruction was generated by randomly sampling layout elements from that page. These instructions included tasks such as:

  - “Perform OCR at bbox”

  - “Identify page element type at bbox”

  - “Extract all section headers from the page”

- Additionally, The Cauldron dataset is included in training to avoid catastrophic forgetting caused by the introduction of numerous document conversion datasets.
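The rule-based half of the instruction-generation step can be sketched as follows. This is a hypothetical illustration: the element dictionaries, the label name "section_header", the prompt templates, and the make_instruction helper are assumptions, and the Granite-3.1-2b-instruct step is omitted entirely.

```python
import random

# Hypothetical prompt templates modeled on the instruction types listed above.
TEMPLATES = {
    "ocr": "Perform OCR at bbox {bbox}",
    "type": "Identify page element type at bbox {bbox}",
}


def make_instruction(elements, rng):
    """Return one (instruction, expected_answer) pair for a page.

    elements: list of dicts with "label", "bbox", and "text" keys (assumed format).
    """
    headers = [e["text"] for e in elements if e["label"] == "section_header"]
    kinds = ["ocr", "type"] + (["headers"] if headers else [])
    kind = rng.choice(kinds)
    if kind == "headers":
        return "Extract all section headers from the page", headers
    element = rng.choice(elements)  # randomly sample one layout element
    prompt = TEMPLATES[kind].format(bbox=element["bbox"])
    answer = element["text"] if kind == "ocr" else element["label"]
    return prompt, answer


page = [
    {"label": "section_header", "bbox": (50, 40, 450, 60), "text": "Introduction"},
    {"label": "text", "bbox": (50, 70, 450, 120), "text": "Documents are hard to parse."},
]
print(make_instruction(page, random.Random(0)))
```

Passing an explicit random.Random instance keeps the sampling reproducible, which is convenient when regenerating a training set.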

Model Architecture of SmolDocling

SmolDocling builds upon the SmolVLM framework and incorporates several innovative techniques to ensure efficiency and effectiveness:

- Vision Encoder with SigLIP Backbone: The model uses a SigLIP base 16/512 encoder (93M parameters) combined with an aggressive pixel shuffle strategy, which compresses each 512×512 image patch into 64 visual tokens and significantly reduces the number of image hidden states.

- Enhanced Tokenization: By increasing the pixel-to-token ratio (up to 4096 pixels per token) and introducing special tokens for sub-image separation, tokenization efficiency is markedly improved. This design ensures that both full-page documents and cropped elements are processed uniformly.

- Curriculum Learning Approach: Training begins with freezing the vision encoder, focusing on aligning the language model with the new DocTags format. Once the model is familiar with the output structure, the vision encoder is unfrozen and fine-tuned along with task-specific datasets, ensuring comprehensive learning.

- Efficient Inference: With a maximum sequence length of 8,192 tokens and the ability to process up to three pages at a time, SmolDocling converts a page in about 0.35 seconds using vLLM on an A100 GPU, while occupying only 0.489 GB of VRAM.
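The token arithmetic in the first two bullets can be checked with a small space-to-depth (pixel shuffle) sketch. It assumes a shuffle ratio of 4, which is inferred from the numbers above rather than stated in this article: a 512×512 crop cut into 16×16 patches gives a 32×32 grid of 1,024 patch embeddings, and 1,024 / 64 = 16 = 4×4. The feature ordering inside each merged vector is an illustrative choice and may differ from SmolVLM's actual implementation; only the shapes matter here.

```python
def pixel_shuffle(grid, r):
    """Space-to-depth: merge each r x r block of token vectors into one vector.

    grid: H x W nested list whose entries are feature vectors (lists of floats).
    Returns an (H/r) x (W/r) grid whose vectors are r*r times longer.
    """
    h, w = len(grid), len(grid[0])
    assert h % r == 0 and w % r == 0, "grid size must be divisible by r"
    out = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            merged = []
            for di in range(r):
                for dj in range(r):
                    merged.extend(grid[i + di][j + dj])
            row.append(merged)
        out.append(row)
    return out


# SigLIP base 16/512: a 512x512 crop split into 16x16 patches -> 32x32 grid.
patches_per_side = 512 // 16
grid = [[[float(i * patches_per_side + j)] for j in range(patches_per_side)]
        for i in range(patches_per_side)]  # toy 1-dimensional features
r = 4  # assumed shuffle ratio (1024 patches / 64 tokens = 16 = 4 * 4)
shuffled = pixel_shuffle(grid, r)
num_tokens = len(shuffled) * len(shuffled[0])
print(num_tokens)               # visual tokens per 512x512 crop
print(len(shuffled[0][0]))      # feature length per token (1 * r * r)
print(512 * 512 // num_tokens)  # pixels represented by each token
```

Pixel shuffle only rearranges values, so no information is discarded: with SigLIP base's 768-dimensional patch features, each merged token would carry 768 × 16 = 12,288 values while the sequence becomes 16 times shorter.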

Comparative Analysis: SmolDocling Versus Other Models

A thorough evaluation of SmolDocling against leading vision-language models highlights its competitive edge:

Text Recognition (OCR) and Document Formatting

| Method | Model Size | Edit Distance ↓ | F1-score ↑ | Precision ↑ | Recall ↑ | BLEU ↑ | METEOR ↑ |
|---|---|---|---|---|---|---|---|
| Qwen2.5 VL [9] | 7B | 0.56 | 0.72 | 0.80 | 0.70 | 0.46 | 0.57 |
| GOT [89] | 580M | 0.61 | 0.69 | 0.71 | 0.73 | 0.48 | 0.59 |
| Nougat (base) [12] | 350M | 0.62 | 0.66 | 0.72 | 0.67 | 0.44 | 0.54 |
| SmolDocling (Ours) | 256M | 0.48 | 0.80 | 0.89 | 0.79 | 0.58 | 0.67 |

Insights: SmolDocling outperforms larger models across all key metrics in full-page transcription. The significant improvements in F1-score, precision, and recall reflect its superior capability in accurately reproducing textual elements and preserving reading order.
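For readers unfamiliar with the table's first four metric columns, the sketch below computes a normalized character-level edit distance and bag-of-words precision, recall, and F1 between a predicted and a reference transcription. The exact tokenization and normalization used in the paper's evaluation are not given here, so treat this as an approximation of those metrics rather than the benchmark's scoring code; BLEU and METEOR are omitted.

```python
from collections import Counter


def edit_distance(a: str, b: str) -> int:
    """Character-level Levenshtein distance (two-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance divided by the longer string's length (0 = identical)."""
    if not pred and not ref:
        return 0.0
    return edit_distance(pred, ref) / max(len(pred), len(ref))


def token_prf(pred: str, ref: str) -> tuple:
    """Bag-of-words precision, recall, and F1 over whitespace tokens."""
    p, r = Counter(pred.split()), Counter(ref.split())
    overlap = sum((p & r).values())
    if overlap == 0:
        return 0.0, 0.0, 0.0
    precision = overlap / sum(p.values())
    recall = overlap / sum(r.values())
    return precision, recall, 2 * precision * recall / (precision + recall)


print(normalized_edit_distance("kitten", "sitting"))
print(token_prf("the cat sat", "the cat sat down"))
```

Lower edit distance and higher precision, recall, and F1 are better, which matches the arrows in the table header.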

Specialized Tasks: Code Listings and Equations

- Code Listings: On code listing transcription, SmolDocling achieves an F1-score of 0.92 and a precision of 0.94, reflecting its ability to preserve indentation and syntax, which carry semantic meaning in code.

- Equations: In the domain of equation recognition, SmolDocling closely matches or exceeds the performance of models like Qwen2.5 VL and GOT, achieving an F1-score of 0.95 and precision of 0.96.

These results underscore SmolDocling’s ability to not only match but often surpass the performance of models that are significantly larger in size, affirming that a compact model can be both efficient and effective when built with a focused architecture and optimized training strategies.

Code Demonstration and Output Visualization

To provide a practical glimpse into how SmolDocling operates, the following section includes a sample code snippet along with an illustration of the expected output. This example demonstrates how to convert a document image into the DocTags markup format.

Example 1: Sample Code Snippet

```python
!pip install docling_core
!pip install flash-attn

import torch
from docling_core.types.doc import DoclingDocument
from docling_core.types.doc.document import DocTagsDocument
from transformers import AutoProcessor, AutoModelForVision2Seq
from transformers.image_utils import load_image
from IPython.display import display, Markdown

DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

# Initialize processor and model
processor = AutoProcessor.from_pretrained("ds4sd/SmolDocling-256M-preview")
model = AutoModelForVision2Seq.from_pretrained(
    "ds4sd/SmolDocling-256M-preview",
    torch_dtype=torch.bfloat16,
    # FlashAttention 2 requires a CUDA GPU; fall back to eager attention on CPU
    _attn_implementation="flash_attention_2" if DEVICE == "cuda" else "eager",
).to(DEVICE)

# Load image
image = load_image("https://user-images.githubusercontent.com/12294956/47312583-697cfe00-d65a-11e8-930a-e15fd67a5bb1.png")

# Create input messages
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "Convert this page to docling."},
        ],
    },
]

# Prepare inputs
prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, images=[image], return_tensors="pt")
inputs = inputs.to(DEVICE)

# Generate outputs and keep only the newly generated tokens (drop the prompt)
generated_ids = model.generate(**inputs, max_new_tokens=8192)
prompt_length = inputs.input_ids.shape[1]
trimmed_generated_ids = generated_ids[:, prompt_length:]
doctags = processor.batch_decode(
    trimmed_generated_ids,
    skip_special_tokens=False,  # keep the DocTags markup in the decoded text
)[0].lstrip()

# Populate document
doctags_doc = DocTagsDocument.from_doctags_and_image_pairs([doctags], [image])
print(doctags)

# Create a Docling document and render it as Markdown
doc = DoclingDocument(name="Document")
doc.load_from_doctags(doctags_doc)
display(Markdown(doc.export_to_markdown()))
```

Input Image

Output

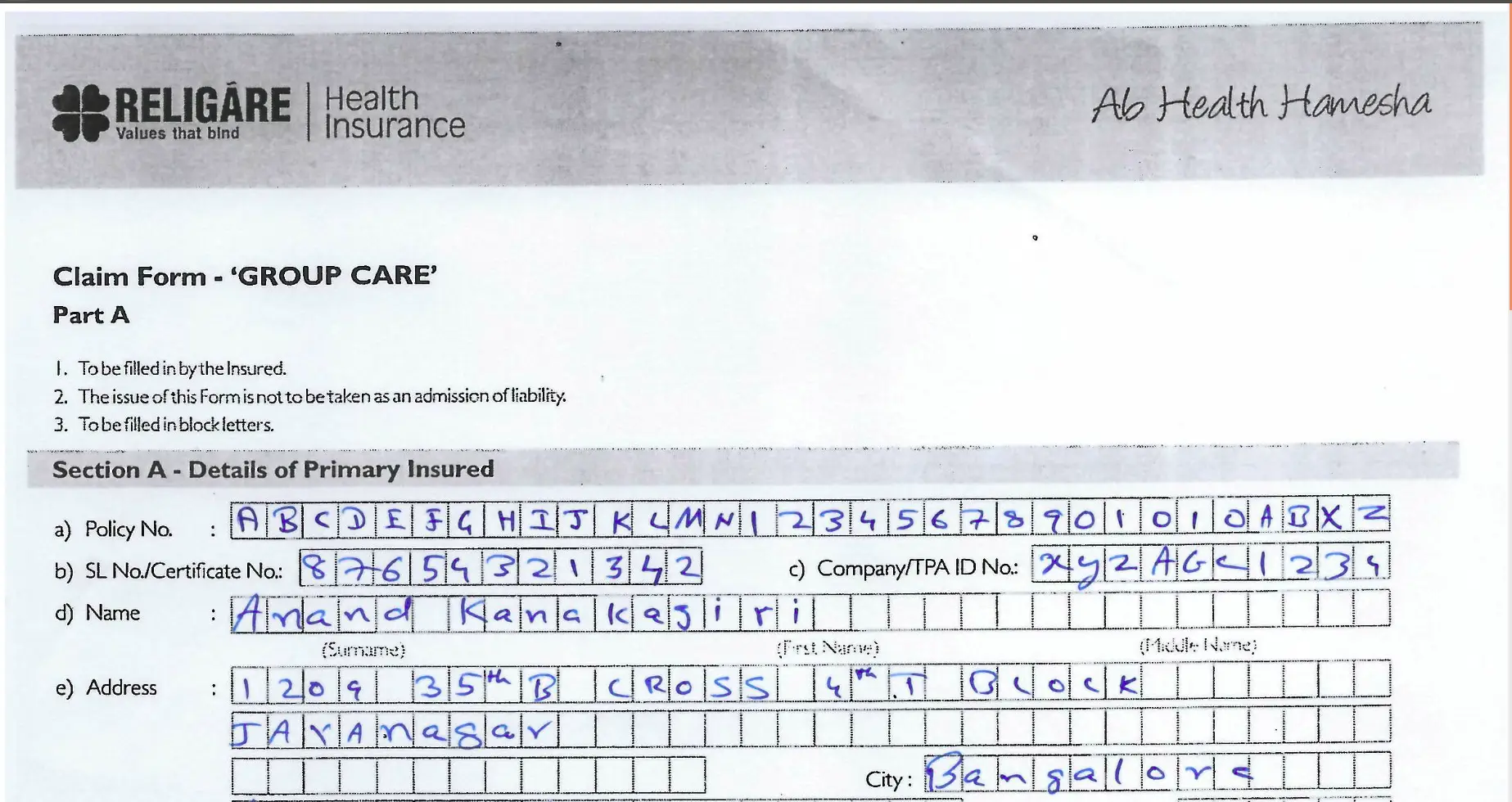

This output illustrates how document elements such as text blocks, tables, and code listings are marked with their content and spatial information, making them ready for further processing or analysis. However, the model did not convert all of the page's text into DocTags: as the output shows, it skipped the handwritten text.
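One quick way to check what the model did and did not emit is to count the element tags in the raw DocTags string, for example to see whether a handwritten region produced any text element at all. The tag_counts helper below is a hypothetical convenience, not part of docling_core; in the notebook you would pass it the doctags variable from the code above. The sample string is made up for illustration.

```python
import re
from collections import Counter


def tag_counts(doctags: str) -> Counter:
    """Count opening DocTags tags, ignoring <loc_N> position tokens."""
    tags = re.findall(r"<([a-z_0-9]+)>", doctags)  # closing tags (</...>) don't match
    return Counter(t for t in tags if not t.startswith("loc_"))


# Hypothetical DocTags output, for illustration only.
sample = (
    "<doctag><text><loc_10><loc_10><loc_400><loc_30>Printed line</text>"
    "<picture><loc_10><loc_40><loc_400><loc_200></picture></doctag>"
)
print(tag_counts(sample).most_common())
```

If a region you expected to be transcribed shows up only as a <picture> (or not at all), the model treated it as non-text.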

Example 2: Sample Code Snippet

```python
!curl -L -o image2.png https://i.imgur.com/BFN038S.png
```

The input image is a receipt; the code below extracts its text.

```python
image = load_image("./image2.png")

# Create input messages
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "Convert this page to docling."},
        ],
    },
]

# Prepare inputs
prompt1 = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs1 = processor(text=prompt1, images=[image], return_tensors="pt")
inputs1 = inputs1.to(DEVICE)

# Generate outputs
generated_ids = model.generate(**inputs1, max_new_tokens=8192)
prompt_length = inputs1.input_ids.shape[1]
trimmed_generated_ids = generated_ids[:, prompt_length:]
doctags = processor.batch_decode(
    trimmed_generated_ids,
    skip_special_tokens=False,
)[0].lstrip()

# Populate document
doctags_doc = DocTagsDocument.from_doctags_and_image_pairs([doctags], [image])
print(doctags)

# create a docling document
doc = DoclingDocument(name="Document")
doc.load_from_doctags(doctags_doc)

# export as any format
# HTML
# doc.save_as_html(output_file)
# MD
print(doc.export_to_markdown())

from IPython.display import display, Markdown
display(Markdown(doc.export_to_markdown()))
```

Output

The result is impressive: the model extracted all of the content from the receipt, a better outcome than in the first example.


Conclusion and Future Directions

SmolDocling sets a new benchmark in document conversion by showing that smaller, more efficient models can rival and even surpass their larger counterparts. Its use of DocTags and an end-to-end conversion strategy offers a compelling blueprint for the next generation of vision-language models. In the examples above, it handled the receipt well and produced acceptable but imperfect results on the other document (it skipped the handwritten text); such gaps may be a trade-off of its compact, memory-efficient design.

Key Takeaways

- Efficiency: With a compact 256M parameter architecture, SmolDocling achieves rapid page conversion with minimal computational overhead.

- Robustness: Extensive pre-training and task-specific datasets, along with a curriculum learning approach, ensure that the model generalizes well across diverse document types.

- Comparative Performance: In the reported evaluations, SmolDocling outperforms larger models on full-page OCR, matches or exceeds them on equation recognition, and transcribes code listings with high accuracy.

As the research community continues to refine techniques for element localization and multimodal understanding, SmolDocling provides a clear pathway toward more resource-efficient and versatile document processing solutions. With plans to release the accompanying datasets publicly, this work paves the way for further advancements and collaborations in the field.
