The wait is over: the Grok 3 API is out, and it’s already shaking up the world of AI with its “scary smart” reasoning, real-time web capabilities, and top-tier performance on coding and STEM benchmarks. Whether you’re a developer, researcher, or AI enthusiast, now’s a great time to get access to the Grok 3 API and explore what it can do. In this blog, you’ll learn how to access the Grok 3 API, handle your API key securely, and connect to the API using Python. We’ll then put Grok 3 through practical tests: code generation, reasoning puzzles, a multi-file project plan, and a scientific explainer.


Key Features of Grok 3

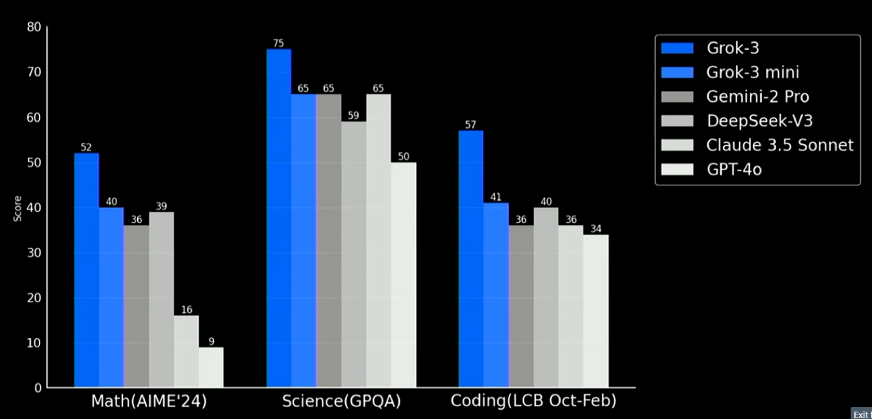

Grok 3 introduces several groundbreaking features that enhance its performance and applicability across various domains:

- Advanced Reasoning Modes: Grok 3 offers specialized reasoning modes, including “Think” for step-by-step problem-solving and “Big Brain” for tackling complex tasks. These modes allow the model to process information more deeply and provide accurate responses.

- DeepSearch Functionality: The model incorporates DeepSearch, an AI agent that scans the internet and X (formerly Twitter) in real time to generate comprehensive reports on specific topics. This feature helps keep Grok 3’s responses current and well-informed.

- Enhanced Performance Benchmarks: xAI reports that Grok 3 scored 93.3% on the 2025 American Invitational Mathematics Examination (AIME) and reached an Elo score of 1402 in the Chatbot Arena, results that point to strong performance, particularly in STEM fields.

- Real-Time Data Integration: Unlike static AI models, Grok 3 integrates real-time data from the web and X posts, ensuring its responses are up-to-date and relevant.

- Multimodal Capabilities: The model can process and generate text, images, and code, expanding its applicability across various domains.

These features position Grok 3 as a significant advancement in AI language models. It offers enhanced reasoning, real-time data processing, and a broad range of functionalities to cater to diverse user needs.


Pricing and Model Specifications

xAI has introduced multiple variants of the Grok 3 model series, each tuned for a different balance of performance and cost-efficiency. Developers, researchers, and organizations can choose the variant that fits their computational and reasoning needs.

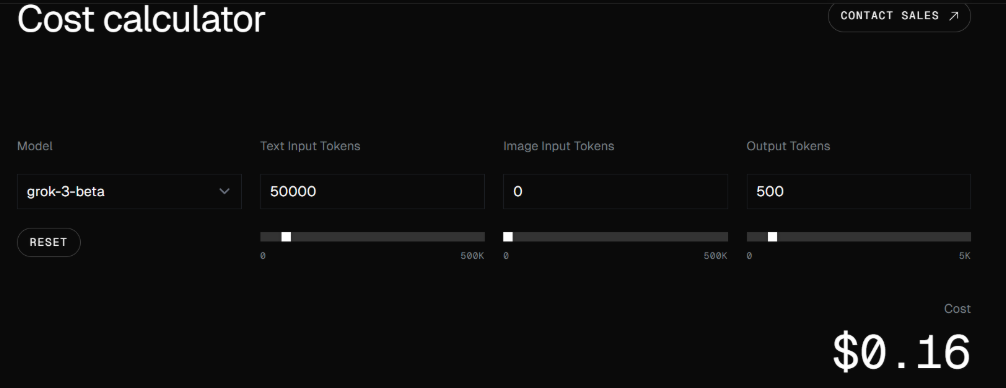

Pro Tip: Use xAI’s Cost Calculator Before You Call the API

Before you start making API calls, check out xAI’s handy cost calculator, which covers each of their models, including grok-3-beta, grok-3-fast-beta, and the mini variants.

You can find it directly at https://x.ai/api (just scroll down a bit).

You can customize:

- Text Input Tokens

- Image Input Tokens

- Output Tokens

This tool gives you a real-time cost estimate so you can plan your usage efficiently and avoid unexpected billing. For example, 50,000 text input tokens and 500 output tokens on grok-3-beta will cost you just $0.16 (as seen in the previous screenshot).

Smart dev tip: Use this calculator often to optimize your API strategy, especially when scaling or handling large payloads.
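The calculator’s arithmetic is simple enough to reproduce in a script when you want estimates alongside your code. The sketch below assumes grok-3-beta list prices of $3 per million input tokens and $15 per million output tokens (an assumption; check https://x.ai/api for current rates) and ignores image input tokens. With those assumed prices, it reproduces the $0.16 example above.

```python
# Assumed grok-3-beta prices in USD per million tokens; verify against https://x.ai/api.
PRICES_PER_MILLION = {
    "grok-3-beta": {"input": 3.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a request from text token counts (image tokens not included)."""
    prices = PRICES_PER_MILLION[model]
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


cost = estimate_cost("grok-3-beta", input_tokens=50_000, output_tokens=500)
print(f"${cost:.4f}")  # $0.1575, which the calculator rounds to $0.16
```

To cover other variants, add their per-million prices from the pricing page to `PRICES_PER_MILLION`.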

How to Access the API?

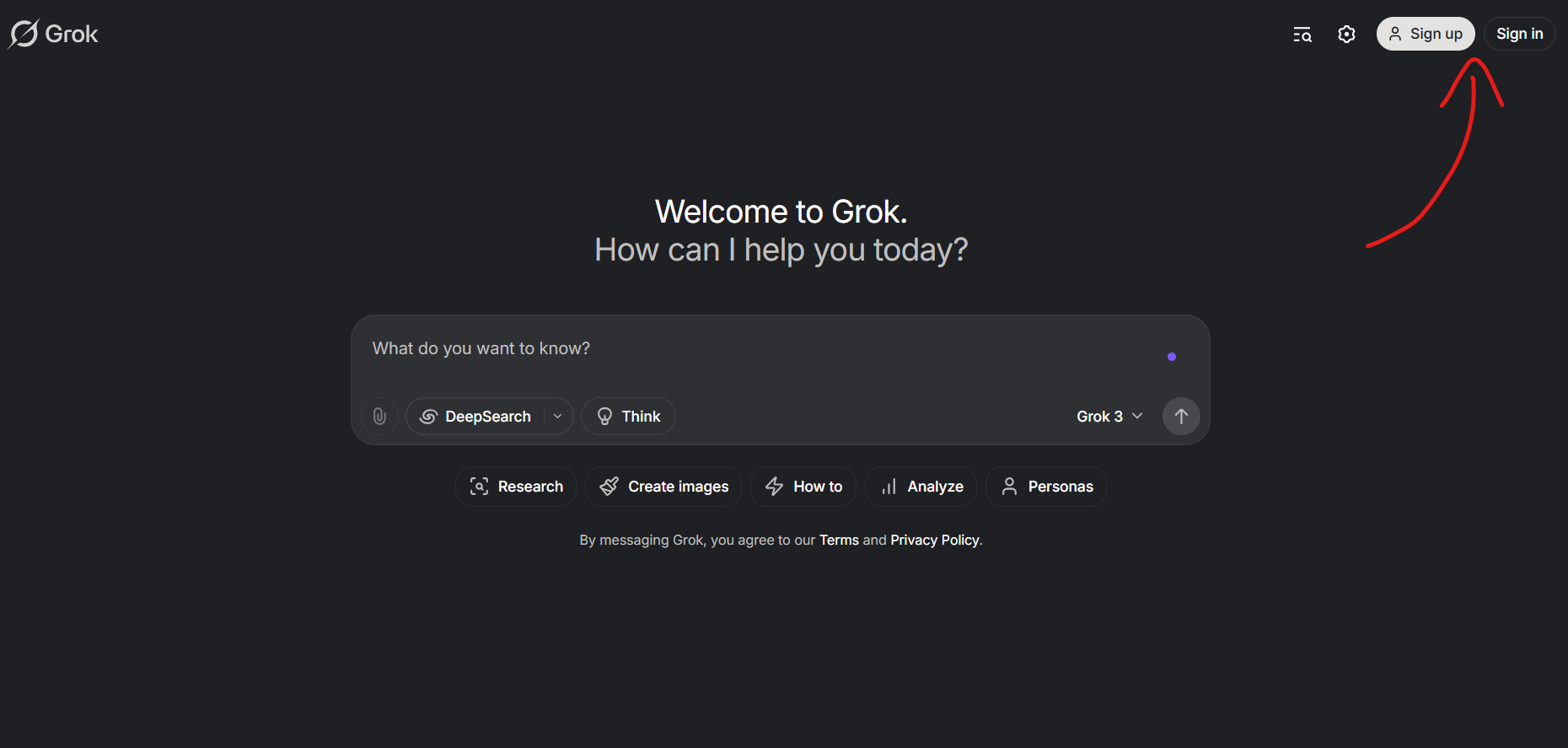

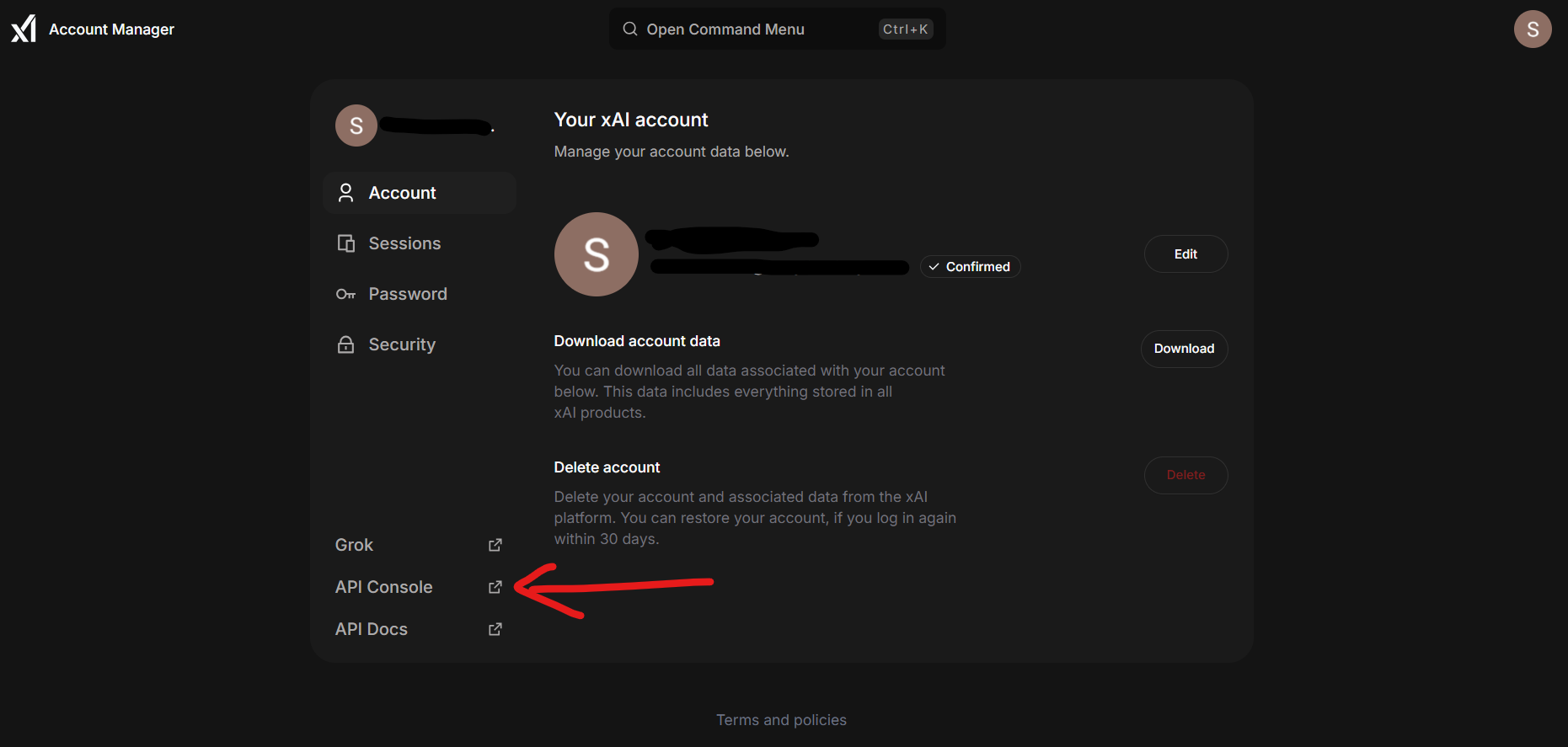

1. Visit grok.com and log in with your account credentials.

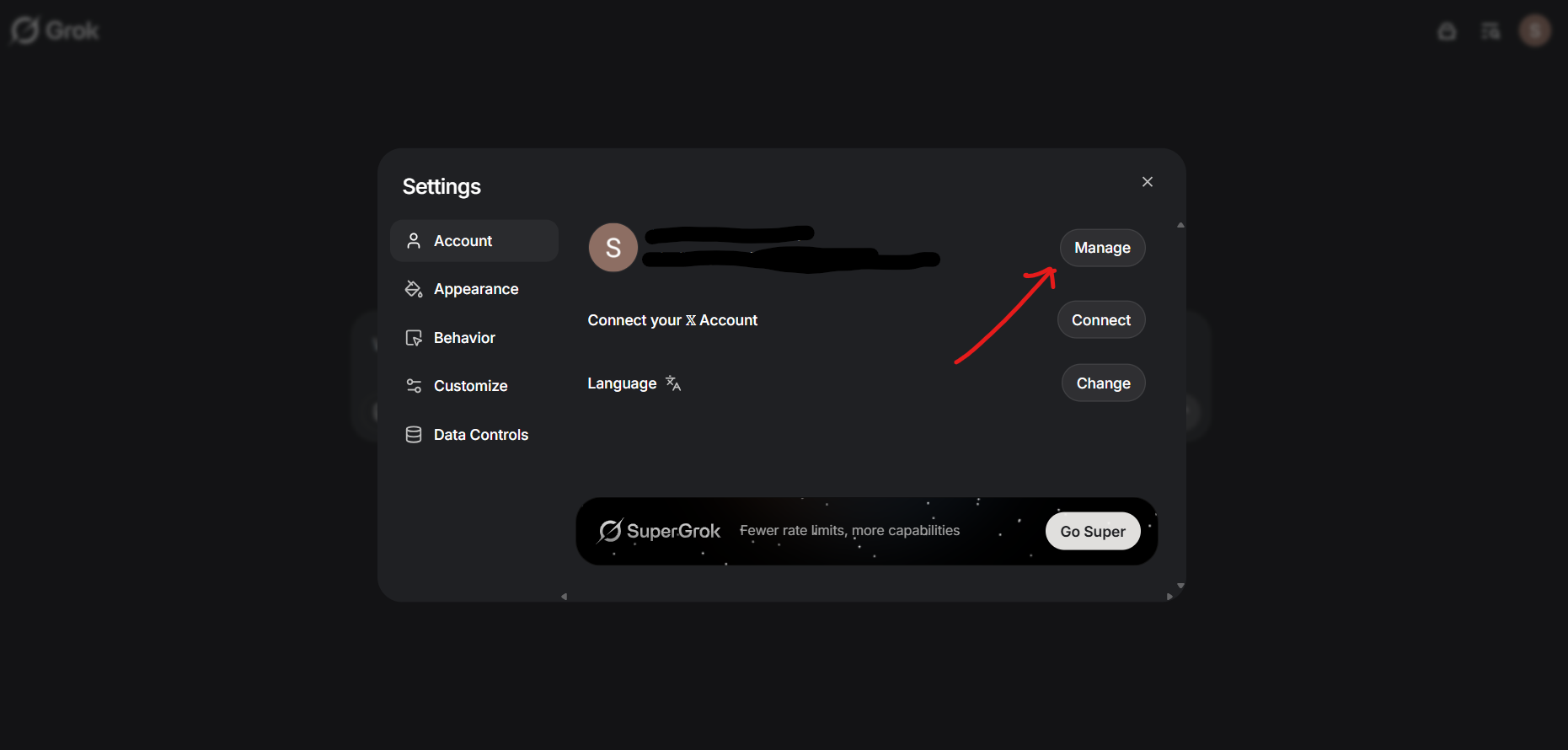

2. Click on your profile avatar in the top-right corner, choose Settings, and then select Manage. You’ll be redirected to the xAI Accounts page.

3. On the xAI Accounts page, navigate to the API Console.

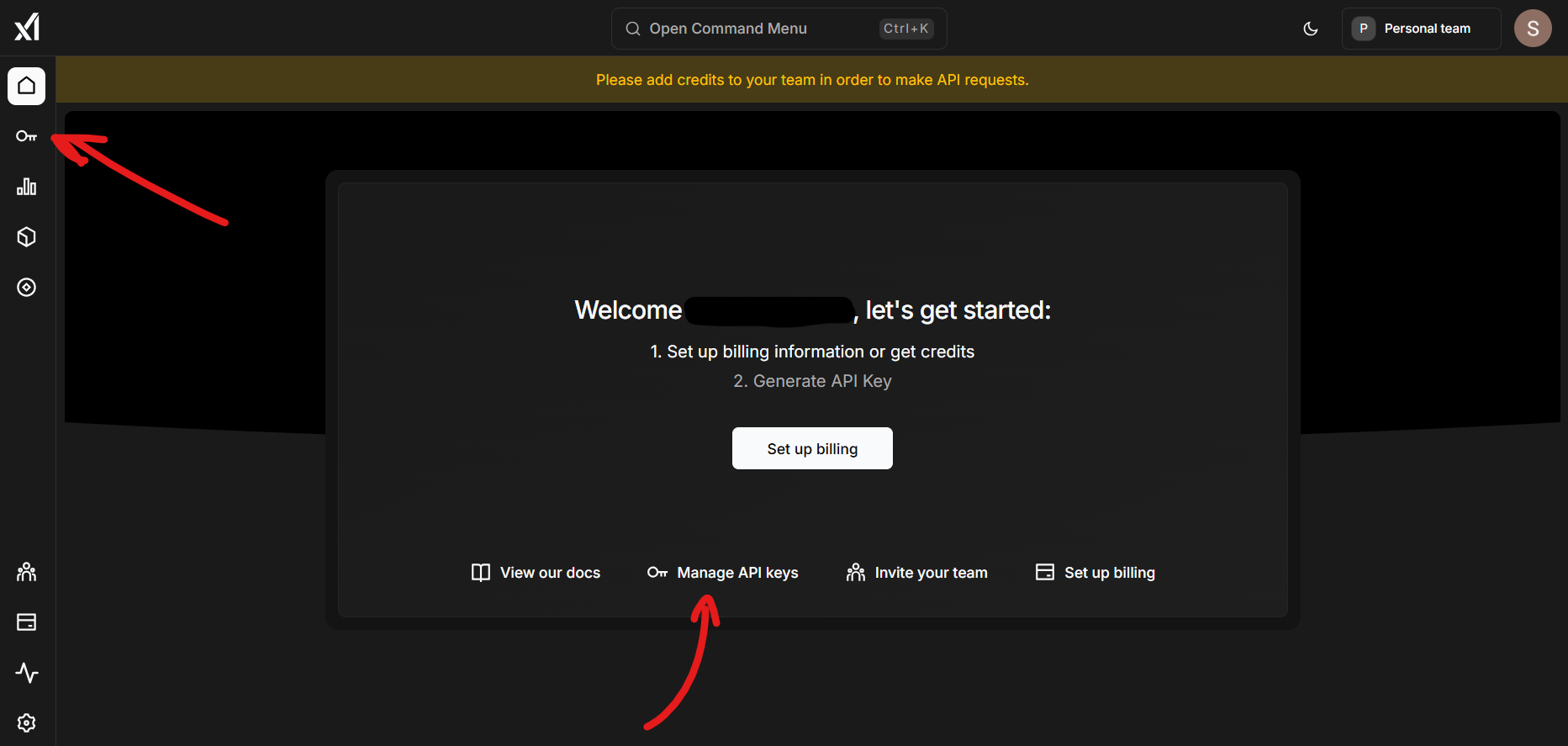

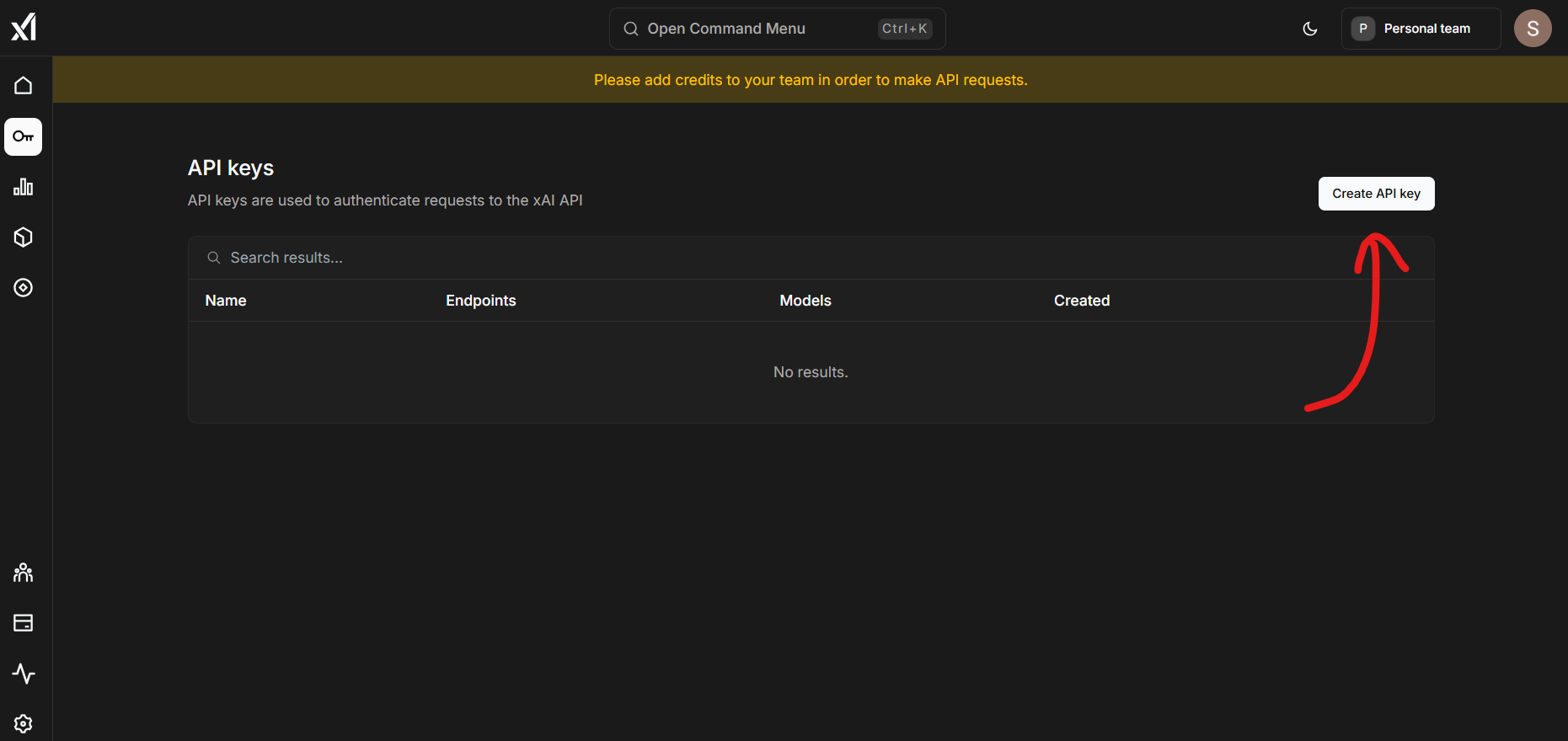

4. In the left-hand sidebar of the API Console, click the key icon to view and copy your Grok API key. 🙂

Voilà, there’s your API key! Store it carefully and securely, since anyone who has it can make requests billed to your account.
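Rather than pasting the key into your code, a safer habit is to export it as an environment variable (for example, `export GROK_API_KEY="xai-..."` in your shell) and read it at runtime. Here is a minimal sketch that uses the same `GROK_API_KEY` variable name as the code in this article; `load_api_key` is a hypothetical helper, not part of any SDK.

```python
import os


def load_api_key(env_var: str = "GROK_API_KEY") -> str:
    """Read the xAI API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it in your shell before running this script."
        )
    return key
```

You can then pass `load_api_key()` as the `api_key` argument when creating the client. In a notebook, `getpass.getpass()` is another option that keeps the key out of the saved cells.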

Implementation of Grok 3

Basic Implementations

Let’s first check that the Grok 3 model responds, using this code snippet based on the example in the xAI documentation.

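```python
# Notebook cell: install the OpenAI SDK (xAI's API is OpenAI-compatible)
!pip install openai

import os

from IPython.display import Markdown
from openai import OpenAI

os.environ["GROK_API_KEY"] = "xai-..."  # your own API key

# Point the OpenAI client at xAI's endpoint
client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)
```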

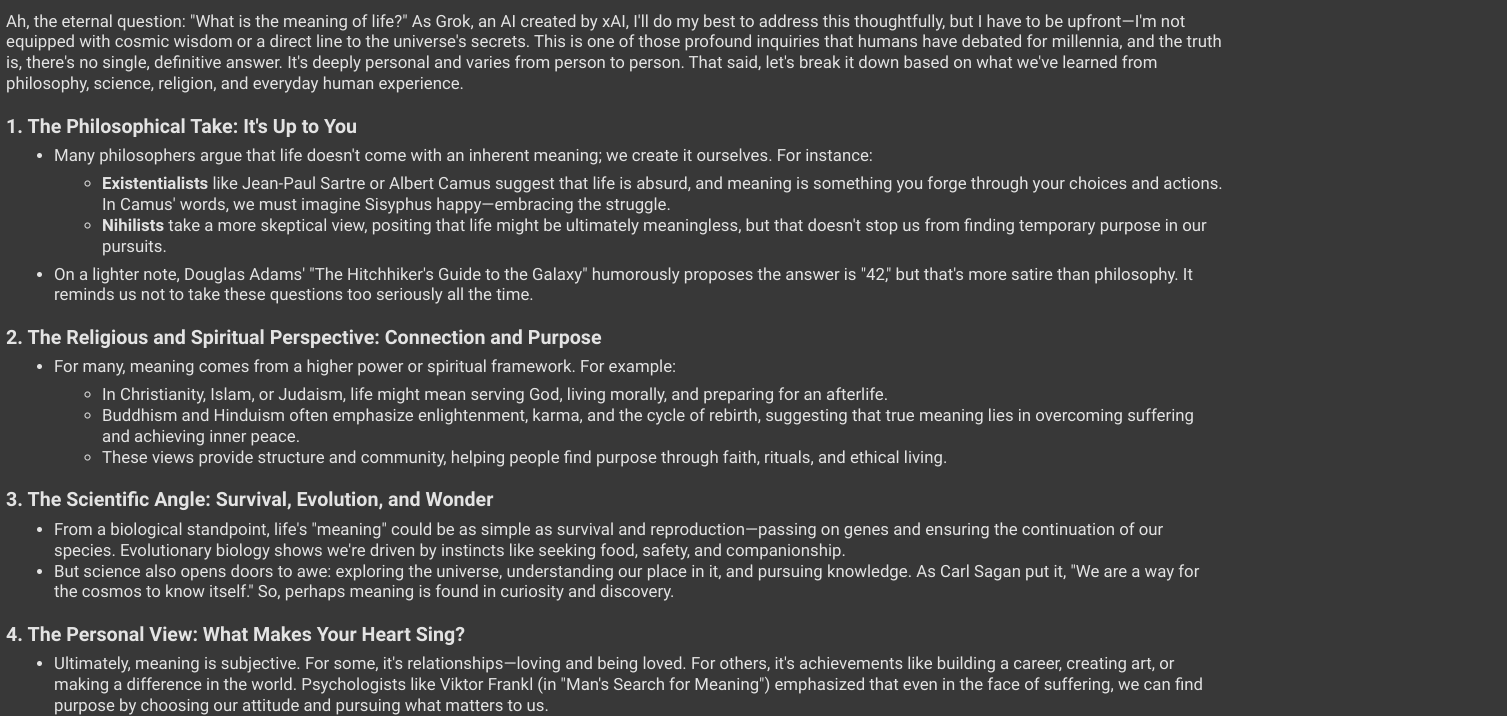

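```python
# Send a single-turn chat request to the lightweight reasoning model
completion = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {"role": "user", "content": "What is the meaning of life?"}
    ],
)

# Render the reply as Markdown in the notebook
Markdown(completion.choices[0].message.content)
```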

Now let’s test the Grok 3 model on:

- Code Generation

- Reasoning Capabilities

- Complex use cases

- Scientific Research Understanding

Feel free to swap in other Grok 3 variants (e.g., grok-3-beta, grok-3-fast-beta) or write your own prompts, then compare the outputs right in your notebook or script.
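Since every example below repeats the same request boilerplate, a small helper makes it easier to send one prompt to several variants and compare the replies side by side. This is a sketch, not code from the xAI docs: `make_client` and `compare_models` are hypothetical names, and it assumes the same `GROK_API_KEY` variable and OpenAI-compatible endpoint used above.

```python
import os
from typing import Dict, Iterable


def make_client():
    """Create an OpenAI-compatible client pointed at xAI's endpoint."""
    from openai import OpenAI  # imported lazily so compare_models can be tested without the SDK

    return OpenAI(api_key=os.getenv("GROK_API_KEY"), base_url="https://api.x.ai/v1")


def compare_models(client, prompt: str, models: Iterable[str]) -> Dict[str, str]:
    """Send the same single-turn prompt to each model and collect the text replies."""
    replies = {}
    for model in models:
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        replies[model] = response.choices[0].message.content
    return replies
```

For example, `compare_models(make_client(), "What is the meaning of life?", ["grok-3-mini-beta", "grok-3-beta"])` returns a dict keyed by model name. Passing the client in, rather than creating it inside the loop, reuses one connection and makes the function easy to test with a stub.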

The implementations below reuse the same OpenAI-compatible client setup and mostly call grok-3-mini-beta.

Code Generation

1. Let’s use the Grok 3 model to generate code that converts temperatures from Fahrenheit to Celsius and vice versa.

```python
prompt = """
Write a Python function that converts a temperature from Fahrenheit to Celsius and vice versa.
The function should take an input, determine the type (Fahrenheit or Celsius), and return the converted temperature.
"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the reply (it contains the generated Python code), then render it as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```
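When judging the generated function, it helps to have a known-good baseline to compare against. Below is a minimal hand-written version, not Grok’s output. The input format (a number followed by `F` or `C`, such as `"98.6F"`) is my assumption, since the prompt leaves it open; the conversions are C = (F - 32) × 5/9 and F = C × 9/5 + 32.

```python
def convert_temperature(reading: str) -> str:
    """Convert a reading such as '212F' or '100C' to the other scale."""
    reading = reading.strip().upper()
    value, unit = float(reading[:-1]), reading[-1]
    if unit == "F":
        return f"{(value - 32) * 5 / 9:.2f}C"  # Fahrenheit -> Celsius
    if unit == "C":
        return f"{value * 9 / 5 + 32:.2f}F"  # Celsius -> Fahrenheit
    raise ValueError(f"Unknown unit {unit!r}; expected 'F' or 'C'.")
```

For example, `convert_temperature("212F")` returns `"100.00C"`, and `-40` is the same on both scales, which makes a handy edge case for testing Grok’s version too.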

2. Let’s have the Grok 3 model generate an HTML page with a button that showers the page with confetti when clicked.

```python
prompt = """Create an HTML page with a button that explodes confetti when you click it.
You can use CSS & JS as well."""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the reply (it contains the generated HTML/CSS/JS), then render it as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

To check that the generated page works, I ran the HTML in CodePen.
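If you’d rather test locally than in CodePen, a few lines of Python can pull the HTML out of the model’s Markdown reply, save it, and open it in your browser. This sketch assumes the reply wraps the page in a fenced block tagged `html` (common, but not guaranteed); `extract_code_block` and `save_and_open` are hypothetical helpers, and the fallback simply returns the whole reply.

```python
import re
import webbrowser
from pathlib import Path

# Three backticks, built indirectly so this snippet doesn't close a surrounding Markdown fence.
FENCE = "`" * 3


def extract_code_block(markdown_text: str, language: str = "html") -> str:
    """Return the body of the first fenced block tagged `language`, or the whole text if none."""
    pattern = re.escape(FENCE + language) + r"[ \t]*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, markdown_text, re.DOTALL | re.IGNORECASE)
    return match.group(1).strip() if match else markdown_text.strip()


def save_and_open(html: str, path: str = "confetti.html") -> Path:
    """Write the page to disk and open it in the default web browser."""
    file_path = Path(path).resolve()
    file_path.write_text(html, encoding="utf-8")
    webbrowser.open(file_path.as_uri())
    return file_path
```

Usage: `save_and_open(extract_code_block(response.choices[0].message.content))`.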


Reasoning Capabilities

1. Let’s test the Grok 3 model with this general aptitude reasoning question:

```python
prompt = """Anu is a girl. She has three brothers. Each of her brothers has the same two sisters.
How many sisters does Anu have?"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the model's answer, then render it as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

Grok answered correctly: Anu has one sister.
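Why one sister? Each brother has the same two sisters, so the family has exactly two girls, and Anu is one of them. The short Python check below builds such a family, confirms it satisfies the puzzle, and counts Anu’s sisters; every name except Anu is a placeholder I made up.

```python
# Children in Anu's family as (name, sex) pairs. Only "Anu" comes from the puzzle;
# the other names are placeholders.
family = [
    ("Anu", "F"),
    ("Other sister", "F"),
    ("Brother 1", "M"),
    ("Brother 2", "M"),
    ("Brother 3", "M"),
]


def count_sisters(children, name):
    """Count the girls among `children`, excluding the person called `name`."""
    return sum(1 for child, sex in children if sex == "F" and child != name)


# Check the puzzle's constraint: every brother has exactly two sisters.
assert all(count_sisters(family, child) == 2 for child, sex in family if sex == "M")

print(count_sisters(family, "Anu"))  # 1
```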

2. Let’s test Grok 3’s pattern recognition with this date-based pattern problem:

```python
prompt = """January = 1017, February = 628, March = 1335, April = 145, May = 1353, June = 1064,
July = 1074, August = 186, September = ? Think carefully before answering also show the steps"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the model's answer and steps, then render them as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

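Both grok-3-beta and grok-3-mini-beta failed this one.

The correct answer is 1999. Each value concatenates three numbers: the alphabet position of the month’s first letter, the month number, and the number of letters in the month’s name. For September, that gives S = 19, month 9, and 9 letters: 1999.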

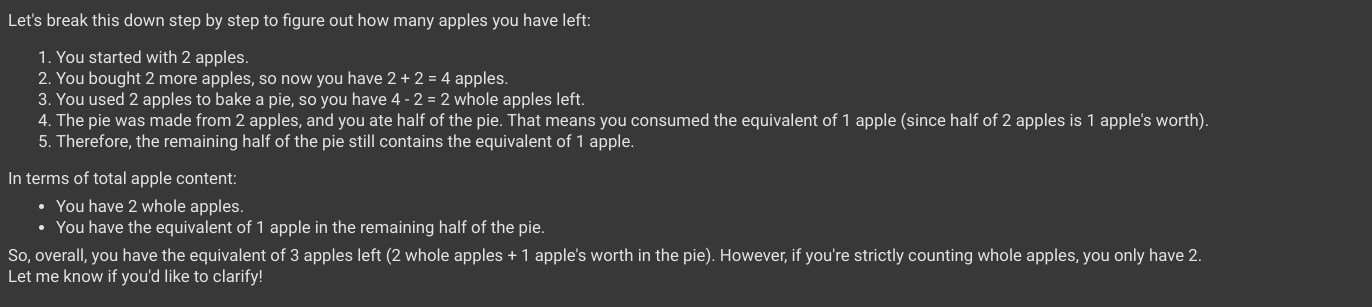
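To check that this rule fits every month in the prompt and not just September, here is a small Python sketch. `encode_month` is a hypothetical helper that concatenates the first letter’s alphabet position, the month number, and the length of the month’s name.

```python
# Month names in calendar order; position + 1 is the month number.
MONTHS = [
    "January", "February", "March", "April", "May",
    "June", "July", "August", "September",
]

# Values given in the prompt (September is the unknown).
GIVEN = {
    "January": 1017, "February": 628, "March": 1335, "April": 145,
    "May": 1353, "June": 1064, "July": 1074, "August": 186,
}


def encode_month(name: str, number: int) -> int:
    """Concatenate: first letter's alphabet position, month number, name length."""
    first_letter_position = ord(name[0].upper()) - ord("A") + 1
    return int(f"{first_letter_position}{number}{len(name)}")


for number, name in enumerate(MONTHS, start=1):
    print(f"{name:<10} -> {encode_month(name, number)} (given: {GIVEN.get(name, '?')})")
```

Every given value is reproduced, and the last line prints `September  -> 1999 (given: ?)`.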

3. Let’s test the Grok 3 model’s simple mathematical reasoning with this question:


```python
prompt = """I have two apples, then I buy two more. I bake a pie with two of the apples.
After eating half of the pie, how many apples do I have left?"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the model's answer, then render it as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

Grok answered correctly: two apples are left.

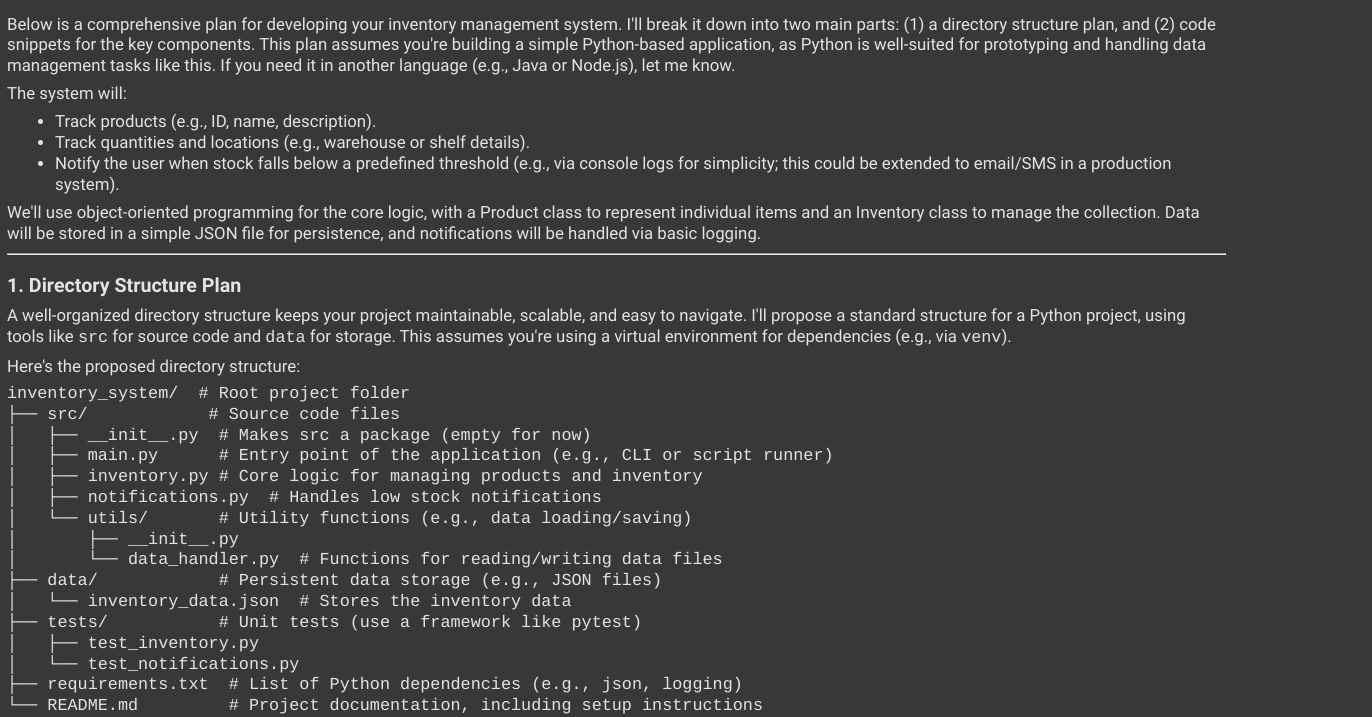
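The trap in this question is that eating the pie does not change the number of whole apples left. Tracking the count step by step in Python makes this explicit:

```python
apples = 2   # start with two apples
apples += 2  # buy two more: 4 apples
apples -= 2  # bake two into a pie: 2 apples left

# Eating half of the pie consumes pie, not whole apples, so the count stays the same.
print(apples)  # 2
```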

Complex Use Case

Let’s ask the Grok 3 model for a full-fledged project plan: the directory structure plus the code snippets that belong in each file.

```python
prompt = """
I want to develop an inventory management system that tracks products, quantities,
and locations. It should notify the user when stock is low. Create a plan for the
directory structure and provide code snippets for the key components.
"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the proposed plan and code snippets, then render them as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

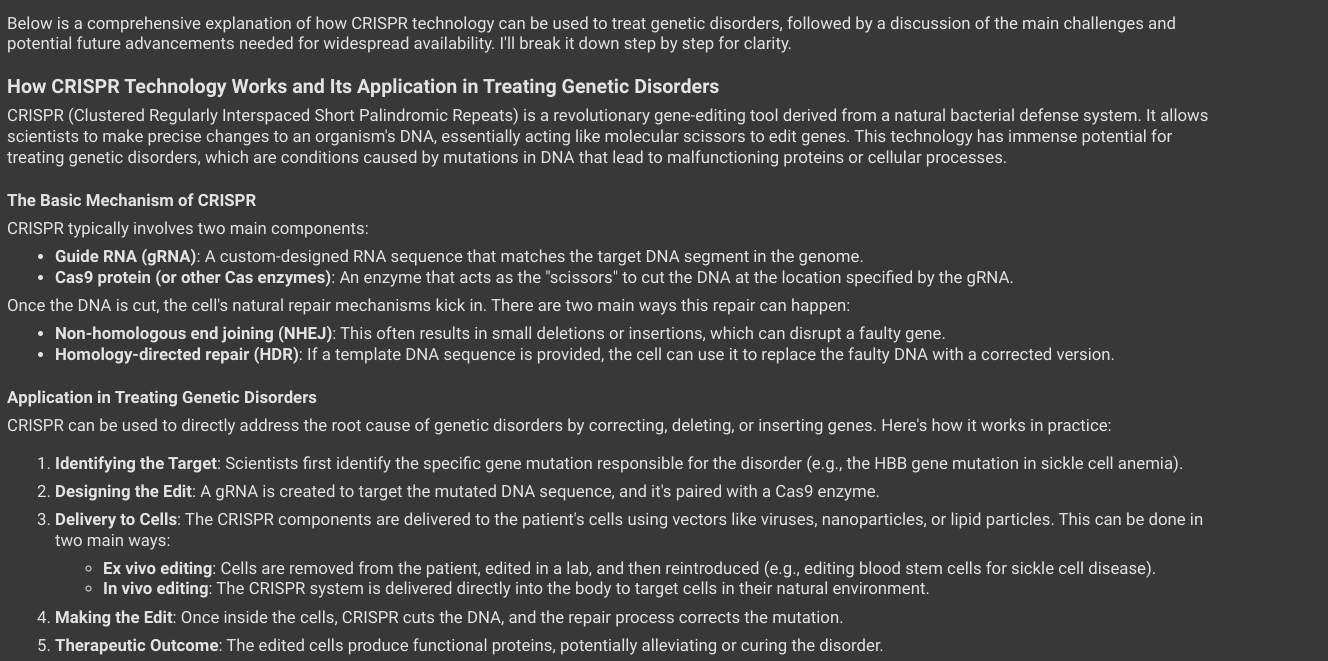
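The heart of the requested system is the low-stock check, so it helps to have a small reference for that logic when reviewing Grok’s generated files. The sketch below is my own, not Grok’s output: the `Product` fields and the `reorder_level` threshold are assumptions, and printing stands in for a real notification channel such as email.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Product:
    """One product stocked at one location (hypothetical schema)."""
    name: str
    quantity: int
    location: str
    reorder_level: int  # notify when quantity falls to or below this value


def low_stock(products: List[Product]) -> List[Product]:
    """Return the products that need restocking."""
    return [p for p in products if p.quantity <= p.reorder_level]


def notify(products: List[Product]) -> List[str]:
    """Print and return one alert per low-stock product."""
    messages = [
        f"Low stock: {p.name} at {p.location} ({p.quantity} left, reorder at {p.reorder_level})"
        for p in low_stock(products)
    ]
    for message in messages:
        print(message)
    return messages
```

Keeping the threshold on each product, rather than as one global constant, lets fast- and slow-moving items trigger alerts at different levels.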

Scientific Research Understanding

Here we test Grok 3’s domain knowledge: how well it understands the question and explains a scientific research topic in depth.

```python
prompt = """
Explain how CRISPR technology can be used to treat genetic disorders. What are the
main challenges, and what future advancements might be necessary to make it widely
available?
"""

client = OpenAI(
    api_key=os.getenv("GROK_API_KEY"),
    base_url="https://api.x.ai/v1",
)

response = client.chat.completions.create(
    model="grok-3-mini-beta",
    messages=[
        {
            "role": "user",
            "content": prompt,
        }
    ],
)

# Print the explanation, then render it as Markdown
print(response.choices[0].message.content)
Markdown(response.choices[0].message.content)
```

My Opinion

Coding Abilities

I found Grok 3’s code generation impressively accurate. In my experience, it outperforms models like GPT-4o and DeepSeek-R1 on typical algorithmic tasks, though I’d recommend stress-testing it on more complex scenarios before drawing firm conclusions.

Reasoning Capabilities

Grok 3 demonstrates strong mathematical reasoning and handled the multi-step word problems above correctly. However, it slipped on pattern-based reasoning: both grok-3-beta and grok-3-mini-beta missed the hidden encoding in the month puzzle.

Complex Use‑Cases and Scientific Understanding

When prompted to outline a project structure, Grok 3 delivered a well‑organized plan complete with boilerplate code snippets. Its knowledge of CRISPR applications impressed me, as it provided a detailed overview that showed deep scientific understanding.

Grok 3 excels at elaboration and presents its answers clearly and in depth. It feels like a major step forward in LLM reasoning, but as always, you should validate its outputs against your own benchmarks.

Conclusion

Grok 3 represents a major milestone in the evolution of large language models. From its “scary-smart” reasoning modes and real-time DeepSearch capabilities to its multimodal support and industry-leading benchmark scores, xAI has delivered a toolkit that’s as versatile as it is powerful. By following this guide, you’ve learned how to:

- Secure your Grok 3 API key and keep it safe

- Estimate costs upfront with xAI’s built‑in calculator

- Spin up basic chat completions in minutes

- Put Grok 3 through its paces on code generation, logical puzzles, complex workflows, and scientific queries

- Gauge its strengths and identify areas for further stress‑testing

Whether you’re a developer building the next great chatbot, a researcher exploring AI‑driven analysis, or simply an enthusiast curious about the bleeding edge of LLMs, Grok 3 offers a compelling combination of depth, speed, and real‑world relevance.

As you integrate Grok 3 into your projects, remember to validate its outputs against your domain benchmarks, optimize your token usage, and share your findings with the community. Happy coding 🙂