March 2025 has been a pivotal month for generative AI, with several advancements that move the field closer to Artificial General Intelligence (AGI). From model releases like Gemini 2.5 Pro and ERNIE 4.5 to agentic AI innovations like Manus AI, the AI landscape evolved rapidly over the month. These developments underscore AI’s potential to emulate, and eventually surpass, human-like understanding and reasoning. In this article, we’ll look at how close we are to achieving AGI and explore the top GenAI launches and updates of March 2025 to see how they contribute to the AGI landscape.

What is AGI?

Artificial General Intelligence or AGI refers to AI systems possessing the ability to understand, learn, and apply knowledge across a broad range of tasks, akin to human cognitive functions. It means that AI would be able to perform any intellectual task that a human can, with the ability to reason, learn, and adapt across different domains without task-specific training. Unlike current AI models, which are specialized and limited in scope, AGI would possess general problem-solving abilities, self-improvement mechanisms, and a deeper understanding of context.

The whole idea is to bring artificial intelligence closer to human-level intelligence. Achieving AGI would therefore be a pivotal milestone in AI research. It has the potential to transform both industries and people’s daily lives by automating complex decision-making and creative processes.

Are We Moving Closer to AGI?

Recent developments in AI, such as advanced generative features, better contextual understanding, and stronger reasoning capabilities, suggest that we are heading towards AGI. Here are some of the most notable advancements and trends in the AI landscape that point in that direction.

Increased Funding in the Direction of AGI

OpenAI secured a $40 billion investment led by SoftBank, with the goal of accelerating AI research toward AGI. Similarly, Turing raised $111 million in Series E funding, aiming to enhance AI infrastructure and complex real-world applications.

Meanwhile, Future AGI, an AI infrastructure startup, secured $1.6 million in pre-seed funding. Their research and development focus on scaling AI lifecycle management for enterprises, making these systems more autonomous in nature.

France-based H Company also entered the AGI race with a $220 million seed round, backed by Accel, Bpifrance, and Amazon, to develop AI agents for automating complex tasks. These investments highlight the increasing financial backing for AGI development, emphasizing the amount of resources being spent to accelerate this innovation.

OpenAI’s Agentic Features and Tools

Lately, OpenAI has been introducing several agentic features aimed at enhancing AI autonomy and utility. The Operator agent, for instance, can browse the web, interact with web pages, and perform tasks on its own based on user instructions.

Complementing this, the Agents SDK provides developers with a streamlined framework to build AI applications equipped with tools, handoffs, and guardrails. These tools empower the development of AI systems that can operate with greater independence and adaptability, which are key characteristics of AGI.
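To make the three building blocks mentioned above more concrete, here is a minimal, self-contained sketch of tools, handoffs, and guardrails. It does not use the Agents SDK or reproduce its API: the `Agent` class, the `guardrail` and `run` functions, and the keyword-based routing are all hypothetical stand-ins for decisions that a language model would make in a real agent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Agent:
    """Hypothetical agent: a name, callable tools, and agents it may hand off to."""
    name: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    handoffs: Dict[str, "Agent"] = field(default_factory=dict)


def guardrail(user_input: str) -> bool:
    """Toy input guardrail: reject empty or overly long requests."""
    return 0 < len(user_input.strip()) <= 200


def run(agent: Agent, user_input: str) -> str:
    """Check the guardrail, hand off if a topic matches, otherwise try a tool."""
    if not guardrail(user_input):
        return "blocked by guardrail"
    text = user_input.lower()
    # Handoff: delegate to a specialist whose topic keyword appears in the request.
    for topic, specialist in agent.handoffs.items():
        if topic in text:
            return run(specialist, user_input)
    # Tool use: call the first tool whose name appears in the request.
    for tool_name, tool in agent.tools.items():
        if tool_name in text:
            return f"{agent.name}: {tool(user_input)}"
    return f"{agent.name}: no tool matched"


def word_count(text: str) -> str:
    """Example tool (an assumption for illustration): count words in the request."""
    return f"{len(text.split())} words"


billing = Agent(name="billing", tools={"count": word_count})
triage = Agent(name="triage", handoffs={"invoice": billing})

print(run(triage, "Please count the words in this invoice note"))  # billing: 8 words
print(run(triage, "   "))  # blocked by guardrail
```

The triage agent never answers the invoice request itself. It passes the request to the billing agent, which then picks a tool, while the guardrail stops malformed input before any agent runs.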

The Emergence of Vibe Coding

The rising popularity of vibe coding has transformed the development landscape by allowing both developers and non-developers to take part in AI innovation. It enables programmers, and even people without a tech background, to build and deploy AI agents more intuitively and efficiently using simple prompts. By relying on AI-assisted development environments, this approach lowers the barrier to entry and accelerates the spread of AI applications. It fosters innovation and contributes to the broader advancement toward AGI.

Advancements in AI Reasoning and Thinking Models

Recent AI breakthroughs have significantly enhanced the reasoning capabilities of models, taking them a step closer to AGI. DeepSeek’s R1, an open-source model, delivers reasoning performance comparable to leading proprietary models like OpenAI’s o1, at a reportedly much lower training cost. This efficiency paves the way for more scalable AGI development.

Meanwhile, xAI’s Grok-3 has pushed the boundaries of computational reasoning, reportedly outperforming GPT-4o on advanced benchmarks like AIME and GPQA. These advancements highlight AI’s growing ability to tackle complex problem-solving tasks, signaling a step forward on the road to AGI.

Advancements in Image Generation Models

Recent breakthroughs in AI-driven image generation have significantly enhanced the realism and diversity of synthesized visuals. For instance, GPT-4o’s advanced image generation capabilities have taken the internet by storm, enabling users to create highly detailed and specifically stylized images. Newer AI models can now generate images with text accurately and make edits to them directly via chatbot interfaces. These innovations showcase AI’s ability to understand and replicate complex visual concepts, and be as creative as humans, signaling a step toward AGI.

Also Read: GPT 4o, Gemini 2.5 Pro, or Grok 3: Which is the Best Image Generation Model?

Progress in Video Generation Models

The field of AI-driven video generation has seen remarkable progress, as shown by Runway’s Gen-4 model, Google’s Veo 2, and OpenAI’s Sora. These models address the challenge of keeping characters and scenes consistent across multiple shots, which has been a common hurdle in AI-generated videos. Users can now create coherent narratives with consistent elements using a single reference image and descriptive prompts. The intelligence and creativity shown by these models are another sign of progress toward AGI.

Also Read: Sora vs Veo 2: Which One Creates More Realistic Videos?

Top GenAI Launches of March 2025 that Lean Towards AGI

Here are some of the major GenAI launches and updates of the last month that signal our shift towards AGI.

1. Gemini 2.5 Pro Tops Chatbot Arena

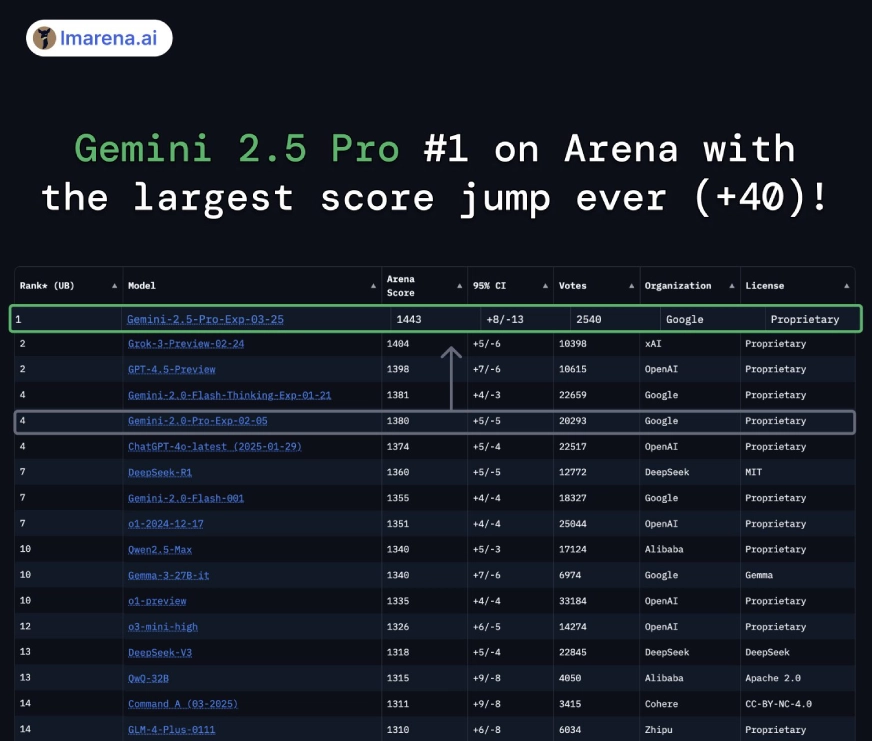

Google’s latest model, Gemini 2.5 Pro, has taken the AI community by storm, securing the #1 position on Chatbot Arena with an impressive score of 1440! This puts it almost 40 points ahead of other advanced models like ChatGPT-4o, Grok 3, and GPT-4.5, reflecting substantial enhancements in conversational AI capabilities.
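To put a gap of roughly 40 points in perspective, here is a rough sketch that converts a rating difference into an expected head-to-head win rate. It assumes the standard Elo logistic formula with a scale of 400, which is an assumption about how the leaderboard scores are scaled and not a description of Chatbot Arena’s exact methodology. The function name `expected_win_rate` is ours.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected win probability of the higher-rated model under the Elo formula."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / scale))


# A 40-point lead, as described for Gemini 2.5 Pro above.
print(f"{expected_win_rate(40):.3f}")  # 0.557
```

Under this assumption, a 40-point lead means the top model would be preferred in about 56% of direct comparisons. That is a clear edge, but it also shows that the leading models remain fairly close to one another.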

This multimodal model excels in understanding text, audio, images, video, and code, showcasing enhanced reasoning and analytical capabilities. The model was initially available only to Gemini Advanced subscribers, but Google recently surprised users by making Gemini 2.5 Pro accessible to all. This development not only underscores Google’s commitment to democratizing AI technology but also shows how quickly frontier capabilities are reaching everyday users.

2. GPT-4o Introduces Advanced Image Generation

The biggest GenAI highlight of March 2025 has got to be the advanced image generation capabilities of OpenAI’s GPT-4o! Its latest update allows ChatGPT users to create up to 3 highly detailed and stylistically diverse images per day, for free. GPT-4o quickly gained popularity for producing Studio Ghibli-style art, captivating millions and leading to a record surge in ChatGPT usage. This ability of an AI chatbot to mimic human creativity at this level surely represents a significant stride toward AGI.

3. Manus AI Revolutionizes Content Creation

The debut of Manus AI has transformed the agentic landscape, offering innovative solutions that blend natural language understanding with contextual awareness. It can perform complex tasks from simple prompts, without human oversight, leveraging real-time data retrieval, multi-step reasoning, and API integrations.

This new autonomous AI agent, the first of its kind from China, came in as a strong contender against OpenAI’s Operator and Scheduled Tasks, and Anthropic’s Computer Use feature for Claude. While some of its capabilities remain under wraps, the AI community is abuzz with anticipation regarding its potential applications and impact. Its ability to perform a broad spectrum of tasks with near human-like proficiency is another step toward AGI.

4. MCP Expands with External App Integration

The Model Context Protocol (MCP) has broadened the horizons of AI chatbots by facilitating integration with external applications. This open protocol, initially developed by Anthropic in 2024, gives AI assistants such as Claude and ChatGPT a standard way to connect to third-party tools and data sources. This gives users access to a more interconnected ecosystem, streamlining workflows and improving productivity.

Meanwhile, the Gemini chatbot has also integrated Google apps into its interface, giving users direct access to Google Workspace tools, YouTube, and more. Such app integrations and MCP support signal more adaptive and context-aware AI behaviors, which are essential characteristics of AGI.

Learn More: How to Use MCP: Model Context Protocol

5. Qwen Introduces Qwen Chat and a 32B Model

Alibaba added Qwen Chat and a 32B parameter counterpart of Qwen to its fleet of open-source models. Qwen Chat brings image generation, video generation, live coding, and other AI features to a single chatbot, revolutionizing user experience. And the best part is that all of these features are made available for free!

Qwen-32B has reportedly outperformed ChatGPT and Grok on some tasks, showing that open models can match their proprietary counterparts in capability. This development democratizes access to advanced AI technologies and fosters collaborative innovation, accelerating the collective progress toward AGI.

Other GenAI Launches and Updates from March 2025

Here are a few more minor highlights in the field of generative AI that happened in March.

The Rising Popularity of Vibe Coding

The emergence of vibe coding, an intuitive and context-aware programming approach, is making coding more accessible to non-programmers. This trend reflects the broader movement toward AI systems that can understand and adapt to human intentions with minimal explicit instruction, a key aspect of AGI.

Claude’s Web Search Integration

Anthropic’s Claude AI has incorporated web search capabilities, enabling real-time information retrieval. This enhancement allows AI to access and process current data, aligning with the goal of creating systems that possess up-to-date knowledge and can engage in informed decision-making, essential traits of AGI.

NVIDIA GTC 2025 Announcements

Several groundbreaking AI technologies were unveiled at the NVIDIA GPU Technology Conference (GTC) 2025, held in March. This included the RTX Pro Blackwell series GPUs, DGX Spark personal AI supercomputers, and the GR00T N1 foundation model for robotics. These innovations provide the computational power and frameworks necessary for developing more sophisticated and capable AI systems, thereby accelerating the path toward AGI.

Learn More: 10 NVIDIA GTC 2025 Announcements that You Must Know

Baidu’s ERNIE 4.5 & X1

Baidu has unveiled ERNIE 4.5 and ERNIE X1, two AI models that aim to set new standards in the industry. ERNIE 4.5, a multimodal foundation model, processes text, images, audio, and video, with enhanced logical reasoning and memory capabilities. Notably, it is reported to outperform GPT-4.5 on multiple benchmarks while being more cost-effective. ERNIE X1, designed for complex reasoning and tool use, has also shown strong performance, reportedly even defeating GPT-4.5 in chess matches, which highlights its strategic thinking abilities.

Conclusion

March 2025 has brought us closer to AGI with breakthroughs in multimodal AI, autonomous agents, and open models. Gemini 2.5 Pro’s dominance, GPT-4o’s image generation, and Manus AI’s autonomy highlight AI’s growing capabilities. Meanwhile, the expansion of MCP, Claude’s web search, and NVIDIA’s AI hardware push are creating a more interconnected AI ecosystem.

With models like Qwen-32B rivaling proprietary systems and ERNIE X1 showcasing strategic reasoning, AI is advancing at an unprecedented pace. As we progress through 2025, the convergence of these technologies brings us ever closer to realizing the vision of AGI, which is no longer a distant dream.

Frequently Asked Questions

A. Artificial General Intelligence (AGI) refers to AI that can understand, learn, and perform any intellectual task a human can, rather than being limited to specific tasks.

A. Gemini 2.5 Pro is Google’s latest AI model, known for its advanced reasoning and multimodal capabilities. It recently became the top-ranked AI chatbot, showing progress toward AGI.

A. GPT-4o now allows users to generate highly detailed images directly within ChatGPT, making AI more creative and versatile in producing visual content.

A. Vibe coding simplifies AI development by enabling intuitive, natural-language-based coding, allowing both developers and non-developers to build AI applications. This approach lowers the entry barrier and speeds up AI innovation.

A. MCP (Model Context Protocol) is an open protocol that enables AI chatbots to interact with external apps and data sources, making them more functional and connected.

A. Through MCP and integrations with platforms like Google Workspace and third-party APIs, AI chatbots can now perform tasks such as scheduling events, retrieving data, and more.

A. Breakthroughs in generative AI, reasoning models, autonomous agents, and multimodal capabilities are accelerating AGI development. Notable innovations include OpenAI’s agentic features, DeepSeek’s R1 model, and xAI’s Grok-3, which demonstrate enhanced reasoning and problem-solving skills.

A. At NVIDIA GTC 2025, the company unveiled the RTX Pro Blackwell GPUs, DGX Spark AI supercomputers, and the GR00T N1 foundation model for robotics. All these updates are designed to accelerate AI advancements, getting us closer to AGI, faster.