In recent years, we’ve witnessed an exciting shift in how AI systems interact with users: they no longer just answer questions, but reason, plan, and take actions. This transformation is driven by the rise of agentic frameworks like AutoGen, LangGraph, and CrewAI. These frameworks enable large language models (LLMs) to act more like autonomous agents, capable of making decisions, calling functions, and collaborating across tasks. Among these, one particularly powerful yet developer-friendly option comes from Microsoft: Semantic Kernel. In this tutorial, we’ll explore what makes Semantic Kernel stand out, how it compares to other approaches, and how you can start using it to build your own AI agents.

Learning Objectives

- Understand the core architecture and purpose of Semantic Kernel.

- Learn how to integrate plugins and AI services into the Kernel.

- Explore single-agent and multi-agent system setups using Semantic Kernel.

- Discover how function calling and orchestration work within the framework.

- Gain practical insights into building intelligent agents with Semantic Kernel and Azure OpenAI.

This article was published as a part of the Data Science Blogathon.


What is Semantic Kernel?

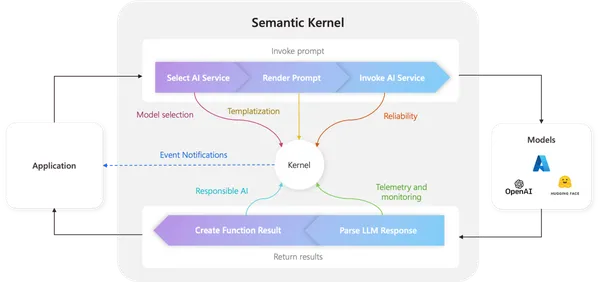

Before we start our journey, let’s first understand what the name “Semantic Kernel” means. Let’s break it down:

- Semantic: Refers to the ability to understand and process meaning from natural language.

- Kernel: Refers to the core engine that powers the framework, managing tasks, functions, and interactions between AI models and external tools.

Why is it Called Semantic Kernel?

Microsoft’s Semantic Kernel is designed to bridge the gap between LLMs (like GPT) and traditional programming by allowing developers to define functions, plugins, and agents that can work together in a structured way.

It provides a framework where:

- Natural language prompts and AI functions (semantic functions) operate in conjunction with traditional code functions.

- AI can reason, plan, and execute tasks using these combined functions.

- It enables multi-agent collaboration where different agents can perform specific roles.

Agentic Framework vs Traditional API calling

When working with an agentic framework, a common question arises: Can’t we achieve the same results using the OpenAI API alone? 🤔 I had the same doubt when I first started exploring this.

Let’s take an example: Suppose you’re building a Q&A system for company policies—HR policy and IT policy. With a traditional API call, you might get good results, but sometimes, the responses may lack accuracy or consistency.

An agentic framework, on the other hand, can be more robust because it lets you create specialized agents: one focused on HR policies and another on IT policies. Each agent’s instructions are scoped to its own domain, which tends to make its answers more reliable.

With this example, I hope you now have a clearer understanding of the key difference between an agentic framework and traditional API calling!

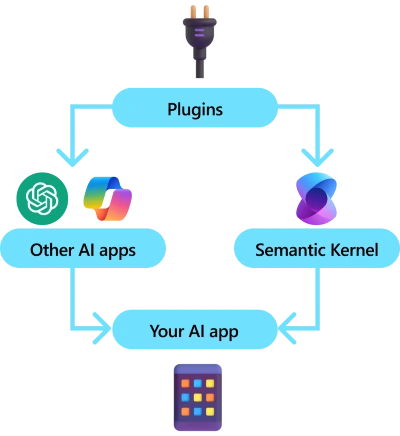

What are Plugins in Semantic Kernel?

Plugins are a key part of Semantic Kernel. If you’ve used plugins in ChatGPT or Copilot extensions in Microsoft 365, you already have an idea of how they work. Simply put, plugins let you package your existing APIs into reusable tools that an AI can use, extending what the AI can do beyond its built-in capabilities.

Behind the scenes, Semantic Kernel uses function calling, a built-in feature of most modern LLMs, to enable planning and API execution. With function calling, an LLM can request a specific function, and Semantic Kernel routes that request to your code. The results are then returned to the LLM, which uses them to generate its final response.
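To make that round trip concrete, here is a framework-free sketch of the loop that function calling relies on. It is an illustration under stated assumptions, not Semantic Kernel’s internal implementation: `fake_model` is a hypothetical stand-in for an LLM, and the message format and `TOOLS` registry are simplified for clarity.

```python
from typing import Callable


def get_weather(location: str) -> str:
    """A native function the model may request (simulated data)."""
    weather_data = {"New York": "Sunny, 25°C", "London": "Cloudy, 18°C"}
    return weather_data.get(location, "Weather data not available.")


# Registry of functions the model is allowed to request, keyed by name
TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM with function calling.

    On the first turn it requests get_weather (hard-coded to London for
    simplicity); once a tool result is present, it writes the final answer.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "get_weather", "arguments": {"location": "London"}}}
    return {"content": f"The weather in London is {tool_results[-1]['content']}."}


def run(user_message: str) -> str:
    messages = [{"role": "user", "content": user_message}]
    while True:
        reply = fake_model(messages)
        if "tool_call" not in reply:
            return reply["content"]  # Final answer: no more functions requested
        # The framework, not the model, executes the requested function...
        call = reply["tool_call"]
        result = TOOLS[call["name"]](**call["arguments"])
        # ...and sends the result back to the model as a new message
        messages.append({"role": "tool", "name": call["name"], "content": result})


print(run("What's the weather in London?"))
```

The key design point is that the model only ever *names* a function and its arguments; your code decides whether and how to execute it.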

Code Implementation

Before running the code, install Semantic Kernel and the other required packages:

```bash
pip install semantic-kernel openai pydantic
```

Here’s a simple Python example using Semantic Kernel to demonstrate how a plugin works. It defines a plugin that returns simulated weather updates, registers it with the kernel, and calls it.

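```python
import asyncio

from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.functions import KernelArguments, kernel_function


# Step 1: Define a simple plugin for weather updates.
# A plugin is a class whose methods are marked with @kernel_function.
class WeatherPlugin:
    @kernel_function(name="get_weather", description="Returns the current weather for a city.")
    def get_weather(self, location: str) -> str:
        # Simulating a weather API response
        weather_data = {
            "New York": "Sunny, 25°C",
            "London": "Cloudy, 18°C",
            "Tokyo": "Rainy, 22°C",
        }
        return weather_data.get(location, "Weather data not available.")


async def main():
    # Step 2: Initialize Semantic Kernel with Azure OpenAI
    kernel = Kernel()
    kernel.add_service(
        AzureChatCompletion(
            service_id="azure-openai-chat",
            api_key="your-azure-api-key",
            endpoint="your-azure-endpoint",
            deployment_name="your-deployment-name",  # Replace with your Azure OpenAI deployment
        )
    )

    # Step 3: Register the plugin in Semantic Kernel
    kernel.add_plugin(WeatherPlugin(), plugin_name="WeatherPlugin")

    # Step 4: Call the plugin function through Semantic Kernel
    location = "New York"
    response = await kernel.invoke(
        plugin_name="WeatherPlugin",
        function_name="get_weather",
        arguments=KernelArguments(location=location),
    )
    print(f"Weather in {location}: {response}")


asyncio.run(main())
```

How This Demonstrates a Plugin in Semantic Kernel

- Defines a Plugin – The WeatherPlugin class exposes get_weather, a kernel function that simulates fetching weather data.

- Integrates with Semantic Kernel – The plugin is registered with the kernel using kernel.add_plugin().

- Makes It Available to the AI – Once registered, the function can be advertised to a chat model through function calling, so the model can request it when a question needs weather data. Here it is called directly with kernel.invoke() to keep the example simple.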

This shows how plugins extend an AI’s abilities, enabling it to perform tasks beyond standard text generation.

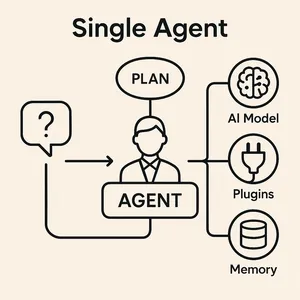

What is a Single-Agent System?

In this section, we’ll understand what a single agent is and also look at its code.

A single agent is basically an entity that handles user queries on its own. It takes care of everything without needing multiple agents or an orchestrator (we’ll cover that in the multi-agent section). The single agent is responsible for processing requests, fetching relevant information, and generating responses—all in one place.

```python
import asyncio

from semantic_kernel import Kernel
from semantic_kernel.agents import ChatCompletionAgent
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.contents import ChatHistory

# Initialize the kernel and register an Azure OpenAI chat service as "agent1"
kernel = Kernel()
kernel.add_service(
    AzureChatCompletion(
        service_id="agent1",
        api_key="YOUR_API_KEY",
        endpoint="YOUR_ENDPOINT",
        deployment_name="MODEL_NAME",
    )
)

# Define the agent
AGENT_NAME = "Agent1"
AGENT_INSTRUCTIONS = (
    "You are a highly capable AI agent operating solo, much like J.A.R.V.I.S. from Iron Man. "
    "Your task is to repeat the user's message while introducing yourself as J.A.R.V.I.S. in a confident and professional manner. "
    "Always maintain a composed and intelligent tone in your responses."
)

agent = ChatCompletionAgent(
    service_id="agent1", kernel=kernel, name=AGENT_NAME, instructions=AGENT_INSTRUCTIONS
)


async def main():
    chat_history = ChatHistory()
    chat_history.add_user_message("How are you doing?")

    response_text = ""
    async for content in agent.invoke(chat_history):
        chat_history.add_message(content)
        response_text = content.content  # Store the last response

    return {"user_input": "How are you doing?", "agent_response": response_text}


print(asyncio.run(main()))
```

Output:

```
{'user_input': 'How are you doing?',
 'agent_response': 'Greetings, I am J.A.R.V.I.S. I am here to replicate your message: "How are you doing?" Please feel free to ask anything else you might need.'}
```

Things to know

- kernel = Kernel() -> Creates the Semantic Kernel object, which holds the registered AI services and plugins.

- kernel.add_service() -> Registers an AI service (such as OpenAI, Azure OpenAI, or a local model) with the kernel. I am using Azure OpenAI with the GPT-4o model; you need to provide your own endpoint information.

- agent = ChatCompletionAgent(service_id="agent1", kernel=kernel, name=AGENT_NAME, instructions=AGENT_INSTRUCTIONS) -> Creates an agent backed by chat completion, which works well for Q&A. The service_id selects which registered service the agent uses, and the instructions define its behavior.

- chat_history = ChatHistory() -> Creates a new chat history object to store the conversation. It keeps track of past messages between the user and the agent.

- chat_history.add_user_message("How are you doing?") -> Adds the user message "How are you doing?" to the chat history. The agent uses this history to generate a relevant response.

- agent.invoke(chat_history) -> Passes the history to the agent. The agent processes the conversation and generates the response, which the loop appends back to the history.
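Conceptually, a chat history is an ordered list of messages, each tagged with a role, and the whole list is sent to the model on every call. The minimal sketch below illustrates that idea in plain Python. `SimpleChatHistory` is a hypothetical class for illustration only, not Semantic Kernel’s `ChatHistory` implementation, although its method names follow the same naming style; the agent’s instructions play a role similar to the system message here.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


@dataclass
class SimpleChatHistory:
    """Hypothetical, simplified stand-in for a chat history object."""

    messages: list[Message] = field(default_factory=list)

    def add_system_message(self, content: str) -> None:
        self.messages.append(Message("system", content))

    def add_user_message(self, content: str) -> None:
        self.messages.append(Message("user", content))

    def add_assistant_message(self, content: str) -> None:
        self.messages.append(Message("assistant", content))

    def to_payload(self) -> list[dict]:
        # The shape most chat-completion APIs expect: a list of {role, content} dicts
        return [{"role": m.role, "content": m.content} for m in self.messages]


history = SimpleChatHistory()
history.add_system_message("You are J.A.R.V.I.S.")
history.add_user_message("How are you doing?")
history.add_assistant_message("Greetings, I am J.A.R.V.I.S.")
history.add_user_message("What can you do?")
print(history.to_payload())
```

Because the full list is resent on each turn, appending the agent’s reply (as the single-agent loop does with chat_history.add_message(content)) is what lets a follow-up like "What can you do?" be answered in context.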

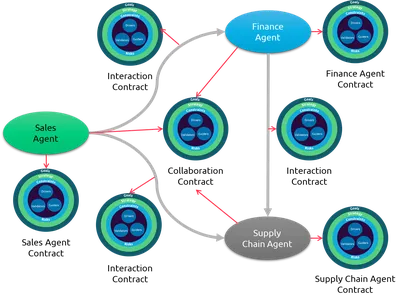

What is a Multi-Agent system?

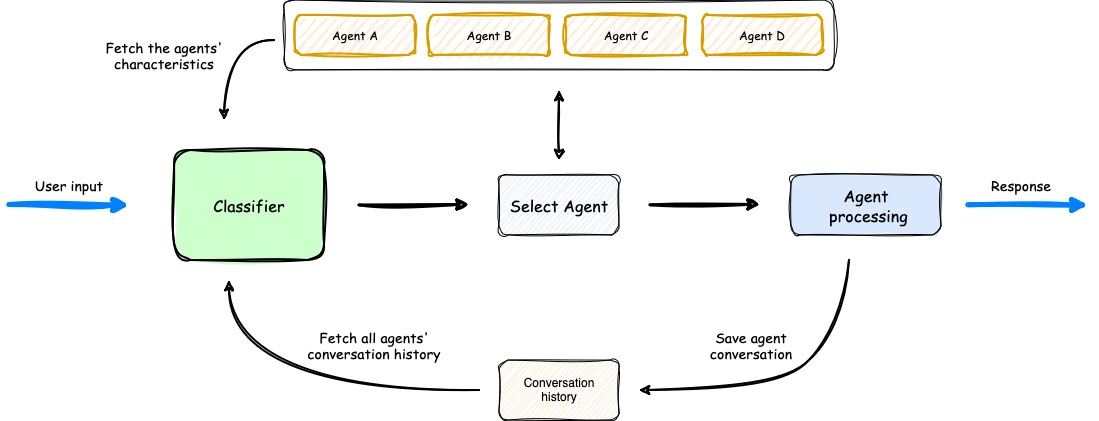

In a multi-agent system, there are two or more agents. Here, we typically use an orchestrator agent whose job is to decide which of the available agents should handle a given request. Whether you need an orchestrator depends on your use case. First, let me explain where an orchestrator would be used.

Suppose one agent answers user queries about banking data while another handles medical data. You have created two agents, but something has to decide which one to invoke for each query, and that is where the orchestrator comes in. We give the orchestrator a set of instructions that define its duties and decision-making process.

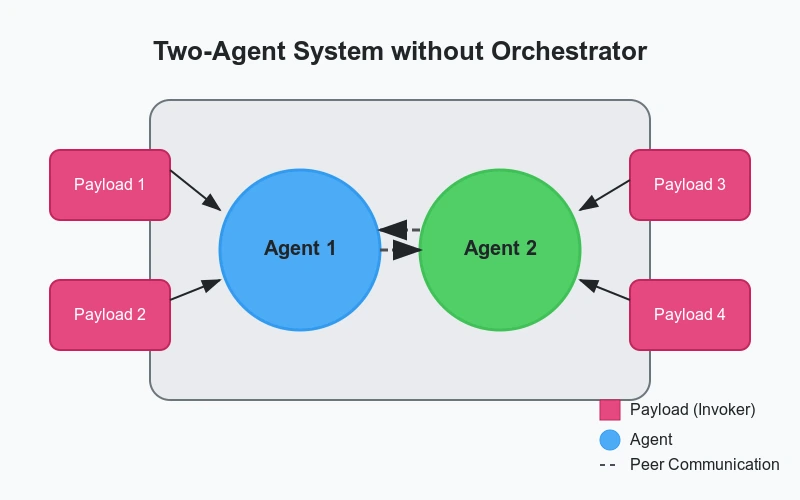

Now, let’s look at a case where an orchestrator isn’t needed. Suppose you’ve created an API that performs different operations based on the payload data. For example, if the payload contains “Health”, you can directly invoke the Health Agent, and similarly, for “Bank”, you invoke the Bank Agent.
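The orchestrator-free case reduces to ordinary dispatch on a field of the request. In the minimal sketch below, the `domain` payload key and the two handler functions are hypothetical placeholders; in a real service, each handler would invoke its Semantic Kernel agent instead of returning a string.

```python
from typing import Callable


def health_agent(query: str) -> str:
    # Placeholder: a real handler would invoke the healthcare agent
    return f"[Health Agent] {query}"


def bank_agent(query: str) -> str:
    # Placeholder: a real handler would invoke the banking agent
    return f"[Bank Agent] {query}"


# The caller states the domain explicitly, so no LLM call is needed to route
ROUTES: dict[str, Callable[[str], str]] = {
    "Health": health_agent,
    "Bank": bank_agent,
}


def handle_request(payload: dict) -> dict:
    handler = ROUTES.get(payload.get("domain", ""))
    if handler is None:
        return {"error": f"Unknown domain: {payload.get('domain')!r}"}
    return {"agent_response": handler(payload["query"])}


print(handle_request({"domain": "Bank", "query": "How do I reset my PIN?"}))
```

This saves a model call per request and makes routing deterministic, at the cost of requiring the caller to know the domain up front.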

```python
import asyncio

from semantic_kernel import Kernel
from semantic_kernel.agents import ChatCompletionAgent
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.contents import ChatHistory

# Initialize Kernel
kernel = Kernel()

# Add one chat service per agent; each agent selects its service by service_id
kernel.add_service(AzureChatCompletion(service_id="banking_agent", api_key="YOUR_API_KEY", endpoint="YOUR_ENDPOINT", deployment_name="MODEL_NAME"))
kernel.add_service(AzureChatCompletion(service_id="healthcare_agent", api_key="YOUR_API_KEY", endpoint="YOUR_ENDPOINT", deployment_name="MODEL_NAME"))
kernel.add_service(AzureChatCompletion(service_id="classifier_agent", api_key="YOUR_API_KEY", endpoint="YOUR_ENDPOINT", deployment_name="MODEL_NAME"))

# Define the orchestrator agent (its service_id must match a registered service)
CLASSIFIER_AGENT = ChatCompletionAgent(
    service_id="classifier_agent", kernel=kernel, name="OrchestratorAgent",
    instructions="You are an AI responsible for classifying user queries. Identify whether the query belongs to banking or healthcare. Respond with either 'banking' or 'healthcare'.",
)

# Define domain-specific agents
BANKING_AGENT = ChatCompletionAgent(
    service_id="banking_agent", kernel=kernel, name="BankingAgent",
    instructions="You are an AI specializing in banking queries. Answer user queries related to finance and banking.",
)

HEALTHCARE_AGENT = ChatCompletionAgent(
    service_id="healthcare_agent", kernel=kernel, name="HealthcareAgent",
    instructions="You are an AI specializing in healthcare queries. Answer user queries related to medical and health topics.",
)


# Ask the orchestrator to classify the query, then return the matching agent
async def identify_agent(user_input: str):
    chat_history = ChatHistory()
    chat_history.add_user_message(user_input)
    classification = ""
    async for content in CLASSIFIER_AGENT.invoke(chat_history):
        classification = content.content.lower()
    if "banking" in classification:
        return BANKING_AGENT
    elif "healthcare" in classification:
        return HEALTHCARE_AGENT
    return None


# Route the query to the selected agent and collect its response
async def handle_query(user_input: str):
    selected_agent = await identify_agent(user_input)
    if not selected_agent:
        return {"error": "No suitable agent found for the query."}

    chat_history = ChatHistory()
    chat_history.add_user_message(user_input)
    response_text = ""
    async for content in selected_agent.invoke(chat_history):
        chat_history.add_message(content)
        response_text = content.content  # Keep the last response
    return {"user_input": user_input, "agent_response": response_text}


# Example usage
user_query = "What are the best practices for securing a bank account?"
response = asyncio.run(handle_query(user_query))
print(response)
```

Here is the flow: the user’s query first goes to the orchestrator, which classifies it and identifies the appropriate agent. That agent is then invoked, processes the query, and generates the response.

Output when the query is related to the bank:

```
{
  "user_input": "What are the best practices for securing a bank account?",
  "agent_response": "To secure your bank account, use strong passwords, enable two-factor authentication, regularly monitor transactions, and avoid sharing sensitive information online."
}
```

Output when the query is related to health:

```
{
  "user_input": "What are the best ways to maintain a healthy lifestyle?",
  "agent_response": "To maintain a healthy lifestyle, eat a balanced diet, exercise regularly, get enough sleep, stay hydrated, and manage stress effectively."
}
```
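One design choice in identify_agent is worth a second look: it routes on a substring match, so a classifier reply such as "Not banking; this is healthcare" would be sent to the banking agent, because "banking" is checked first. A stricter parse, sketched below under the assumption that the classifier is instructed to reply with a single label, normalizes the reply and accepts only an exact label. The `parse_label` helper is hypothetical, not part of Semantic Kernel.

```python
import string
from typing import Optional

ALLOWED_LABELS = {"banking", "healthcare"}


def parse_label(reply: str) -> Optional[str]:
    """Return the label only if the whole reply is exactly one allowed label."""
    # Strip surrounding whitespace, quotes, and punctuation, then lowercase
    normalized = reply.strip(string.punctuation + string.whitespace).lower()
    return normalized if normalized in ALLOWED_LABELS else None


print(parse_label("Banking."))
print(parse_label("'healthcare'"))
print(parse_label("Not banking; this is healthcare"))
```

Returning None for an ambiguous reply falls through to the existing "No suitable agent found" error path instead of silently picking the wrong agent.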

Conclusion

In this article, we explored how Semantic Kernel enhances AI capabilities through an agentic framework. We discussed the role of plugins, compared the agentic framework with traditional API calling, outlined the differences between single-agent and multi-agent systems, and examined how they help structure complex decision-making. As AI continues to evolve, Semantic Kernel’s agentic approach can help you build more efficient and context-aware applications.

Key Takeaways

- Semantic Kernel – An agentic framework that enhances AI models by enabling them to plan, reason, and make decisions more effectively.

- Agentic Framework vs Traditional API – The agentic framework is more suitable when working across multiple domains, such as healthcare and banking, whereas a traditional API is preferable when handling a single domain without the need for multi-agent interactions.

- Plugins – They enable LLMs to execute specific tasks by integrating external code, such as calling an API, retrieving data from a database like Cosmos DB, or performing other predefined actions.

- Single-Agent vs Multi-Agent – A single-agent system handles tasks on its own, without an orchestrator. In a multi-agent system, either an orchestrator routes work to multiple agents, or the agents collaborate on tasks directly without one.

You can find the code on my GitHub.

If you have any questions, feel free to ask in the comments. Connect with me on LinkedIn.

Frequently Asked Questions

A1. Semantic Kernel is a Microsoft framework that combines natural language understanding with traditional programming to build intelligent AI agents.

A2. “Semantic” refers to language understanding, and “Kernel” represents the core engine that manages AI functions and tasks.

A3. Unlike API calls, Semantic Kernel supports agent-based reasoning, task planning, and multi-function execution using LLMs.

A4. Plugins are reusable tools that wrap existing APIs, allowing the AI to extend its abilities and interact with external systems.

A5. It’s a setup where one AI agent handles all tasks, without requiring orchestration or multiple agents.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.